Research Articles

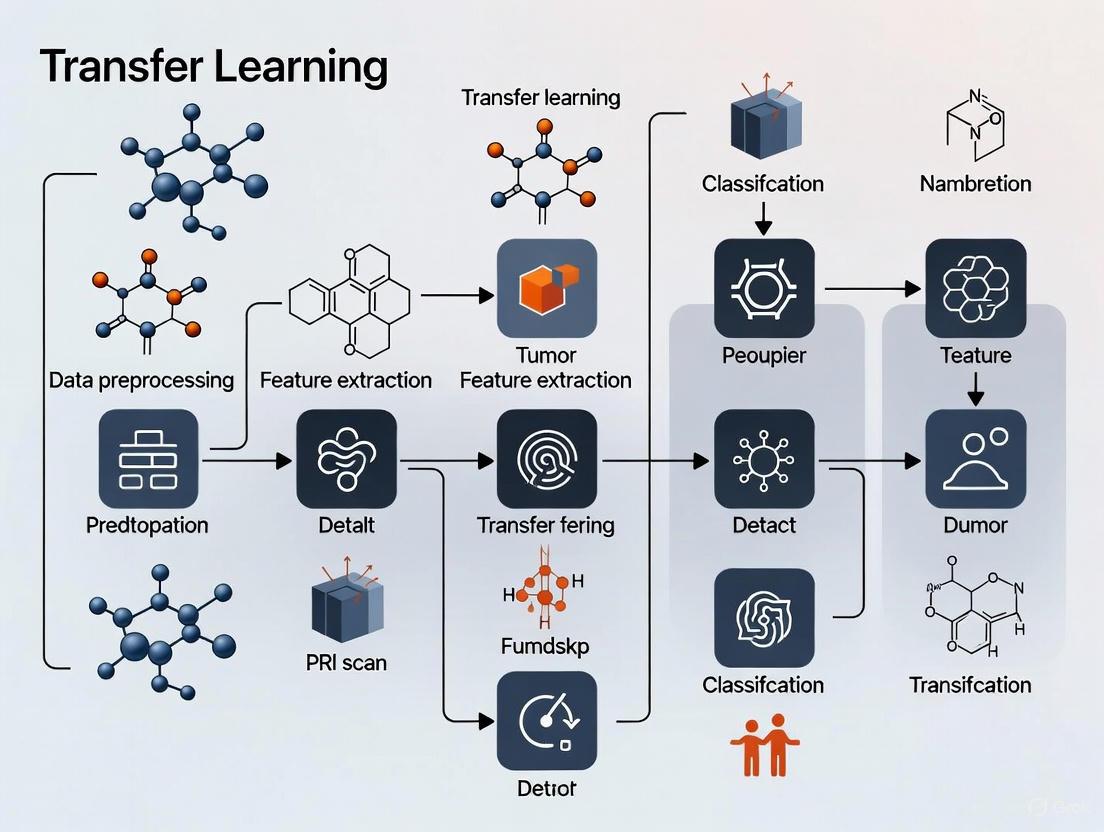

Transfer Learning for MRI Brain Tumor Detection: Advanced Models, Clinical Implementation, and Future Directions

This article comprehensively reviews the application of transfer learning (TL) for brain tumor detection in MRI scans, tailored for researchers and drug development professionals. It explores the foundational principles of TL and its necessity in medical imaging, details state-of-the-art methodologies including hybrid CNN-Transformer architectures and attention mechanisms, and addresses key challenges like data scarcity and model interpretability. The scope also includes a rigorous comparative analysis of model performance and validation techniques, synthesizing findings to discuss future trajectories for integrating these AI tools into biomedical research and clinical diagnostics to enhance precision medicine.

Unlocking Cancer Insights: A Comprehensive Guide to Natural Language Processing for EHRs in Oncology Research and Drug Development

This article provides a detailed examination of Natural Language Processing (NLP) applications for analyzing Electronic Health Records (EHRs) in oncology. Tailored for researchers, scientists, and drug development professionals, it covers the foundational role of NLP in addressing the global cancer burden by transforming unstructured clinical notes into analyzable data. The scope spans from core methodologies like information extraction and text classification to performance comparisons of advanced models, including bidirectional transformers. It further addresses key implementation challenges such as model generalizability and integration into clinical workflows, and validates the real-world feasibility of NLP through case studies in lung, prostate, and brain cancer. The synthesis offers a roadmap for leveraging NLP to accelerate cancer research, enhance clinical trial design, and pave the way for data-driven, personalized cancer care.

NGS for Microsatellite Instability Testing: A Comprehensive Review of Performance, Applications, and Clinical Validation

This article provides a comprehensive analysis of next-generation sequencing (NGS) for detecting microsatellite instability (MSI), a critical biomarker for immunotherapy response and Lynch syndrome identification. Tailored for researchers, scientists, and drug development professionals, it explores the foundational biology of MSI, details the development and application of novel NGS algorithms and panels, addresses key troubleshooting and optimization challenges, and presents extensive validation data comparing NGS performance against traditional methods like immunohistochemistry (IHC) and PCR. The synthesis of recent large-scale studies offers crucial insights for implementing NGS-MSI in clinical research and therapeutic development.

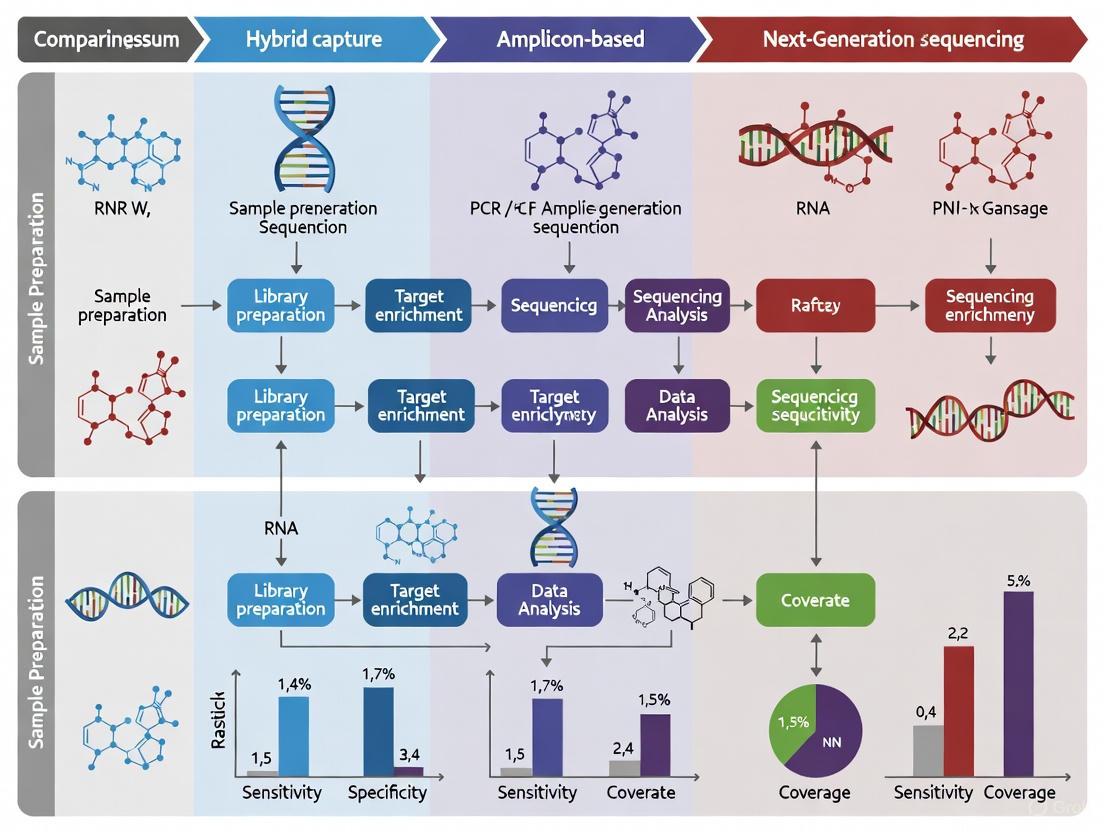

Hybrid Capture vs. Amplicon-Based NGS: A Comprehensive Guide for Biomedical Researchers

Targeted next-generation sequencing (NGS) is a cornerstone of modern genomics research and clinical diagnostics, with hybridization capture and amplicon-based methods being the two predominant target enrichment techniques. This article provides a comprehensive comparison for researchers, scientists, and drug development professionals, detailing the fundamental principles, optimal applications, and technical considerations for each method. It explores foundational workflows, guides method selection based on specific research goals such as large panel sequencing versus focused variant detection, addresses common challenges and optimization strategies, and synthesizes performance data from recent studies to support informed experimental design and implementation in biomedical research.

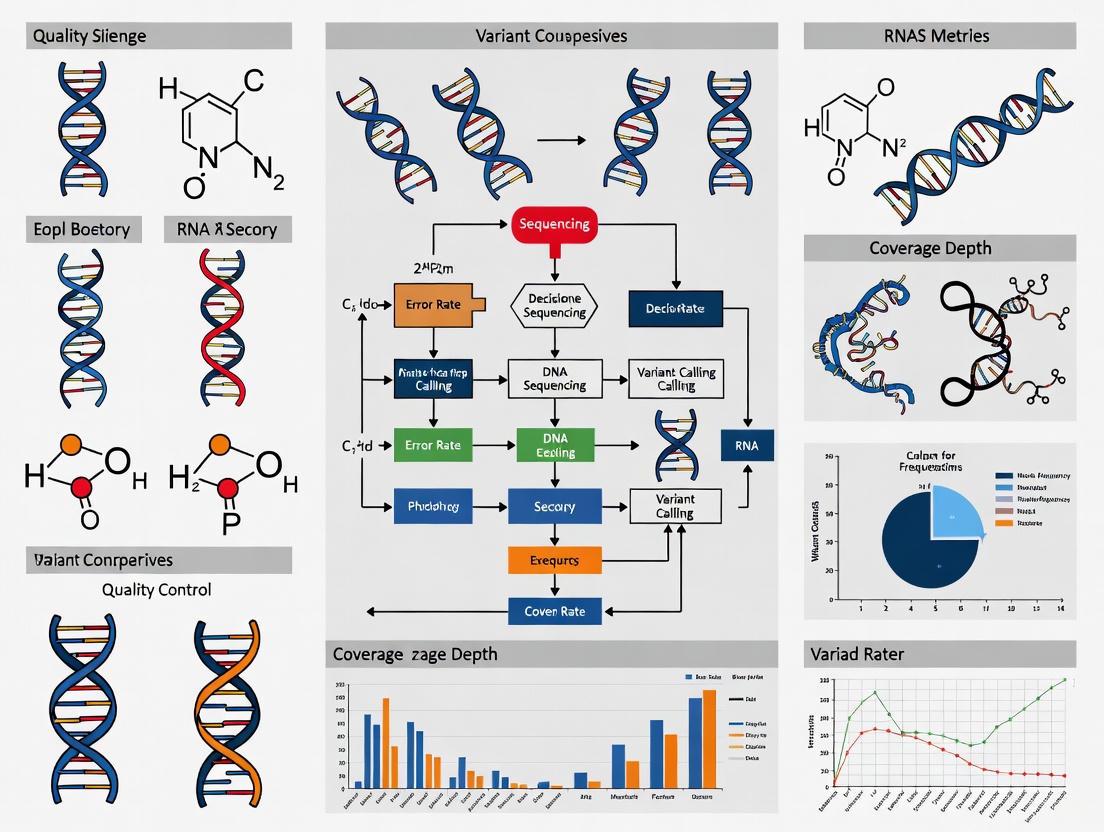

Ensuring Precision in Oncology: A Comprehensive Guide to NGS Quality Control Metrics for Cancer Diagnostics

Next-generation sequencing (NGS) has become a cornerstone of precision oncology, enabling comprehensive genomic profiling that guides diagnosis, prognostication, and therapeutic selection. However, the clinical utility of NGS data depends on rigorous quality control (QC) at every stage of the workflow. This article provides researchers, scientists, and drug development professionals with a detailed framework for implementing robust NGS QC metrics. We cover foundational principles, methodological applications for both tissue and liquid biopsy samples, troubleshooting for common pitfalls, and best practices for analytical validation. By synthesizing current standards and emerging practices, this guide aims to support the generation of reliable, clinically actionable genomic data that can safely inform patient care and therapeutic development.

Liquid Biopsy Workflow for Cancer Monitoring: A Comprehensive Guide for Precision Oncology and Drug Development

This article provides a comprehensive overview of the liquid biopsy workflow for cancer monitoring, tailored for researchers and drug development professionals. It explores the foundational principles of circulating biomarkers, including circulating tumor DNA (ctDNA), circulating tumor cells (CTCs), and epigenetic markers. The content details advanced methodological approaches from sample collection to data analysis, addresses key challenges in sensitivity and standardization, and presents recent validation studies and comparative performance data. By synthesizing current evidence and future directions, this guide aims to support the integration of liquid biopsy into clinical trial design and precision oncology strategies, enabling non-invasive, real-time monitoring of tumor dynamics.

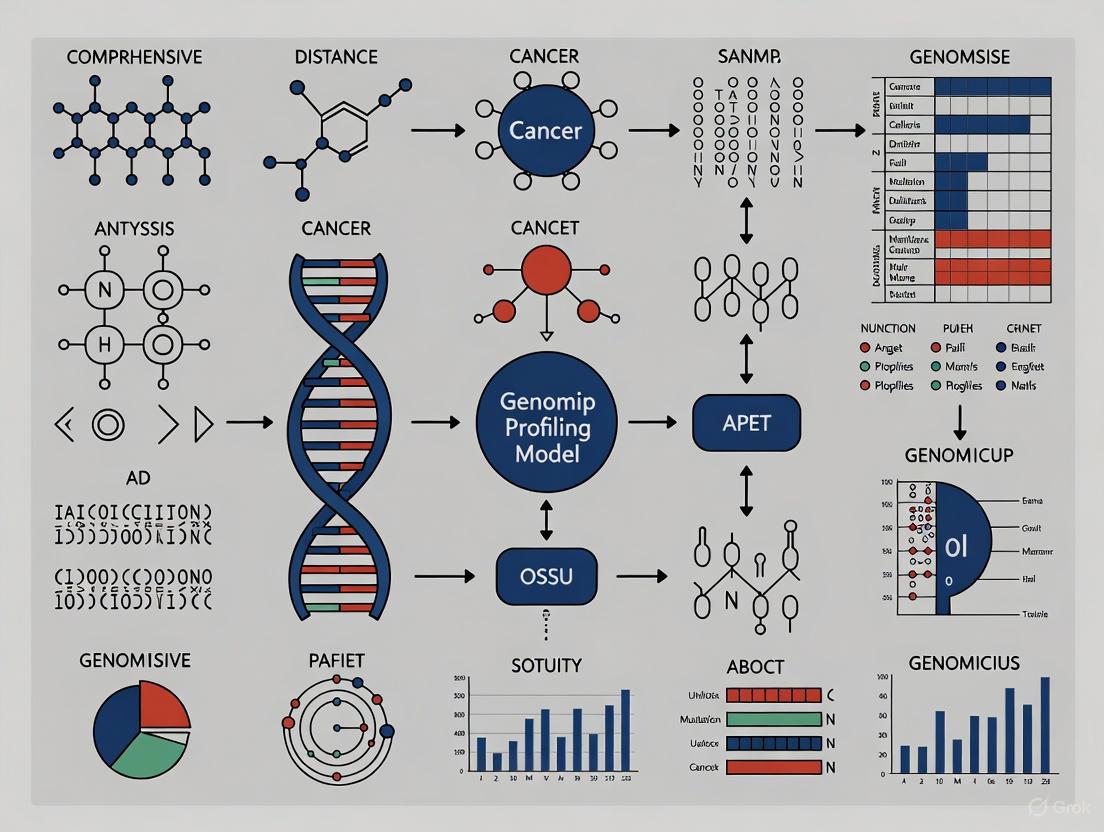

Comprehensive Genomic Profiling in Oncology: From Diagnostic Revolution to Therapeutic Precision

Comprehensive genomic profiling (CGP) represents a paradigm shift in cancer diagnostics, moving beyond single-gene testing to simultaneously analyze hundreds of cancer-related genes and genomic signatures. This article examines the foundational principles, methodological applications, and clinical validation of CGP technologies, highlighting their critical role in identifying targetable alterations, refining tumor classification, and guiding precision therapeutic strategies. Through exploration of emerging evidence and implementation challenges, we provide researchers and drug development professionals with a comprehensive framework for understanding how CGP is transforming oncology research and clinical trial design while addressing current limitations and future directions in the field.

DNA Sequence Representation Methods: A Comparative Analysis for Biomedical Research and Clinical Applications

This article provides a comprehensive comparative analysis of DNA sequence representation methods, tracing their evolution from foundational computational techniques to advanced AI-driven models. Tailored for researchers, scientists, and drug development professionals, it explores core methodologies including k-mer analysis, alignment-free approaches, and large language models (LLMs) like Scorpio and BERTax. The scope covers foundational principles, practical applications in genomics and diagnostics, strategies for troubleshooting and optimization, and rigorous validation techniques. By synthesizing current trends and performance data, this analysis serves as a critical guide for selecting and implementing the most effective sequence representation strategies to drive innovation in biomedical research and clinical practice.

Beyond the Data Desert: Innovative Strategies to Overcome Scarcity in Medical Genomics Research

This article addresses the critical challenge of data scarcity in medical genomics, a major bottleneck hindering drug discovery and precision medicine. It explores the root causes of data scarcity, including lack of diversity in genomic datasets, complex data-sharing regulations, and analytical hurdles. The article provides a comprehensive guide to modern solutions, such as synthetic data generation with Generative AI, federated learning, and the strategic use of multi-omics data. Aimed at researchers, scientists, and drug development professionals, it offers practical methodologies, troubleshooting advice for data integration, and frameworks for validating research findings within data-constrained environments to ensure robust and equitable genomic discoveries.

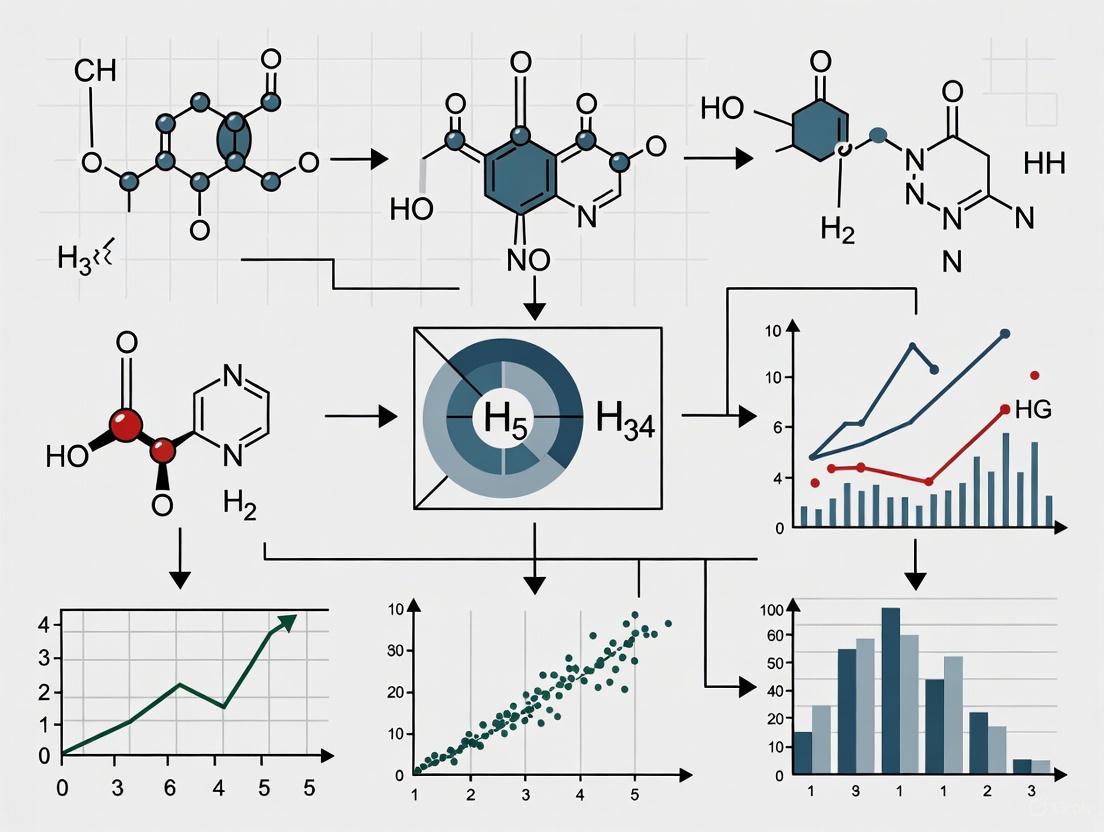

Enhancing Pharmacophore Models: Strategies for Superior Specificity and Selectivity in Drug Discovery

This article provides a comprehensive guide for researchers and drug development professionals on advancing pharmacophore model specificity and selectivity. It covers foundational principles, explores advanced methodological approaches including structure-based and ligand-based modeling, and details optimization techniques such as exclusion volumes and machine-learned informacophores. The content further addresses rigorous validation protocols using statistical metrics such as ROC-AUC and the enrichment factor (EF), alongside comparative analysis of software tools. By synthesizing these strategies, the article serves as a roadmap for creating highly predictive pharmacophore models that improve virtual screening success rates and accelerate the identification of novel therapeutic candidates.