Validating Real-Time Oncology Data from EHRs: A Framework for Reliable Real-World Evidence in Cancer Research and Drug Development

Abstract

This article provides a comprehensive framework for the validation of real-time oncology data extracted from Electronic Health Records (EHRs), tailored for researchers and drug development professionals. It explores the critical imperative for timely, high-quality data in oncology surveillance and research. The content details advanced methodological approaches for data extraction and harmonization, including the use of common data models and AI-driven curation. It further addresses pervasive challenges such as data fragmentation and interoperability, offering practical optimization strategies. Finally, the article synthesizes validation frameworks and comparative studies, presenting key metrics for assessing data fitness-for-use in generating reliable real-world evidence for regulatory and health technology assessment decisions.

The Imperative for Real-Time Data in Modern Oncology

The Growing Burden of Cancer and the Limitations of Traditional Surveillance

The global burden of cancer continues to rise, necessitating robust surveillance systems to generate accurate, comprehensive data for public health interventions and clinical research. Traditional cancer surveillance methodologies face significant challenges in data standardization, interoperability, and adaptability to diverse healthcare settings. This is particularly evident in the context of utilizing electronic health records (EHRs), which contain valuable real-world data but present substantial extraction and standardization hurdles. The transition from EHRs to reliable real-world evidence requires sophisticated approaches to data validation, especially in precision oncology where data complexity is substantial. This article examines current methodologies for validating oncology data extracted from EHRs, comparing traditional and emerging approaches to address these critical challenges.

Experimental Protocols for EHR Data Validation

Research teams have developed distinct methodological frameworks to ensure the quality and reliability of data extracted from EHRs for oncology applications. These protocols generally fall into three categories: manual abstraction as a gold standard, traditional natural language processing (NLP) pipelines, and emerging large language model (LLM)-based approaches.

1. Manual Abstraction and Gold Standard Validation: The most established validation approach uses manual chart abstraction by clinical experts to create a gold standard dataset. In one implementation, researchers pulled 106 lung cancer and 45 sarcoma patient cases from databases complying with the Precision Oncology Core Data Model (Precision-DM). This reference dataset enabled quantitative evaluation of automated extraction tools, with mixed results: descriptive fields were retrieved accurately, but accuracy for date-derived variables ranged from 50% to 86% because dates such as Date of Diagnosis and Treatment Start Date were extracted less reliably. This limited reliable calculation of key oncology endpoints such as Overall Survival and Time to First Treatment [1].

2. Traditional NLP and Data Mining Pipelines: Prior to the advent of LLMs, many institutions implemented toolkits incorporating data mining scripts and rule-based NLP to automatically retrieve structured variables from EHRs. These pipelines faced challenges with the predominantly unstructured nature of clinical notes (approximately 80% of EHR data), requiring extensive customization to handle site-specific documentation styles and coding practices [1] [2]. The infrastructure based on Precision-DM standardization demonstrated potential for cross-institutional adoption but required enhancement for improved accuracy on specific variables [1].

3. LLM-Based Extraction Frameworks: More recently, research teams have developed structured frameworks specifically for evaluating LLM-based data extraction. Flatiron Health's Validation of Accuracy for LLM/ML-Extracted Information and Data (VALID) Framework implements a GDPR-compliant platform for duplicate abstraction, where two expert reviewers independently extract clinical data from patient records. This enables calculation of performance metrics (recall, precision, F1 score) to benchmark LLMs against human extraction across different healthcare systems [3]. Similarly, researchers at Ontada employed prompt engineering with oncology-specific terminology to guide LLMs in extracting structured data from unstructured clinical documents, validating outputs against a gold standard created by clinical specialists [4].
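The recall, precision, and F1 metrics used for this kind of benchmarking can be illustrated by treating each extracted (patient, field, value) triple as a prediction and the expert abstraction as the reference. The following Python sketch is a minimal illustration under that assumption; the function `extraction_metrics`, the record layout, and the example values are hypothetical and do not reproduce the VALID Framework's implementation.

```python
from typing import Dict, Tuple

# Hypothetical record layout: (patient_id, field_name) -> extracted value
Extraction = Dict[Tuple[str, str], str]


def extraction_metrics(predicted: Extraction, reference: Extraction) -> Dict[str, float]:
    """Compare model-extracted values with a reference (expert) abstraction.

    A prediction is a true positive only if the reference holds the same
    (patient, field) key with the same value. Predictions that are absent from
    the reference or carry a different value count as false positives; reference
    values the model missed or got wrong count as false negatives.
    """
    tp = sum(1 for key, value in predicted.items() if reference.get(key) == value)
    fp = len(predicted) - tp
    fn = len(reference) - tp
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


reference = {
    ("p1", "histology"): "adenocarcinoma",
    ("p1", "stage"): "IIIA",
    ("p2", "histology"): "squamous cell carcinoma",
    ("p2", "stage"): "IV",
}
predicted = {
    ("p1", "histology"): "adenocarcinoma",
    ("p1", "stage"): "IIIB",  # wrong value: counted as one FP and one FN
    ("p2", "histology"): "squamous cell carcinoma",
    # ("p2", "stage") not extracted: one more FN
}
print(extraction_metrics(predicted, reference))
```

Scoring a wrong value as both a false positive and a false negative is one common convention; a framework could instead report field-level accuracy separately, so the convention should be stated alongside any reported F1.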

Comparative Performance Evaluation

The table below summarizes quantitative performance data for different approaches to oncology data extraction from EHRs:

Table 1: Performance Metrics of Oncology Data Extraction Methodologies

| Extraction Methodology | Cancer Types Evaluated | Key Data Elements | Reported Performance | Reference Dataset |
|---|---|---|---|---|
| Traditional NLP Pipeline | Lung cancer, sarcoma | Descriptive fields, Date of Diagnosis, Treatment Start Date | Accuracy: 50%-86% for temporal variables | 151 patient cases from Precision-DM databases [1] |
| LLM with Prompt Engineering | 26 solid tumors, 14 hematologic malignancies | Cancer diagnosis, histology, grade, TNM staging | F1 scores ≥0.85 for all key clinical elements | Validation against manual extraction by clinical specialists [4] |
| AI-Enhanced Cancer Surveillance (Meta-analysis) | Cervical, oral, urological, gastrointestinal, thoracic | Diagnostic accuracy across imaging and screening | Pooled sensitivity: 88.5% (95% CI 83.2–92.6), specificity: 84.3% (95% CI 78.9–88.7) | 5 studies across 1,234,093 patients or imaging cases [5] |
| Smartphone-Based AI Screening | Oral cancer | Visual detection of suspicious lesions | Sensitivity: 96.7%, Specificity: 96.7% | 108,948 images [5] |
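Sensitivity and specificity figures like those in Table 1 are derived from a 2×2 table of screening results against a reference diagnosis. As a hedged sketch for a single study (pooled estimates such as those in [5] require a meta-analytic model and are not reproduced here), the following computes both proportions with Wilson score 95% intervals; the function names and the counts are illustrative only.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 gives ~95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


def sens_spec(tp: int, fn: int, tn: int, fp: int) -> dict:
    """Sensitivity = TP/(TP+FN) and specificity = TN/(TN+FP), each with a Wilson CI."""
    return {
        "sensitivity": tp / (tp + fn),
        "sensitivity_ci": wilson_interval(tp, tp + fn),
        "specificity": tn / (tn + fp),
        "specificity_ci": wilson_interval(tn, tn + fp),
    }


# Illustrative counts only, not taken from any cited study
result = sens_spec(tp=90, fn=10, tn=170, fp=30)
lo, hi = result["sensitivity_ci"]
print(f"Sensitivity {result['sensitivity']:.1%} (95% CI {lo:.1%} to {hi:.1%})")
```

The Wilson interval is used instead of the simple normal approximation because it stays within [0, 1] and behaves better when a proportion is close to 100%, as screening sensitivities often are.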

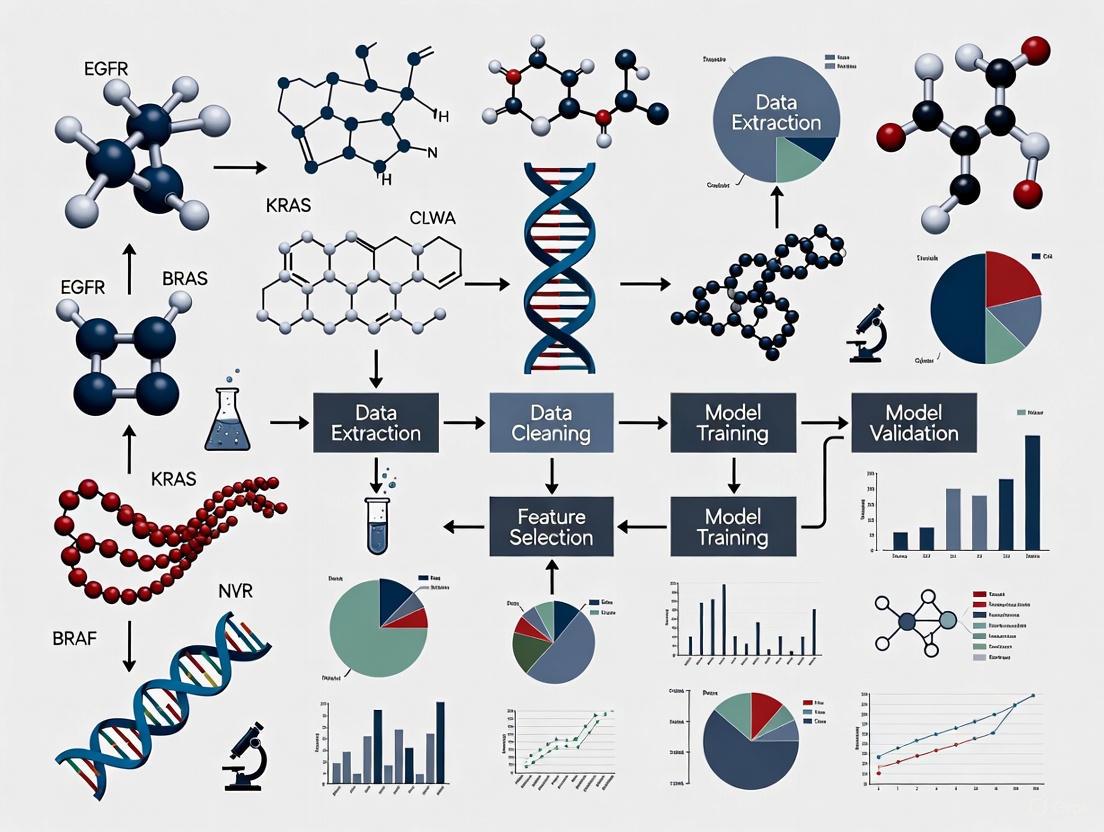

Visualization of EHR Data Extraction Workflows

The following diagram illustrates the core workflow for validating oncology data extracted from electronic health records:

EHR Data Validation Workflow

Research Reagent Solutions for Oncology Data Extraction

The table below details key technologies and platforms used in advanced oncology data extraction and validation:

Table 2: Essential Research Tools for Oncology EHR Data Extraction

| Tool/Platform | Type | Primary Function | Key Features |
|---|---|---|---|
| Flatiron Health VALID Framework | Validation Framework | Evaluating LLM-extracted information | GDPR-compliant platform, duplicate abstraction, recall/precision/F1 metrics [3] |
| Precision-DM (Precision Oncology Core Data Model) | Data Standardization | Standardizing EHR data for precision oncology | Common data model for molecular tumor boards, structured data elements [1] |
| Ontada LLM Platform | Large Language Model | Extracting structured oncology data from clinical notes | Prompt engineering with oncology terminology, validation against manual abstraction [4] |
| IQVIA RWE Platform | Real-World Evidence Platform | Clinical trial data management and analysis | Centralized data management, advanced analytics, regulatory compliance [6] |
| TriNetX | Real-World Evidence Platform | Clinical research and trial optimization | Data encryption, access controls, audit trails, advanced analytics [6] |

Discussion

The growing burden of cancer demands more sophisticated approaches to surveillance that can overcome the limitations of traditional systems. Current research demonstrates that while traditional NLP pipelines for EHR data extraction have provided a foundation for automation, they face significant challenges with variable accuracy, particularly for temporal oncology endpoints [1]. The emergence of LLM-based approaches represents a substantial advancement, achieving high F1 scores (≥0.85) across diverse cancer types by leveraging prompt engineering with oncology-specific terminology [4].

Critical to the adoption of these technologies are robust validation frameworks like Flatiron's VALID, which implements systematic approaches to benchmark automated extraction against human experts [3]. The field is also addressing infrastructure challenges through standardized data models like Precision-DM, which enables cross-institutional collaboration while maintaining data quality [1].

For researchers and drug development professionals, these advancements enable more reliable generation of real-world evidence from routine clinical practice. This has profound implications for understanding treatment patterns, supporting regulatory decisions, and accelerating the development of novel therapies, particularly in precision oncology where molecular data complexity compounds traditional surveillance challenges [2].

As the field evolves, future directions will likely focus on expanding extraction capabilities to include biomarkers, medication history, and treatment outcomes, further enriching the real-world data available for cancer research and care optimization [4].

Cancer registries have traditionally served as static repositories of historical data, compiled through labor-intensive manual processes with significant time lags. However, a paradigm shift is underway toward dynamic systems capable of real-time data reporting. This transformation, powered by automated extraction technologies and standardized data models, is creating unprecedented opportunities for epidemiological research, drug development, and clinical decision-making. This guide compares the performance of emerging real-time reporting methodologies against traditional registry approaches, examining their validation through recent experimental implementations. We provide comprehensive experimental data and technical specifications to inform researchers, scientists, and drug development professionals navigating this rapidly evolving landscape.

The Evolution from Traditional to Real-Time Cancer Registries

Traditional population-based cancer registries have been indispensable for understanding cancer epidemiology, tracking incidence trends, and informing public health policy. These systems typically rely on manual data extraction from electronic health records (EHRs), a process that is both time-consuming and labor-intensive [7]. The Netherlands Cancer Registry (NCR), for instance, exemplifies this conventional approach where all Dutch cancer patients are manually recorded, creating significant delays between patient encounters and data availability for research and surveillance [7].

The limitations of this static model have become increasingly apparent amid rapid advances in cancer treatment. The growing demand for real-world evidence to evaluate diagnostic and therapeutic strategies used in daily practice has exposed the inadequacies of manual registration systems [7]. Furthermore, the rise of precision oncology, with its transition from hundreds of diagnoses to thousands of distinct cancer subtypes driven by molecular testing, places unique burdens on traditional registry structures that were not designed for such complexity [2].

In response to these challenges, a new model of dynamic, real-time reporting has emerged. These systems leverage automated data extraction technologies that harmonize structured EHR data across multiple healthcare institutions into common data models, supporting near real-time enrichment of cancer registries [7] [8]. This transition represents a fundamental shift from cancer registries as historical archives to their new role as living resources that can support contemporary clinical decision-making and accelerate oncology research.
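Harmonization into a common data model essentially means mapping each site's local field names and codes onto one shared schema. The sketch below illustrates the idea with two hypothetical hospital exports of laboratory results; the site names, field names, local codes, and the `CommonLabResult` record are invented for illustration and do not describe the Datagateway system's actual model.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List


@dataclass(frozen=True)
class CommonLabResult:
    """Hypothetical common-model record shared by all sites."""
    patient_id: str
    test: str          # harmonized test name
    value: float
    unit: str
    collected: date


# Hypothetical per-site mappings from local field names to common-model fields
SITE_FIELD_MAPS: Dict[str, Dict[str, str]] = {
    "hospital_a": {"pid": "patient_id", "lab_code": "test", "result": "value",
                   "units": "unit", "drawn_on": "collected"},
    "hospital_b": {"patient": "patient_id", "analyte": "test", "val": "value",
                   "uom": "unit", "date": "collected"},
}
# Hypothetical per-site mappings from local test codes to harmonized names
SITE_TEST_MAPS: Dict[str, Dict[str, str]] = {
    "hospital_a": {"HB": "hemoglobin", "PLT": "platelets"},
    "hospital_b": {"Hgb": "hemoglobin", "Thrombo": "platelets"},
}


def harmonize(site: str, rows: List[dict]) -> List[CommonLabResult]:
    """Rename fields, translate local test codes, and coerce types."""
    field_map, test_map = SITE_FIELD_MAPS[site], SITE_TEST_MAPS[site]
    out = []
    for row in rows:
        r = {field_map[k]: v for k, v in row.items() if k in field_map}
        out.append(CommonLabResult(
            patient_id=f"{site}:{r['patient_id']}",  # keep IDs unique across sites
            test=test_map[r["test"]],
            value=float(r["value"]),
            unit=r["unit"],
            collected=date.fromisoformat(r["collected"]),
        ))
    return out


a = harmonize("hospital_a", [{"pid": "17", "lab_code": "HB", "result": "7.9",
                              "units": "mmol/L", "drawn_on": "2024-03-01"}])
b = harmonize("hospital_b", [{"patient": "A9", "analyte": "Hgb", "val": 8.2,
                              "uom": "mmol/L", "date": "2024-03-02"}])
print(a + b)
```

A production pipeline would also convert units and map local codes to standard terminologies such as LOINC rather than to free-text names; the sketch assumes both sites already report in the same unit.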

Performance Comparison: Real-Time vs. Traditional Registry Systems

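Comparisons between emerging real-time reporting systems and traditional registry approaches show clear differences in timeliness and operational efficiency. Because the validation studies below use manually registered data as their reference standard, accuracy is reported only for the automated systems, and the traditional column records "Not directly reported" for most metrics. The following analysis is based on experimental implementations across multiple research initiatives.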

Table 1: Comparative Performance of Real-Time vs. Traditional Registry Systems

| Performance Metric | Traditional Registry Systems | Real-Time Reporting Systems | Validation Study |
|---|---|---|---|
| Diagnosis Accuracy | Not directly reported | 100% concordance with registered NCR diagnoses | Datagateway System [7] [8] |
| New Case Identification | Not directly reported | 95% accuracy against inclusion criteria | Datagateway System [7] [8] |
| Treatment Regimen Accuracy | Not directly reported | 97-100% across cancer types | Datagateway System [7] |
| Combination Therapy Classification | Not directly reported | 97% accuracy (3% misclassification) | Datagateway System [7] |
| Laboratory Data Accuracy | Not directly reported | ~100% match | Datagateway System [7] |
| Toxicity Indicators Accuracy | Not directly reported | 72%-100% accuracy | Datagateway System [7] |
| Data Currency | Months to years | Near real-time | Multiple Studies [7] [9] |
| EHR-EDC Concordance (CDS) | Not applicable | 34% (increasing to 87% when disease evaluation was captured in both systems) | ICAREdata Project [9] |
| EHR-EDC Concordance (TPC) | Not applicable | 79% | ICAREdata Project [9] |

Table 2: Specialized Performance Metrics by Cancer Type

| Cancer Type | Validation Focus | Accuracy Rate | Sample Size | System |
|---|---|---|---|---|
| Acute Myeloid Leukemia (AML) | Treatment regimens | 100% | 254 patients | Datagateway [7] |
| Multiple Myeloma (MM) | Treatment regimens | 97% | 117 patients, 198 regimens | Datagateway [7] |
| Lung Cancer | New diagnosis extraction | 95% | 938 patients | Datagateway [7] |
| Breast Cancer | Overall system performance | Included in multi-cancer validation | Not specified | Datagateway [7] |
| Sarcoma | Date-derived variables (e.g., Overall Survival) | Variable (50-86%) | 45 cases | Precision-DM Toolset [10] |
| Solid Tumors | Cancer Disease Status (CDS) | 87% (when disease evaluation was captured in both systems) | 15 trials | ICAREdata [9] |

Experimental Protocols and Methodologies

The Datagateway Validation Study

The Netherlands Cancer Registry implemented and validated an automated real-time data extraction system called "Datagateway" that harmonizes structured EHR data across multiple hospitals into a common model [7] [8].

Experimental Protocol:

- Data Sources: EHR data from patients with acute myeloid leukemia, multiple myeloma, lung cancer, and breast cancer were extracted via the Datagateway system [7]

- Validation Method: Extracted data were compared against manually registered NCR data and original EHR source data [7]

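- Patient Cohorts: 254 patients with AML and 117 patients with multiple myeloma (198 treatment regimens) for treatment validation, and 938 patients with lung cancer for new-diagnosis extraction; the size of the breast cancer cohort is not specified [7]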

- Accuracy Assessment: Multiple data elements were validated including diagnoses, treatment regimens, laboratory values, and toxicity indicators [7]

Key Findings:

- The system achieved 100% accuracy for identifying existing diagnoses compared to manually registered NCR data [7]

- For new diagnoses, the system demonstrated 95% accuracy against NCR inclusion criteria [7]

- Treatment identification showed high accuracy (100% for AML, 97% for multiple myeloma) with only 3% of combination therapies misclassified [7]

- Laboratory values matched "virtually completely" between systems [7]
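Validation of this kind comes down to matching automatically extracted records with manually registered ones and reporting, per data element, the share that agree. A minimal sketch, assuming both sources share a patient identifier and hold one value per element; `element_concordance`, the field names, and the example regimens are hypothetical.

```python
from typing import Dict, List


def element_concordance(extracted: Dict[str, dict], registry: Dict[str, dict],
                        elements: List[str]) -> Dict[str, float]:
    """Per-element agreement on patients present in both sources.

    `extracted` and `registry` map patient_id -> {element: value}. Registry
    patients missing from the extraction lower the retrieval rate; element
    agreement is computed only on patients found in both sources.
    """
    shared = sorted(set(extracted) & set(registry))
    result = {"retrieval_rate": len(shared) / len(registry) if registry else 0.0}
    for element in elements:
        pairs = [(extracted[p].get(element), registry[p].get(element)) for p in shared]
        compared = [(e, r) for e, r in pairs if r is not None]  # registry value required
        agree = sum(1 for e, r in compared if e == r)
        result[element] = agree / len(compared) if compared else float("nan")
    return result


registry = {
    "p1": {"diagnosis": "AML", "regimen": "7+3"},
    "p2": {"diagnosis": "MM", "regimen": "VRd"},
    "p3": {"diagnosis": "MM", "regimen": "Dara-VRd"},
    "p4": {"diagnosis": "MM", "regimen": "KRd"},      # not retrieved by extraction
}
extracted = {
    "p1": {"diagnosis": "AML", "regimen": "7+3"},
    "p2": {"diagnosis": "MM", "regimen": "VRd"},
    "p3": {"diagnosis": "MM", "regimen": "VRd"},      # combination misclassified
}
scores = element_concordance(extracted, registry, ["diagnosis", "regimen"])
print(scores)  # retrieval 0.75, diagnosis 1.0, regimen 2/3
```

Reporting retrieval separately from element agreement mirrors the distinction above between finding registered patients and classifying their treatments correctly.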

The following workflow diagram illustrates the Datagateway validation process:

The ICAREdata Project Implementation

The Integrating Clinical Trials and Real-World Endpoints (ICAREdata) project demonstrated an alternative approach to real-world data capture using standardized oncology data elements [9].

Experimental Protocol:

- Data Standards: Implemented Minimal Common Oncology Data Elements (mCODE), which is built on the HL7 FHIR standard [9]

- Study Scope: 10 clinical sites (academic and community centers) across 15 trials [9]

- Data Elements: Focused on Cancer Disease Status (CDS) and Treatment Plan Change (TPC) [9]

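- Implementation Tools: Epic SmartForms for Cancer Disease Status questions and Epic SmartPhrases for Treatment Plan Change documentation [9]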

- Extraction Method: mCODE Extraction Framework with FHIR-based transmission [9]

Key Findings:

- Overall concordance rate of 79% for Treatment Plan Change between EHR and electronic data capture systems [9]

- Concordance of 34% for Cancer Disease Status, increasing to 87% when disease evaluation was captured in both systems [9]

- Demonstrated feasibility of standards-based structured data capture and transmission for clinical trials [9]
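The gap between the two Cancer Disease Status figures (34% overall versus 87% when both systems captured a disease evaluation) most plausibly reflects the choice of denominator. The hypothetical sketch below makes that distinction explicit; the status labels, the `concordance` function, and the example data are illustrative and are not drawn from the ICAREdata study.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical paired observations per assessment: (EHR value, EDC value);
# None means the system did not capture an evaluation at that time point.
Pair = Tuple[Optional[str], Optional[str]]


def concordance(pairs: List[Pair]) -> Dict[str, float]:
    """Overall concordance keeps every time point in the denominator, so a value
    missing from either system counts as discordant. Conditional concordance
    restricts the denominator to time points captured in both systems."""
    both = [(e, c) for e, c in pairs if e is not None and c is not None]
    matches = sum(1 for e, c in both if e == c)
    return {
        "overall": matches / len(pairs) if pairs else float("nan"),
        "when_both_captured": matches / len(both) if both else float("nan"),
    }


pairs = [
    ("stable", "stable"),
    ("worsening", "worsening"),
    ("stable", None),         # captured in the EHR only
    (None, "improving"),      # captured in the EDC only
    ("improving", "stable"),  # captured in both, but disagree
]
print(concordance(pairs))  # overall 2/5 = 0.4, when_both_captured 2/3
```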

Technological Infrastructure for Real-Time Reporting

Common Data Models and Standards

Successful real-time reporting systems rely on standardized data models that enable interoperability across different healthcare systems:

mCODE (Minimal Common Oncology Data Elements): An open-source set of structured oncology data elements, built on the HL7 FHIR standard and designed to facilitate electronic exchange of cancer-specific data between systems [9].

Precision-DM (Precision Oncology Core Data Model): A comprehensive model developed to support clinical-genomic data standardization, containing 22 profiles and 494 data elements with mappings to standardized terminologies [10].

FHIR (Fast Healthcare Interoperability Resources): A standard for electronic healthcare data exchange that supports API-based data access, increasingly adopted by EHR vendors for research purposes [9].
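To make these standards concrete, the sketch below assembles a minimal FHIR R4 Observation for a cancer disease status assessment as a plain JSON document. It is an illustration only: it uses free-text `CodeableConcept`s, the mCODE profile URL is an assumption to verify against the published mCODE Implementation Guide, and the helper `cancer_disease_status` and patient ID are hypothetical.

```python
import json
from datetime import date

# Assumed canonical URL of the mCODE Cancer Disease Status profile; verify
# against the mCODE Implementation Guide before use.
MCODE_CDS_PROFILE = (
    "http://hl7.org/fhir/us/mcode/StructureDefinition/mcode-cancer-disease-status"
)


def cancer_disease_status(patient_id: str, status_text: str, assessed: date) -> dict:
    """Build a minimal FHIR R4 Observation as a Python dict.

    The coded values mCODE requires (a LOINC observation code and SNOMED CT
    status codes) are intentionally omitted; only `text` is populated.
    """
    return {
        "resourceType": "Observation",
        "meta": {"profile": [MCODE_CDS_PROFILE]},
        "status": "final",
        "code": {"text": "Cancer disease status"},
        "subject": {"reference": f"Patient/{patient_id}"},
        "effectiveDateTime": assessed.isoformat(),
        "valueCodeableConcept": {"text": status_text},
    }


obs = cancer_disease_status("example-123", "Patient's condition stable", date(2024, 5, 14))
print(json.dumps(obs, indent=2))  # body that could be sent to a FHIR server's Observation endpoint
```

Because the coded elements are missing, a resource claiming the mCODE profile in this form would fail profile validation; the sketch only shows the overall resource shape that FHIR-based transmission relies on.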

Architecture of Real-Time Reporting Systems

The technological infrastructure supporting real-time cancer registry reporting typically follows a layered architecture:

Research Reagent Solutions: Essential Tools and Technologies

Table 3: Key Research Reagents and Technologies for Real-Time Cancer Registry Implementation

| Tool/Technology | Function | Implementation Example |
|---|---|---|
| HL7 FHIR Standard | Enables electronic exchange of health data between systems | ICAREdata project used FHIR for data transmission [9] |
| mCODE (Minimal Common Oncology Data Elements) | Standardized structured data elements for oncology | Used for cancer disease status and treatment representation [9] |
| Epic SmartForms | Structured data capture within EHR problem lists | Implemented for Cancer Disease Status questions [9] |
| Epic SmartPhrases | Structured documentation in clinical notes | Used for Treatment Plan Change documentation [9] |
| mCODE Extraction Framework | Open-source tool for data formatting and transmission | Interim solution for FHIR-based transmission [9] |
| Natural Language Processing (NLP) | Extracts information from unstructured clinical text | Used for retrieving performance status with 93% accuracy [10] |
| Precision-DM Model | Comprehensive clinical-genomic data standardization | Supports molecular data integration with clinical phenotypes [10] |
| Common Data Model Harmonization | Transforms heterogeneous EHR data into standardized format | Datagateway system harmonized data across multiple hospitals [7] [8] |

Implications for Research and Drug Development

The transition to real-time reporting in cancer registries presents significant opportunities for the research community and pharmaceutical industry:

Accelerated Clinical Research: Real-world data from automated systems can supplement or serve as external control cohorts in clinical trials, potentially reducing recruitment timelines and costs [7]. The ability to identify patient populations meeting specific criteria in near real-time enhances clinical trial feasibility and efficiency.

Enhanced Safety Monitoring: Automated systems can provide more timely insights into treatment toxicities and adverse events, with the Datagateway validation reporting 72-100% accuracy for toxicity indicators [7]. This enables more responsive safety monitoring and pharmacovigilance.

Precision Medicine Applications: Standardized data models that incorporate molecular testing results support the development of targeted therapies for specific cancer subtypes [10]. The integration of genomic and clinical data is essential for advancing personalized treatment approaches.

Health Economics and Outcomes Research: More current and comprehensive data on treatment patterns and outcomes facilitates robust cost-effectiveness analyses and population health management, supporting value-based care initiatives in oncology.

The evolution from static to dynamic cancer registries represents a transformative advancement in oncology data infrastructure. Validation studies show that automated real-time reporting systems can achieve 95-100% accuracy for key data elements such as diagnoses and treatment regimens, while substantially improving data currency compared with traditional manual approaches [7] [8]. The successful implementation of standards-based approaches like mCODE and FHIR further supports the scalability and interoperability of these systems [9].

For researchers, scientists, and drug development professionals, these technological advances create unprecedented opportunities to leverage real-world evidence throughout the therapeutic development lifecycle. As these systems continue to mature, incorporating artificial intelligence and enhanced natural language processing capabilities, the potential for innovation in cancer research and care delivery will continue to expand, ultimately accelerating progress against cancer.

The validation of real-time oncology data from electronic health records (EHRs) is transforming oncology research and drug development. By converting unstructured clinical narratives into structured, research-ready data, advanced computational methods are enabling more efficient evidence generation and supporting the advancement of precision medicine. This guide objectively compares the key technologies and methodologies driving this transformation.

Table 1: Performance Comparison of Data Processing Models in Oncology

| Model Name | Primary Function | Test Data | Key Performance Metrics | Reported Limitations / Challenges |
|---|---|---|---|---|
| GPT-4o (OpenAI) [11] | Classify cancer diagnoses from ICD/free-text | 762 unique diagnoses (326 ICD, 436 free-text) [11] | ICD Code Accuracy: 90.8%; Free-text Accuracy: 81.9%; Weighted Macro F1-score (Free-text): 71.8 [11] | Confusion between metastasis and CNS tumors; errors with ambiguous terminology [11] |
| BioBERT (dmis-lab) [11] | Biomedical-specific classification | 762 unique diagnoses [11] | ICD Code Accuracy: 90.8%; Free-text Accuracy: 81.6%; Weighted Macro F1-score (Free-text): 61.5 [11] | Lower performance on unstructured free-text compared to structured ICD codes [11] |
| LLM for Clinical Data Extraction (Ontada) [4] | Extract cancer diagnosis, histology, grade, stage | 26 solid tumors, 14 hematologic malignancies [4] | F1 scores > 0.85 for key data elements (TNM stage, grade, histology) [4] | Requires testing for bias across all cancer populations to ensure fairness [4] |
| Precision-DM Data Pipeline [1] | Standardize EHR data for precision oncology | 106 lung cancer & 45 sarcoma cases [1] | Accuracy for Age at Diagnosis, Overall Survival: 50%-86% [1] | Lower accuracy in extracting dates (e.g., Date of Diagnosis, Treatment Start) [1] |

Detailed Experimental Protocols

Protocol for Validating LLMs in Cancer Diagnosis Categorization

This protocol is based on a benchmark study evaluating large language models (LLMs) and a specialized model on their ability to classify cancer diagnoses from EHRs [11].

- Objective: To evaluate the performance of LLMs (GPT-3.5, GPT-4o, Llama 3.2, Gemini 1.5) and BioBERT in classifying cancer diagnoses from both structured and unstructured EHR data into clinically relevant categories [11].

- Dataset Curation:

- Source data was obtained from 3,456 patient records in the Research Enterprise Data Warehouse [11].

- The test set consisted of 762 unique diagnoses: 326 structured International Classification of Diseases (ICD) code descriptions and 436 unstructured free-text entries from clinical notes [11].

- Two oncology experts defined and validated 14 cancer categories (e.g., Breast, Lung, Gastrointestinal, Central Nervous System) [11].

- Model Implementation & Prompting:

- GPT-3.5, GPT-4o, and Gemini 1.5 were accessed via their respective cloud APIs, Llama 3.2 was run locally using Ollama, and BioBERT was deployed via the Hugging Face Transformers library [11].

- A standardized prompt was used for the LLMs: "Given the following ICD-10 description or treatment note for a radiation therapy patient: {input}, select the most appropriate category from the predefined list: {Category list}. Respond only with the exact category name from the list..." [11].

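- Validation and Metrics: Model outputs were scored against the expert-defined categories, with accuracy and weighted macro F1-score reported separately for ICD code descriptions and free-text entries [11].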
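The prompting and scoring steps can be sketched as follows. This is a hedged illustration, not the study's code: `CATEGORIES` lists only four of the 14 categories, the template reproduces just the visible part of the published prompt (its ending is elided in the source), and `build_prompt`, `parse_response`, and `accuracy_and_weighted_f1` are hypothetical helpers. The weighted F1 here averages per-class F1 by class support, which is one common reading of "weighted macro F1"; the cited study's exact averaging is an assumption.

```python
from collections import Counter
from typing import List, Optional, Tuple

# Hypothetical subset of the 14 expert-defined categories
CATEGORIES = ["Breast", "Lung", "Gastrointestinal", "Central Nervous System"]

# Visible portion of the published prompt; the remainder is elided in the source
PROMPT_TEMPLATE = (
    "Given the following ICD-10 description or treatment note for a radiation "
    "therapy patient: {input}, select the most appropriate category from the "
    "predefined list: {categories}. Respond only with the exact category name "
    "from the list"
)


def build_prompt(text: str) -> str:
    return PROMPT_TEMPLATE.format(input=text, categories=", ".join(CATEGORIES))


def parse_response(raw: str) -> Optional[str]:
    """Accept a reply only if it names exactly one category (case-insensitive)."""
    cleaned = raw.strip().strip(".").lower()
    for category in CATEGORIES:
        if cleaned == category.lower():
            return category
    return None  # off-list answers are scored as errors


def accuracy_and_weighted_f1(gold: List[str], pred: List[Optional[str]]) -> Tuple[float, float]:
    """Accuracy plus the support-weighted average of per-class F1."""
    accuracy = sum(g == p for g, p in zip(gold, pred)) / len(gold)
    weighted_f1 = 0.0
    for label, support in Counter(gold).items():
        tp = sum(g == label and p == label for g, p in zip(gold, pred))
        fp = sum(g != label and p == label for g, p in zip(gold, pred))
        fn = support - tp
        f1 = 2 * tp / (2 * tp + fp + fn) if tp else 0.0
        weighted_f1 += f1 * support / len(gold)
    return accuracy, weighted_f1


gold = ["Lung", "Lung", "Breast", "Central Nervous System"]
raw_replies = ["Lung", "lung.", "Breast", "Metastasis"]  # last reply is off-list
pred = [parse_response(r) for r in raw_replies]
print(build_prompt("Malignant neoplasm of upper lobe, right bronchus or lung"))
print(accuracy_and_weighted_f1(gold, pred))  # (0.75, 0.75)
```

Treating off-list replies as errors rather than re-prompting keeps the metric conservative; whether the study handled such replies the same way is not stated here.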

Protocol for Building a Standardized Real-World Data Pipeline

This methodology focuses on creating a scalable infrastructure to extract and standardize EHR data for precision oncology use cases [1].

- Objective: To develop and evaluate a toolset that automatically retrieves and standardizes descriptive variables and common endpoints from EHRs according to the Precision Oncology Core Data Model (Precision-DM) [1].

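- Data Processing & Toolset Development: The toolset combined data mining scripts and natural language processing to retrieve variables from EHRs and standardize them according to Precision-DM [1].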

- Validation Approach:

- The toolset's performance was validated against a reference dataset of 106 lung cancer and 45 sarcoma patient cases from the Johns Hopkins Molecular Tumor Board [1].

- Accuracy was assessed for key clinical variables, including Age at Diagnosis, Overall Survival, and Time to First Treatment, which were calculated from extracted dates [1].
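Because Age at Diagnosis, Overall Survival, and Time to First Treatment are all computed from extracted dates, a single wrong date propagates into every endpoint that uses it. The sketch below derives the three variables and scores them against a reference with a tolerance; the field names, the tolerance thresholds, and the example dates are hypothetical choices, not the toolset's documented method.

```python
from datetime import date
from typing import Dict, Optional

DAYS_PER_MONTH = 30.4375  # average Gregorian month length in days


def derive_endpoints(rec: Dict[str, Optional[date]]) -> Dict[str, Optional[float]]:
    """Compute date-derived variables; any missing input date yields None.

    Simplification: survival is measured to death or last contact without a
    censoring indicator, which a real Overall Survival analysis must carry.
    """
    dob, dx = rec.get("birth_date"), rec.get("diagnosis_date")
    tx, last = rec.get("treatment_start"), rec.get("death_or_last_contact")

    def months(start: Optional[date], end: Optional[date]) -> Optional[float]:
        return (end - start).days / DAYS_PER_MONTH if start and end else None

    age = None
    if dob and dx:
        # Completed years, minus one if the birthday had not yet occurred
        age = dx.year - dob.year - ((dx.month, dx.day) < (dob.month, dob.day))
    return {
        "age_at_diagnosis": age,
        "overall_survival_months": months(dx, last),
        "time_to_first_treatment_months": months(dx, tx),
    }


def within_tolerance(pred, ref, tol) -> bool:
    """True only when both values exist and differ by at most `tol`."""
    return pred is not None and ref is not None and abs(pred - ref) <= tol


# Hypothetical agreement thresholds (years for age, months for the endpoints)
TOLERANCE = {"age_at_diagnosis": 0,
             "overall_survival_months": 1.0,
             "time_to_first_treatment_months": 1.0}

reference = {"birth_date": date(1958, 6, 20), "diagnosis_date": date(2021, 2, 10),
             "treatment_start": date(2021, 3, 15),
             "death_or_last_contact": date(2023, 2, 10)}
# Same patient, but the extractor picked an earlier note date as the diagnosis date
extracted = dict(reference, diagnosis_date=date(2020, 11, 10))

ref_vals, ext_vals = derive_endpoints(reference), derive_endpoints(extracted)
agreement = {k: within_tolerance(ext_vals[k], ref_vals[k], tol)
             for k, tol in TOLERANCE.items()}
print(agreement)  # age agrees; both survival-type endpoints fail
```

In this example the shifted diagnosis date leaves age unchanged but moves both Overall Survival and Time to First Treatment by about three months, which illustrates why date extraction accuracy dominates endpoint accuracy.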

The Scientist's Toolkit: Research Reagent Solutions

The following tools and data standards are essential for conducting real-world evidence research in oncology.

| Tool / Solution | Type | Primary Function in Research |
|---|---|---|
| Large Language Models (LLMs) [11] [4] | Software Model | Automate the extraction and structuring of complex clinical information (e.g., diagnosis, stage) from unstructured EHR text, enabling high-throughput data curation. |
| BioBERT [11] | Software Model | Provide a domain-specific language model pre-trained on biomedical literature, enhancing performance on tasks involving specialized medical terminology. |
| Precision-DM (Precision Oncology Core Data Model) [1] | Data Standard | Offer a standardized data model to harmonize EHR-derived real-world data, ensuring consistency and facilitating data sharing across different cancer centers and studies. |
| AACR Project GENIE [12] | Data Registry | Serve as a large, publicly available, clinically annotated genomic registry used to accelerate precision oncology discovery and validate findings across diverse patient populations. |
| Flatiron Health EHR-Derived Databases [13] | Data Resource | Provide de-identified, structured, and unstructured data derived from routine oncology care across a nationwide network of providers, supporting outcomes research and regulatory-grade evidence generation. |

Experimental Workflow and Data Validation Pathways

The following diagram illustrates the standard workflow for processing and validating real-world data from EHRs for oncology research.

Real-World Data Processing Workflow

Framework for Regulatory-Grade Real-World Evidence

Generating evidence fit for regulatory decisions requires a robust methodological framework to address biases inherent in observational data [14].

RWE Validation Framework

This framework emphasizes the importance of pre-specifying a causal question and using tools like Directed Acyclic Graphs (DAGs) to map relationships between variables [14]. Target trial emulation involves designing an observational study to mimic a hypothetical randomized controlled trial as closely as possible, which includes precisely defining eligibility criteria, treatment strategies, and outcomes [14]. Analytic methods like Inverse Probability of Treatment Weighting (IPTW) are then used to control for confounding and generate reliable evidence for regulatory and reimbursement decisions [14].
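The IPTW step can be illustrated with a deliberately small example. In this hedged sketch the propensity score is estimated nonparametrically as the treated fraction within strata of a single categorical confounder (studies with many covariates typically fit a logistic regression instead); treated patients are weighted by 1/e and untreated patients by 1/(1 - e). The toy data, the stratum labels, and the function `iptw_risk_difference` are invented so that the confounder drives both treatment and death while treatment itself has no effect.

```python
from collections import defaultdict
from fractions import Fraction
from typing import Dict, List, Tuple

# (confounder stratum, treated 0/1, died 0/1); invented toy data
Patient = Tuple[str, int, int]


def iptw_risk_difference(patients: List[Patient]) -> Tuple[Fraction, Fraction]:
    """Return (crude, IPTW-weighted) risk differences, treated minus untreated.

    Fractions keep the toy arithmetic exact; real analyses would use floats.
    """
    # 1. Propensity score e(s) = P(treated | stratum s), estimated by stratum frequency
    n_treated: Dict[str, int] = defaultdict(int)
    n_total: Dict[str, int] = defaultdict(int)
    for stratum, treated, _ in patients:
        n_treated[stratum] += treated
        n_total[stratum] += 1
    e = {s: Fraction(n_treated[s], n_total[s]) for s in n_total}

    # 2. Weights: 1/e for treated, 1/(1 - e) for untreated (requires 0 < e < 1)
    weighted_deaths = {0: Fraction(0), 1: Fraction(0)}
    weight_total = {0: Fraction(0), 1: Fraction(0)}
    deaths = {0: 0, 1: 0}
    counts = {0: 0, 1: 0}
    for stratum, treated, died in patients:
        w = 1 / e[stratum] if treated else 1 / (1 - e[stratum])
        weighted_deaths[treated] += w * died
        weight_total[treated] += w
        deaths[treated] += died
        counts[treated] += 1

    crude = Fraction(deaths[1], counts[1]) - Fraction(deaths[0], counts[0])
    iptw = weighted_deaths[1] / weight_total[1] - weighted_deaths[0] / weight_total[0]
    return crude, iptw


# Poor performance status raises both the chance of treatment and of death;
# within each stratum, treated and untreated patients have the same death risk.
patients = (
    [("poor_ps", 1, 1)] * 3 + [("poor_ps", 1, 0)] * 3    # treated: 3/6 died
    + [("poor_ps", 0, 1)] + [("poor_ps", 0, 0)]          # untreated: 1/2 died
    + [("good_ps", 1, 0)] * 2 + [("good_ps", 0, 0)] * 6  # nobody died
)
crude, iptw = iptw_risk_difference(patients)
print(f"crude RD = {float(crude):.3f}, IPTW RD = {float(iptw):.3f}")
# crude RD = 0.250, IPTW RD = 0.000
```

The crude comparison suggests treatment raises mortality by 25 percentage points, while the weighted comparison recovers the true null effect; with real data, covariate balance after weighting and the positivity assumption (0 < e < 1 in every stratum) should be checked.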

In the field of oncology research, the validation of real-time data from electronic health records (EHRs) represents a critical frontier for advancing evidence-based medicine. Real-world data (RWD) offers the potential to capture diverse patient experiences often missed by traditional randomized controlled trials (RCTs), particularly for older adults, those with comorbidities, and individuals with rare cancers [15] [16]. However, the journey from raw EHR data to trustworthy evidence is fraught with challenges related to data completeness, accuracy, and timeliness. These data gaps directly impact the reliability of insights drawn from RWD and can consequently affect drug development timelines, clinical decision-making, and ultimately, patient outcomes. This guide objectively compares the performance of different data collection and validation methodologies, providing researchers with a framework for navigating the complex landscape of oncology RWD.
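Completeness and timeliness can be quantified directly. A minimal sketch, assuming each record carries the date of the clinical event and the date it became available for analysis (`event_date`, `available_date`, and the other field names are hypothetical): completeness is the share of records with a non-missing value per field, and timeliness is the median lag in days.

```python
from datetime import date
from statistics import median
from typing import Dict, List


def completeness(records: List[dict], fields: List[str]) -> Dict[str, float]:
    """Share of records with a non-missing (not None, not empty) value per field."""
    return {
        f: sum(1 for r in records if r.get(f) not in (None, "")) / len(records)
        for f in fields
    }


def median_lag_days(records: List[dict]) -> float:
    """Median days between the clinical event and availability for analysis."""
    return median((r["available_date"] - r["event_date"]).days for r in records)


records = [
    {"stage": "IIIA", "ecog": 1, "event_date": date(2024, 1, 5),
     "available_date": date(2024, 1, 6)},
    {"stage": None, "ecog": 0, "event_date": date(2024, 1, 8),
     "available_date": date(2024, 1, 10)},
    {"stage": "IV", "ecog": None, "event_date": date(2024, 1, 9),
     "available_date": date(2024, 4, 1)},
]
print(completeness(records, ["stage", "ecog"]))  # stage 2/3, ecog 2/3
print(median_lag_days(records))                  # lags of 1, 2 and 83 days
```

Note that a value of 0 (here an ECOG score) counts as present; only `None` and empty strings are treated as missing, which matters for fields where zero is clinically meaningful.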

The following tables summarize the performance characteristics of various oncology data sources and validation systems based on recent research findings.

Table 1: Performance Metrics of Automated Oncology Data Extraction Systems

| System / Study | Data Source | Key Performance Metrics | Primary Limitations |
|---|---|---|---|
| Datagateway System [7] | EHR data from multiple hospitals (Netherlands Cancer Registry) | 100% concordance with registered NCR diagnoses; 95% accuracy in new diagnosis extraction; 97% accuracy in treatment regimen identification (MM); 100% accuracy in AML treatment identification | 3% of combination therapies misclassified; toxicity indicators showed variable accuracy (72%-100%) |
| Privacy-Preserving ML Tool [17] | Oncology EHR data across multiple institutions | Improved ML model performance by 10-15%; accelerated feedback cycles from weeks to days | Requires human expert-curated gold standard for validation; must comply with European data protection standards |
| Oncology Data Network (ODN) [18] | 124 cancer centers across 7 European countries | Near real-time analytics within 24 hours of data entry; captures treatment duration, intervals, and discontinuation | Concise initial dataset focused primarily on cancer medicine use; achieving critical mass of contributors proved challenging |

Table 2: Data Completeness Across Different Oncology Registry Types

| Registry Type | Strengths | Data Gaps & Limitations | Example Research Applications |
|---|---|---|---|
| Population-Based Registries (SEER, NPCR) [16] | Large, diverse samples representative of populations; common coding schema; details on tumor characteristics | Incomplete treatment information; lack of detailed data on health behaviors and age-related conditions (frailty, cognition) | Trends in cancer incidence and mortality; health disparities research across age, race, and geography |
| Hospital-Based Registries (National Cancer Database) [16] | Captures ~70% of incident US cancers; detailed clinical information from accredited hospitals | Findings may not be generalizable to full US population; limited information on geriatric impairments | Quality of care comparisons across institutions; treatment pattern analysis |
| Specialized Geriatric Registries (Carolina Seniors Registry) [16] | Captures geriatric assessment data for all participants; focuses on older adults in academic and community settings | Limited geographic coverage; may not represent all care settings | Understanding geriatric impairments in older cancer patients; linking functional status to treatment outcomes |

Experimental Protocols for Data Validation

Protocol 1: Validation of Automated EHR Data Extraction Systems

Objective: To validate the accuracy of an automated system (Datagateway) for extracting and harmonizing structured EHR data into a common model to support near real-time enrichment of cancer registries [7].

Methodology:

- Patient Cohort Selection: Data from patients with acute myeloid leukemia (AML), multiple myeloma, lung cancer, and breast cancer were extracted via the Datagateway system.

- Comparison Framework: Extracted data was compared against two standards: the manually curated Netherlands Cancer Registry (NCR) and original EHR source data.

- Validation Metrics:

  - Diagnostic Accuracy: Compared automatically extracted diagnoses with NCR-registered diagnoses.

  - Treatment Identification: Validated treatment regimens against manually curated records.

  - Data Element Accuracy: Assessed concordance for laboratory values and toxicity indicators.

Key Findings: The system retrieved 100% of patients recorded in the NCR and was 95% accurate in identifying new diagnoses that met NCR inclusion criteria. Treatment identification was highly accurate for the cancer types evaluated (100% for AML and 97% for multiple myeloma), with the misclassified 3% of regimens involving complex combination therapies [7].
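The diagnostic-accuracy metric reduces to a record-level comparison between pipeline output and the registry. The Python sketch below shows one way to compute it, assuming both sources share a pseudonymised patient key and that agreement is judged on exact ICD-10 codes; the `Diagnosis` record and `diagnosis_concordance` function are hypothetical and are not taken from the Datagateway study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Diagnosis:
    """One diagnosis record; fields are illustrative, not the Datagateway schema."""
    patient_id: str
    icd10: str  # e.g. "C92.0" (acute myeloblastic leukaemia)


def diagnosis_concordance(extracted: dict[str, Diagnosis],
                          registry: dict[str, Diagnosis]) -> dict:
    """Compare automatically extracted diagnoses with a registry reference.

    Both inputs are keyed by a shared (pseudonymised) patient identifier.
    """
    retrieved = [pid for pid in registry if pid in extracted]
    agree = sum(1 for pid in retrieved
                if extracted[pid].icd10 == registry[pid].icd10)
    return {
        # Share of registry patients the pipeline found at all.
        "retrieval_rate": len(retrieved) / len(registry) if registry else 0.0,
        # Among retrieved patients, share with an identical ICD-10 code.
        "code_agreement": agree / len(retrieved) if retrieved else 0.0,
        # Extracted patients absent from the registry: candidate new diagnoses
        # that still need checking against the inclusion criteria.
        "candidate_new": sorted(pid for pid in extracted if pid not in registry),
    }


# Hypothetical example data.
registry = {
    "p1": Diagnosis("p1", "C92.0"),
    "p2": Diagnosis("p2", "C90.0"),
    "p3": Diagnosis("p3", "C34.1"),
}
extracted = {
    "p1": Diagnosis("p1", "C92.0"),
    "p2": Diagnosis("p2", "C90.0"),
    "p4": Diagnosis("p4", "C50.9"),
}
print(diagnosis_concordance(extracted, registry))
```

Exact-code matching is deliberately strict; a study might instead compare at the three-character ICD-10 category, or compare ICD-O-3 topography and morphology codes as cancer registries commonly do.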

Protocol 2: Privacy-Preserving Machine Learning Error Analysis

Objective: To develop a workflow that allows clinical experts and data scientists to collaboratively identify machine learning (ML) extraction errors while maintaining privacy compliance [17].

Methodology:

- Gold Standard Establishment: Human expert-curated datasets served as the validation benchmark.

- Interactive Dashboard: Implemented a Snowflake-based interactive dashboard for reviewing model outputs against human benchmarks.

- Error Categorization: Clinical experts and data scientists jointly reviewed discrepancies to categorize errors and inform model improvements.

- Iterative Refinement: Established feedback loops to continuously improve ML model performance.

Key Findings: This approach improved ML model performance by 10-15% and accelerated feedback cycles from weeks to days, ensuring that data extraction remains both precise and compliant with European data protection standards [17].
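The error-categorization step can be illustrated with a small Python sketch that compares model output with an expert-curated gold standard field by field and sorts each difference into a category for reviewers. The three error categories (missed, spurious, value mismatch) and the `categorize_errors` function are assumptions for illustration; the sketch does not model the Snowflake-based dashboard itself.

```python
from collections import Counter

# Keys are (document_id, field) pairs; values are normalised extracted strings.
Key = tuple[str, str]


def categorize_errors(gold: dict[Key, str], predicted: dict[Key, str]):
    """Return per-category counts plus the discrepancies to route for review."""
    counts: Counter = Counter()
    discrepancies = []
    for key in sorted(gold.keys() | predicted.keys()):
        g, p = gold.get(key), predicted.get(key)
        if g is None:
            category = "spurious"        # model output with no gold counterpart
        elif p is None:
            category = "missed"          # gold value the model did not extract
        elif g != p:
            category = "value_mismatch"  # both present but disagree
        else:
            category = "correct"
        counts[category] += 1
        if category != "correct":
            discrepancies.append((category, key, g, p))
    return counts, discrepancies


# Hypothetical gold standard and model output for three notes.
gold = {("n1", "stage"): "IIIA", ("n1", "histology"): "adenocarcinoma",
        ("n2", "stage"): "IV"}
pred = {("n1", "stage"): "IIIA", ("n1", "histology"): "squamous cell carcinoma",
        ("n3", "stage"): "II"}
counts, discrepancies = categorize_errors(gold, pred)
print(counts)
for item in discrepancies:
    print(item)
```

Keeping the discrepancy list alongside the counts is what makes the loop iterative: the counts track progress between model versions, while the individual discrepancies are what experts actually review.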

Visualization of Data Validation Workflows

Oncology RWD Validation Workflow: This diagram illustrates the sequential process of transforming raw EHR data into validated real-world data through common data models, validation against gold standards, and iterative error analysis loops.

Multinational RWD Harmonization: This visualization shows the workflow for creating globally applicable oncology datasets through disease-specific common data models, robust curation processes, and secure trusted research environments.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Resources for Oncology RWD Research

| Resource / Tool | Type | Primary Function | Access Considerations |
|---|---|---|---|
| Common Data Models [7] [18] | Data Infrastructure | Harmonizes data from diverse EHR systems into standardized formats for aggregation and comparison | Requires mapping local data elements to common standards; must be maintained as clinical practices evolve |
| Core Regimen Reference Library (CRRL) [18] | Reference Database | Codifies treatment regimens used in clinical practice and maps them against established guidelines | Essential for comparing treatment patterns across institutions and countries; requires clinical expertise to maintain |
| Privacy-Preserving Error Analysis Dashboard [17] | Analytical Tool | Enables collaborative identification of ML extraction errors against human expert-curated gold standards | Must comply with regional data protection regulations (e.g., GDPR); requires specialized technical implementation |
| USP Medicine Supply Map [19] | Supply Chain Analytics | Uses predictive analytics to identify vulnerability factors in drug supply chains and calculate shortage risk scores | Commercial tool requiring subscription; provides crucial data for understanding drug availability impacts on treatment |
| Flatiron Health Multinational Datasets [20] | Curated RWD Source | Provides structured, curated oncology EHR-derived data across multiple countries using disease-specific common data models | Access via trusted research environment; enables global comparative studies while maintaining data privacy |

The validation of real-time oncology data from EHRs remains a complex but essential endeavor for advancing cancer research and treatment. Automated data extraction systems show promising accuracy, particularly for diagnosis identification and monotherapy regimens, but challenges persist with complex combination therapies and toxicity documentation. The consequences of incomplete and delayed information are significant, potentially leading to suboptimal treatment decisions, inefficient drug development, and inadequate understanding of real-world therapeutic effectiveness.

Researchers must carefully select data sources and validation methodologies based on their specific use cases, recognizing that different registry types and data systems exhibit distinct strength and limitation profiles. The emerging toolkit of common data models, privacy-preserving error analysis frameworks, and multinational data harmonization approaches offers promising pathways for addressing critical data gaps. As the field evolves, continued refinement of these methodologies and technologies will be essential for generating reliable real-world evidence that can truly inform clinical practice and improve outcomes for cancer patients.

Building the Pipeline: Methodologies for Real-Time Data Extraction and Harmonization

The modern landscape of oncology research is increasingly dependent on the rapid and reliable use of real-world data from Electronic Health Records (EHRs). However, the clinical utility of this data is often hampered by significant challenges, including fragmentation across proprietary systems, inconsistent data structures, and burdensome manual extraction processes that are both time-consuming and labor-intensive [7] [2]. These limitations create critical bottlenecks for research and delay insights into cancer treatment efficacy and safety. Common Data Models (CDMs) have emerged as a foundational architectural solution to these problems, providing a standardized framework that harmonizes disparate data sources into a consistent, analyzable format. By transforming heterogeneous EHR data into a unified structure, CDMs enable the automated, real-time data pipelines essential for a responsive Learning Health System in oncology [7] [21]. This guide objectively evaluates the role of CDMs, with a specific focus on validating their performance in automating the extraction of real-time oncology data for research and drug development.

CDM Performance Comparison: Validating Automated Oncology Data Extraction

To assess the practical value of Common Data Models in a real-world oncology context, we examine performance data from a validation study of an automated data extraction system that leveraged a CDM to harmonize EHR data across multiple hospitals for the Netherlands Cancer Registry (NCR) [7]. The study provides critical quantitative metrics on the accuracy and feasibility of using a CDM for real-time data enrichment in a population-based registry.

Diagnostic and Treatment Data Accuracy

The validation demonstrated a high level of accuracy across key oncology data domains, confirming the reliability of the CDM-based automated system.

Table 1: Accuracy of CDM-Based Data Extraction for Oncology Diagnoses and Treatment

| Data Category | Specific Metric | Performance | Context / Sample Size |
|---|---|---|---|
| Diagnosis Validation | Concordance with registered NCR diagnoses | 100% | Compared to NCR gold standard [7] |
| | Accuracy in identifying new diagnoses per NCR criteria | 95% | 1,219 of 1,287 patient records [7] |
| Treatment Validation | Acute Myeloid Leukemia (AML) treatment regimens | 100% | 254 patients [7] |
| | Multiple Myeloma (MM) treatment regimens | 97% | 198 regimens from 117 patients [7] |
| | Combination therapy misclassification | 3% | Small subset of MM regimens [7] |

Clinical and Laboratory Data Accuracy

The system also captured detailed clinical data, which is crucial for comprehensive research and safety monitoring, although accuracy varied by data type.

Table 2: Accuracy of CDM-Based Clinical and Laboratory Data Extraction

| Data Type | Performance | Notes |
|---|---|---|
| Laboratory Values | Virtually complete match [7] | High fidelity in transferring structured numeric data. |
| Toxicity Indicators | 72% - 100% accuracy [7] | Range indicates variation in capture accuracy for different types of toxicities. |

Experimental Protocols: Methodologies for CDM Validation

The performance data cited in the previous section were derived from rigorous validation studies. The following protocols detail the methodologies used to generate that evidence, providing a blueprint for researchers seeking to validate similar CDM-based systems.

Protocol 1: Prospective Validation of New Cancer Diagnoses

This protocol was designed to test the system's ability to accurately identify and include new cancer cases in real-time.

- Objective: To determine the accuracy of the CDM-based automated system in identifying new patient diagnoses that meet the registry's inclusion criteria, compared to manual registration processes [7].

- Data Source: Structured data extracted directly from hospital EHRs and harmonized via the CDM [7].

- Patient Cohort: 1,287 patient records from three hospitals, encompassing patients with Acute Myeloid Leukemia (AML) and lung cancer [7].

- Validation Method: Each patient record identified by the automated system was checked against the NCR inclusion criteria to confirm they represented a valid, new cancer diagnosis [7].

- Output Metric: The percentage of automatically identified patients who correctly met all inclusion criteria (95%) [7].
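The 95% figure is a point estimate from 1,219 of 1,287 records. A confidence interval conveys how precise that estimate is; the Python sketch below uses the Wilson score interval, a common choice for binomial proportions. The choice of interval is an assumption of this example, not a method reported in [7].

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(successes: int, n: int,
                    confidence: float = 0.95) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion."""
    if n <= 0:
        raise ValueError("n must be positive")
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


# Counts reported for the new-diagnosis check: 1,219 of 1,287 records.
low, high = wilson_interval(1219, 1287)
print(f"point estimate {1219 / 1287:.1%}, 95% CI {low:.1%} to {high:.1%}")
```

Unlike the simple normal-approximation interval, the Wilson interval stays within [0, 1] and behaves sensibly for proportions close to 100%, which matters for metrics such as the AML regimen accuracy.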

Protocol 2: Retrospective Validation of Treatment Regimens

This protocol assessed the system's precision in capturing complex cancer treatment information.

- Objective: To validate the correctness of treatment regimen identification by the CDM system against previously recorded NCR data and the original EHR source data [7].

- Data Source: Harmonized treatment data from the CDM, compared to the gold-standard NCR records and source EHRs [7].

- Patient Cohort: 254 AML patients and 117 Multiple Myeloma (MM) patients, encompassing a total of 198 distinct treatment regimens for MM [7].

- Validation Method: A detailed, record-by-record comparison was conducted. For example, each specific drug combination (e.g., D-VRd, D-VTd) identified for an MM patient was verified against the actual prescribed therapy in the medical record [7].

- Output Metric: The percentage of treatment regimens that were correctly classified (100% for AML, 97% for MM) [7].
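The record-by-record regimen check can be sketched as deriving a regimen label from the set of administered agents and comparing it with the manually recorded label. The synonym table, the three-regimen library and the exact-set matching rule below are hypothetical simplifications (D-VRd is daratumumab, bortezomib, lenalidomide and dexamethasone; D-VTd substitutes thalidomide for lenalidomide). A curated regimen library would also need to handle dosing, cycle timing and drugs added or stopped mid-course.

```python
# Hypothetical synonym table mapping brand names and abbreviations to generics.
SYNONYMS = {
    "velcade": "bortezomib",
    "revlimid": "lenalidomide",
    "thalomid": "thalidomide",
    "darzalex": "daratumumab",
    "dex": "dexamethasone",
}

# Hypothetical mini regimen library; a real one would be curated by clinicians.
REGIMENS = {
    "D-VRd": frozenset({"daratumumab", "bortezomib", "lenalidomide", "dexamethasone"}),
    "D-VTd": frozenset({"daratumumab", "bortezomib", "thalidomide", "dexamethasone"}),
    "VRd": frozenset({"bortezomib", "lenalidomide", "dexamethasone"}),
}


def normalize(drug: str) -> str:
    """Lower-case a drug name and map known synonyms to the generic name."""
    name = drug.strip().lower()
    return SYNONYMS.get(name, name)


def classify_regimen(drugs: list[str]) -> str:
    """Exact-set match of administered agents against the regimen library."""
    agents = frozenset(normalize(d) for d in drugs)
    for name, components in REGIMENS.items():
        if agents == components:
            return name
    return "unclassified"


def regimen_accuracy(records: list[tuple[list[str], str]]) -> float:
    """Share of records whose derived regimen equals the manually recorded one."""
    if not records:
        return 0.0
    hits = sum(classify_regimen(drugs) == recorded for drugs, recorded in records)
    return hits / len(records)


print(classify_regimen(["Velcade", "Revlimid", "dex", "Darzalex"]))
```

Exact-set matching shows why combination regimens are the fragile case: a single missing, extra or unmapped agent changes the derived label, whereas a monotherapy has only one component to get right.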

System Architecture: Workflow of a CDM for Oncology Data

The following diagram illustrates the logical flow and key components of an automated system that uses a Common Data Model to process oncology data from source EHRs to research-ready outputs.

Diagram 1: Logical workflow of a CDM-based automated data pipeline for oncology research, from heterogeneous EHR sources to research consumption. Based on a scalable data architecture framework [22].
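The core harmonization step in such a pipeline, mapping site-specific extracts into one common record type, can be sketched in Python. The sketch assumes two hypothetical hospital exports that differ in field names, local lab codes, decimal separators and date formats; the site names, field names and code tables are invented for illustration and do not reflect the Datagateway or OMOP schemas.

```python
from dataclasses import dataclass
from datetime import date, datetime


@dataclass(frozen=True)
class LabObservation:
    """Hypothetical common-model record for one laboratory result."""
    patient_id: str
    analyte: str
    value: float
    unit: str
    observed_on: date


# Hypothetical local-code mappings maintained per source site.
SITE_A_CODES = {"HB": "hemoglobin", "LEUK": "leukocytes"}
SITE_B_CODES = {"hemoglobin": "hemoglobin", "wbc": "leukocytes"}


def from_site_a(row: dict) -> LabObservation:
    # Site A uses local codes, a decimal comma and DD-MM-YYYY dates.
    return LabObservation(
        patient_id=f"A:{row['pat_nr']}",
        analyte=SITE_A_CODES[row["lab_code"]],
        value=float(row["value"].replace(",", ".")),
        unit=row["unit"],
        observed_on=datetime.strptime(row["date"], "%d-%m-%Y").date(),
    )


def from_site_b(row: dict) -> LabObservation:
    # Site B uses free-text test names, numeric results and ISO dates.
    return LabObservation(
        patient_id=f"B:{row['patient_id']}",
        analyte=SITE_B_CODES[row["test"].lower()],
        value=float(row["result"]),
        unit=row["unit"],
        observed_on=date.fromisoformat(row["collected"]),
    )


ADAPTERS = {"site_a": from_site_a, "site_b": from_site_b}


def harmonize(batches: dict[str, list[dict]]) -> list[LabObservation]:
    """Route each site's rows through its adapter into the common model."""
    out: list[LabObservation] = []
    for site, rows in batches.items():
        out.extend(ADAPTERS[site](row) for row in rows)
    return out


observations = harmonize({
    "site_a": [{"pat_nr": "123", "lab_code": "HB", "value": "7,8",
                "unit": "mmol/L", "date": "05-01-2024"}],
    "site_b": [{"patient_id": "9", "test": "Hemoglobin", "result": 12.6,
                "unit": "g/dL", "collected": "2024-01-05"}],
})
for obs in observations:
    print(obs)
```

Units are carried through unchanged here; a fuller pipeline would also convert them to one standard unit per analyte, which is part of why mappings "must be maintained as clinical practices evolve".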

Successful implementation of a CDM for oncology research relies on a combination of specific data standards, technical tools, and governance frameworks.

Table 3: Key Resources for Implementing a CDM in Oncology Research

| Tool / Standard | Category | Primary Function in CDM Implementation |
|---|---|---|
| OMOP Common Data Model [21] | Data Standard | Provides an open-source, standardized data model and structure for observational health data, enabling systematic analytics. |
| OHDSI Standardized Vocabularies [21] | Terminology | Allows organization and standardization of medical terms (e.g., medications, conditions) across clinical domains for consistent phenotype definition. |
| Dataverse / Microsoft CDM [23] | Platform & Standard | Offers a standardized, cloud-based schema and storage for business data, promoting interoperability between applications like Dynamics 365 and Power BI. |
| Data Catalogs (e.g., Alation) [24] | Governance Tool | Centralizes documentation of the CDM, tracks data lineage, manages metadata, and ensures governance and discoverability of standardized entities. |
| ETL/ELT Tools (e.g., dbt, Talend) [22] [24] | Technical Tool | Executes the transformation and loading of source data into the CDM structure, often within modular, version-controlled pipelines. |
| Unity Catalog (on Databricks) [22] | Governance Tool | Provides centralized governance, lineage tracking, and access control for data within a lakehouse architecture, securing the CDM. |

The empirical validation of a Common Data Model for automating oncology data extraction demonstrates that this architectural approach is feasible and, for diagnoses and treatment regimens, highly reliable. With accuracy of 95% to 100% for identifying diagnoses and treatment regimens, and a wider range of 72% to 100% for toxicity indicators, CDMs provide a robust foundation for real-time data integration in cancer research [7]. By overcoming the inherent fragmentation of EHR systems, CDMs enable scalable, high-quality data pipelines that are essential for accelerating real-world evidence generation, supporting drug development, and ultimately advancing patient care in a Learning Health System framework.

Harnessing AI and Natural Language Processing (NLP) for Unstructured Data

In modern oncology, the pursuit of precision medicine generates massive volumes of patient data, most of which exists in unstructured formats within electronic health records (EHRs). Critical information regarding cancer diagnosis, histology, staging, treatment responses, and patient-reported outcomes often remains buried in clinical narratives, pathology reports, and physician notes rather than structured, analyzable fields. This unstructured data represents both a formidable challenge and a tremendous opportunity for cancer research and drug development. The U.S. healthcare system alone has exceeded 2000 exabytes of data, much of which is unstructured clinical information requiring sophisticated processing techniques [25]. For researchers and pharmaceutical professionals, unlocking this information is crucial for generating robust real-world evidence, streamlining clinical trials, and advancing personalized treatment strategies.

Artificial intelligence, particularly natural language processing, has emerged as a transformative solution to this data accessibility problem. NLP technologies can automatically extract, structure, and analyze clinical information from unstructured text, converting qualitative narratives into quantitative, research-ready data [4]. This capability is especially valuable in oncology, where the heterogeneity of cancer subtypes, treatment protocols, and patient outcomes demands sophisticated data integration across multiple sources. This guide provides a comprehensive comparison of AI and NLP methodologies for oncology data extraction, evaluates their performance against traditional approaches, and details experimental protocols for validating these technologies in real-world research settings, with a specific focus on applications for real-time oncology data validation from EHRs.

Comparative Analysis of NLP Approaches in Oncology

Evolution of NLP Technologies: From Rules to Deep Learning

The field of natural language processing has undergone significant evolution, transitioning through three distinct technological eras that build upon each other in complexity and capability. Each approach offers different advantages for oncology applications, from extracting simple diagnostic information to understanding complex clinical contexts.

Figure 1: The evolution of NLP technologies shows a progression from rigid rule-based systems to contextually aware large language models, with each generation building upon the previous to handle increasingly complex clinical language tasks.

Performance Comparison: Traditional NLP vs. Modern LLMs

Multiple studies have quantitatively evaluated the performance of different NLP approaches for extracting oncology concepts from unstructured clinical text. The table below summarizes key performance metrics across various extraction tasks and cancer types, demonstrating the comparative advantages of modern approaches.

Table 1: Performance comparison of NLP approaches on oncology data extraction tasks

| NLP Approach | Cancer Types Evaluated | Key Data Elements Extracted | Performance Metrics | Reference |
|---|---|---|---|---|
| Rule-Based Systems | Breast, Colorectal, Prostate | Symptoms, Urinary function, Pain intensity | Precision: 0.72-0.89, Recall: 0.68-0.85 [26] | Systematic Review (2024) |
| Traditional Machine Learning | Multiple (26 solid tumors, 14 hematologic) | Diagnosis, Histology, Staging | F1 Score: 0.78-0.82 [4] | ASCO 2025 Validation Study |
| Large Language Models (LLMs) | Multiple (26 solid tumors, 14 hematologic) | Cancer diagnosis, Histology, Grade, TNM staging | F1 Score: >0.85 [4] | ASCO 2025 Validation Study |
| Deep Convolutional Neural Networks | Gastric cancer (early detection) | Endoscopic image classification | Sensitivity: 0.94, Specificity: 0.91, AUC: 0.98 [27] | Meta-analysis (2025) |

The performance advantage of large language models is particularly evident in complex extraction tasks such as TNM staging, where contextual understanding is essential. Modern LLMs like BERT and GPT variants achieve F1 scores exceeding 0.85 for extracting key clinical elements across 26 solid tumors and 14 hematologic malignancies, outperforming traditional machine learning approaches that require extensive feature engineering and task-specific training [4]. This represents a significant advancement for oncology research, where accurate, automated extraction of structured data from clinical narratives enables more comprehensive patient cohort identification for clinical trials and more robust real-world evidence generation.

Domain-Specific Performance Variations

While modern approaches generally outperform traditional methods, their effectiveness varies across oncology domains, documentation types, and data modalities. Imaging offers a useful point of comparison even though it is not an NLP task: in early gastric cancer detection from endoscopic images, deep convolutional neural networks (DCNNs) demonstrate high sensitivity (0.94) and specificity (0.91), outperforming both traditional computer vision approaches and clinician assessment in controlled studies [27]. This advantage is particularly pronounced in dynamic video analysis, where DCNNs achieve an AUC of 0.98 compared with clinician AUCs of 0.85-0.90, highlighting their potential for real-time clinical decision support [27].

However, the performance of any NLP system is highly dependent on the quality and representativeness of its training data. Models trained on specific cancer types or institutional documentation styles typically perform better within those domains than general-purpose models applied to unfamiliar contexts. This underscores the importance of domain-specific tuning and validation when implementing NLP solutions for oncology research applications [26] [4].

Experimental Protocols for NLP Validation in Oncology

Methodological Framework for Validation Studies

Robust validation of NLP systems for oncology applications requires carefully designed experimental protocols that assess both technical performance and clinical utility. The following workflow outlines a comprehensive validation methodology adapted from recent high-quality studies in the field.

Figure 2: Comprehensive validation workflow for NLP systems in oncology, progressing from data collection through clinical utility assessment, with specific methodological considerations at each stage.

Key Performance Metrics and Evaluation Criteria

Rigorous evaluation of NLP systems requires multiple performance dimensions assessed through standardized metrics. The oncology research context demands particular attention to clinical relevance and potential impact on research workflows.

Table 2: Standard evaluation metrics for NLP systems in oncology applications

| Performance Dimension | Key Metrics | Target Benchmarks | Evaluation Method |
|---|---|---|---|
| Concept Extraction Accuracy | Precision, Recall, F1-score | F1 > 0.85 for key concepts [4] | Comparison to gold standard manual abstraction |
| Clinical Validity | Sensitivity, Specificity, AUC | Sensitivity: 0.90-0.94, Specificity: 0.87-0.95 [27] | Cross-reference with clinical outcomes |
| Generalizability | Performance variation across cancer types, institutions | F1 ≥ 0.85 across all cancer types [4] | Cross-validation, external validation |
| Clinical Utility | Time savings, clinician accuracy improvement | Improved clinician performance with AI assistance [28] | Pre-post implementation studies |
| Calibration | Calibration plots, Brier score | Ratio between predicted/observed outcomes [28] | Graphical assessment of prediction reliability |
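Several of the metrics in this table can be computed directly. The Python sketch below implements precision, recall and F1 over extracted (document, concept) pairs, sensitivity and specificity for binary classifications, and two calibration summaries: the Brier score and the ratio of predicted to observed events. Representing extractions as exact sets of pairs is an assumption of this example; evaluations can use more lenient matching rules, such as partial text-span overlap.

```python
def extraction_prf(gold: set, predicted: set) -> tuple[float, float, float]:
    """Precision, recall and F1 over (document_id, concept) pairs."""
    tp = len(gold & predicted)
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def sensitivity_specificity(y_true: list[int], y_pred: list[int]) -> tuple[float, float]:
    """Sensitivity and specificity from paired binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)
    fn = sum(1 for t, p in pairs if t and not p)
    tn = sum(1 for t, p in pairs if not t and not p)
    fp = sum(1 for t, p in pairs if not t and p)
    sens = tp / (tp + fn) if tp + fn else float("nan")
    spec = tn / (tn + fp) if tn + fp else float("nan")
    return sens, spec


def brier_score(y_true: list[int], probs: list[float]) -> float:
    """Mean squared difference between predicted probability and outcome."""
    return sum((p - t) ** 2 for t, p in zip(y_true, probs)) / len(y_true)


def expected_observed_ratio(y_true: list[int], probs: list[float]) -> float:
    """Sum of predicted probabilities over observed events.

    Values near 1.0 indicate good calibration-in-the-large.
    """
    return sum(probs) / sum(y_true)


# Hypothetical gold-standard and predicted concepts for two notes.
gold = {("n1", "stage:IIIA"), ("n1", "histology:adenocarcinoma"), ("n2", "stage:IV")}
pred = {("n1", "stage:IIIA"), ("n2", "stage:IV"), ("n2", "grade:2")}
print(extraction_prf(gold, pred))
```

Pooling all pairs before computing precision and recall gives micro-averaged scores; reporting per-concept or per-cancer-type scores instead is what exposes the generalizability gaps listed in the table.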

Beyond these technical metrics, successful validation should include assessment of clinical utility involving end-users. Recent studies have engaged 499 clinicians using 12 different assessment tools to demonstrate that AI assistance improves clinician performance in tasks such as trial eligibility screening and documentation accuracy [28]. This real-world validation is essential for establishing the practical value of NLP systems in oncology research and clinical contexts.

Research Reagent Solutions: Essential Tools for Oncology NLP

Implementing successful NLP projects in oncology requires both technical infrastructure and domain-specific resources. The following table details essential components of the research toolkit for developing and validating NLP systems for oncology data extraction.

Table 3: Essential research reagents and tools for oncology NLP projects

| Tool Category | Specific Solutions | Function | Application Context |
|---|---|---|---|
| Data Management Platforms | iCore [29], OSIRIS RWD [30], OMOP CDM [30] | Harmonizes diverse datasets (genomics, proteomics, imaging) and ensures regulatory compliance | Multi-institutional research collaborations, regulatory-grade RWE generation |
| NLP Frameworks & Models | BERT [25], GPT variants [25], Transformer models [26] | Provides pre-trained language understanding capabilities for clinical text | Rapid development of information extraction pipelines |
| Standardized Data Models | OMOP Common Data Model [30], OSIRIS [30], FHIR [30] | Enables standardized data representation and cross-system interoperability | Health system integrations, regulatory submissions |
| Annotation Tools | Clinical specialist manual abstraction [4], Structured annotation guidelines | Creates gold standard datasets for model training and validation | Supervised learning projects, model validation |
| Validation Frameworks | QUADAS-2 [27], Clinical utility assessments [28] | Assesses risk of bias and clinical applicability of NLP systems | Peer-reviewed research, regulatory evaluation |

These research reagents collectively enable the end-to-end development, validation, and deployment of NLP systems for oncology research. Platforms like iCore are particularly valuable for addressing the "data dilemma" in AI development by ensuring proper harmonization of diverse datasets from genomics, proteomics, and imaging sources, which is essential for building trustworthy AI models [29]. Similarly, standardized data models like OSIRIS and OMOP facilitate the structured representation of extracted information, enabling cross-system interoperability and collaborative research initiatives across multiple cancer centers [30].

The integration of AI and NLP technologies into oncology research represents a paradigm shift in how we extract knowledge from unstructured clinical data. The quantitative evidence demonstrates that modern approaches, particularly large language models, achieve clinically acceptable performance levels for automating the extraction of critical oncology concepts from EHRs. These capabilities directly address fundamental challenges in real-world oncology data validation by enabling more efficient, comprehensive, and accurate structuring of patient information for research purposes.

Looking forward, several emerging trends will shape the next generation of oncology NLP applications. The integration of multi-omics data—drawing from genomics, transcriptomics, proteomics, and metabolomics—will provide a more comprehensive picture of cancer biology that extends beyond singular dysregulated genes or signaling pathways [29]. Additionally, the growing regulatory acceptance of AI-defined biomarkers and the intentional incorporation of AI tools into clinical trial designs promise to accelerate the translation of these technologies into practical research applications [29]. However, success will ultimately depend on how well these AI tools integrate into clinical and operational workflows, not just the sophistication of the underlying algorithms [29].

For researchers, scientists, and drug development professionals, these advancements offer unprecedented opportunities to leverage real-world data at scale. By implementing robust validation methodologies and selecting appropriate NLP approaches for specific research questions, the oncology research community can harness the full potential of unstructured data to accelerate drug development, personalize treatment approaches, and improve outcomes for cancer patients.

The shift towards data-driven oncology research, accelerated by initiatives like the Cancer Moonshot, has made the curation of electronic health record (EHR) data a critical scientific competency [31] [32]. Real-world evidence (RWE) generated from EHRs is now integral to understanding disease progression, supporting drug development, and optimizing patient care [31]. However, EHR data exists in two fundamentally different forms—structured and unstructured—each requiring distinct curation methodologies. This guide objectively compares techniques for handling these data types, focusing on their validation within real-time oncology research contexts. For researchers and drug development professionals, selecting the appropriate curation strategy is paramount for ensuring data quality, relevance, and reliability for specific use cases, from clinical trial design to post-market surveillance.

Structured data refers to highly organized information with predefined formats, typically stored in tabular forms like relational databases. In oncology EHRs, this includes demographic information, laboratory test results (e.g., numerical values from blood tests), vital signs, medication prescriptions, and standardized diagnosis codes like ICD-10 [33] [34]. Unstructured data, which constitutes an estimated 80-90% of all digital information, lacks a pre-defined model and includes clinical notes, pathology reports, radiology interpretations, and discharge summaries [33]. A third category, semi-structured data (e.g., JSON, XML formats), offers some organizational tags without rigid schema requirements [35].

The core distinctions between structured and unstructured data impact every aspect of their management, from storage to analysis. The table below summarizes these key differences.

Table 1: Core Characteristics of Data Types in Oncology Research

| Aspect | Structured Data | Unstructured Data |
|---|---|---|
| Schema & Format | Predefined, tabular format (rows/columns); schema-dependent [33] [35] | Schemaless; stored in native formats (text, PDF, images) [33] [35] |
| Oncology Examples | Patient demographics, ICD-10 codes, lab values, medication orders, TNM staging [31] [34] | Pathology reports, clinical narratives, radiology notes, surgical summaries [31] [34] |
| Storage Solutions | Relational databases (SQL); Data Warehouses [33] [35] | Data lakes, NoSQL databases; Cloud object storage [33] [35] |
| Primary Analysis Tools | SQL, traditional BI and statistical tools [33] [35] | NLP, Machine Learning, AI-based indexing [36] [37] |
| Inherent Nature | Quantitative, easily countable [33] | Qualitative, rich in context and nuance [33] |

Data Provenance and Workflow Integration

In clinical settings, structured data is often generated through discrete entry fields in EHRs, such as dropdown menus for Eastern Cooperative Oncology Group (ECOG) performance status or checkboxes for symptoms [31]. This data is extracted from various hospital systems and harmonized into computable standard terminologies. Unstructured data, conversely, originates from free-text entries composed by clinicians. This includes the rich contextual details found in clinical narratives and tumor board notes, which are crucial for understanding patient-specific factors and complex disease presentations [31] [34].

Curation Techniques and Methodologies

The transformation of raw EHR data into a research-ready resource requires sophisticated, fit-for-purpose curation pipelines. The following workflow diagram illustrates the parallel processes for structured and unstructured data, culminating in a unified dataset for evidence generation.

Diagram 1: Oncology Data Curation Workflow

Structured Data Curation

The curation of structured data focuses on harmonization and validation. Data from disparate EHR systems and formats are mapped to common data models and interoperability standards, such as Fast Healthcare Interoperability Resources (FHIR), and to standardized terminologies (e.g., SNOMED CT, LOINC) [36] [34]. The process involves:

- Extract, Transform, Load (ETL): Automated processes extract data from source systems, apply business rules for transformation, and load it into a target database or warehouse [33].

- Data Quality Checks: Implementing verification for conformance (data matches expected type), consistency (values are logically consistent across related fields), and plausibility (values fall within expected ranges) [31].

- Validation Techniques: Accuracy is assessed by comparing curated variables to internal or external reference standards where available, or through indirect benchmarking against known population distributions [31].
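The conformance, consistency and plausibility checks can be expressed as simple rules over a curated record. In the Python sketch below, the field names, expected types and plausibility ranges (ECOG 0 to 5, age 0 to 120) are illustrative assumptions; the consistency rule flags treatment recorded as starting before diagnosis.

```python
from datetime import date

# Illustrative expectations; a real study would define these per variable.
EXPECTED_TYPES = {
    "patient_id": str,
    "ecog": int,
    "age_at_diagnosis": int,
    "diagnosis_date": date,
    "treatment_start": date,
}
PLAUSIBLE_RANGES = {"ecog": (0, 5), "age_at_diagnosis": (0, 120)}


def _is_int(value) -> bool:
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(value, int) and not isinstance(value, bool)


def check_record(rec: dict) -> list[str]:
    """Return a list of data quality issues found in one record."""
    issues = []
    # Conformance: values that are present must have the expected type.
    for field, expected in EXPECTED_TYPES.items():
        value = rec.get(field)
        if value is None:
            continue  # missingness is a completeness question, handled elsewhere
        ok = _is_int(value) if expected is int else isinstance(value, expected)
        if not ok:
            issues.append(f"conformance:{field}")
    # Consistency: flag treatment recorded as starting before diagnosis.
    dx, tx = rec.get("diagnosis_date"), rec.get("treatment_start")
    if isinstance(dx, date) and isinstance(tx, date) and tx < dx:
        issues.append("consistency:treatment_before_diagnosis")
    # Plausibility: conformant integer values must fall in expected ranges.
    for field, (low, high) in PLAUSIBLE_RANGES.items():
        value = rec.get(field)
        if _is_int(value) and not low <= value <= high:
            issues.append(f"plausibility:{field}")
    return issues


record = {"patient_id": "p2", "ecog": "2", "age_at_diagnosis": 150,
          "diagnosis_date": date(2024, 3, 1), "treatment_start": date(2024, 2, 1)}
print(check_record(record))
```

Returning issue labels rather than rejecting records keeps the checks auditable: flagged records can be routed to manual review, and issue rates can be reported per variable as part of a fitness-for-use assessment.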

Unstructured Data Curation

Curation of unstructured data is the process of converting clinical text into structured, analyzable fields. Methodologies exist on a spectrum from manual to fully automated.

- Manual Abstraction: Traditionally the gold standard, this involves trained abstractors (e.g., clinical research coordinators) reviewing clinical notes to extract and code specific variables. While highly accurate for complex concepts, it is resource-intensive and difficult to scale [31].

- Rule-Based Natural Language Processing (NLP): This approach uses custom-written rules or dictionaries to identify and extract specific concepts from text (e.g., flagging a note that contains "tumor size" followed by a measurement).

- Machine Learning (ML)/Deep Learning Models: Supervised ML models can be trained on pre-annotated clinical text to identify and extract complex clinical concepts, such as disease progression or recurrence status [36] [31]. These models can capture context and nuance better than rigid rules.

- Large Language Model (LLM) Processing: Recent studies demonstrate the use of LLMs like Claude 3.5 Sonnet to automate the structuring of clinical data from deidentified EHR extracts [37]. A typical protocol involves multi-phase prompt refinement, in which prompts are iteratively tested and revised on sample data until the LLM accurately extracts and structures factors such as tumor characteristics, nodal status, and biomarker information from complex clinical narratives [37].
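The rule-based example above ("tumor size" followed by a measurement) can be written as a single regular expression. This Python sketch is hypothetical: it handles one or two dimensions in mm or cm and returns the largest dimension in millimetres, and it ignores negation, hedged statements and note sections (such as prior history) that production rule sets must handle.

```python
import re

# Hypothetical rule: trigger phrase, a short non-numeric gap, then 1-2 dimensions.
TUMOR_SIZE = re.compile(
    r"\btumou?r\s+size\b[^0-9]{0,20}?"   # "tumor size" / "tumour size" plus a short gap
    r"(\d+(?:\.\d+)?)"                    # first dimension
    r"(?:\s*[x×]\s*(\d+(?:\.\d+)?))?"     # optional second dimension
    r"\s*(mm|cm)\b",                      # unit
    re.IGNORECASE,
)


def extract_tumor_size_mm(note: str) -> list[float]:
    """Return the largest reported dimension, in mm, for each match in a note."""
    sizes = []
    for m in TUMOR_SIZE.finditer(note):
        dims = [float(d) for d in m.group(1, 2) if d is not None]
        factor = 10.0 if m.group(3).lower() == "cm" else 1.0
        sizes.append(max(dims) * factor)
    return sizes


print(extract_tumor_size_mm("Pathology: tumour size 2.3 x 1.8 cm, margins clear."))
```

The brittleness is visible in the pattern itself: "size of the tumor was 2 cm" or a size reported in a separate sentence would be missed, which is the gap that ML and LLM approaches aim to close.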

Performance Comparison: Experimental Data and Validation

Evaluating the fitness of curated data requires assessing its performance across multiple dimensions, including prediction accuracy, operational efficiency, and alignment with established data quality frameworks.

Predictive Model Performance

A 2023 study directly compared the performance of machine learning (ML) models in predicting 5-year breast cancer recurrence using different data sources [36]. An eXtreme Gradient Boosting (XGB) model was trained on three distinct datasets derived from the same patient cohort.

Table 2: ML Performance for Breast Cancer Recurrence Prediction (5-Year)

| Dataset Type | Precision | Recall | F1-Score | AUROC |
|---|---|---|---|---|
| Structured Data Only | 0.900 | 0.907 | 0.897 | 0.807 [36] |
| Unstructured Data Only | Lower than structured | Lower than structured | Lower than structured | Lower than structured [36] |
| Combined Dataset | Lowest of the three | Lowest of the three | Lowest of the three | Lowest of the three [36] |

This study found that structured data alone yielded the best predictive performance [36]. The authors noted that an NLP-based approach on unstructured data offered comparable results with potentially less manual mapping effort, suggesting context-dependent utility [36].
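For readers less familiar with the metrics in Table 2, the following Python sketch shows how precision, recall, F1, and AUROC are defined for a binary recurrence label. It computes positive-class metrics only and uses the pairwise (rank) formulation of AUROC; published studies may use class-weighted averaging, so this is an illustration of the definitions, not the study's code. The labels and scores in the demo are made up.

```python
from typing import Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> dict[str, float]:
    """Precision, recall and F1 for the positive class (1 = recurrence within 5 years)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def auroc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random positive case scores higher than a random negative (ties = 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    y_true = [1, 0, 1, 1, 0, 0]
    scores = [0.9, 0.3, 0.6, 0.4, 0.5, 0.1]  # made-up model outputs
    y_pred = [int(s >= 0.5) for s in scores]  # assumed 0.5 decision threshold
    print(binary_metrics(y_true, y_pred))  # precision, recall and F1 all 2/3
    print(auroc(y_true, scores))  # 8/9, about 0.889
```

Because AUROC uses the raw scores while the other three metrics depend on a decision threshold, the two groups can move independently, which is why Table 2 reports them side by side.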

Curation Efficiency and Accuracy

A 2025 study compared traditional manual review against an LLM-based processing pipeline for curating breast cancer surgical oncology data [37]. The experimental protocol involved extracting 31 clinical factors from patient records.

Table 3: Manual Review vs. LLM-Based Curation Efficiency

| Curation Metric | Manual Physician Review | LLM-Based Processing |
|---|---|---|
| Processing Time | 7 months (5 physicians) | 12 days (2 physicians) [37] |
| Total Physician Hours | 1,025 hours | 96 hours (91% reduction) [37] |
| Reported Accuracy | Benchmark for comparison | 90.8% [37] |
| Cost per Case | Labor-intensive | US $0.15 [37] |
| Key Strength | Established benchmark | More survival events captured (41 vs. 11) [37] |

The study concluded that the two-step approach (automated data extraction followed by LLM curation) addressed both privacy and efficiency needs, providing a scalable solution for retrospective clinical research while maintaining data quality [37].
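The LLM curation step lends itself to automated quality checks. A minimal Python sketch, assuming the LLM is prompted to return one JSON object per patient, might validate each reply against the expected schema and then score field-level agreement with a manually abstracted subset; this is one way an accuracy figure like the one in Table 3 could be computed. The field names and both functions are hypothetical and do not reproduce the pipeline in [37].

```python
import json
from typing import Any

# Hypothetical subset of a curation schema; the study in [37] extracted 31 factors.
EXPECTED_FIELDS = ("tumor_size_mm", "nodal_status", "er_status", "her2_status")


def parse_llm_record(raw_reply: str) -> dict[str, Any]:
    """Parse an LLM's JSON reply, keep only schema fields, and fail loudly if any are missing."""
    record = json.loads(raw_reply)
    missing = [field for field in EXPECTED_FIELDS if field not in record]
    if missing:
        raise ValueError(f"LLM output is missing fields: {missing}")
    return {field: record[field] for field in EXPECTED_FIELDS}


def field_accuracy(curated: list[dict[str, Any]], reference: list[dict[str, Any]]) -> float:
    """Share of (record, field) pairs where the curated value equals the manual reference value."""
    if len(curated) != len(reference) or not reference:
        raise ValueError("need two non-empty record lists aligned one-to-one")
    matches = sum(
        int(pred.get(field) == ref.get(field))
        for pred, ref in zip(curated, reference)
        for field in EXPECTED_FIELDS
    )
    return matches / (len(reference) * len(EXPECTED_FIELDS))


if __name__ == "__main__":
    reply = ('{"tumor_size_mm": 18, "nodal_status": "N0", '
             '"er_status": "positive", "her2_status": "negative"}')
    manual = {"tumor_size_mm": 18, "nodal_status": "N1",
              "er_status": "positive", "her2_status": "negative"}
    print(field_accuracy([parse_llm_record(reply)], [manual]))  # 0.75
```

Failing loudly on missing fields, rather than filling them with nulls, keeps schema errors from being counted as genuine missing data.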

Data Quality Framework Alignment

Regulatory agencies like the FDA and EMA emphasize relevance and reliability as primary data quality dimensions for RWE generation [31]. The table below applies this framework to the two curation paradigms.

Table 4: Quality Dimension Assessment for Curation Outputs

| Quality Dimension | Structured Data Curation | Unstructured Data Curation |
|---|---|---|
| Relevance | High for defined variables (e.g., treatments, lab values); availability is clear [31] | Enables relevance for concepts not captured in structured fields (e.g., disease severity, symptom details) [31] |
| Reliability: Accuracy | Assessed via validation against reference standards; high conformance to predefined rules [31] | Task-dependent; one LLM pipeline reached 90.8% accuracy on structured extraction [37], but outputs still require validation |
| Reliability: Completeness | Easily measured against expected data points [31] | Depends on source documentation and extraction thoroughness [31] |
| Reliability: Provenance | Highly traceable through ETL pipelines and data transformation logs [31] | Requires detailed metadata on abstraction method (human, NLP, LLM) and versioning [31] |
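The completeness and provenance rows of Table 4 can be made operational by storing curation metadata alongside every value. The Python sketch below is an assumed design (the class, field, and method labels are hypothetical): each curated value records how it was abstracted and with which tool version, and completeness is computed as the share of expected patient-field pairs that hold a non-missing value.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Literal, Optional

# Abstraction methods named in Table 4, plus structured ETL (hypothetical labels).
Method = Literal["manual", "nlp", "llm", "structured_etl"]


@dataclass(frozen=True)
class CuratedValue:
    """One curated data point with the provenance metadata needed to audit it."""
    patient_id: str
    field: str
    value: Optional[str]
    method: Method
    tool_version: str  # e.g. NLP rule-set or prompt version; "n/a" for manual abstraction
    source_ref: str    # pointer back to the source note, report or table row


def completeness(values: list[CuratedValue], expected_fields: set[str]) -> float:
    """Share of expected (patient, field) pairs with a non-missing value.

    Patients are taken from the values themselves, so a patient with no
    curated values at all is not counted in the denominator.
    """
    patients = {v.patient_id for v in values}
    filled = {
        (v.patient_id, v.field)
        for v in values
        if v.field in expected_fields and v.value not in (None, "")
    }
    expected = len(patients) * len(expected_fields)
    return len(filled) / expected if expected else 0.0


def methods_used(values: list[CuratedValue]) -> Counter:
    """Count values by abstraction method, e.g. to report the provenance mix of a dataset."""
    return Counter(v.method for v in values)


if __name__ == "__main__":
    values = [
        CuratedValue("p1", "er_status", "positive", "llm", "prompt-v3", "note/123"),
        CuratedValue("p1", "nodal_status", None, "nlp", "rules-v1", "note/123"),
        CuratedValue("p2", "er_status", "negative", "manual", "n/a", "path/456"),
    ]
    print(completeness(values, {"er_status", "nodal_status"}))  # 0.5
    print(methods_used(values))
```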

Successful curation and utilization of oncology data often involve leveraging a suite of public resources and analytical tools.

Table 5: Essential Resources for Oncology Data Curation and Validation

| Resource or Tool | Type | Primary Function in Curation & Research |
|---|---|---|
| FHIR (Fast Healthcare Interoperability Resources) | Data Standard | Provides a modern, web-based standard for exchanging EHR data, facilitating the harmonization of both structured and unstructured elements [36]. |
| cBioPortal | Genomic Database | A public resource for exploring, visualizing, and analyzing multidimensional cancer genomics data; useful for validating molecular findings from EHRs [32]. |
| The Cancer Genome Atlas (TCGA) | Genomic Database | A landmark public dataset containing multi-omics data from thousands of patients; serves as a critical reference for benchmarking and discovery [38]. |
| PROBAST (Prediction model Risk Of Bias ASsessment Tool) | Methodological Tool | A structured tool to assess the risk of bias and applicability of diagnostic and prognostic prediction model studies, crucial for evaluating ML models [39]. |
| NLP/LLM Platforms (e.g., Claude, GPT) | Curation Tool | Used to automate the structuring of information from clinical narratives, pathology reports, and other unstructured text sources [37]. |
| Data Lakes (e.g., Amazon S3, Azure Blob Storage) | Storage Solution | Cloud object storage services commonly used to hold vast volumes of raw, unstructured data in its native format prior to curation [35]. |
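As an illustration of the FHIR entry above, the Python sketch below parses a minimal FHIR R4 `Observation` resource (using the standard `resourceType`, `status`, `code.coding`, `subject`, `effectiveDateTime`, and `valueQuantity` elements) and flattens it into one analysis-ready row. The LOINC code 718-7 (hemoglobin in blood) is real; the patient reference, timestamp, value, and the helper name `observation_to_row` are made up for illustration.

```python
import json
from typing import Any

# A minimal FHIR R4 Observation for a hemoglobin result. The LOINC code is real;
# the patient reference, date and value are made-up illustration data.
OBSERVATION_JSON = """
{
  "resourceType": "Observation",
  "status": "final",
  "code": {"coding": [{"system": "http://loinc.org", "code": "718-7",
                       "display": "Hemoglobin [Mass/volume] in Blood"}]},
  "subject": {"reference": "Patient/example"},
  "effectiveDateTime": "2024-03-01T08:30:00Z",
  "valueQuantity": {"value": 12.9, "unit": "g/dL",
                    "system": "http://unitsofmeasure.org", "code": "g/dL"}
}
"""

LOINC_SYSTEM = "http://loinc.org"


def observation_to_row(resource: dict[str, Any]) -> dict[str, Any]:
    """Flatten a FHIR Observation into one row: patient, LOINC code, value, unit, time."""
    if resource.get("resourceType") != "Observation":
        raise ValueError("expected an Observation resource")
    # Pick the LOINC coding if several code systems are present.
    loinc = next(
        (c["code"] for c in resource["code"].get("coding", []) if c.get("system") == LOINC_SYSTEM),
        None,
    )
    quantity = resource.get("valueQuantity", {})
    return {
        "patient": resource["subject"]["reference"],
        "loinc": loinc,
        "value": quantity.get("value"),
        "unit": quantity.get("code") or quantity.get("unit"),  # prefer the coded UCUM unit
        "time": resource.get("effectiveDateTime"),
    }


if __name__ == "__main__":
    print(observation_to_row(json.loads(OBSERVATION_JSON)))
```

Keying on the LOINC code rather than on a hospital's display text is what lets rows from different sources be pooled.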

The choice between structured and unstructured data curation is not a binary one; rather, it is a strategic decision based on the research question, available resources, and required level of precision. Structured data curation provides a robust, efficient pathway for variables that are routinely and discretely captured in EHRs, consistently demonstrating high performance in predictive modeling tasks [36] [39]. In contrast, unstructured data curation, through NLP or modern LLMs, is indispensable for unlocking the rich, contextual details of patient care and capturing clinical phenotypes not represented in structured fields [31] [37].

The emerging paradigm is one of integration. The most powerful real-world evidence will come from studies that intelligently combine the quantitative precision of curated structured data with the qualitative depth extracted from unstructured narratives. Future advancements will continue to blur the lines between these two types, with LLMs playing an increasingly central role in scaling the curation of complex clinical concepts, thereby accelerating oncology research and drug development.

The Datagateway system represents a significant advancement in real-time oncology data extraction, demonstrating high reliability in automating the transfer of structured Electronic Health Record (EHR) data to the Netherlands Cancer Registry (NCR). A validation study assessed the system's performance against the established standard of manual data entry, the traditional methodology for population-based cancer registries [7]. The motivation for this shift is clear: manual registration is time-consuming and labor-intensive, which limits the timeliness and scalability of the data collection that modern oncology research and real-world evidence generation require [7]. The findings indicate that automated data extraction via the Datagateway is not only feasible but also highly accurate, enabling near real-time insights into cancer treatment patterns and outcomes [7].

The Datagateway system is designed to address critical bottlenecks in cancer data aggregation. Its core function is to automatically harmonize and transfer structured EHR data from multiple hospitals into a common data model, directly supporting the enrichment of the NCR [7]. This positions it as a next-generation solution against a backdrop of traditional and contemporary alternatives.

The table below outlines the key characteristics of the Datagateway system compared to other common data collection methodologies.

Table: Comparison of Oncology Data Collection Methodologies

| Methodology | Description | Key Advantages | Key Limitations |
|---|---|---|---|
| Manual Abstraction (Traditional Standard) | Trained registration clerks abstract data directly from medical records [40]. | Established, high-quality data; handles unstructured data [40]. | Extremely time-consuming, labor-intensive, costly, slower data availability [7]. |
| Datagateway (Automated System) | Automated system that harmonizes structured EHR data into a common model for real-time transfer [7]. | High-speed, scalable, enables real-time surveillance, reduces manual burden [7]. | Limited to structured EHR data; accuracy dependent on source data quality and system coding. |
| Enterprise Data Warehouses (EDWs) | Centralized databases that aggregate EHR data for research and reporting [2]. | Consolidates data from across a health system; useful for internal analytics. | Prone to data quality issues (missing data, inconsistent coding); complex queries require informatics support; often not designed for interoperability [2]. |
| Basic EHR Export & Reporting | Use of built-in, hospital-specific EHR reporting tools. | Leverages existing system functionality; no new infrastructure needed. | Lack of data standardization across hospitals; ill-documented local codes; poor interoperability [2]. |

Experimental Validation & Performance Data

The validation of the Datagateway system assessed its performance across multiple data domains critical to a cancer registry, using data from patients with acute myeloid leukemia (AML), multiple myeloma, lung cancer, and breast cancer [7].

Validation of Diagnostic Data

The system's ability to correctly identify and process new cancer diagnoses was tested both prospectively and retrospectively.

- Prospective Validation: Of 1,287 patient records evaluated, 1,219 (95%) met the NCR inclusion criteria via the Datagateway. The remaining 5% did not meet the criteria mainly because they reflected relapsed disease or preliminary, unconfirmed diagnoses already recorded in the EHR [7].

- Retrospective Validation: The system successfully retrieved 100% of patients recorded in the NCR from a sample of 384 records. Furthermore, 89% of these patients were identified with a care trajectory and diagnosis within the same year as the NCR record [7].
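The validation results above are reported as point estimates. As an illustration only (the text does not state that [7] computed intervals this way), the Python sketch below attaches a Wilson score interval to such proportions; the function name and the 95% level (z = 1.96) are assumptions made for this example.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 gives ~95% coverage)."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    margin = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    # Clamp to [0, 1] to absorb floating-point rounding at the extremes.
    return max(0.0, center - margin), min(1.0, center + margin)


if __name__ == "__main__":
    # Counts reported in the text above.
    for label, k, n in [("prospective inclusion", 1219, 1287), ("retrospective retrieval", 384, 384)]:
        lo, hi = wilson_interval(k, n)
        print(f"{label}: {k}/{n} = {k / n:.1%} (95% CI {lo:.1%} to {hi:.1%})")
```

With these counts, 1,219/1,287 gives roughly 93% to 96%, and 384/384 gives a lower bound of about 99% rather than a zero-width interval, which is why the Wilson form is often preferred over the simpler Wald interval for proportions near 0% or 100%.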

Validation of Treatment Data

Treatment data is complex, often involving numerous combination regimens. The Datagateway system was validated against manually recorded NCR data and EHR source data.

- AML Treatment: Treatment regimens for 254 AML patients identified by the Datagateway showed 100% concordance with the reference standard [7].

- Multiple Myeloma (MM) Treatment: Across 198 treatment regimens for 117 MM patients, 192 (97%) were correctly identified. The 3% misclassification rate was primarily related to specific dosing nuances and regimens not included in the system's initial classification rules [7].
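The multiple myeloma result illustrates a general property of rule-based regimen classification: any combination missing from the rule table cannot be labeled correctly. A minimal Python sketch, assuming regimens are defined as exact sets of agents (the rule table and function names are hypothetical, not the Datagateway's actual rules), shows both the classification and the concordance calculation against reference labels.

```python
# Hypothetical rule table: regimen label -> exact set of agents that defines it.
# The combinations are standard myeloma regimens, but the table is illustrative,
# not the Datagateway's actual rule set.
REGIMEN_RULES: dict[str, frozenset[str]] = {
    "VRd": frozenset({"bortezomib", "lenalidomide", "dexamethasone"}),
    "KRd": frozenset({"carfilzomib", "lenalidomide", "dexamethasone"}),
    "DRd": frozenset({"daratumumab", "lenalidomide", "dexamethasone"}),
    "Rd": frozenset({"lenalidomide", "dexamethasone"}),
}


def classify_regimen(agents: set[str]) -> str:
    """Map the agents given in one treatment line to a regimen label, or UNCLASSIFIED."""
    normalized = frozenset(a.strip().lower() for a in agents)
    for label, rule in REGIMEN_RULES.items():
        if normalized == rule:
            return label
    return "UNCLASSIFIED"


def concordance(predicted: list[str], reference: list[str]) -> float:
    """Share of regimens whose automated label matches the reference label."""
    if len(predicted) != len(reference) or not reference:
        raise ValueError("need two non-empty, aligned label lists")
    return sum(p == r for p, r in zip(predicted, reference)) / len(reference)


if __name__ == "__main__":
    print(classify_regimen({"Bortezomib", "Lenalidomide", "Dexamethasone"}))  # VRd
    print(classify_regimen({"daratumumab", "bortezomib", "melphalan", "prednisone"}))  # UNCLASSIFIED
    print(concordance(["VRd", "UNCLASSIFIED", "Rd"], ["VRd", "D-VMP", "Rd"]))  # 2 of 3 match
```

Here D-VMP (daratumumab, bortezomib, melphalan, prednisone) is a real regimen that is simply absent from the illustrative table, so it falls through to UNCLASSIFIED and counts against concordance.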

Table: Summary of Datagateway System Validation Performance

| Validation Metric | Study Design | Sample Size | Result | Notes |
|---|---|---|---|---|
| Diagnosis Retrieval | Retrospective | 384 patients | 100% | All NCR-recorded patients were retrieved [7]. |
| New Diagnosis Inclusion | Prospective | 1,287 patient records | 95% (1,219) | Share meeting NCR inclusion criteria [7]. |
| Treatment Regimen (AML) | Cross-sectional | 254 patients | 100% | Full concordance with NCR/EHR source data [7]. |
| Treatment Regimen (MM) | Cross-sectional | 198 regimens (117 patients) | 97% (192) | Misclassifications involved specific drug combinations and dosing [7]. |
| Laboratory Values | Cross-sectional | Various | ~100% | Virtually complete match with source data [7]. |
| Toxicity Indicators | Cross-sectional | Various | 72%-100% | Accuracy varied by specific toxicity indicator [7]. |

Experimental Protocol & Methodology

The validation process for the Datagateway system can be summarized as a workflow with three key stages: data extraction, harmonization, and validation.

Detailed Methodological Steps

- Data Extraction: Structured data regarding diagnosis, treatment, laboratory values, and toxicity indicators were extracted from the EHRs of multiple participating hospitals [7].

- Data Harmonization: The extracted data were processed and harmonized by the Datagateway system into a Common Data Model. This critical step standardizes data from source systems with differing formats and codes into a consistent structure for the registry [7].

- Validation Comparison: The output from the Datagateway was compared against two primary reference standards: manually recorded NCR data and the EHR source data [7].

- Performance Analysis: Quantitative metrics including accuracy, concordance, and misclassification rates were calculated for each data domain (e.g., diagnoses, treatment regimens) to provide a comprehensive performance assessment [7].
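The harmonization step can be sketched as a mapping from each hospital's local codes and units to a common concept and unit, with anything unmapped routed to review rather than silently dropped. The mapping tables, the concept name `creatinine_serum`, and the function `harmonize` below are hypothetical; the Datagateway's actual common data model is not described at this level of detail in the text. The creatinine conversion (1 mg/dL = 88.4 µmol/L) is the standard factor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LocalLabResult:
    """A lab result as exported by one hospital's EHR, using local codes and units."""
    hospital: str
    local_code: str
    value: float
    unit: str


@dataclass(frozen=True)
class CdmLabResult:
    """The same result expressed in the common data model's concept and unit."""
    hospital: str
    concept: str
    value: float
    unit: str


# Hypothetical mapping tables; a real deployment maintains these per source system.
CODE_MAP = {
    ("hospital_a", "KREA"): "creatinine_serum",
    ("hospital_b", "CREAT"): "creatinine_serum",
}
CANONICAL_UNIT = {"creatinine_serum": "umol/L"}
# Multiplicative factor from a source unit to the canonical unit (1 mg/dL = 88.4 umol/L).
UNIT_FACTORS = {
    ("creatinine_serum", "umol/L"): 1.0,
    ("creatinine_serum", "mg/dL"): 88.4,
}


def harmonize(results: list[LocalLabResult]) -> tuple[list[CdmLabResult], list[LocalLabResult]]:
    """Map local codes and units to the CDM; return (harmonized, rejected for review)."""
    harmonized: list[CdmLabResult] = []
    rejected: list[LocalLabResult] = []
    for r in results:
        concept = CODE_MAP.get((r.hospital, r.local_code))
        factor = UNIT_FACTORS.get((concept, r.unit)) if concept else None
        if concept is None or factor is None:
            rejected.append(r)  # unmapped code or unit: route to manual review
            continue
        harmonized.append(
            CdmLabResult(r.hospital, concept, round(r.value * factor, 1), CANONICAL_UNIT[concept])
        )
    return harmonized, rejected


if __name__ == "__main__":
    batch = [
        LocalLabResult("hospital_a", "KREA", 80.0, "umol/L"),
        LocalLabResult("hospital_b", "CREAT", 0.9, "mg/dL"),
        LocalLabResult("hospital_b", "GLUC", 5.5, "mmol/L"),  # no mapping yet
    ]
    ok, review = harmonize(batch)
    print(ok)      # two creatinine results, both in umol/L
    print(review)  # the unmapped glucose result
```

In a design like this, the rejected list doubles as a worklist for extending the mapping tables.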

The Researcher's Toolkit: Essential Components for Real-Time Data Validation

The successful implementation and validation of an automated system like the Datagateway rely on a combination of technological infrastructure, data standards, and methodological frameworks.

Table: Essential Components for Real-Time Oncology Data Validation

| Component | Function in Validation | Application in Datagateway Study |
|---|---|---|
| Common Data Model (CDM) | Provides a standardized structure for harmonizing heterogeneous data from multiple sources, enabling consistent analysis and comparison. | The core of the Datagateway system, allowing it to integrate data from different hospital EHRs into a unified format for the NCR [7]. |
| Electronic Health Records (EHRs) | Serve as the primary source of real-world patient data, including diagnoses, treatments, lab results, and outcomes. | The source systems from which structured data on diagnosis, treatment, and lab values were extracted for validation [7]. |
| Validation Framework | A structured protocol defining the reference standards, comparison metrics, and statistical methods for assessing data accuracy. | The study design comparing Datagateway output to manual NCR data and EHR source data across multiple cancer types and data domains [7]. |
| Reference Standard Registry (NCR) | A high-quality, manually curated data source that serves as the benchmark for validating the automated system's output. | The Netherlands Cancer Registry itself was used as the gold standard for validating diagnoses and treatment data [7] [40]. |