Transfer Learning for MRI Brain Tumor Detection: Advanced Models, Clinical Implementation, and Future Directions

This article comprehensively reviews the application of transfer learning (TL) for brain tumor detection in MRI scans, tailored for researchers and drug development professionals.

Abstract

This article comprehensively reviews the application of transfer learning (TL) for brain tumor detection in MRI scans, tailored for researchers and drug development professionals. It explores the foundational principles of TL and its necessity in medical imaging, details state-of-the-art methodologies including hybrid CNN-Transformer architectures and attention mechanisms, and addresses key challenges like data scarcity and model interpretability. The scope also includes a rigorous comparative analysis of model performance and validation techniques, synthesizing findings to discuss future trajectories for integrating these AI tools into biomedical research and clinical diagnostics to enhance precision medicine.

The Foundation of Transfer Learning in Neuro-Oncology: From Basic Concepts to Clinical Imperatives

Defining Transfer Learning and its Core Mechanism in Medical Image Analysis

Transfer learning is a machine learning technique where knowledge gained from solving one problem is reused to improve performance on a different, but related, problem [1]. Instead of building a new model from scratch for each task, transfer learning uses pre-trained models as a starting point, leveraging patterns learned from large datasets to accelerate training and enhance performance on new tasks with limited data [2].

In medical image analysis, this approach is particularly valuable given the scarcity of large, annotated medical datasets and the substantial computational resources required to train deep learning models from scratch [1] [2]. For brain tumor detection in MRI scans, transfer learning enables researchers to adapt models initially trained on natural images to the specialized domain of medical imaging, significantly reducing development time while maintaining high diagnostic accuracy [3] [4].

Core Mechanisms of Transfer Learning

Fundamental Principles

The core mechanism of transfer learning operates on the principle that neural networks learn hierarchical feature representations. In computer vision applications, early layers typically detect low-level features like edges and textures, middle layers identify more complex shapes and patterns, while later layers specialize in task-specific features [2]. Transfer learning exploits this hierarchical structure by preserving and reusing the generic feature detectors from earlier layers while retraining only the specialized later layers for the new task.

This process involves two key types of layers:

- Frozen layers: Layers that retain knowledge from the original task and are not updated during retraining

- Modifiable layers: Layers that are retrained to adapt to the new task [2]

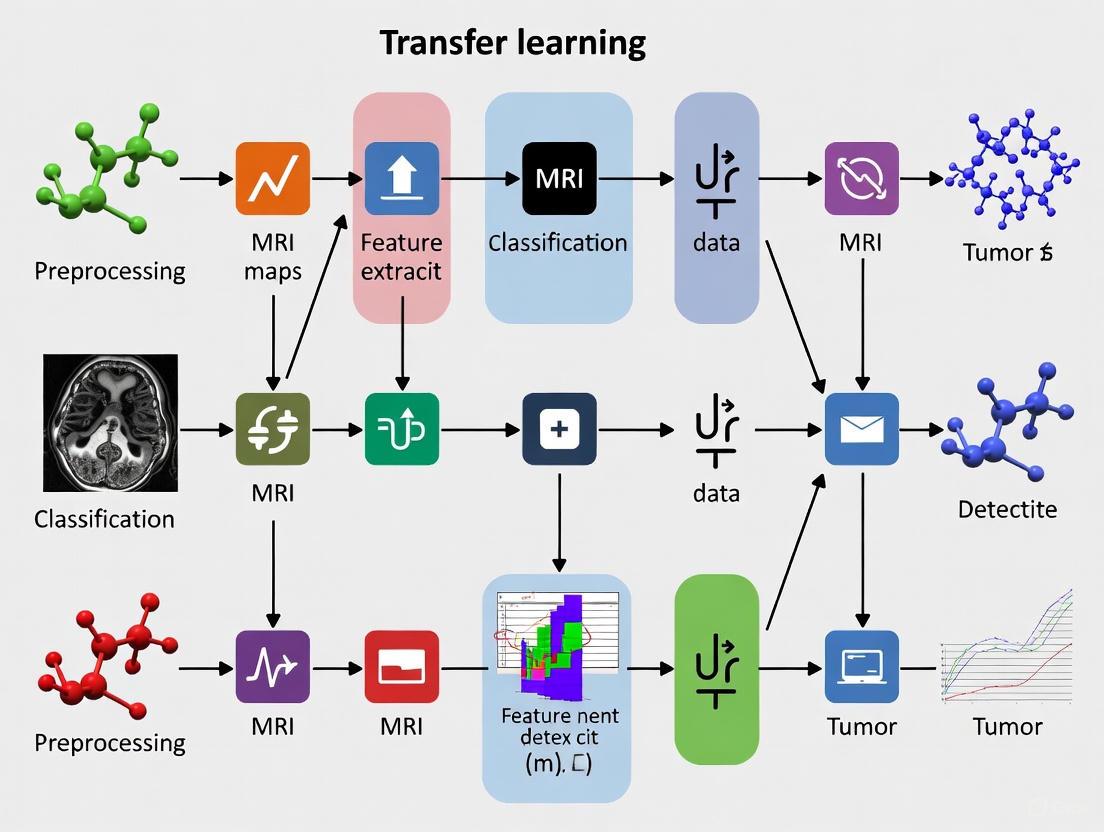

Transfer Learning Workflow for Medical Imaging

The following diagram illustrates the standard transfer learning pipeline for adapting a general image classification model to the specific task of brain tumor detection in MRI scans:

Types of Transfer Learning Approaches

Three primary approaches facilitate knowledge transfer across domains and tasks:

Inductive Transfer: Applied when source and target tasks differ, but domains may be similar or different. This commonly appears in computer vision where models pre-trained for feature extraction on large datasets are adapted for specific tasks like object detection [1].

Transductive Transfer: Used when source and target tasks are identical, but domains differ. Domain adaptation is a form of transductive learning that applies knowledge from one data distribution to the same task on another distribution [1].

Unsupervised Transfer: Employed when both source and target tasks are different, and data is unlabeled. This approach identifies common patterns across unlabeled datasets for tasks like anomaly detection [1].

Performance Comparison of Transfer Learning Models in Brain Tumor Detection

Quantitative Performance Metrics

Table 1: Comparative performance of transfer learning models for brain tumor classification

| Model Architecture | Dataset Size | Accuracy (%) | Preprocessing Techniques | Tumor Types Classified |
|---|---|---|---|---|
| GoogleNet [3] | 4,517 MRI scans | 99.2 | Data augmentation, class imbalance handling | Glioma, Meningioma, Pituitary, Normal |
| Enhanced YOLOv7 [4] | 10,288 images | 99.5 | Image enhancement filters, data augmentation | Glioma, Meningioma, Pituitary, Non-tumor |
| CNN with Feature Extraction [5] | 7,023 MRI images | 98.9 | Gaussian filtering, binary thresholding, contour detection | Glioma, Meningioma, Pituitary, Non-tumor |
| AlexNet [3] | 4,517 MRI scans | 98.1 | Data augmentation, class imbalance handling | Glioma, Meningioma, Pituitary, Normal |
| MobileNetV2 [3] | 4,517 MRI scans | 97.8 | Data augmentation, class imbalance handling | Glioma, Meningioma, Pituitary, Normal |
| YOLOv11 Pipeline [6] | Large diverse dataset + fine-tuning | 93.5 mAP | Two-stage transfer learning, geometric transformations | Glioma, Meningioma, Pituitary |

Advanced Implementation Frameworks

Table 2: Advanced transfer learning frameworks for brain tumor analysis

| Framework | Core Innovation | Transfer Learning Strategy | Key Advantages |
|---|---|---|---|
| YOLOv11 Pipeline [6] | Two-stage transfer learning with morphological post-processing | Base model trained on large dataset, then fine-tuned on smaller domain-specific dataset | High mAP (93.5%), generates segmentation masks, extracts clinical metrics |
| Enhanced YOLOv7 [4] | Integration of CBAM attention mechanism and BiFPN | Pre-trained model fine-tuned with domain-specific augmentation | 99.5% accuracy, improved small tumor detection, multi-scale feature fusion |
| Multi-Model Comparison [3] | Comprehensive analysis of AlexNet, MobileNetV2, GoogleNet | Individual model fine-tuning with data augmentation | Direct architecture comparison, GoogleNet achieved 99.2% accuracy |

Experimental Protocols and Methodologies

Standard Transfer Learning Protocol for Brain Tumor Classification

Phase 1: Data Preparation and Preprocessing

- Data Collection: Curate MRI dataset with balanced representation of tumor types (glioma, meningioma, pituitary) and normal scans [3] [5]

- Data Augmentation: Apply geometric transformations (rotation, flipping, scaling) to increase dataset diversity and prevent overfitting [4]

- Image Enhancement: Implement filters (Gaussian, binary thresholding) to improve contrast and highlight regions of interest [4] [5]

- Class Imbalance Handling: Employ sampling techniques or weighted loss functions to address unequal class distribution [3]
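The class imbalance step can be illustrated with inverse-frequency class weights, one common way to build a weighted loss. This is a minimal sketch, not the method of the cited studies: the function name `inverse_frequency_weights` and the label counts are hypothetical, and the weighting formula (samples divided by classes times class count) is one convention among several.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Return {class: weight} with weight = n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical, deliberately imbalanced labels (not taken from any cited dataset).
labels = ["glioma"] * 6 + ["meningioma"] * 3 + ["pituitary"] * 1
weights = inverse_frequency_weights(labels)

for cls, weight in sorted(weights.items()):
    print(f"{cls}: {weight:.3f}")
```

Rare classes receive larger weights, so their misclassifications contribute more to a weighted loss than those of the majority class.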

Phase 2: Model Selection and Adaptation

- Base Model Selection: Choose pre-trained model (GoogleNet, AlexNet, MobileNetV2, YOLO variants) based on task requirements [3] [4]

- Architecture Modification: Replace final classification layers with tumor-specific categories (glioma, meningioma, pituitary, non-tumor) [3]

- Layer Freezing: Preserve early and middle layers for generic feature extraction while enabling retraining of later layers [2]

Phase 3: Training and Optimization

- Two-Stage Training: Train a base model on a large dataset, then fine-tune it on a smaller domain-specific dataset [6]

- Hyperparameter Tuning: Adjust learning rates, batch sizes, and optimization algorithms for medical imaging context

- Regularization: Apply techniques to prevent overfitting on limited medical data

Phase 4: Validation and Interpretation

- Performance Metrics: Evaluate using accuracy, precision, recall, F1-score, and mAP [3] [4]

- Clinical Validation: Assess model outputs against radiological expert annotations

- Interpretability: Implement visualization techniques (Grad-CAM, attention maps) to explain model decisions [4]
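To make the performance metrics concrete, the sketch below computes accuracy and per-class precision, recall, and F1-score from predicted and true labels. The label lists are invented for illustration and the helper names are assumptions; mAP, which applies to detection models, is not covered here.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the reference labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall_f1(y_true, y_pred, positive):
    """One-vs-rest precision, recall and F1 for the class `positive`."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical labels for six scans (illustration only).
y_true = ["glioma", "glioma", "meningioma", "pituitary", "normal", "glioma"]
y_pred = ["glioma", "meningioma", "meningioma", "pituitary", "normal", "glioma"]

print("accuracy:", round(accuracy(y_true, y_pred), 3))
for cls in ("glioma", "meningioma"):
    p, r, f = precision_recall_f1(y_true, y_pred, cls)
    print(cls, round(p, 3), round(r, 3), round(f, 3))
```

Reporting per-class values alongside overall accuracy shows whether a high headline figure hides weak recall on a single tumor type.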

Advanced Two-Stage Transfer Learning Protocol

Stage 1: Base Model Development (Brain Tumor Detection Model - BTDM)

- Train model on large, diverse MRI dataset (10,000+ images) [6]

- Implement domain-specific augmentation: mosaic, cutmix, horizontal flipping [6]

- Continue training until mean Average Precision (mAP) exceeds 90% [6]

- Designate optimized model as Brain Tumor Detection Model (BTDM) [6]

Stage 2: Specialized Model Fine-tuning (Brain Tumor Detection and Segmentation - BTDS)

- Utilize structurally similar but smaller dataset for fine-tuning [6]

- Apply transfer learning from BTDM to maintain performance with limited data [6]

- Integrate morphological post-processing for segmentation mask generation [6]

- Extract clinically relevant metrics: tumor size, location, severity level [6]
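The post-processing idea in Stage 2 can be sketched as a morphological opening (erosion followed by dilation) on a binary mask, followed by simple size and location measurements. This is an illustrative sketch only: the 3x3 structuring element, the pixel spacing, and the function names are assumptions rather than details from [6], and severity grading is not attempted.

```python
OFFSETS = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)]


def erode(mask):
    """Binary erosion with a 3x3 square; pixels outside the image count as background."""
    h, w = len(mask), len(mask[0])
    return [[int(all(0 <= r + dr < h and 0 <= c + dc < w and mask[r + dr][c + dc]
                     for dr, dc in OFFSETS))
             for c in range(w)] for r in range(h)]


def dilate(mask):
    """Binary dilation with a 3x3 square."""
    h, w = len(mask), len(mask[0])
    return [[int(any(0 <= r + dr < h and 0 <= c + dc < w and mask[r + dr][c + dc]
                     for dr, dc in OFFSETS))
             for c in range(w)] for r in range(h)]


def opening(mask):
    """Erosion then dilation: removes specks smaller than the structuring element."""
    return dilate(erode(mask))


def measure(mask, pixel_spacing_mm=(1.0, 1.0)):
    """Return area in mm^2 and the (row, col) centroid of the foreground pixels."""
    pixels = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not pixels:
        return None
    area = len(pixels) * pixel_spacing_mm[0] * pixel_spacing_mm[1]
    centroid = (sum(r for r, _ in pixels) / len(pixels),
                sum(c for _, c in pixels) / len(pixels))
    return {"area_mm2": area, "centroid_rc": centroid}


# Toy 7x7 mask: a 3x3 "tumor" region plus one isolated false-positive pixel.
mask = [[0] * 7 for _ in range(7)]
for r in range(1, 4):
    for c in range(1, 4):
        mask[r][c] = 1
mask[5][5] = 1

cleaned = opening(mask)
stats = measure(cleaned, pixel_spacing_mm=(0.5, 0.5))  # assumed spacing
print(stats)
```

The opening removes the isolated pixel while restoring the compact region, so the measured area reflects the main lesion only.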

Research Reagents and Computational Tools

Table 3: Essential research reagents and computational tools for transfer learning in medical imaging

| Resource Type | Specific Examples | Function/Application |
|---|---|---|
| Pre-trained Models | AlexNet, GoogleNet, MobileNetV2, ResNet, YOLO variants [3] [4] [2] | Provide foundation for transfer learning, feature extraction capabilities |
| Medical Imaging Datasets | Kaggle Brain Tumor MRI Dataset, Figshare dataset [3] [5] | Source of domain-specific data for fine-tuning and validation |
| Data Augmentation Tools | Geometric transformations, mosaic augmentation, cutmix [4] [6] | Increase dataset diversity, improve model generalization |
| Attention Mechanisms | Convolutional Block Attention Module (CBAM) [4] | Enhance feature extraction, focus on salient tumor regions |
| Feature Fusion Networks | Bi-directional Feature Pyramid Network (BiFPN) [4] | Enable multi-scale feature fusion, improve small tumor detection |
| Performance Metrics | Accuracy, mean Average Precision (mAP), F1-score [3] [6] | Quantify model performance, enable comparative analysis |
| Post-processing Modules | Morphological operations, segmentation mask generation [6] | Extract clinical metrics (tumor size, severity), enhance interpretability |

Technical Implementation Considerations

Optimization Strategies

Successful implementation of transfer learning for brain tumor detection requires addressing several technical challenges:

Data Scarcity Mitigation

- Leverage data augmentation techniques specifically tailored for medical images [4]

- Utilize two-stage transfer learning to maximize knowledge extraction from limited data [6]

- Implement class imbalance handling strategies to prevent model bias [3]

Architecture Optimization

- Integrate attention mechanisms (CBAM) to improve focus on tumor regions [4]

- Employ feature pyramid networks (BiFPN) for multi-scale feature detection [4]

- Balance computational efficiency with detection accuracy through model selection [3] [2]

Clinical Relevance Enhancement

- Develop post-processing modules for tumor segmentation and measurement [6]

- Generate clinically interpretable outputs (tumor size, location, severity) [6]

- Validate model performance against radiological expert assessments [4]

The strategic implementation of transfer learning mechanisms detailed in these protocols demonstrates significant potential for advancing automated brain tumor detection systems, ultimately contributing to improved diagnostic accuracy and patient outcomes in neuro-oncological care.

The accurate detection and diagnosis of brain tumors using Magnetic Resonance Imaging (MRI) are critical for determining appropriate treatment strategies and improving patient survival rates. However, the development of robust, automated diagnostic tools, particularly those powered by artificial intelligence (AI), faces two fundamental and interconnected challenges: the inherent scarcity of large, annotated medical datasets and the significant variability in clinical MRI data [7] [8]. Manual annotation of brain tumors by medical experts is time-consuming, expensive, and prone to inter-observer variability, leading to a natural limitation in dataset sizes [7]. Furthermore, MRI data acquired from different hospitals using various scanner manufacturers, models, and acquisition protocols exhibit substantial variations in image characteristics, such as intensity, contrast, and noise profiles, a phenomenon often termed "scanner effects" [7] [8]. This heterogeneity can severely degrade the performance and generalizability of AI models when deployed in real-world clinical settings. This Application Note details these challenges within the context of transfer learning research and provides structured protocols to effectively address them, enabling the development of more reliable and translatable diagnostic tools.

The following tables summarize the core data challenges and the performance of advanced methods designed to overcome them.

Table 1: Key Challenges in Brain Tumor MRI Data for AI Research

| Challenge Category | Specific Manifestation | Impact on AI Model Development |
|---|---|---|
| Data Scarcity | Limited number of annotated medical images [7] | Increased risk of model overfitting and poor generalization [7] |
| Data Scarcity | High cost and time required for expert labeling [7] | Limits the scale and diversity of datasets available for training |
| Data Variability | Intensity inhomogeneity (bias field effects) [9] | Introduces non-biological variations, confusing feature extraction algorithms |
| Data Variability | "Scanner effects" from different protocols and equipment [7] [8] | Reduces model robustness and performance on external validation sets [7] |
| Data Variability | Variations in tumor appearance (size, shape, morphology) [7] | Complicates the learning of consistent and generalizable tumor features |
| Class Imbalance | Uneven distribution of tumor types (e.g., glioma, meningioma) and "no tumor" cases [10] | Introduces bias, causing models to perform poorly on underrepresented classes |

Table 2: Performance of Advanced Models Addressing Data Challenges

| Model Architecture | Core Strategy | Reported Performance | Reference |
|---|---|---|---|
| Fine-tuned VGG16 | Transfer Learning & Bounding Box Localization | Accuracy: 99.86% (Brain Tumor MRI Dataset) | [10] |
| GoogleNet (Transfer Learning) | Transfer Learning & Data Augmentation | Accuracy: 99.2% (4,517 image dataset) | [3] |
| DenseTransformer (DenseNet201 + Transformer) | Hybrid CNN-Attention & Transfer Learning | Accuracy: 99.41% (Br35H dataset) | [11] |
| CNN-SVM Hybrid | Hybrid Architecture (Feature Learning + Classification) | Accuracy: 98.5% | [7] |
| Swin Transformer | Advanced Transformer Architecture | Accuracy: Up to 99.9% | [7] |

Experimental Protocols for Robust Model Development

This section outlines detailed methodologies for key experiments cited in this note, providing a reproducible framework for researchers.

Protocol: Transfer Learning for Brain Tumor Classification

This protocol is based on the methodology that achieved 99.86% accuracy using a fine-tuned VGG16 model [10].

1. Dataset Description and Preprocessing:

- Dataset: Use a curated brain tumor MRI dataset (e.g., the combined Figshare, SARTAJ, and Br35H dataset containing 7,023 images across four classes: glioma, meningioma, pituitary tumor, and no tumor) [10].

- Image Resizing: Load and resize all MRI images to a uniform 224 x 224 pixels to ensure consistency as input to the Convolutional Neural Network (CNN) model.

- Image Normalization: Scale pixel values to a range of 0 to 1 to enhance model convergence and reduce computational complexity [10].

- Data Splitting: Partition the dataset into training (80%), validation (10%), and test (10%) sets, ensuring a balanced distribution of classes across splits [10].
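The normalization and splitting steps above can be sketched as follows. This is a minimal illustration under stated assumptions: images are represented only by their labels and by flat lists of 8-bit intensities, the function names are hypothetical, and the seed is arbitrary. Resizing is omitted because it depends on the imaging library in use.

```python
import random
from collections import Counter, defaultdict


def scale_to_unit(pixels):
    """Map 8-bit intensities (0-255) to the range [0, 1]."""
    return [p / 255.0 for p in pixels]


def stratified_split(labels, fractions=(0.8, 0.1, 0.1), seed=0):
    """Return (train, val, test) index lists that preserve per-class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(fractions[0] * len(indices))
        n_val = round(fractions[1] * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


# Hypothetical balanced label list with 50 images per class.
labels = (["glioma"] * 50 + ["meningioma"] * 50
          + ["pituitary"] * 50 + ["no_tumor"] * 50)
train, val, test = stratified_split(labels)

print(len(train), len(val), len(test))  # 160 20 20
print(Counter(labels[i] for i in val))
print(scale_to_unit([0, 51, 255]))      # [0.0, 0.2, 1.0]
```

Splitting each class separately keeps the class ratio identical across training, validation, and test sets, which a purely random split does not guarantee on small datasets.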

2. Data Augmentation (for addressing data scarcity and class imbalance): Apply the following augmentation techniques in real-time during training to increase the diversity of the training data and mitigate overfitting [10]:

- Shear (30%)

- Zoom (30%)

- Vertical and Horizontal Flip

- Fill Mode: 'nearest'

3. Model Selection and Fine-Tuning:

- Model Initialization: Select a pre-trained model (e.g., VGG16, ResNet50, Xception) initialized with weights from large-scale natural image datasets like ImageNet [10].

- Fine-Tuning Strategy:

  - Unfreeze only the last 5 layers of the pre-trained model. This allows the model to adapt its high-level, task-specific features to the medical domain while retaining the general feature detectors learned from ImageNet.

  - Replace the original classification head (top layers) with custom layers tailored for the specific brain tumor classification task (e.g., a new fully connected layer with 4 output nodes) [10].

- Training Hyperparameters:

  - Epochs: 100 (with early stopping to prevent overfitting).

  - Optimizer: Adam.

  - Loss Function: Categorical Cross-Entropy.

Protocol: Multi-Scanner Harmonization and Preprocessing Pipeline

This protocol addresses data variability and is critical for ensuring model generalizability [8] [12].

1. Preprocessing Steps: Implement a sequential pipeline using tools like the FMRIB Software Library (FSL):

- Skull Stripping: Use FSL's Brain Extraction Tool (BET) to remove non-brain tissue [12].

- Bias Field Correction: Apply FSL's FMRIB's Automated Segmentation Tool (FAST) to correct for low-frequency intensity inhomogeneity (bias field) across the image [12].

- Denoising: Utilize an edge-preserving filter like FSL's SUSAN denoising to reduce high-frequency noise while preserving important structural details [12].

- Intensity Normalization: Perform Z-score normalization on the image to standardize intensities to a mean of zero and a standard deviation of one, reducing inter-scanner variability [12].
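The intensity normalization step can be illustrated with a small Z-score example. This sketch assumes the statistics are computed only over voxels inside a brain mask (as would be available after skull stripping) and that background voxels are set to zero; both choices, and the function name, are assumptions rather than details from [12]. It does not call FSL.

```python
import statistics


def zscore_normalize(voxels, brain_mask):
    """Standardise intensities using the mean and SD of voxels inside the mask."""
    inside = [v for v, m in zip(voxels, brain_mask) if m]
    mean = statistics.fmean(inside)
    sd = statistics.pstdev(inside)
    if sd == 0:
        raise ValueError("constant image: standard deviation is zero")
    return [(v - mean) / sd if m else 0.0 for v, m in zip(voxels, brain_mask)]


# Toy flattened image: two background voxels and four brain voxels.
brain_mask = [0, 0, 1, 1, 1, 1]
scanner_a = [0, 0, 100, 120, 140, 160]
# Same tissue seen through a hypothetical scanner with a different gain and offset.
scanner_b = [2 * v + 50 for v in scanner_a]

norm_a = zscore_normalize(scanner_a, brain_mask)
norm_b = zscore_normalize(scanner_b, brain_mask)

print([round(v, 3) for v in norm_a])
print([round(v, 3) for v in norm_b])
```

Because Z-scoring removes any linear gain and offset, the two toy scanners produce the same normalized values. Real scanner effects are not purely linear, so this step reduces rather than eliminates inter-scanner variability.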

2. Harmonization Validation:

- Feature Reproducibility Analysis: Extract radiomic features from the processed images. Assess feature stability across different preprocessing pipelines and scanner types using the Intraclass Correlation Coefficient (ICC). Prefer features with high ICC (e.g., ≥ 0.90) for model development, as they are more robust to technical variations [12].

- Traveling Heads/Phantom Studies: Incorporate data from traveling human subjects or standardized phantoms scanned across multiple sites and scanners to quantitatively evaluate and correct for site-specific effects [8].
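The feature reproducibility analysis can be sketched with a direct ICC computation. The text does not state which ICC form is used; the example below assumes ICC(3,1) (two-way mixed effects, consistency, single measurement), which is one common choice. The feature names and values are invented for illustration.

```python
def icc_3_1(ratings):
    """ICC(3,1) for a table with one row per subject and one column per condition.

    Conditions can be scanners or preprocessing pipelines measuring the same feature.
    """
    n = len(ratings)        # subjects
    k = len(ratings[0])     # conditions
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)


# Hypothetical radiomic features: 3 subjects, each measured on 2 scanners.
features = {
    "stable_feature": [[1, 2], [3, 3], [5, 7]],
    "unstable_feature": [[1, 6], [3, 2], [5, 4]],
}

threshold = 0.90
selected = [name for name, table in features.items() if icc_3_1(table) >= threshold]

for name, table in features.items():
    print(name, round(icc_3_1(table), 3))
print("kept:", selected)
```

Only features whose ranking of subjects is preserved across scanners pass the threshold, which matches the intent of preferring features that are robust to technical variation.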

Workflow and Signaling Diagrams

The following diagram illustrates the integrated workflow for developing a robust brain tumor classification system that addresses data scarcity and variability.

Figure 1: Integrated workflow for robust brain tumor classification model development, illustrating how core solutions address key data challenges.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials and Tools for Brain Tumor MRI Analysis

| Item/Tool Name | Function/Application | Explanation & Relevance |
|---|---|---|
| FSL (FMRIB Software Library) | Image Preprocessing & Analysis | A comprehensive library of analysis tools for fMRI, MRI, and DTI brain imaging data. Critical for implementing data harmonization pipelines (e.g., BET, FAST, SUSAN) [12]. |
| BraTS Dataset | Model Training & Benchmarking | A large-scale, multi-institutional benchmark dataset for brain tumor segmentation, providing multi-modal MRI scans with expert-annotated ground truth [7] [9]. |
| Pre-trained CNN Models (VGG16, ResNet50, DenseNet201) | Transfer Learning Base Models | Models pre-trained on ImageNet provide powerful, generic feature extractors. Fine-tuning them on medical images is a highly effective strategy when data is scarce [3] [10] [11]. |
| Grad-CAM / SHAP | Model Interpretability (XAI) | Techniques that produce visual explanations for decisions from CNN models, increasing clinical trust by highlighting regions of the MRI that influenced the classification [13] [11]. |
| Data Augmentation Tools (e.g., TensorFlow Keras ImageDataGenerator) | Mitigating Data Scarcity | Software tools that programmatically expand training datasets using transformations (rotation, flips, etc.), improving model robustness and combating overfitting [3] [10]. |

Pre-trained models have revolutionized the field of computer vision by providing powerful, ready-to-use solutions that save time and computational resources. These models, trained on large-scale datasets like ImageNet, capture intricate patterns and features, making them highly effective for image classification and other visual tasks [14]. Within medical imaging, and specifically for tumor detection in MRI scans, transfer learning with these models accelerates development and enhances the accuracy of diagnostic tools [3] [13]. This document provides a detailed overview of four common pre-trained models—VGG16, ResNet, DenseNet, and GoogleNet—framed within the context of brain tumor detection research. It includes architectural summaries, experimental protocols, and key reagents to equip researchers and scientists with the necessary knowledge for effective implementation.

Model Architectures and Principles

- VGG16: Developed by the Visual Geometry Group at the University of Oxford, VGG16 is characterized by its simplicity and depth, using only 3x3 convolutional filters stacked in a sequential manner. It consists of 13 convolutional layers and 3 fully connected layers, totaling 16 weight layers [15] [16]. Its uniform architecture makes it a strong baseline feature extractor.

- ResNet (Residual Networks): Introduced by Microsoft Research, ResNet made very deep networks trainable by mitigating the vanishing gradient problem through residual connections [14] [17]. These skip connections let each block learn a residual function F(x) relative to its input x, so that the block output is H(x) = F(x) + x, enabling the training of networks that are substantially deeper (e.g., ResNet-50, ResNet-101) than was previously feasible [17].

- DenseNet (Densely Connected Convolutional Network): DenseNet connects each layer to every other layer in a feed-forward fashion within a dense block [18] [19]. This architecture ensures maximum information flow between layers, encourages feature reuse, and significantly reduces the number of parameters, making it both efficient and powerful [18].

- GoogleNet (Inception v1): GoogleNet introduced the Inception module, which performs multiple convolution operations (1x1, 3x3, 5x5) in parallel, along with max pooling, and concatenates their outputs [20]. A key innovation is the use of 1x1 convolutions for dimensionality reduction, which decreases computational cost. It also uses auxiliary classifiers during training to combat the vanishing gradient problem and improve convergence in its 22-layer deep network [20].
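The residual relation H(x) = F(x) + x described for ResNet can be shown with a few lines of plain Python. This is a conceptual sketch on lists of numbers, not a convolutional implementation; the two residual functions are hypothetical stand-ins for a learned F.

```python
def residual_block(x, residual_fn):
    """Return H(x) = F(x) + x, with F given by `residual_fn` and element-wise addition."""
    fx = residual_fn(x)
    if len(fx) != len(x):
        raise ValueError("F(x) must match the shape of x for the identity shortcut")
    return [f + xi for f, xi in zip(fx, x)]


def zero_residual(x):
    """A block that has learned nothing: F(x) = 0."""
    return [0.0 for _ in x]


def small_correction(x):
    """A block that learns a small adjustment on top of the identity."""
    return [0.1 * xi for xi in x]


x = [1.0, -2.0, 3.0]
print(residual_block(x, zero_residual))     # [1.0, -2.0, 3.0]
print(residual_block(x, small_correction))
```

When F outputs zeros the block reduces to the identity, which is why stacking additional residual blocks does not have to degrade the signal passed through the shortcut.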

Comparative Analysis for Tumor Detection

The table below summarizes the key architectural features and performance considerations of these models, particularly for medical image analysis.

Table 1: Comparative analysis of pre-trained models for tumor detection applications

| Aspect | VGG16 | ResNet | DenseNet | GoogleNet (Inception v1) |
|---|---|---|---|---|
| Core Innovation | Depth via small (3x3) filters [16] | Residual learning with skip connections [17] | Dense connectivity for feature reuse [18] | Inception module (multi-scale processing) [20] |
| Key Strength | Simple, robust feature extraction [14] | Trains very deep networks effectively [14] [17] | High parameter efficiency, strong gradient flow [18] | Computational efficiency, good accuracy [20] [21] |
| Depth (Layers) | 16 [16] | 50, 101, 152 (variants) [14] | 121, 169, 201 (variants) [14] [18] | 22 [20] |
| Parameter Count | High (~138 million) [16] | Moderate (e.g., ~25.6M for ResNet-50) | Low (e.g., ~8M for DenseNet-121) [18] | Low (~7M) [20] |
| Handling Vanishing Gradient | Prone | Mitigated via skip connections [17] | Mitigated via dense connections [18] | Mitigated via auxiliary classifiers [20] |
| Example Performance in Brain Tumor Classification | 94% accuracy (Hybrid CNN-VGG16) [13] | High accuracy in comparative studies [3] | Suitable for complex feature extraction [18] | 99.2% accuracy (highest in a 2025 study) [3] |
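The ~138 million parameter figure for VGG16 can be checked with simple arithmetic over its 13 convolutional and 3 fully connected layers. The sketch below assumes the standard ImageNet configuration (3-channel 224x224 input, channel widths of 64 to 512, a 7x7x512 feature map before the first dense layer, and 1,000 output classes); biases are included.

```python
# Output channels of the 13 convolutional layers (3x3 kernels) in standard VGG16.
VGG16_CONV_CHANNELS = [64, 64, 128, 128, 256, 256, 256, 512, 512, 512, 512, 512, 512]
# (inputs, outputs) of the 3 fully connected layers for the ImageNet head.
VGG16_FC_SIZES = [(7 * 7 * 512, 4096), (4096, 4096), (4096, 1000)]


def conv_params(c_in, c_out, kernel=3):
    """Weights plus one bias per output channel."""
    return (kernel * kernel * c_in + 1) * c_out


def dense_params(n_in, n_out):
    """Weights plus one bias per output unit."""
    return (n_in + 1) * n_out


def vgg16_param_counts(input_channels=3):
    """Return (convolutional parameters, fully connected parameters)."""
    conv_total, c_in = 0, input_channels
    for c_out in VGG16_CONV_CHANNELS:
        conv_total += conv_params(c_in, c_out)
        c_in = c_out
    fc_total = sum(dense_params(n_in, n_out) for n_in, n_out in VGG16_FC_SIZES)
    return conv_total, fc_total


conv_total, fc_total = vgg16_param_counts()
print(conv_total)             # 14714688
print(fc_total)               # 123642856
print(conv_total + fc_total)  # 138357544
```

Most of the parameters sit in the fully connected head, which is the part that transfer learning typically discards and replaces with a smaller task-specific classifier.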

Experimental Protocol for Tumor Classification in MRI Scans

This protocol outlines a standardized methodology for leveraging pre-trained models to classify brain tumors from MRI scans, for instance, into categories like Glioma, Meningioma, Pituitary tumor, and Normal [3].

The following diagram illustrates the end-to-end experimental workflow for transfer learning-based tumor classification.

Detailed Methodology

Data Preprocessing and Augmentation

- Data Sourcing: Utilize publicly available datasets of brain MRI scans. A representative dataset includes 4,517 images across three tumor types (Glioma, Meningioma, Pituitary) and normal brains [3].

- Preprocessing Pipeline:

  - Normalization: Scale pixel intensities to a range of [0, 1] by dividing by 255 [17].

  - Resizing: Resize all images to the input size required by the pre-trained model (e.g., 224x224 for VGG16 and others) [15] [13].

- Data Augmentation: To address overfitting and class imbalance, apply real-time data augmentation during training. This includes random rotations, width and height shifts, shearing, zooming, and horizontal flipping [3] [15] [13]. This is efficiently implemented using tools like the ImageDataGenerator in Keras [15].

Model Setup and Fine-tuning

- Base Model Initialization: Load a pre-trained model (e.g., VGG16, ResNet, GoogleNet) without its top classification head. The convolutional base is used as a feature extractor [13].

- Custom Classifier Addition: Attach a new, randomly initialized classifier on top of the base model. This typically consists of a flattening layer, followed by one or more fully connected (Dense) layers with ReLU activation, and a final softmax output layer with a number of units equal to the tumor classes [15].

- Fine-tuning Strategy:

  - Feature Extraction Phase: Initially, freeze the weights of the pre-trained base model and only train the newly added classifier layers. This allows the model to learn to interpret the pre-computed features for the new task.

  - Fine-Tuning Phase: Unfreeze a portion of the deeper layers of the base model and train the entire network end-to-end with a very low learning rate (e.g., 1e-5). This carefully adapts the pre-trained features to the specifics of the medical imaging domain [13].
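The two-phase strategy can be illustrated with a framework-agnostic toy model in which each layer is a single weight with a trainable flag. Everything here is a simplification for illustration: the `Layer` class, the dummy gradients, the head learning rate of 1e-3, and the choice to unfreeze two base layers are assumptions. In Keras, the equivalent control is the `trainable` attribute on each layer.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    weight: float
    trainable: bool = True


def set_phase(base, head, phase, n_unfreeze=2):
    """Phase 1 trains the head only; phase 2 also unfreezes the last base layers."""
    for layer in base:
        layer.trainable = False
    if phase == 2 and n_unfreeze > 0:
        for layer in base[-n_unfreeze:]:
            layer.trainable = True
    for layer in head:
        layer.trainable = True


def sgd_step(layers, grads, lr):
    """Apply one gradient step, skipping layers whose weights are frozen."""
    for layer in layers:
        if layer.trainable:
            layer.weight -= lr * grads[layer.name]


base = [Layer(f"conv{i}", 1.0) for i in range(1, 6)]   # stands in for pre-trained layers
head = [Layer("dense", 0.0), Layer("softmax", 0.0)]    # newly added classifier
model = base + head
grads = {layer.name: 1.0 for layer in model}           # dummy gradients

set_phase(base, head, phase=1)
sgd_step(model, grads, lr=1e-3)   # assumed learning rate for the new head

set_phase(base, head, phase=2, n_unfreeze=2)
sgd_step(model, grads, lr=1e-5)   # very low rate, as suggested in the text

for layer in model:
    print(f"{layer.name}: {layer.weight:.5f} trainable={layer.trainable}")
```

The early layers never change, the last two base layers move only by the small phase 2 step, and the head is updated in both phases.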

Training Configuration

- Optimizer: Use Adam or SGD with Nesterov momentum. A learning rate scheduler (e.g., reducing the learning rate when validation accuracy plateaus) is highly recommended for stable fine-tuning [17].

- Loss Function: Use Categorical Crossentropy for multi-class classification.

- Regularization: Employ techniques like Dropout in the fully connected layers and L2 regularization in convolutional layers to prevent overfitting [15] [17].

- Early Stopping: Halt training if the validation performance does not improve for a pre-defined number of epochs (e.g., 20) to avoid overfitting and save computational resources [15].
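The early stopping and plateau-based learning rate rules in this list can be sketched as plain control logic around a training loop. The validation history is invented, the patience values are shortened so the example finishes quickly, and the reduction factor of 0.1 is an assumption; a real loop would obtain `val_acc` from model evaluation.

```python
class EarlyStopping:
    """Stop when the validation metric has not improved for `patience` epochs."""

    def __init__(self, patience=20):
        self.patience = patience
        self.best = float("-inf")
        self.wait = 0  # epochs since the last improvement

    def should_stop(self, val_metric):
        if val_metric > self.best:
            self.best = val_metric
            self.wait = 0
            return False
        self.wait += 1
        return self.wait >= self.patience


def reduce_on_plateau(lr, epochs_without_gain, patience=5, factor=0.1, min_lr=1e-7):
    """Multiply the learning rate by `factor` after every `patience` stagnant epochs."""
    if epochs_without_gain > 0 and epochs_without_gain % patience == 0:
        return max(lr * factor, min_lr)
    return lr


# Hypothetical validation accuracies, one per epoch.
history = [0.80, 0.85, 0.90, 0.90, 0.89, 0.90, 0.88]
stopper = EarlyStopping(patience=3)
lr = 1e-4
stopped_at = None

for epoch, val_acc in enumerate(history, start=1):
    stop = stopper.should_stop(val_acc)
    lr = reduce_on_plateau(lr, stopper.wait, patience=2)
    if stop:
        stopped_at = epoch
        break

print("stopped at epoch", stopped_at, "best", stopper.best, "lr", lr)
```

Setting the scheduler patience below the early stopping patience gives the model a chance to recover at a lower learning rate before training is halted.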

Model Validation and Explainability

- Performance Metrics: Evaluate the model on a held-out test set using metrics such as Accuracy, Precision, Recall, F1-Score, and Area Under the ROC Curve (AUC) [13].

- Explainable AI (XAI): Integrate XAI methods like SHapley Additive exPlanations (SHAP) or Gradient-weighted Class Activation Mapping (Grad-CAM) to generate visual explanations [13]. These heatmaps highlight the regions in the MRI scan that were most influential in the model's prediction, which is critical for building clinical trust and verifying that the model focuses on biologically relevant areas [13].

The Scientist's Toolkit: Research Reagent Solutions

The table below lists essential computational "reagents" and tools required to implement the described experimental protocol.

Table 2: Essential research reagents and computational tools for transfer learning in medical imaging

| Research Reagent / Tool | Specification / Function | Application Note |
|---|---|---|
| Pre-trained Model Weights | VGG16, ResNet, DenseNet, GoogleNet trained on ImageNet. | Serves as a robust feature extractor, providing a strong initialization for the medical task [14] [15]. |
| MRI Datasets | Curated public datasets (e.g., Figshare) with labeled tumor classes [3]. | The foundational data for training and evaluation. Requires careful partitioning into training, validation, and test sets. |
| Data Augmentation Generator | Keras ImageDataGenerator or PyTorch torchvision.transforms. | Artificially expands the training dataset in real-time, improving model generalization and robustness [15]. |
| Optimizer | Adam or SGD with momentum and learning rate scheduling. | Controls the weight update process during training. Scheduling is crucial for effective fine-tuning [17]. |
| Explainability Framework | SHAP or Grad-CAM libraries. | Provides post-hoc interpretability of model predictions, a necessity for clinical validation and adoption [13]. |
| Computational Hardware | GPU with sufficient VRAM (e.g., NVIDIA Tesla V100, RTX 3090). | Accelerates the training of deep neural networks, which is computationally intensive, especially for 3D medical images. |

| Computational Hardware | GPU with sufficient VRAM (e.g., NVIDIA Tesla V100, RTX 3090). | Accelerates the training of deep neural networks, which is computationally intensive, especially for 3D medical images. |

Within magnetic resonance imaging (MRI) research, the development of robust computer-aided diagnostic (CAD) systems, particularly those leveraging transfer learning, relies on access to high-quality, annotated datasets. These datasets serve as the foundational bedrock for training, validating, and benchmarking sophisticated deep learning models. For researchers and drug development professionals, selecting the appropriate dataset is a critical first step that directly influences the validity and generalizability of their findings. This application note provides a detailed overview of three pivotal resources in this domain—the BraTS, Figshare, and Kaggle datasets. It offers a structured comparison of their characteristics, outlines detailed experimental protocols for their use in transfer learning workflows, and visualizes the key methodologies to accelerate research in accurate brain tumor detection and classification.

Benchmark datasets provide the ground truth necessary for developing and evaluating automated brain tumor analysis systems. The BraTS, Figshare, and Kaggle collections are among the most widely used, each with distinct focuses and attributes.

BraTS (Brain Tumor Segmentation): The BraTS benchmark is a continuously evolving challenge focused primarily on the complex task of pixel-wise segmentation of glioma sub-regions. The BraTS 2025 dataset includes multi-parametric MRI (mpMRI) scans from both pre-treatment and post-treatment patients, featuring T1-weighted, post-contrast T1-weighted (T1ce), T2-weighted, and T2 FLAIR modalities [22]. Its annotations are exceptionally detailed, delineating the Enhancing Tumor (ET), Non-enhancing Tumor Core (NETC), Peritumoral Edema (also referred to as Surrounding Non-enhancing FLAIR Hyperintensity, SNFH), and Resection Cavity (RC). These are also combined to evaluate the Whole Tumor (WT) and Tumor Core (TC) [22]. With thousands of cases, it is a large-scale dataset designed for developing robust, clinically relevant segmentation models.

Figshare: The Figshare repository hosts several brain tumor datasets. A prominent, widely used dataset is the one contributed by Cheng et al., which contains 3,064 T1-weighted contrast-enhanced MRI images [23]. This dataset is curated for a three-class classification task (glioma, meningioma, pituitary tumor), making it a standard benchmark for image-level classification models rather than segmentation. A newer dataset, BRISC (Brain tumor Image Segmentation & Classification), addresses common limitations in existing collections. Announced in 2025, BRISC offers 6,000 T1-weighted MRI slices with physician-validated pixel-level masks and a balanced multi-class classification split, covering glioma, meningioma, pituitary tumor, and no tumor classes [24].

Kaggle: The Kaggle platform hosts community-driven datasets, often curated for specific learning and competition goals. One such public Brain Tumor MRI Dataset contains 7,023 T1-weighted images categorized for classification into four classes: glioma, meningioma, pituitary tumor, and no tumor [5] [25]. These datasets are typically structured for ease of use in deep learning pipelines, providing a straightforward path for applying transfer learning to classification problems.

Table 1: Quantitative Comparison of Key Brain Tumor MRI Datasets

| Dataset Name | Primary Task | Modality | Volume | Classes / Annotations | Key Features |

|---|---|---|---|---|---|

| BraTS 2025 [22] | Segmentation | Multi-parametric MRI (T1, T1ce, T2, FLAIR) | ~2,877 3D cases | Enhancing Tumor (ET); Non-Enhancing Tumor Core (NETC); Edema (SNFH); Resection Cavity (RC) | Focus on pre- & post-treatment glioma; standardized benchmark |

| Figshare (Cheng et al.) [23] | Classification | T1-weighted, contrast-enhanced | 3,064 2D images | Glioma; Meningioma; Pituitary Tumor | Classic benchmark for three-class tumor classification |

| Figshare (BRISC 2025) [24] | Segmentation & Classification | T1-weighted | 6,000 2D slices | Glioma, Meningioma, Pituitary, No Tumor; pixel-wise binary masks | Balanced distribution; expert-validated masks; multi-plane slices |

| Kaggle (Brain Tumor MRI) [5] [25] | Classification | T1-weighted | 7,023 2D images | Glioma, Meningioma, Pituitary, No Tumor | Large volume; readily usable for training classification models |

Table 2: Typical Performance Benchmarks of Transfer Learning Models on These Datasets

| Model | Dataset | Reported Accuracy | Key Strengths |

|---|---|---|---|

| GoogleNet [3] | Figshare (3-class) | 99.2% | High accuracy on balanced classification tasks |

| ResNet152 with SVM [25] | Kaggle (4-class) | 98.53% | Powerful feature extraction combined with robust classifier |

| CNN (Custom) [5] | Kaggle (4-class) | 98.9% | End-to-end learning; high precision in detection |

| Random Forest [26] | BraTS (for classification) | 87.0% | Can outperform complex DL models on certain classification tasks |

| MobileNetV2 [3] | Figshare (3-class) | High (Comparative) | Lightweight architecture suitable for resource-constrained deployment |

Experimental Protocols for Transfer Learning

This section outlines detailed methodologies for employing transfer learning on the aforementioned datasets, covering both classification and segmentation tasks.

Multi-class Tumor Classification Protocol

Objective: To fine-tune a pre-trained deep learning model for classifying brain MRI images into tumor types (e.g., Glioma, Meningioma, Pituitary) or "No Tumor" using datasets like Figshare or Kaggle.

Materials: Figshare (BRISC or Cheng et al.) or Kaggle Brain Tumor MRI Dataset [24] [23] [5].

Procedure:

- Data Preprocessing:

- Image Conversion: Convert all images to grayscale to reduce computational complexity, as essential features are based on intensity [5].

- Noise Reduction: Apply a Gaussian filter to blur images and suppress high-frequency noise [5] [25].

- Intensity Normalization: Rescale pixel values to a standard range (e.g., 0-1) to ensure stable model training.

- Size Standardization: Resize all images to match the input size required by the pre-trained model (e.g., 224x224 for models like ResNet or GoogleNet) [25].

- Data Augmentation (On-the-fly): To artificially expand the dataset and improve model generalization, apply random in-memory transformations to each training batch. These can include:

- Random rotations (±10°)

- Horizontal and vertical flipping

- Brightness and contrast variations

- Model Preparation & Transfer Learning:

- Select a Pre-trained Model: Choose a model pre-trained on a large natural image dataset (e.g., ImageNet). Common choices include ResNet152, GoogleNet, or MobileNetV2 [3] [25].

- Replace Classifier Head: Remove the final fully connected classification layer of the pre-trained model and replace it with a new one with output nodes equal to the number of tumor classes (e.g., 4).

- Fine-tuning: Train the model on the brain tumor dataset. It is common practice to use a lower learning rate for the pre-trained layers and a higher one for the newly added classifier head to avoid catastrophic forgetting.

- Evaluation: Evaluate the fine-tuned model on the held-out test set using metrics such as Accuracy, Precision, Recall, and F1-Score [25].
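
The preprocessing steps of this protocol can be sketched with NumPy alone. This is a minimal illustration rather than the pipeline of the cited studies: the function names, the Gaussian sigma, and the nearest-neighbor resize are assumptions chosen to keep the example dependency-free, and a production pipeline would normally use an imaging library with proper interpolation.

```python
import numpy as np

def to_grayscale(rgb):
    """Luminance-weighted grayscale conversion of an (H, W, 3) array."""
    return rgb[..., :3] @ np.array([0.299, 0.587, 0.114])

def gaussian_blur(img, sigma=1.0):
    """Separable Gaussian filter with reflect padding at the borders."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="reflect")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def min_max_normalize(img):
    """Rescale intensities to the range [0, 1]."""
    lo, hi = img.min(), img.max()
    if hi <= lo:
        return np.zeros_like(img, dtype=np.float64)
    return (img - lo) / (hi - lo)

def resize_nearest(img, size=(224, 224)):
    """Nearest-neighbor resize (assumed here only to avoid extra dependencies)."""
    rows = np.arange(size[0]) * img.shape[0] // size[0]
    cols = np.arange(size[1]) * img.shape[1] // size[1]
    return img[rows][:, cols]

def preprocess(rgb, size=(224, 224)):
    gray = to_grayscale(rgb.astype(np.float64))
    smooth = gaussian_blur(gray, sigma=1.0)
    norm = min_max_normalize(smooth)
    resized = resize_nearest(norm, size)
    # ImageNet-pretrained backbones expect three input channels, so the single
    # grayscale channel is repeated; this is one common workaround.
    return np.repeat(resized[..., None], 3, axis=-1)

rng = np.random.default_rng(0)
fake_scan = rng.integers(0, 256, size=(64, 48, 3))   # stand-in for a loaded image
batch_item = preprocess(fake_scan)
print(batch_item.shape)
```

Note the channel repetition at the end: converting to grayscale reduces the input to one channel, whereas networks pre-trained on ImageNet take three, so some form of channel adaptation is needed before fine-tuning.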

The workflow for this protocol is visualized below.

Tumor Segmentation Protocol with Advanced Augmentation

Objective: To train a model for pixel-wise segmentation of brain tumor sub-regions using the BraTS dataset, incorporating advanced on-the-fly data augmentation to improve robustness.

Materials: BraTS dataset (mpMRI scans) [22].

Procedure:

- Data Preprocessing:

- Co-registration and Normalization: The BraTS data is already pre-processed with co-registered MR sequences, interpolated to a uniform 1mm³ resolution, and skull-stripped [22].

- Intensity Normalization: Perform per-sequence (T1, T1ce, T2, FLAIR) Z-score normalization across each volume.

- Advanced On-the-Fly Augmentation:

- Synthetic Tumor Insertion: To address data scarcity and class imbalance, integrate a Generative Adversarial Network (GAN), such as GliGAN, into the training loop [22]. This approach dynamically inserts realistic synthetic tumors into healthy brain tissue or existing tumor scans during training, vastly increasing the model's exposure to diverse tumor appearances.

- Targeted Augmentation: Use the conditional nature of GliGAN to modify the input label masks, for instance, by scaling down lesions to create more small tumor examples or by swapping under-represented tumor class labels (e.g., converting Edema to Enhancing Tumor) to balance class distribution [22].

- Model Training:

- Architecture Selection: The nnU-Net framework is a robust, self-configuring baseline that has proven highly effective in BraTS challenges and is an excellent starting point [22].

- Training Loop: The model is trained on random 3D patches extracted from the mpMRI volumes. The on-the-fly augmentation (including synthetic tumor insertion) is applied to each batch before it is fed into the network.

- Evaluation: The model's performance is evaluated on the validation set using the BraTS-standard Dice similarity coefficient for the Enhancing Tumor (ET), Tumor Core (TC), and Whole Tumor (WT) regions [22].
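
Three building blocks of this protocol (per-sequence Z-score normalization, random 3D patch extraction, and the Dice similarity coefficient) can be sketched as follows. The sketch is illustrative only: restricting the statistics to non-zero voxels is an assumption that suits skull-stripped data, the function names are hypothetical, and the GAN-based augmentation step is not reproduced here.

```python
import numpy as np

def zscore_normalize(volume, eps=1e-8):
    """Z-score one MR sequence using non-zero (brain) voxels only (assumption)."""
    brain = volume != 0
    out = np.zeros_like(volume, dtype=np.float64)
    if brain.any():
        mean, std = volume[brain].mean(), volume[brain].std()
        out[brain] = (volume[brain] - mean) / (std + eps)
    return out

def random_patch(volumes, labels, patch=(64, 64, 64), rng=None):
    """Cut the same random 3D patch from sequences (C, D, H, W) and labels (D, H, W)."""
    rng = np.random.default_rng() if rng is None else rng
    starts = [int(rng.integers(0, dim - p + 1)) for dim, p in zip(labels.shape, patch)]
    window = tuple(slice(s, s + p) for s, p in zip(starts, patch))
    return volumes[(slice(None),) + window], labels[window]

def dice(pred, target):
    """Dice similarity coefficient between two binary masks."""
    pred, target = pred.astype(bool), target.astype(bool)
    denom = pred.sum() + target.sum()
    if denom == 0:
        return 1.0  # both masks empty; scoring this as 1.0 is a convention assumed here
    return 2.0 * (pred & target).sum() / denom

# Toy check: two 4x4 masks that overlap on one of their two rows.
a = np.zeros((4, 4), dtype=bool); a[:2] = True
b = np.zeros((4, 4), dtype=bool); b[1:3] = True
print(dice(a, b))
```

In an actual run, `dice` would be applied separately to the ET, TC, and WT masks derived from the predicted and reference label maps.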

The workflow for this advanced segmentation protocol is as follows.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools and Models for Brain Tumor Analysis Research

| Tool / Reagent | Type | Function in Research | Exemplar Use Case |

|---|---|---|---|

| nnU-Net [22] | Deep Learning Framework | A self-configuring framework for medical image segmentation that automatically adapts to dataset properties. | Used as a robust baseline and core architecture for segmenting BraTS tumor sub-regions. |

| GliGAN [22] | Generative Adversarial Network | A pre-trained GAN for generating realistic synthetic brain tumors and inserting them into MRI scans. | Dynamic, on-the-fly data augmentation to increase model robustness and address class imbalance. |

| ResNet152 [25] | Pre-trained CNN Model | A very deep convolutional network for powerful hierarchical feature extraction from images. | Used as a feature extractor or fine-tuned for high-accuracy classification of tumor types. |

| GoogleNet [3] | Pre-trained CNN Model | A deep network with inception modules for efficient multi-scale feature computation. | Fine-tuned for brain tumor classification, achieving state-of-the-art accuracy. |

| Support Vector Machine (SVM) [25] | Machine Learning Classifier | A classical classifier that finds the optimal hyperplane to separate different classes in a high-dimensional space. | Used as the final classifier on deep features extracted by a pre-trained CNN (e.g., ResNet152). |

Implementing State-of-the-Art Transfer Learning Architectures and Techniques

Within transfer learning research for tumor detection in MRI scans, the fine-tuning of pre-trained Convolutional Neural Networks (CNNs) has emerged as a cornerstone technique. It addresses a primary challenge in medical imaging: developing highly accurate models despite limited annotated datasets [27] [28]. This approach takes feature hierarchies learned from large-scale natural image databases, such as ImageNet, and adapts them to the specific domain of neuroimaging, enabling robust classification of brain tumors such as glioma, meningioma, and pituitary tumors [13].

The following sections provide a detailed examination of the state-of-the-art, presenting quantitative performance comparisons, structured experimental protocols, and essential toolkits to equip researchers and scientists with the practical knowledge for implementing these methods in diagnostic and drug development workflows.

Performance Analysis of Pre-trained Architectures

Recent studies have systematically evaluated various pre-trained architectures, demonstrating their efficacy in brain tumor classification. The table below summarizes the reported performance of several prominent models on standard datasets.

Table 1: Performance of Fine-Tuned Pre-trained Models in Brain Tumor Classification

| Model Architecture | Reported Accuracy | Dataset Used | Number of Classes | Key Findings / Context |

|---|---|---|---|---|

| InceptionV3 | 98.17% (Testing) [29] | Kaggle (7023 images) | 4 | Achieved impressive training accuracy of 99.28% [29]. |

| VGG19 | 98% (Classification Report) [29] | Kaggle (7023 images) | 4 | Demonstrated strong performance, beating other compared models [29]. |

| GoogleNet | 99.2% [3] | Dataset with 4,517 MRI scans | 4 | Outperformed previous studies using the same dataset [3]. |

| Fine-tuned ResNet-34 | 99.66% [27] | Brain Tumor MRI Dataset (7023 images) | 4 | Enhanced with Ranger optimizer and custom head; surpassed state-of-the-art [27]. |

| Proposed Automated DL Framework | 99.67% [30] | Figshare dataset | N/S | Used ensemble model after deep learning-based segmentation and attention modules [30]. |

| Xception | 98.57% [28] | Br35H dataset | 2 (Binary) | Part of a fine-tuned model for binary classification (abnormal vs. normal) [28]. |

| DenseTransformer (Hybrid) | 99.41% [11] | Br35H: Brain Tumor Detection 2020 | 2 (Binary) | Hybrid model combining DenseNet201 and Transformer with MHSA [11]. |

The performance of these models is heavily influenced by the specific fine-tuning strategies and data handling protocols employed. The following section details the core methodologies that underpin these results.

Experimental Protocols for Fine-Tuning

A successful fine-tuning experiment for brain tumor classification involves a structured pipeline from data preparation to model training. The protocol below synthesizes best practices from recent high-performing studies [27] [28].

Data Preprocessing and Augmentation Protocol

Objective: To prepare a robust and generalized dataset for model training.

Materials: Raw MRI dataset (e.g., Figshare, Br35H), Python with OpenCV/TensorFlow/PyTorch libraries.

- Data Cleansing: Identify and remove duplicate images using algorithms like MD5 hashing to prevent overfitting [27].

- Normalization: Normalize pixel intensities using the mean and standard deviation from the ImageNet dataset (mean = [0.485, 0.456, 0.406], std = [0.229, 0.224, 0.225]) to align with the pre-trained model's expected input distribution [27].

- Resizing and Cropping: Resize all images to a larger dimension (e.g., 256x256 pixels), then take a random crop at the target input size of the network (e.g., 224x224 for ResNet). This preserves important anatomical details while introducing variability [27].

- Data Augmentation: Apply real-time augmentation during training to increase dataset diversity and improve model generalization. Common techniques include:

- Vertical/Horizontal Flipping: Addresses orientation variability in MRI scans [27].

- Random Rotation (±20 degrees): Improves model invariance to slight angular differences [27].

- Random Zoom (e.g., 0.2): Helps the model learn features at different scales [27].

- Brightness Adjustment (e.g., max_delta=0.4): Accounts for differences in MRI scanner settings and imaging protocols [27].
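
The cleansing, cropping, augmentation, and normalization steps above can be sketched in a few NumPy functions. This is a hedged illustration, not the cited implementation: the function names are hypothetical, duplicates are detected on in-memory arrays rather than files, and rotation and zoom are left out because they require interpolation that a framework's augmentation utilities would normally provide.

```python
import hashlib
import numpy as np

IMAGENET_MEAN = np.array([0.485, 0.456, 0.406])
IMAGENET_STD = np.array([0.229, 0.224, 0.225])

def drop_duplicates(images):
    """Keep the first occurrence of each byte-identical image (MD5 digest)."""
    seen, unique = set(), []
    for img in images:
        digest = hashlib.md5(str(img.shape).encode() + img.tobytes()).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(img)
    return unique

def random_crop(img, size=224, rng=None):
    """Crop a size x size window at a random position of an (H, W, C) image."""
    rng = np.random.default_rng() if rng is None else rng
    top = int(rng.integers(0, img.shape[0] - size + 1))
    left = int(rng.integers(0, img.shape[1] - size + 1))
    return img[top:top + size, left:left + size]

def augment(img, rng, max_delta=0.4):
    """Random flips and a brightness shift for an image scaled to [0, 1]."""
    if rng.random() < 0.5:
        img = img[:, ::-1]            # horizontal flip
    if rng.random() < 0.5:
        img = img[::-1, :]            # vertical flip
    delta = rng.uniform(-max_delta, max_delta)
    return np.clip(img + delta, 0.0, 1.0)

def imagenet_normalize(img):
    """Channel-wise standardization of an (H, W, 3) image in [0, 1]."""
    return (img - IMAGENET_MEAN) / IMAGENET_STD

rng = np.random.default_rng(0)
resized = rng.random((256, 256, 3))   # stand-in for an image already resized to 256x256
sample = imagenet_normalize(augment(random_crop(resized, 224, rng), rng))
print(sample.shape)
```

Normalization is applied last so that the brightness shift and clipping operate on the [0, 1] scale that the `max_delta` parameter assumes.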

Table 2: Standard Data Augmentation Parameters

| Augmentation Technique | Typical Parameter Value | Purpose |

|---|---|---|

| Rotation | ±20 degrees | Invariance to patient head tilt |

| Zoom | 0.2 (20%) | Robustness to tumor size variance |

| Width/Height Shift | 0.2 (20%) | Invariance to tumor location |

| Horizontal Flip | True | Positional invariance |

| Brightness Adjustment | Max Delta = 0.4 | Robustness to scanner intensity variation |

Model Fine-Tuning and Training Protocol

Objective: To adapt a pre-trained CNN for the specific task of brain tumor classification.

Materials: Pre-trained model (e.g., ResNet, VGG, Inception), deep learning framework (TensorFlow/PyTorch).

Base Model Selection and Initial Setup:

Custom Classification Head:

- Remove the original fully connected (FC) head of the pre-trained model.

- Replace it with a new, randomly initialized head. A typical structure includes:

- A Global Average Pooling 2D (GAP2D) layer to convert feature maps into a vector, reducing parameters and overfitting compared to a flattening layer [28].

- One or more Dense layers (e.g., 512, 256 units) with ReLU activation and Dropout regularization (e.g., rate=0.5) to combat overfitting [28].

- A final Dense layer with Softmax activation, with units equal to the number of tumor classes (e.g., 4 for glioma, meningioma, pituitary, no tumor) [27].
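
The structure of such a head can be illustrated with a framework-free forward pass. The sketch below assumes a convolutional base that outputs (batch, H, W, C) feature maps; the layer sizes mirror the examples above, while the function names, the He-style initialization, and the inverted-dropout formulation are assumptions made for illustration.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def init_head(in_channels, hidden=(512, 256), n_classes=4, seed=0):
    """Randomly initialize (weights, bias) pairs for each Dense layer."""
    rng = np.random.default_rng(seed)
    sizes = (in_channels, *hidden, n_classes)
    return [(rng.normal(0.0, np.sqrt(2.0 / m), (m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def head_forward(feature_maps, params, drop_rate=0.5, rng=None):
    """feature_maps: (batch, H, W, C) output of the convolutional base."""
    x = feature_maps.mean(axis=(1, 2))            # global average pooling -> (batch, C)
    for w, b in params[:-1]:
        x = np.maximum(x @ w + b, 0.0)            # Dense + ReLU
        if rng is not None:                       # dropout is active only in training
            keep = rng.random(x.shape) >= drop_rate
            x = x * keep / (1.0 - drop_rate)      # inverted dropout scaling
    w, b = params[-1]
    return softmax(x @ w + b)                     # class probabilities

rng = np.random.default_rng(1)
fmap = rng.normal(size=(2, 7, 7, 512))            # stand-in for backbone features
probs = head_forward(fmap, init_head(512))        # inference mode: no dropout
print(probs.shape, probs.sum(axis=1))
```

Global average pooling reduces a 7x7x512 feature map to 512 values, whereas flattening would produce 25,088 inputs to the first Dense layer; this is the parameter saving referred to above.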

Two-Phase Training:

- Phase 1: Train only the newly added classification head for a few epochs using a frozen convolutional base. This allows the head to learn to interpret the existing features.

- Phase 2: Unfreeze a portion (or all) of the convolutional base for fine-tuning. Use a significantly lower learning rate (e.g., 10 times smaller) than used in Phase 1 to make small, precise adjustments to the pre-trained features [13].
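
The two-phase schedule can be demonstrated on a toy model without any deep learning framework. In the sketch below, a single tanh layer stands in for the pre-trained convolutional base and a softmax layer for the new head; the data, layer sizes, learning rates, and function names are all assumptions chosen for illustration.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def loss_and_grads(x, y_onehot, w_base, w_head):
    h = np.tanh(x @ w_base)                        # features from the "base"
    p = softmax(h @ w_head)
    loss = -np.mean(np.sum(y_onehot * np.log(p + 1e-12), axis=1))
    d_logits = (p - y_onehot) / len(x)
    g_head = h.T @ d_logits
    g_base = x.T @ ((d_logits @ w_head.T) * (1.0 - h ** 2))
    return loss, g_base, g_head

def train(x, y, w_base, w_head, lr_head, lr_base, epochs):
    """Full-batch gradient descent; lr_base = 0 keeps the base frozen."""
    for _ in range(epochs):
        _, g_base, g_head = loss_and_grads(x, y, w_base, w_head)
        w_head = w_head - lr_head * g_head
        if lr_base > 0:
            w_base = w_base - lr_base * g_base
    return w_base, w_head

rng = np.random.default_rng(0)
x = rng.normal(size=(200, 10))
labels = (x[:, 0] > 0).astype(int) + 2 * (x[:, 1] > 0).astype(int)   # 4 toy classes
y = np.eye(4)[labels]
w_base0 = rng.normal(scale=0.5, size=(10, 16))     # stands in for pre-trained weights
w_head0 = rng.normal(scale=0.01, size=(16, 4))     # new, randomly initialized head

loss0, _, _ = loss_and_grads(x, y, w_base0, w_head0)
# Phase 1: train the head only, with the base frozen.
w_base1, w_head1 = train(x, y, w_base0, w_head0, lr_head=0.1, lr_base=0.0, epochs=200)
loss1, _, _ = loss_and_grads(x, y, w_base1, w_head1)
# Phase 2: unfreeze the base and continue with a 10x smaller learning rate.
w_base2, w_head2 = train(x, y, w_base1, w_head1, lr_head=0.01, lr_base=0.01, epochs=200)
loss2, _, _ = loss_and_grads(x, y, w_base2, w_head2)
print(loss0, loss1, loss2)
```

The base weights are untouched after Phase 1 and change only slightly in Phase 2, which is the intended behavior: the head first adapts to the existing features, and the features are then adjusted gently.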

Optimization and Compilation:

- Use optimizers that contribute to stable convergence, such as Ranger (a combination of RAdam and Lookahead) [27] or Adam.

- Compile the model with a loss function like categorical_crossentropy for multi-class classification.

- Implement learning rate scheduling or early stopping to prevent overfitting and optimize training time.
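
Learning rate scheduling and early stopping are usually supplied by the training framework, but their logic is simple enough to sketch in plain Python. The class and function below are hypothetical helpers written for illustration; the patience, decay factor, and validation losses are made-up values.

```python
class EarlyStopping:
    """Stop when the validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def step_decay(initial_lr, epoch, drop=0.5, every=10):
    """Multiply the learning rate by `drop` once every `every` epochs."""
    return initial_lr * drop ** (epoch // every)


# Made-up validation losses: improvement stalls after the third epoch.
val_losses = [0.90, 0.70, 0.60, 0.61, 0.62, 0.63, 0.50]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, val_loss in enumerate(val_losses):
    if stopper.step(val_loss):
        stopped_at = epoch
        break
print(stopped_at, stopper.best, step_decay(1e-3, epoch=25))
```

In practice the model weights from the best epoch are restored after stopping, so that the final model corresponds to `stopper.best` rather than to the last epoch trained.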

The Scientist's Toolkit: Research Reagent Solutions

This section catalogs the essential "research reagents"—key datasets, software, and hardware—required to conduct experiments in fine-tuning CNNs for brain tumor classification.

Table 3: Essential Research Reagents for Fine-Tuning Experiments

| Reagent / Resource | Type | Specification / Example | Primary Function in Experiment |

|---|---|---|---|

| Benchmark MRI Datasets | Data | Figshare (3064+ images), Br35H (3000 images), Kaggle Brain Tumor MRI Dataset (7023 images) [29] [27] [28] | Provides standardized, annotated data for model training, validation, and comparative performance benchmarking. |

| Pre-trained Model Weights | Software | ImageNet-pretrained models (ResNet34, VGG19, InceptionV3, Xception, DenseNet201) [29] [11] [28] | Serves as the foundational feature extractor, providing a robust starting point for transfer learning. |

| Deep Learning Framework | Software | TensorFlow 2.x / Keras, PyTorch | Offers the programming environment and high-level APIs for building, fine-tuning, and evaluating deep learning models. |

| Data Augmentation Library | Software | TensorFlow ImageDataGenerator, Torchvision transforms | Systematically generates variations of training data to improve model generalization and combat overfitting. |

| Optimizer | Algorithm | Ranger (RAdam + Lookahead), Adam, SGD | Controls the model's weight update process during training, impacting convergence speed and final performance [27]. |

| Explainable AI (XAI) Tool | Software | Grad-CAM, LIME, SHAP [11] [13] | Provides visual and quantitative explanations for model predictions, building trust and enabling clinical validation. |

| Computing Hardware | Hardware | GPU with ≥ 8GB VRAM (NVIDIA RTX 3080, A100) | Accelerates the computationally intensive process of model training and inference. |

Advanced Architectural Strategies

Beyond basic fine-tuning, recent research has focused on hybrid and advanced architectural strategies to push performance boundaries.

Integration of Attention Mechanisms

Attention modules, such as Multi-Head Self-Attention (MHSA) and Squeeze-and-Excitation Attention (SEA), can be integrated after the CNN backbone. These mechanisms allow the model to focus on diagnostically salient regions in the MRI scan by capturing global contextual relationships and channel-wise dependencies, which is crucial for identifying irregular or small tumors [11].

Hybrid CNN-Transformer Frameworks

Models like the DenseTransformer combine the strengths of CNNs in local feature extraction with the ability of Transformers to model long-range dependencies. These hybrid frameworks leverage a pre-trained CNN (e.g., DenseNet201) for initial feature extraction and then process the reshaped features through a Transformer encoder to capture global context, achieving state-of-the-art accuracy [11].

Explainable AI (XAI) for Model Interpretation

For clinical deployment, model interpretability is paramount. Techniques like Gradient-weighted Class Activation Mapping (Grad-CAM) and SHapley Additive exPlanations (SHAP) are used to generate heatmaps that highlight the regions of the MRI scan that most influenced the model's decision. This aligns the AI's reasoning with clinical expertise, fostering trust and facilitating validation by radiologists [11] [13].
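
The core Grad-CAM computation is compact once the activations of the last convolutional layer and the gradients of the class score with respect to them are available. The sketch below assumes both have already been extracted from a trained network (the extraction itself is framework-specific and not shown); the function name and the toy arrays are illustrative.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM heatmap from last-conv-layer tensors.

    activations: (H, W, K) feature maps.
    gradients:   (H, W, K) gradients of the target class score w.r.t. them.
    """
    weights = gradients.mean(axis=(0, 1))                       # one weight per channel
    cam = np.maximum((activations * weights).sum(axis=-1), 0.0)  # ReLU of weighted sum
    peak = cam.max()
    return cam / peak if peak > 0 else cam                       # scale to [0, 1]

# Toy example: channel 0 supports the class, channel 1 argues against it.
acts = np.zeros((2, 2, 2))
acts[0, 0, 0] = 1.0          # channel 0 fires at the top-left position
acts[1, 1, 1] = 1.0          # channel 1 fires at the bottom-right position
grads = np.stack([np.ones((2, 2)), -np.ones((2, 2))], axis=-1)
heatmap = grad_cam(acts, grads)
print(heatmap)
```

The resulting low-resolution map is normally upsampled to the input size and overlaid on the MRI slice, so that a radiologist can check whether the highlighted area coincides with the lesion.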

The application of deep learning to medical image analysis, particularly for brain tumor detection in Magnetic Resonance Imaging (MRI) scans, represents a critical frontier in modern computational oncology. Within the broader context of transfer learning research for tumor detection, hybrid models that integrate Convolutional Neural Networks (CNNs) with attention mechanisms and Transformer architectures have emerged as a particularly powerful paradigm. These models synergistically combine the proven feature extraction capabilities of CNNs, honed on large-scale natural image datasets, with the powerful global contextual reasoning of Transformers, which excel at capturing long-range dependencies in images [31] [32]. This fusion addresses fundamental limitations of pure architectures: CNNs are limited by their local receptive fields, while Transformers are often computationally intensive and data-hungry for high-resolution medical images [32]. The resulting hybrid frameworks achieve state-of-the-art performance in classification, detection, and segmentation tasks, enabling more precise, interpretable, and clinically actionable tools for researchers, scientists, and drug development professionals working in neuro-oncology.

Quantitative Performance of Hybrid Architectures

Recent research demonstrates that hybrid models consistently outperform traditional CNN and pure Transformer approaches across multiple benchmark datasets. The table below summarizes the performance of key hybrid architectures in brain tumor classification.

Table 1: Performance of Hybrid Models for Brain Tumor Classification on MRI Scans

| Model Name | Architecture Type | Reported Accuracy | Key Metrics | Dataset Used |

|---|---|---|---|---|

| HybLwDL [33] | Lightweight Hybrid Twin-Attentive Pyramid CNN | 99.5% | High computational efficiency | Brain Tumor Detection 2020 |

| VGG16 + Custom Attention [34] | CNN (VGG16) + SoftMax-weighted Attention | 99% | Precision and Recall ~99% | Kaggle (7023 images) |

| Hierarchical Multi-Scale ViT [32] | Vision Transformer with Multi-Scale Attention | 98.7% | Precision: 0.986, F1-Score: 0.987 | Brain Tumor MRI Dataset |

| ShallowMRI Attention [35] | Lightweight CNN with Novel Attention | 98.24% (Multiclass) | Computational cost: 25.4 G FLOPs | Kaggle Multiclass, BR35H |

| ANSA_Ensemble [36] | Shallow Attention-guided CNN | 98.04% (Best) | Cross-dataset validation | Cheng, Bhuvaji, Sherif |

| Ensemble CNN (VGG16) [37] | Transfer Learning (VGG16) | 98.78% (Test) | Specificity >0.98 | Kaggle (4 classes) |

These quantitative results point to a consistent trend: on the benchmarks in Table 1, integrating attention and Transformer components into established CNN pipelines yields classification accuracies in the 98% to 99.5% range. Furthermore, lightweight hybrid models such as ShallowMRI and HybLwDL indicate that this performance does not necessarily come at a prohibitive computational cost, making such models candidates for deployment in resource-constrained environments, including potential edge computing applications in clinical settings [33] [35] [36].

Core Architectural Components and Signaling Pathways

The superior performance of hybrid models stems from the seamless integration of distinct, complementary components into a cohesive analytical pipeline.

The Convolutional Backbone: Local Feature Extraction

The foundation of most hybrid models is a pre-trained CNN (e.g., VGG16, ResNet, EfficientNet) used as a feature extraction backbone [34] [38] [37]. This leverages the principle of transfer learning, where knowledge from a source domain (e.g., ImageNet) is transferred to the target medical domain. CNNs provide an inductive bias for images—namely, translation invariance and locality—making them exceptionally efficient at extracting hierarchical features like edges, textures, and complex patterns from local pixel neighborhoods [32]. This process converts a raw input MRI image into a rich, multi-dimensional feature map that serves as a structured input for subsequent stages.

The Attention Mechanism: Dynamic Feature Re-calibration

Attention mechanisms act as an intermediary, intelligent filter between the CNN and the Transformer. They can be integrated directly into the CNN backbone or as separate modules. The core function of attention is to dynamically weight the importance of different features or spatial regions. For instance:

- Channel Attention (e.g., as in Squeeze-and-Excitation networks) re-calibrates feature maps across channels, allowing the model to emphasize more informative diagnostic features [35].

- Spatial Attention generates a mask that highlights the most semantically relevant regions of the image, such as the probable tumor location, while suppressing irrelevant background information [34].

This "focusing" mechanism mimics a radiologist's ability to concentrate on salient areas, thereby improving feature quality and model interpretability before data is passed to the Transformer encoder.
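
Both forms of attention amount to computing a set of weights and multiplying the feature map by them. The sketch below shows a Squeeze-and-Excitation style channel gate and a generic softmax-weighted spatial mask; it is not the attention layer of any cited study, and the function names, reduction ratio, and random (untrained) weights are assumptions for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(fmap, w1, w2):
    """Squeeze-and-Excitation style gating of an (H, W, C) feature map."""
    squeezed = fmap.mean(axis=(0, 1))                       # squeeze: (C,)
    gates = sigmoid(np.maximum(squeezed @ w1, 0.0) @ w2)    # excitation: (C,) in (0, 1)
    return fmap * gates                                     # re-weight each channel

def spatial_attention(fmap, w):
    """Softmax-weighted spatial mask from a 1x1 projection w of shape (C,)."""
    scores = fmap @ w                                       # (H, W) relevance scores
    scores = np.exp(scores - scores.max())
    mask = scores / scores.sum()                            # sums to 1 over positions
    return fmap * mask[..., None], mask

rng = np.random.default_rng(0)
fmap = rng.normal(size=(7, 7, 32))                          # stand-in for CNN features
w1 = rng.normal(scale=0.1, size=(32, 8))                    # reduction ratio 4 (assumed)
w2 = rng.normal(scale=0.1, size=(8, 32))
recalibrated = channel_attention(fmap, w1, w2)
attended, mask = spatial_attention(recalibrated, rng.normal(scale=0.1, size=32))
print(recalibrated.shape, attended.shape, mask.sum())
```

In a trained model the projection weights are learned jointly with the rest of the network, and the spatial mask itself can be visualized as a coarse indication of where the model is looking.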

The Transformer Encoder: Global Context Modeling

The processed feature maps from the CNN and attention modules are then transformed into a sequence of tokens and fed into a Transformer encoder. The encoder's multi-head self-attention mechanism is the core of its power: it allows every element in the sequence to interact with every other element, regardless of distance. This enables the model to capture long-range dependencies and global contextual relationships, for example the spatial relationship between a tumor's core and its diffuse boundaries across the entire brain slice. CNNs struggle with such relationships because each convolution sees only a local neighborhood, so the effective receptive field grows only gradually with depth [31] [32]. The output is a set of features enriched with both local detail and global context, ready for the final classification or segmentation head.
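
The tokenization and self-attention steps can be made concrete with a short NumPy sketch. It treats each spatial position of a feature map as one token and applies a single multi-head self-attention layer; positional embeddings, the [CLS] token, layer normalization, and the feed-forward sublayer of a full Transformer encoder are omitted, and all sizes and weights are assumptions for illustration.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_self_attention(tokens, wq, wk, wv, wo, n_heads):
    """tokens: (N, D); wq, wk, wv, wo: (D, D) projection matrices."""
    n, d = tokens.shape
    dh = d // n_heads

    def split(x):                                   # (N, D) -> (heads, N, dh)
        return x.reshape(n, n_heads, dh).transpose(1, 0, 2)

    q, k, v = split(tokens @ wq), split(tokens @ wk), split(tokens @ wv)
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(dh))   # (heads, N, N)
    merged = (attn @ v).transpose(1, 0, 2).reshape(n, d)     # concatenate heads
    return merged @ wo, attn

rng = np.random.default_rng(0)
fmap = rng.normal(size=(7, 7, 64))                  # stand-in for a CNN feature map
tokens = fmap.reshape(-1, 64)                       # 49 tokens, one per position
weights = [rng.normal(scale=64 ** -0.5, size=(64, 64)) for _ in range(4)]
out, attn = multi_head_self_attention(tokens, *weights, n_heads=4)
pooled = out.mean(axis=0)                           # averaged tokens for a classifier
print(out.shape, attn.shape, pooled.shape)
```

Each row of `attn` holds the weights with which one position attends to all 49 positions, so a token at one edge of the slice can draw directly on information from the opposite edge in a single layer.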

Diagram 1: High-level workflow of a generic hybrid CNN-Transformer model for tumor classification.

Experimental Protocols for Model Implementation

This section provides a detailed, actionable protocol for developing and validating a hybrid CNN-Transformer model, framed within a transfer learning paradigm.

Data Preprocessing and Augmentation Protocol

Objective: To prepare a raw MRI dataset for model training, enhancing robustness and generalizability.

- Data Sourcing: Utilize a public benchmark dataset such as the Kaggle Brain Tumor MRI Dataset (7023 images) or the Figshare dataset [34] [31]. Ensure the data is partitioned into training, validation, and test sets (e.g., 70-15-15 split).

- Noise Reduction: Apply a filtering technique to raw images to improve signal-to-noise ratio. Median Filtering or a Gaussian Bilateral Network Filter (GANF) are common choices that effectively reduce noise while preserving edges [33] [38].

- Intensity Normalization: Standardize the intensity values of all images to a common scale (e.g., 0 to 1) to ensure consistent model convergence.

- Data Augmentation: Artificially expand the training dataset to prevent overfitting. Apply real-time transformations including:

- Random rotation (±10 degrees)

- Horizontal and vertical flipping

- Brightness and contrast adjustments

- Zoom and shear operations [34]
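
A 70-15-15 partition that preserves class proportions can be produced with the standard library alone. The function below is a hypothetical helper for illustration; the class names and counts are made up, and when several slices come from the same patient the split should be made at the patient level instead, to avoid leakage between partitions.

```python
import random
from collections import defaultdict

def stratified_split(labels, fractions=(0.70, 0.15, 0.15), seed=42):
    """Return (train, val, test) index lists that preserve class proportions."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(fractions[0] * len(indices))
        n_val = round(fractions[1] * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]          # remainder goes to the test set
    return train, val, test

# Made-up label list: 100 images for each of four classes.
labels = (["glioma"] * 100 + ["meningioma"] * 100
          + ["pituitary"] * 100 + ["no_tumor"] * 100)
train_idx, val_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(val_idx), len(test_idx))
```

Fixing the seed makes the partition reproducible, which matters when results are compared across models or reported alongside published benchmarks.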

Model Construction and Training Protocol

Objective: To build a hybrid architecture leveraging transfer learning and optimize its parameters.

- Backbone Initialization: Select a pre-trained CNN (e.g., VGG16, EfficientNet-B0) and remove its classification head. This network serves as a feature extractor [34] [37].

- Attention Integration: Introduce an attention module to process the CNN's feature maps. A standard approach is to use a Custom Attention (CA) layer that employs SoftMax-weighted attention to dynamically weigh tumor-specific features [34].

- Transformer Integration:

- Classification Head: Attach a fully connected Multi-Layer Perceptron (MLP) to the output of the Transformer's [CLS] token or averaged tokens for the final classification into categories (e.g., Glioma, Meningioma, Pituitary, No Tumor).

- Hyperparameter Tuning: Utilize an optimization algorithm like the Stellar Oscillation Optimizer (SOO) or other nature-inspired metaheuristics to fine-tune hyperparameters such as learning rate, batch size, and the number of attention heads [33].

Model Evaluation and Explainability Protocol

Objective: To validate model performance and ensure its predictions are interpretable for clinical stakeholders.

- Performance Metrics: Calculate standard classification metrics on the held-out test set: Accuracy, Precision, Recall/Sensitivity, Specificity, and F1-Score (see Table 1 for benchmarks).

- Explainability Analysis: Implement Grad-CAM (Gradient-weighted Class Activation Mapping) to generate heatmaps that visually highlight the regions of the input MRI that were most influential in the model's prediction. This step is critical for building clinical trust and verifying that the model focuses on biologically plausible areas [33] [34].

- Cross-Dataset Validation: Test the final model on a different, external dataset (e.g., BraTS) to evaluate its generalization capability and robustness to domain shift [36].
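
The listed metrics all follow from the confusion matrix. The sketch below computes them per class in a one-vs-rest fashion; the function names and the toy predictions are assumptions for illustration, and macro-averaging the per-class values is one common way to report a single figure.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm

def per_class_metrics(cm):
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp
    fn = cm.sum(axis=1) - tp
    tn = cm.sum() - tp - fp - fn

    def safe(num, den):                      # division that returns 0 where den == 0
        return np.divide(num, den, out=np.zeros_like(num), where=den > 0)

    precision = safe(tp, tp + fp)
    recall = safe(tp, tp + fn)               # also called sensitivity
    specificity = safe(tn, tn + fp)
    f1 = safe(2 * precision * recall, precision + recall)
    return {"accuracy": tp.sum() / cm.sum(), "precision": precision,
            "recall": recall, "specificity": specificity, "f1": f1}

# Toy labels for 4 classes (0: glioma, 1: meningioma, 2: pituitary, 3: no tumor).
y_true = [0, 0, 1, 1, 2, 2, 3, 3]
y_pred = [0, 1, 1, 1, 2, 2, 3, 0]
metrics = per_class_metrics(confusion_matrix(y_true, y_pred, 4))
print(metrics["accuracy"], metrics["precision"], metrics["recall"])
```

Reporting per-class recall and specificity alongside accuracy is useful here because a single overall accuracy can hide weak performance on one tumor type.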

Diagram 2: Detailed protocol for developing and validating a hybrid model.

The Scientist's Toolkit: Research Reagent Solutions

For researchers embarking on replicating or building upon these hybrid models, the following table catalogs the essential "research reagents" and computational tools required.

Table 2: Essential Research Reagents and Computational Tools for Hybrid Model Development

| Category | Item / Technique | Specific Function | Exemplars / Alternatives |

|---|---|---|---|

| Data | Public MRI Datasets | Provides standardized, annotated data for training and benchmarking. | Kaggle Brain Tumor, Figshare, BraTS [34] [31] |

| Computational Backbone | Pre-trained CNN Models | Serves as a feature extractor; foundation of transfer learning. | VGG16, ResNet, EfficientNet [34] [38] [37] |

| Architectural Components | Attention Modules | Dynamically highlights salient features and spatial regions. | Custom SoftMax-weighted Attention, Channel Attention [34] [35] |

| | Transformer Encoders | Captures global contextual relationships between all image features. | Standard ViT Encoder, Swin Transformer [31] [32] |

| Optimization & Training | Hyperparameter Optimizers | Automates the tuning of model parameters for peak performance. | Stellar Oscillation Optimizer (SOO), Manta Ray Foraging Optimizer [33] |

| Validation & Analysis | Explainability Tools | Generates visual explanations to build trust and verify model focus. | Grad-CAM, SHAP [33] [34] [31] |

| Performance Metrics | Statistical Measures | Quantifies model performance across multiple dimensions. | Accuracy, Precision, Recall, F1-Score, Specificity [33] [36] |

The application of deep learning, particularly through transfer learning, has revolutionized the analysis of Magnetic Resonance Imaging (MRI) for brain tumor detection. Pre-trained models such as DenseNet169, ResNet50, VGG16, and GoogleNet, when fine-tuned on medical datasets, have demonstrated exceptional classification accuracy, often exceeding 98% [39] [3] [40]. However, the "black-box" nature of these high-performing models poses a significant barrier to their clinical adoption, because medical professionals need to understand the reasoning behind a diagnostic decision before they can trust and validate it [41] [42].

Explainable AI (XAI) has emerged as a critical subfield of artificial intelligence aimed at making the decision-making processes of complex models transparent, interpretable, and trustworthy [41]. Techniques such as Grad-CAM, LIME, and SHAP provide visual and quantitative explanations for model predictions, highlighting the specific image regions or features that influence the classification outcome [39] [40] [43]. Within the context of transfer learning for tumor detection, integrating XAI is not merely an add-on but a fundamental component for bridging the gap between algorithmic performance and clinical utility. It enables researchers and clinicians to verify that a model focuses on biologically relevant tumor hallmarks rather than spurious artifacts, thereby enhancing diagnostic confidence, facilitating earlier and more accurate treatment planning, and ultimately improving patient outcomes [44] [45] [13].

The integration of Explainable AI (XAI) with transfer learning models has yielded remarkable performance in brain tumor classification using MRI data. The following table summarizes the quantitative results from recent key studies, demonstrating the synergy between model accuracy and interpretability.

Table 1: Performance of Various XAI-Integrated Models in Brain Tumor Classification

| Model Architecture | XAI Method | Dataset Size | Key Performance Metrics | Reference |

|---|---|---|---|---|

| DenseNet169-LIME-TumorNet | LIME | 2,870 images | Accuracy: 98.78% | [39] |

| Parallel Model (ResNet101 + Xception) | LIME | Not reported | Accuracy: 99.67% | [44] |

| Improved CNN (from DenseNet121) | Grad-CAM++ | 2 datasets | Accuracy: 98.4% and 99.3% | [45] |

| ResNet50 + SSPANet | Grad-CAM++ | Not reported | Accuracy: 97%, Kappa: 95% | [40] |

| GoogleNet | Not specified | 4,517 scans | Accuracy: 99.2% | [3] |

| Custom CNN & SVC | SHAP | 7,023 images | CNN Accuracy: 98.9%, SVC Accuracy: 96.7% | [5] [43] |

| Hybrid CNN-VGG16 | SHAP | 3 datasets | Accuracy: 94%, 81%, 93% | [13] |

These results suggest that adding transparency through XAI need not compromise diagnostic accuracy: several of the XAI-integrated models in Table 1 are also among the most accurate. For instance, the DenseNet169-LIME-TumorNet model achieved an accuracy of 98.78% while providing visual explanations that build trust and facilitate clinical validation [39]. Similarly, an improved CNN model based on DenseNet121, when coupled with Grad-CAM++, achieved up to 99.3% accuracy and was reported to localize complex tumor instances well [45].

Detailed Experimental Protocols for XAI in Tumor Detection

To ensure reproducibility and robust implementation of XAI techniques, the following section outlines standardized experimental protocols. These protocols cover the essential workflow from data preparation to model explanation.

Protocol 1: Model Training with Integrated Grad-CAM Explanations

This protocol details the procedure for training a brain tumor classifier and generating explanations using Grad-CAM or its advanced variant, Grad-CAM++.

Table 2: Protocol for Model Training with Grad-CAM/Grad-CAM++

| Step | Component | Description | Purpose & Rationale |
|---|---|---|---|
| 1. Data Preparation | Dataset | Utilize a public Brain Tumor MRI Dataset (e.g., Kaggle). A typical dataset may contain 2,870 to 7,023 images drawn from T1-weighted, T2-weighted, and FLAIR MRI sequences [39] [5]. | Provides a standardized benchmark for training and evaluation. |
| | Preprocessing | Convert images to grayscale. Apply Gaussian filtering for noise reduction. Use binary thresholding and contour detection to crop the Region of Interest (ROI). Normalize pixel intensities [5] [13]. | Reduces computational complexity, minimizes irrelevant background data, and standardizes input. |
| | Augmentation | Apply affine transformations, intensity scaling, and noise injection to augment the training dataset [13]. | Improves model robustness and mitigates overfitting, especially with limited data. |
| 2. Model & Training | Base Model | Employ a pre-trained model like ResNet50 or DenseNet121 as the feature extractor [40] [45]. | Leverages transfer learning to utilize features learned from large datasets (e.g., ImageNet). |
| | Fine-Tuning | Replace and train the final fully connected layer for tumor classification. Optionally unfreeze and fine-tune deeper layers of the network [3] [13]. | Adapts the pre-trained model to the specific task of brain tumor classification. |
| | Training Loop | Train using a standard optimizer (e.g., Adam) and a cross-entropy loss function. | Standard procedure for supervised learning in classification tasks. |
| 3. XAI Explanation | Explanation Generation | For a given input image, compute the gradients of the target class score with respect to the feature maps of the final convolutional layer. Average these gradients over the spatial dimensions to obtain one weight per feature map, then generate a heatmap as the weighted sum of the feature maps followed by a ReLU activation [40] [45]. | Produces a coarse localization map highlighting important regions for the prediction. |
| | Visualization | Overlay the generated heatmap onto the original MRI scan. Use a color map (e.g., jet) to visualize regions of high and low importance. | Provides an intuitive visual explanation that clinicians can interpret. |
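The explanation step of Protocol 1 can be sketched in a few lines. The example below is a framework-agnostic NumPy illustration of the basic Grad-CAM computation (not Grad-CAM++), assuming the activations and gradients of the final convolutional layer have already been extracted from the trained network. The synthetic arrays and the helper names `grad_cam`, `upsample_nearest`, and `overlay` are assumptions made for illustration.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Compute a Grad-CAM heatmap.

    activations: (C, H, W) feature maps of the final convolutional layer.
    gradients:   (C, H, W) gradients of the target class score w.r.t. those maps.
    Returns an (H, W) heatmap scaled to [0, 1].
    """
    weights = gradients.mean(axis=(1, 2))                   # one weight per channel
    cam = np.tensordot(weights, activations, axes=(0, 0))   # weighted sum -> (H, W)
    cam = np.maximum(cam, 0.0)                              # ReLU keeps positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam

def upsample_nearest(heatmap, factor):
    """Enlarge the coarse heatmap to image resolution by pixel repetition."""
    return np.repeat(np.repeat(heatmap, factor, axis=0), factor, axis=1)

def overlay(image, heatmap, alpha=0.4):
    """Blend a [0, 1] grayscale image with a same-sized [0, 1] heatmap."""
    return (1.0 - alpha) * image + alpha * heatmap

# Synthetic stand-ins for values that would come from a real network.
activations = np.zeros((2, 4, 4))
activations[0, 1, 2] = 1.0          # channel 0 fires at one location
activations[1, 3, 0] = 1.0          # channel 1 fires elsewhere
gradients = np.stack([np.ones((4, 4)), -np.ones((4, 4))])  # channel 1 argues against the class

heatmap = grad_cam(activations, gradients)
image = np.full((16, 16), 0.5)
blended = overlay(image, upsample_nearest(heatmap, 4))
```

In practice the blended array would be rendered with a color map such as jet by a plotting library, and smoother (for example bilinear) upsampling is usually preferred over pixel repetition.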

Protocol 2: Generating Model-Agnostic Explanations with LIME

LIME (Local Interpretable Model-agnostic Explanations) explains individual predictions by approximating the complex model locally with an interpretable one.

Table 3: Protocol for Generating Explanations with LIME

| Step | Component | Description | Purpose & Rationale |
|---|---|---|---|
| 1. Model & Data | Pre-trained Model | Use a fully trained and fine-tuned model (e.g., DenseNet169) for brain tumor classification [39] [44]. | Provides the "black-box" model whose predictions need to be explained. |
| | Instance Selection | Select a specific MRI image (a single instance) for which an explanation is required. | LIME is designed to explain individual predictions. |
| 2. LIME Process | Perturbation | Generate a set of perturbed versions of the original image by randomly turning parts of the image (superpixels) on or off [39]. | Creates a local neighborhood of data points around the instance to be explained. |
| | Prediction | Obtain predictions from the black-box model for each of these perturbed samples. | Maps the perturbed inputs to the model's output. |
| | Interpretable Model | Train a simple, interpretable model (e.g., a linear model with Lasso regression) on the dataset of perturbed samples and their corresponding predictions. The features are the binary vectors indicating the presence of superpixels. | Learns a locally faithful approximation of the complex model's behavior. |
| 3. Explanation | Feature Importance | The trained linear model yields weights (coefficients) for each superpixel, indicating its importance for the specific prediction. | Identifies which image segments (superpixels) most strongly contributed to the classification. |
| | Visualization | Highlight the top-K most important superpixels on the original image. | Provides an intuitive, visual explanation for the specific prediction. |
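The LIME steps in Protocol 2 can be illustrated without any deep learning framework. The sketch below is a simplified NumPy version under several stated assumptions: the superpixels are four hand-made quadrants rather than the output of a segmentation algorithm, the "black-box" `predict_fn` is a toy function, switched-off superpixels are filled with the image mean, and a closed-form weighted ridge regression is used in place of the Lasso model named in the table. It is not the API of the `lime` library.

```python
import numpy as np

def lime_explain(image, segments, predict_fn, num_samples=500,
                 kernel_width=0.5, ridge=1e-3, seed=0):
    """Return {superpixel_id: weight} from a local weighted linear surrogate."""
    rng = np.random.default_rng(seed)
    segment_ids = np.unique(segments)
    n = len(segment_ids)

    # Perturbation: binary masks, 1 keeps a superpixel, 0 replaces it with a baseline.
    masks = rng.integers(0, 2, size=(num_samples, n))
    masks[0] = 1                                   # include the unperturbed image
    baseline = image.mean()

    # Prediction: query the black box on every perturbed image.
    preds = np.empty(num_samples)
    for i, mask in enumerate(masks):
        perturbed = image.copy()
        for seg_id, keep in zip(segment_ids, mask):
            if not keep:
                perturbed[segments == seg_id] = baseline
        preds[i] = predict_fn(perturbed)

    # Proximity weights: samples closer to the original image count more.
    distance = 1.0 - masks.mean(axis=1)            # fraction of superpixels switched off
    sample_weights = np.exp(-(distance ** 2) / kernel_width ** 2)

    # Interpretable model: weighted ridge regression with an unpenalised intercept.
    X = np.hstack([masks, np.ones((num_samples, 1))])
    penalty = ridge * np.eye(n + 1)
    penalty[-1, -1] = 0.0
    lhs = X.T @ (sample_weights[:, None] * X) + penalty
    rhs = X.T @ (sample_weights * preds)
    coef = np.linalg.solve(lhs, rhs)
    return dict(zip(segment_ids.tolist(), coef[:-1]))

# Toy setup: a bright region in the top-left quadrant drives the toy classifier.
image = np.full((8, 8), 0.2)
image[:4, :4] = 1.0
segments = np.zeros((8, 8), dtype=int)
segments[:4, 4:], segments[4:, :4], segments[4:, 4:] = 1, 2, 3
predict_fn = lambda img: float(img[:4, :4].mean())   # hypothetical black box

weights = lime_explain(image, segments, predict_fn)
top_k = sorted(weights, key=weights.get, reverse=True)[:1]   # most important superpixel
```

In this toy case only superpixel 0 influences the prediction, so it should receive by far the largest weight. With a real classifier, `predict_fn` would wrap the model's class-probability output and the superpixels would come from an image segmentation step.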

The Scientist's Toolkit: Essential Research Reagents & Materials

Successful implementation of XAI for tumor detection relies on a suite of computational tools, datasets, and software libraries. The following table catalogs the key "research reagents" essential for experiments in this field.

Table 4: Essential Research Reagents and Tools for XAI in Tumor Detection

| Category | Item / Solution | Specifications / Function | Example Use Case |
|---|---|---|---|
| Datasets | Brain Tumor MRI Dataset (Kaggle) | A public dataset often containing 2,000+ MRI images (T1-weighted, T2-weighted, and FLAIR sequences), classified into tumor subtypes (Glioma, Meningioma, Pituitary) and non-tumor cases [5]. | Serves as the primary benchmark for training and evaluating models [39] [5]. |
| | Figshare Dataset | A large-scale, publicly available dataset of brain MRIs, often used for multi-class classification and segmentation tasks [3]. | Used for validating model generalization power on larger, diverse data [3] [44]. |
| Software & Libraries | Python | The primary programming language for deep learning and XAI research, preferred for its extensive ecosystem of scientific libraries (used in ~32% of studies) [42]. | Core programming environment for implementing all workflows. |
| | TensorFlow / PyTorch / Keras | Open-source deep learning frameworks that provide the backbone for building, training, and fine-tuning convolutional neural networks [44]. | Used to implement transfer learning with architectures like ResNet, DenseNet, and VGG. |
| | XAI Libraries (SHAP, LIME, TorchCam) | Specialized libraries for generating explanations. SHAP explains output using game theory, LIME creates local surrogate models, and TorchCam provides Grad-CAM variants for PyTorch. | Generating visual and quantitative explanations for model predictions [39] [43] [13]. |
| Computational Hardware | GPUs (NVIDIA) | Graphics Processing Units are critical for accelerating the training of deep learning models and can reduce computation time from weeks to hours. | Essential for all model training and extensive hyperparameter tuning. |
| Pre-trained Models | DenseNet169 / ResNet50 / VGG16 | Established Convolutional Neural Network architectures pre-trained on the ImageNet dataset. They serve as powerful and efficient feature extractors. | Used as the backbone for transfer learning, where they are fine-tuned on medical image data [39] [40] [13]. |

The application of deep learning, particularly transfer learning, for brain tumor detection in MRI scans represents a significant advancement in medical imaging. This approach leverages pre-trained convolutional neural networks (CNNs), fine-tuned on medical datasets, to achieve high diagnostic accuracy even with limited data. By transferring knowledge from large-scale natural image datasets, these models can learn robust feature representations, overcoming the common challenge of small, annotated medical imaging datasets. The integration of data augmentation and explainable AI (XAI) further enhances model robustness and clinical trust, providing a comprehensive framework for assisting researchers and clinicians in accurate, efficient diagnosis.

Data Acquisition and Preprocessing

Publicly available datasets are crucial for benchmarking and developing brain tumor classification models. The following table summarizes commonly used datasets in recent studies.

Table 1: Summary of Brain Tumor MRI Datasets Used in Research

| Dataset | Sample Size | Classes | Key Characteristics | Citation |
|---|---|---|---|---|
| Figshare (Cheng, 2017) | 4,517 images | Glioma (1,129), Meningioma (1,134), Pituitary (1,138), Normal (1,116) | Large, multi-class; used for comprehensive model comparison | [3] |
| Br35H | 3,000 images | Normal, Tumor | Designed for binary classification (normal vs. tumor) | [11] |
| Kaggle Brain Tumor MRI | 2,000 - 7,023 images | Glioma, Meningioma, Pituitary, Normal | Often used in two variants (small and large) for testing generalization | [5] |

Preprocessing Pipeline

A standardized preprocessing pipeline is essential to ensure data quality and model performance.

- Grayscale Conversion: Images are often converted to grayscale to reduce computational complexity, as key diagnostic features are captured in intensity variations [5].

- Noise Reduction: A Gaussian filter is applied to blur images, reducing high-frequency noise and allowing the model to focus on relevant features [5].

- Intensity Normalization: Pixel intensities are normalized (e.g., min-max scaling) to a standard range (e.g., [0, 1]) to stabilize and accelerate the training process [13].

- Contrast Enhancement: Techniques such as histogram equalization or min-max contrast stretching can be used to improve the contrast between tumor regions and healthy tissue [13].

- Region of Interest (ROI) Extraction: Contour detection methods identify the largest contour, presumed to be the tumor region, and images are cropped to this ROI to eliminate irrelevant background information [5].

- Resizing: All images are resized to a uniform dimension compatible with the input layer of the chosen pre-trained model (e.g., 224x224 for models like VGG16 and DenseNet201) [13] [11].
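The pipeline steps above can be sketched end to end. The following NumPy example is a minimal illustration under simplifying assumptions: the ROI step crops to the bounding box of above-threshold pixels rather than running true contour detection, resizing uses nearest-neighbour sampling, and the input is a synthetic array rather than a real MRI slice. All function names and parameter values (`sigma`, `threshold`) are hypothetical choices, not values from the cited studies.

```python
import numpy as np

def to_grayscale(rgb):
    """(H, W, 3) -> (H, W) using standard luminance weights."""
    return rgb @ np.array([0.299, 0.587, 0.114])

def gaussian_blur(img, sigma=1.0):
    """Separable Gaussian filter with edge padding; output has the input's shape."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x ** 2 / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

def min_max_normalize(img):
    """Scale intensities to [0, 1]; a constant image maps to zeros."""
    lo, hi = img.min(), img.max()
    return (img - lo) / (hi - lo) if hi > lo else np.zeros_like(img, dtype=float)

def crop_to_foreground(img, threshold=0.1):
    """Crop to the bounding box of above-threshold pixels (stand-in for contour-based ROI)."""
    rows = np.where((img > threshold).any(axis=1))[0]
    cols = np.where((img > threshold).any(axis=0))[0]
    if rows.size == 0:
        return img
    return img[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]

def resize_nearest(img, size=(224, 224)):
    """Nearest-neighbour resize to the model's expected input size."""
    row_idx = np.arange(size[0]) * img.shape[0] // size[0]
    col_idx = np.arange(size[1]) * img.shape[1] // size[1]
    return img[row_idx][:, col_idx]

def preprocess(rgb, size=(224, 224)):
    gray = to_grayscale(rgb.astype(float))
    smooth = gaussian_blur(gray, sigma=1.0)
    norm = min_max_normalize(smooth)
    roi = crop_to_foreground(norm, threshold=0.1)
    return resize_nearest(roi, size)

# Synthetic "scan": a bright rectangle on a dark background.
scan = np.zeros((64, 80, 3))
scan[16:48, 20:60] = 200
out = preprocess(scan)
```

A production pipeline would more likely rely on an image library for filtering, contour detection, and interpolation. The point here is only the order of operations and the shape and range of the result.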

Figure 1: Data Preprocessing Workflow for MRI Brain Scans

Data Augmentation Strategies

Data augmentation artificially expands the training dataset, improving model generalization and combating overfitting. This is especially critical in medical imaging where data scarcity is common [7] [46].

Conventional and Deep Learning-Based Augmentation

Table 2: Data Augmentation Techniques for Brain Tumor MRI

| Category | Technique | Description | Purpose |
|---|---|---|---|
| Geometric Transformations | Rotation, Flipping, Translation, Scaling | Affine transformations that alter image geometry while preserving tumor labels. | Increases invariance to object orientation and position. |
| Photometric Transformations | Brightness, Contrast, Gamma Adjustments | Modifies pixel intensity values across the image. | Improves robustness to intensity variations across scanners and acquisition protocols. |
| Noise Injection | Adding Gaussian or Salt-and-Pepper Noise | Introduces random noise to simulate image acquisition artifacts. | Enhances model robustness to noisy clinical data. |
| Advanced Generative Models | Generative Adversarial Networks (GANs), Denoising Diffusion Probabilistic Models (DDPMs) | Generates entirely new, realistic tumor images. The Multi-Channel Fusion Diffusion Model (MCFDiffusion) converts healthy images to tumor images [47]. | Addresses severe class imbalance; creates diverse and complex tumor morphologies. |
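The conventional techniques in the table (geometric, photometric, and noise-based) can be combined into a single random augmentation function. The sketch below uses NumPy only and makes some simplifying assumptions: rotation is restricted to multiples of 90 degrees to avoid interpolation, and the parameter ranges for gamma, brightness, and noise are illustrative guesses rather than values from the cited studies. Generative approaches such as GANs or diffusion models are outside its scope.

```python
import numpy as np

def augment(img, rng):
    """Return a randomly augmented copy of a 2-D image with values in [0, 1]."""
    out = img
    # Geometric: random horizontal flip and rotation by a multiple of 90 degrees.
    if rng.random() < 0.5:
        out = np.fliplr(out)
    out = np.rot90(out, k=int(rng.integers(0, 4)))
    # Photometric: gamma and brightness jitter.
    out = out ** rng.uniform(0.8, 1.2)
    out = out * rng.uniform(0.9, 1.1)
    # Noise injection: additive Gaussian noise.
    out = out + rng.normal(0.0, 0.02, size=out.shape)
    return np.clip(out, 0.0, 1.0)

rng = np.random.default_rng(0)
img = np.linspace(0.0, 1.0, 64 * 64).reshape(64, 64)   # synthetic square image
batch = [augment(img, rng) for _ in range(8)]
```

Because every transformation here preserves the class label, the augmented images can reuse the label of the source image. Note that 90-degree rotations swap height and width for non-square inputs.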

Transfer Learning Model Architectures and Training

Transfer learning involves taking a CNN pre-trained on a large dataset (typically ImageNet) and fine-tuning it on the medical imaging task.

Model Selection and Performance

Researchers have evaluated numerous pre-trained architectures. The table below summarizes reported performance metrics from recent studies.

Table 3: Performance Comparison of Transfer Learning Models for Brain Tumor Classification

| Model Architecture | Reported Accuracy | Key Strengths | Citation |
|---|---|---|---|
| GoogleNet | 99.2% | High accuracy on multi-class classification (Figshare dataset). | [3] |
| Proposed DenseTransformer (DenseNet201 + Transformer) | 99.41% | Captures both local features and long-range dependencies via self-attention. | [11] |
| Lightweight CNN (5-layer custom) | 99% | Effective with limited data (189 images); suitable for resource-constrained environments. | [48] |
| Hybrid CNN-VGG16 | 94% | Demonstrates effective knowledge transfer across multiple neurological datasets. | [13] |
| MobileNetV2 | >95% (Comparative) | Lightweight architecture, efficient for potential clinical deployment. | [3] |

End-to-End Model Training Workflow

The following diagram and protocol describe the standard workflow for adapting a pre-trained model for brain tumor classification.

Figure 2: Transfer Learning and Fine-tuning Workflow

Experimental Protocol: Model Fine-tuning

Base Model and Classifier Replacement:

- Select a pre-trained model (e.g., DenseNet201, VGG16) [13] [11].

- Remove the original final fully-connected classification head.

- Append a new, randomly initialized classifier tailored to the brain tumor task. This typically consists of a global average pooling layer, followed by one or more dense layers with ReLU activation and dropout for regularization, and a final softmax/output layer with units equal to the number of classes (e.g., 4 for Figshare dataset).
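To make the structure of the replacement classifier concrete, the following NumPy sketch runs a forward pass through a head of the kind described above: global average pooling, one dense layer with ReLU, dropout, and a softmax output. It is a framework-agnostic illustration, not a training recipe. The feature-map shape (1,920 channels at 7x7, as a DenseNet201 backbone would produce for 224x224 inputs), the hidden size of 256, and the function names are assumptions for illustration, and the random features stand in for real backbone outputs.

```python
import numpy as np

def init_head(in_channels, hidden_units, num_classes, rng):
    """Randomly initialise the new head (He-style scaling for the ReLU layer)."""
    return {
        "w1": rng.normal(0.0, np.sqrt(2.0 / in_channels), (in_channels, hidden_units)),
        "b1": np.zeros(hidden_units),
        "w2": rng.normal(0.0, np.sqrt(1.0 / hidden_units), (hidden_units, num_classes)),
        "b2": np.zeros(num_classes),
    }

def head_forward(feature_maps, params, dropout_rate=0.5, rng=None):
    """feature_maps: (N, C, H, W). Dropout is applied only when rng is given (training)."""
    pooled = feature_maps.mean(axis=(2, 3))                      # global average pooling -> (N, C)
    hidden = np.maximum(pooled @ params["w1"] + params["b1"], 0.0)  # dense + ReLU
    if rng is not None:
        keep = rng.random(hidden.shape) >= dropout_rate
        hidden = hidden * keep / (1.0 - dropout_rate)            # inverted dropout
    logits = hidden @ params["w2"] + params["b2"]
    logits = logits - logits.max(axis=1, keepdims=True)          # numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)                  # softmax over classes

rng = np.random.default_rng(0)
params = init_head(in_channels=1920, hidden_units=256, num_classes=4, rng=rng)
features = rng.random((2, 1920, 7, 7))        # stand-in for frozen backbone outputs
probs = head_forward(features, params)         # inference mode: no dropout
```

In a deep learning framework the same head would be expressed with built-in pooling, dense, and dropout layers, and only these new parameters would be trained while the convolutional base stays frozen.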

Layer Freezing and Initial Training:

- Freeze the weights of the pre-trained convolutional base. This prevents the pre-learned, general-purpose features from being destroyed in the initial training phase.