Optimizing Pharmacophore Model Sensitivity: A Comprehensive Guide for Enhanced Virtual Screening

Abstract

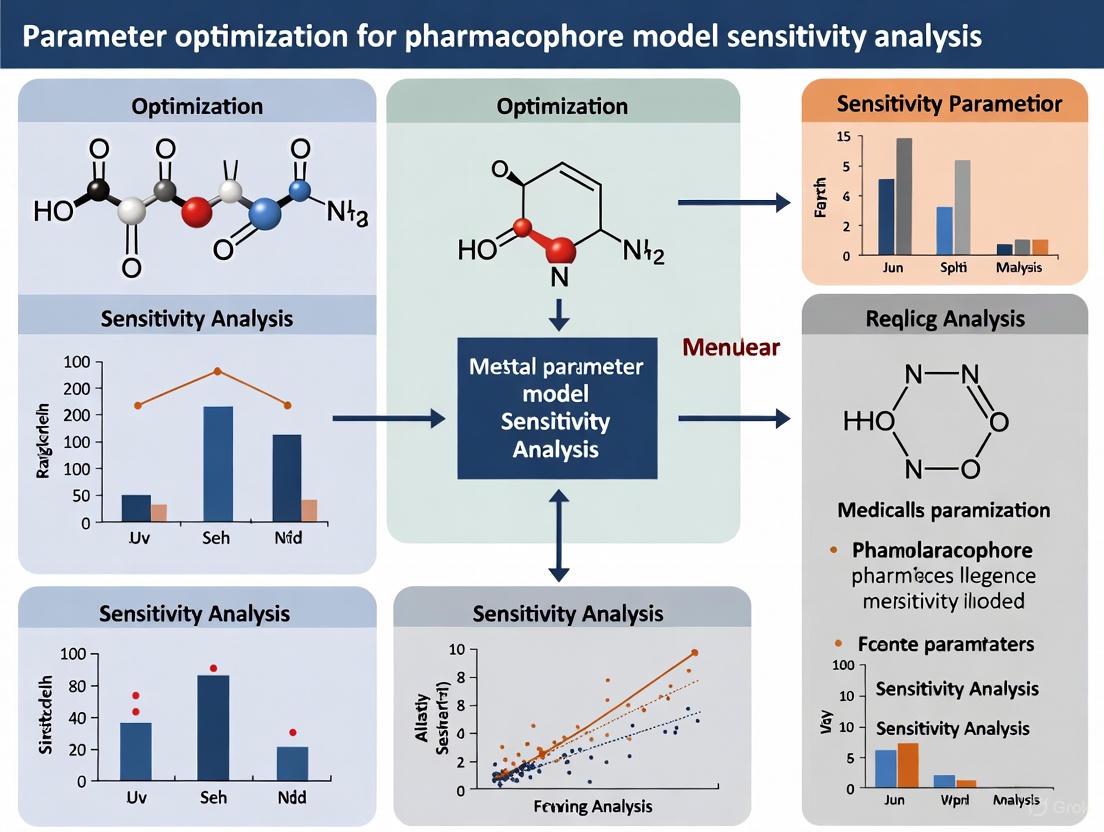

This article provides a systematic guide for researchers and drug development professionals on optimizing pharmacophore model sensitivity—a critical parameter for successful virtual screening. We explore the foundational principles of pharmacophore features and their direct impact on a model's ability to correctly identify active compounds. The content covers established and emerging methodological approaches, including structure-based and ligand-based modeling, alongside advanced AI-driven techniques. A dedicated section addresses common pitfalls and provides actionable strategies for parameter tuning to maximize sensitivity without compromising specificity. Finally, we detail rigorous validation protocols using statistical metrics like enrichment factor and GH score, supplemented by case studies from recent kinase inhibitor campaigns. This holistic framework aims to equip scientists with the knowledge to build highly sensitive, robust pharmacophore models that significantly improve hit rates in drug discovery.

Understanding Pharmacophore Sensitivity: Core Concepts and Key Features

Defining Sensitivity and Specificity in Pharmacophore Modeling

Frequently Asked Questions (FAQs)

FAQ 1: What is the fundamental difference between sensitivity and specificity in the context of pharmacophore-based virtual screening?

Sensitivity, often measured by the true positive rate, reflects a pharmacophore model's ability to correctly identify known active compounds from a database. Specificity, measured by the true negative rate, indicates the model's ability to correctly reject inactive compounds or decoys. A high-quality model must balance both; an overly sensitive model may retrieve many actives but with numerous false positives, while an overly specific model might miss potential actives. The goal of parameter optimization is to achieve a model with high sensitivity without compromising specificity [1].

FAQ 2: Which quantitative metrics should I use to formally evaluate the sensitivity and specificity of my pharmacophore model?

A primary metric for evaluating screening performance is the Enrichment Factor (EF) [1]. The EF measures how much better the model identifies true active inhibitors than random selection: it is the fraction of actives in the screened (retrieved) subset divided by the fraction of actives in the entire database. A higher EF indicates better model performance. Additionally, the fitness score of a model, which evaluates the alignment between a ligand's conformation and the pharmacophore model, is a critical internal metric. The Receiver Operating Characteristic (ROC) curve and the area under it (AUC) give a comprehensive view by plotting the model's true positive rate (sensitivity) against its false positive rate (1 - specificity) across all classification thresholds [1] [2].
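The ROC AUC has a convenient interpretation: it equals the probability that a randomly chosen active is scored higher than a randomly chosen decoy. The sketch below computes it directly from that definition. It assumes that higher pharmacophore fit scores indicate more active-like compounds and that every compound has a score (for example, non-matching compounds could be assigned 0, an assumption of this sketch); ties count as half a win. The function name `roc_auc` is illustrative and not taken from any pharmacophore package.

```python
def roc_auc(scores, labels):
    """ROC AUC as P(random active scores higher than random decoy); ties count 0.5.

    scores: fit scores (higher = more active-like); labels: 1 for active, 0 for decoy.
    """
    actives = [s for s, y in zip(scores, labels) if y == 1]
    decoys = [s for s, y in zip(scores, labels) if y == 0]
    if not actives or not decoys:
        raise ValueError("need at least one active and one decoy")
    wins = 0.0
    for a in actives:
        for d in decoys:
            if a > d:
                wins += 1.0
            elif a == d:
                wins += 0.5
    return wins / (len(actives) * len(decoys))


# Hypothetical fit scores for three actives and three decoys
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4]
labels = [1, 1, 0, 1, 0, 0]
print(round(roc_auc(scores, labels), 3))  # 0.889 (8 of 9 active/decoy pairs ranked correctly)
```

The pairwise loop costs O(actives × decoys), which is fine for validation sets of a few thousand compounds; for larger sets, the equivalent rank-based Mann-Whitney U formulation gives the same value more efficiently.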

FAQ 3: My model has high enrichment but a low fitness score for known actives. What parameters should I investigate?

This discrepancy often points to an overly restrictive model. We recommend investigating the following parameters:

- Feature Tolerance: Increase the distance tolerance (e.g., from 1.0 Å to 1.5 Å or 2.0 Å) for pharmacophore features like hydrogen bond donors/acceptors or hydrophobic centers. This makes the model more forgiving of minor spatial deviations [1].

- Exclusion Volumes: Review the number and size of exclusion spheres. An excess of restrictive volumes may sterically block valid active compounds from matching. Consider removing or reducing the radii of exclusion volumes in non-critical regions [2].

- Feature Weighting: If your software allows, adjust the weights of individual pharmacophore features. A model might be overly dependent on one particular feature that is not essential for biological activity.
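To see how tolerance and weighting interact, the sketch below scores a pre-aligned ligand against a small pharmacophore query. It is a deliberately simplified model, not the fitness function of any particular program: each query feature has a type, a centre, and a hypothetical weight; a feature counts as matched if any ligand feature of the same type lies within the tolerance radius; and the fit is the sum of matched weights. The coordinates, weights, and the names `QUERY` and `weighted_fit` are illustrative assumptions. Real tools also handle conformer generation, alignment, and one-to-one feature assignment.

```python
import math

# Hypothetical query: (feature type, centre in Å, weight). The hydrophobe is down-weighted.
QUERY = [
    ("HBD", (0.0, 0.0, 0.0), 1.0),
    ("HBA", (4.0, 0.0, 0.0), 1.0),
    ("HYD", (2.0, 3.0, 0.0), 0.5),
]


def weighted_fit(query, ligand_features, tolerance):
    """Sum the weights of query features matched by a same-type ligand feature within `tolerance` Å.

    Simplification: one ligand feature may satisfy several query features.
    """
    fit = 0.0
    for ftype, centre, weight in query:
        if any(lt == ftype and math.dist(centre, lp) <= tolerance for lt, lp in ligand_features):
            fit += weight
    return fit


# A pre-aligned active whose hydrophobic group sits about 1.3 Å from the query centre
ligand = [("HBD", (0.2, 0.1, 0.0)), ("HBA", (3.8, 0.3, 0.0)), ("HYD", (2.0, 4.3, 0.0))]
for tol in (1.0, 1.5):
    print(tol, weighted_fit(QUERY, ligand, tol))  # prints 1.0 2.0, then 1.5 2.5
```

At 1.0 Å the active reaches a fit of only 2.0 because its hydrophobe falls outside the sphere; relaxing the tolerance to 1.5 Å recovers the full 2.5. Every increase in tolerance also lets more decoys match, so specificity should be re-checked after each change.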

FAQ 4: How can I use molecular dynamics (MD) simulations to improve the real-world reliability of my pharmacophore models?

Relying on a single, static crystal structure can lead to models that are not representative of dynamic binding interactions. Using MD simulations to generate an ensemble of protein-ligand conformations allows for the creation of multiple pharmacophore models [3]. You can then:

- Use a consensus approach (like the Common Hits Approach) across all models for virtual screening, which makes the process less sensitive to any single, poorly-performing model [3].

- Create a Hierarchical Graph Representation of Pharmacophore Models (HGPM) to visualize all unique models and their relationships. This tool helps you strategically select a diverse and representative set of models for virtual screening, rather than relying on a single "best" model, especially when active/inactive compound data is scarce [3].
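The consensus idea can be sketched as a simple vote: each pharmacophore model (for example, one per MD-derived conformation) returns a hit list, and compounds are ranked by how many models retrieved them. This is a simplified illustration in the spirit of the Common Hits Approach, not a reproduction of its published scoring. The compound IDs, the function name `consensus_hits`, and the `min_models` cutoff are hypothetical.

```python
from collections import Counter


def consensus_hits(model_hits, min_models=1):
    """Rank compounds by the number of pharmacophore models that retrieved them.

    model_hits: one iterable of compound IDs per model.
    Returns (compound_id, n_models) pairs with n_models >= min_models, most-supported first.
    """
    counts = Counter()
    for hits in model_hits:
        counts.update(set(hits))  # count each compound at most once per model
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [(cid, n) for cid, n in ranked if n >= min_models]


# Hypothetical hit lists from three MD-derived models
hits_per_model = [["c1", "c2", "c3"], ["c2", "c3"], ["c3", "c4"]]
print(consensus_hits(hits_per_model, min_models=2))  # [('c3', 3), ('c2', 2)]
```

Because no single model decides the outcome, a poorly performing model only shifts vote counts rather than adding or removing compounds outright.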

FAQ 5: What are the best practices for creating a benchmark dataset to test my model's sensitivity and specificity?

A robust validation dataset is crucial for unbiased evaluation. Best practices include:

- Using established benchmark libraries like the Directory of Useful Decoys (DUD-e), which provides a curated set of active molecules and property-matched decoys for specific protein targets [1].

- Ensuring your dataset includes a diverse set of actives and decoys. Diversity can be assessed via chemical fingerprints (e.g., ECFP4) and clustering methods to avoid bias [3].

- Comparing the physicochemical properties (e.g., Molecular Weight, logP, Total Polar Surface Area) of the actives and decoys screened by your model using statistical tests like the Student's t-test. A good model will show no significant difference in these properties between the screened actives and decoys, indicating it is selecting actives based on pharmacophoric features rather than simple physicochemical properties [1].
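The property comparison can be sketched as follows. The text describes a Student's t-test; to stay within the Python standard library, this sketch instead computes Welch's t statistic and estimates its two-sided p-value by permutation, a swapped-in, distribution-free technique. If SciPy is available, `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the parametric Welch test directly. The molecular-weight values and the helper names `welch_t` and `permutation_p` are made up for illustration.

```python
import random
import statistics


def welch_t(a, b):
    """Welch's t statistic for two samples with possibly unequal variances."""
    se = (statistics.variance(a) / len(a) + statistics.variance(b) / len(b)) ** 0.5
    return (statistics.mean(a) - statistics.mean(b)) / se


def permutation_p(a, b, n_perm=10_000, seed=0):
    """Two-sided permutation p-value for Welch's t (group labels shuffled n_perm times)."""
    rng = random.Random(seed)
    observed = abs(welch_t(a, b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        if abs(welch_t(pooled[: len(a)], pooled[len(a):])) >= observed:
            extreme += 1
    return (extreme + 1) / (n_perm + 1)  # +1 avoids reporting p = 0


# Hypothetical molecular weights (Da) of retrieved actives and retrieved decoys
mw_actives = [300.0, 310.0, 320.0, 330.0, 340.0]
mw_decoys = [305.0, 315.0, 325.0, 335.0, 345.0]
print(permutation_p(mw_actives, mw_decoys))  # large p: no evidence of an MW bias
```

A non-significant result is consistent with, but does not prove, the absence of a physicochemical bias; with small hit lists the test has little power, so the property distributions are worth inspecting as well.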

Troubleshooting Guides

Issue 1: Low Sensitivity (Poor Recall of Known Actives)

Problem: Your pharmacophore model fails to retrieve a significant number of known active compounds during virtual screening.

| Possible Cause | Diagnostic Steps | Solution |
|---|---|---|
| Overly restrictive features | Check the fitness scores of known actives. If they are consistently just below the cutoff, the tolerance may be too tight. | Relax the distance and angle tolerances for key pharmacophore features. |
| Incorrect feature types | Re-evaluate the protein-ligand interaction patterns. Ensure critical features like metal coordination or halogen bonds are included if present. | Add missing essential pharmacophore features based on a detailed analysis of the binding site. |
| Excessive exclusion volumes | Temporarily disable exclusion volumes and re-run the screening. If sensitivity improves significantly, the volumes are too restrictive. | Strategically remove or reduce the radius of exclusion volumes that are not in direct conflict with the ligand binding mode. |

Issue 2: Low Specificity (High False Positive Rate)

Problem: Your model retrieves a large number of compounds, but most are confirmed to be inactive (decoys).

| Possible Cause | Diagnostic Steps | Solution |
|---|---|---|
| Too few defining features | Count the number of features in your model. A model with fewer than 3-4 features is often not selective enough. | Add more specific features from the binding site, such as a unique vector-based feature (e.g., hydrogen bond directionality). |
| Features are too generic | Analyze if features are located in common, non-specific regions of the binding site. | Incorporate rare or unique chemical features specific to your target, such as a cationic or anionic center. |
| Insufficient geometric constraints | The model may be matching features in the wrong spatial context. | Add exclusion volumes to define the shape of the binding pocket more accurately and prevent unrealistic matches. |

Experimental Protocols for Model Validation

Protocol 1: Validation Using the DUD-e Benchmark Set

Objective: To quantitatively assess the sensitivity and specificity of a pharmacophore model using a standardized dataset of actives and decoys.

- Dataset Preparation: Download the relevant target dataset (e.g., HIV-1 protease, acetylcholinesterase, cyclin-dependent kinase 2) from the DUD-e website [1].

- Pharmacophore Screening: Use your pharmacophore model as a query to screen the entire DUD-e dataset for your target in a virtual screening platform (e.g., Pharmit) [1].

- Result Analysis: From the screening results, count the number of true positives (TP, retrieved actives), false positives (FP, retrieved decoys), true negatives (TN, correctly rejected decoys), and false negatives (FN, missed actives).

- Metric Calculation:

  - Sensitivity (Recall) = TP / (TP + FN)

  - Specificity = TN / (TN + FP)

  - Enrichment Factor (EF) = (TP / N) / (A / D), where N is the total number of compounds selected (TP + FP), A is the total number of actives in the database, and D is the total database size [1].
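The three formulas above translate directly into code. The counts in the usage example are hypothetical and chosen only to keep the arithmetic easy to follow; in practice they come from comparing the hit list against the DUD-e active and decoy labels. The function name `screening_metrics` is illustrative.

```python
def screening_metrics(tp, fp, tn, fn):
    """Sensitivity, specificity, and enrichment factor from confusion-matrix counts."""
    n_actives = tp + fn            # A: actives in the database
    n_total = tp + fp + tn + fn    # D: total database size
    n_selected = tp + fp           # N: compounds retrieved by the model
    sensitivity = tp / n_actives
    specificity = tn / (tn + fp)
    # EF is undefined when the model retrieves nothing
    ef = (tp / n_selected) / (n_actives / n_total) if n_selected else float("nan")
    return {"sensitivity": sensitivity, "specificity": specificity, "ef": ef}


# Hypothetical screen of 100 actives and 5,000 decoys
m = screening_metrics(tp=80, fp=250, tn=4750, fn=20)
print({k: round(v, 2) for k, v in m.items()})
# {'sensitivity': 0.8, 'specificity': 0.95, 'ef': 12.36}
```

EF depends on the composition of the benchmark: the maximum achievable value is D / A (here 5,100 / 100 = 51), so EF values are only directly comparable across datasets with similar active-to-decoy ratios.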

Protocol 2: Generating a Consensus Model from MD Simulations

Objective: To create a more robust and sensitive pharmacophore model by integrating information from molecular dynamics simulations.

- MD Simulation: Run a molecular dynamics simulation (e.g., 100-300 ns) of the protein-ligand complex using software like AMBER or GROMACS [3].

- Snapshot Extraction: Extract snapshots from the stabilized trajectory at regular intervals (e.g., every 1 ns).

- Pharmacophore Generation: For each snapshot, generate a structure-based pharmacophore model using software such as LigandScout [3].

- Model Consolidation: Instead of picking one model, use all models in a consensus screening approach, or use a tool like the Hierarchical Graph Representation (HGPM) to analyze the feature hierarchy and relationships, and then select a representative subset of models that capture the essential, persistent interactions for your virtual screening campaign [3].
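A first step toward consolidating per-snapshot models is to find which distinct feature combinations occur and how often. The sketch below does only that: it treats each snapshot's model as a set of feature labels and counts identical sets. It is a much simpler stand-in for the hierarchical graph (HGPM) analysis, not an implementation of it, and it assumes features already carry consistent labels across snapshots (here, hypothetical feature-type-plus-residue names). The function name `unique_models` and its `min_fraction` parameter are illustrative.

```python
from collections import Counter


def unique_models(snapshot_models, min_fraction=0.0):
    """Group per-snapshot pharmacophores into unique feature sets.

    Returns (sorted feature labels, fraction of snapshots) pairs, most frequent first,
    keeping only sets seen in at least `min_fraction` of snapshots.
    """
    n = len(snapshot_models)
    counts = Counter(frozenset(m) for m in snapshot_models)
    return [
        (sorted(features), count / n)
        for features, count in counts.most_common()
        if count / n >= min_fraction
    ]


# Hypothetical feature labels from four snapshots
snapshots = [
    {"HBD_Glu81", "HBA_Leu83", "HYD_Ile10"},
    {"HBD_Glu81", "HBA_Leu83", "HYD_Ile10"},
    {"HBD_Glu81", "HBA_Leu83"},
    {"HBD_Glu81", "HBA_Leu83", "HYD_Ile10"},
]
for features, frac in unique_models(snapshots):
    print(frac, features)
```

Raising `min_fraction` (for example, to 0.1) discards combinations seen only transiently; the threshold is a judgment call, not a value from the cited work.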

Table 1: Performance Metrics of Pharmacophore Modeling Tools on DUD-e Benchmark

The following table summarizes typical performance metrics for various tools as reported in the literature, illustrating the trade-off between sensitivity and specificity [1].

| Tool / Method | Target (Family) | Sensitivity (Recall) | Specificity | Enrichment Factor (EF) |
|---|---|---|---|---|
| ELIXIR-A | HIVPR (Protease) | 0.85 | 0.92 | 25.4 |
| ELIXIR-A | ACES (Esterase) | 0.78 | 0.95 | 21.7 |
| ELIXIR-A | CDK2 (Kinase) | 0.81 | 0.89 | 19.5 |
| LigandScout | HIVPR (Protease) | 0.80 | 0.90 | 22.1 |
| Schrödinger Phase | HIVPR (Protease) | 0.82 | 0.88 | 20.8 |

Table 2: Key Research Reagent Solutions for Pharmacophore Modeling

This table lists essential software tools and datasets that function as key "research reagents" in this field [1] [2] [3].

| Item Name | Type | Function/Brief Explanation |
|---|---|---|
| DUD-e | Dataset | A benchmark database containing known active molecules and property-matched decoys for unbiased validation of virtual screening methods. |
| Pharmit | Software | An interactive online tool for pharmacophore-based and shape-based virtual screening of large compound libraries. |
| LigandScout | Software | A software application for creating structure-based and ligand-based pharmacophore models and performing virtual screening. |
| ELIXIR-A | Software | An open-source, Python-based pharmacophore refinement tool that helps unify interaction data from multiple pharmacophore models. |
| DiffPhore | Software | A knowledge-guided diffusion AI model that generates 3D ligand conformations to maximally map onto a given pharmacophore model. |
| CpxPhoreSet & LigPhoreSet | Dataset | High-quality datasets of 3D ligand-pharmacophore pairs used for training and refining AI-based pharmacophore models like DiffPhore. |

Workflow and Relationship Diagrams

Pharmacophore Validation Workflow

Sensitivity vs. Specificity Relationship

FAQ: Fundamental Concepts and Applications

Q1: What are the essential pharmacophoric features and their roles in molecular recognition? Four of the most commonly used pharmacophoric features are Hydrogen Bond Donor (HBD), Hydrogen Bond Acceptor (HBA), Hydrophobic (H), and Aromatic (Ar). Together with other feature types, such as positive and negative ionizable centers, they represent the key steric and electronic features necessary for optimal supramolecular interactions with a biological target [4]. The table below details their roles.

Table 1: Essential Pharmacophoric Features and Their Functions in Molecular Recognition

| Feature | Abbreviation | Role in Molecular Recognition & Binding |
|---|---|---|
| Hydrogen Bond Donor | HBD | Forms a hydrogen bond by donating a hydrogen atom to a hydrogen bond acceptor on the target, often from groups like O-H or N-H [5]. |
| Hydrogen Bond Acceptor | HBA | Forms a hydrogen bond by accepting a hydrogen atom from a donor on the target, typically through electronegative atoms like oxygen or nitrogen [5] [6]. |
| Hydrophobic | H | Drives binding via van der Waals forces and the hydrophobic effect, often with non-polar aliphatic or aromatic regions of the target [5] [7]. |
| Aromatic | Ar | Engages in π-π stacking, cation-π, or polar-π interactions with aromatic residues in the binding pocket [5]. |

Q2: What is the difference between structure-based and ligand-based pharmacophore modeling? The choice of approach depends on the available input data [4].

- Structure-Based Pharmacophore Modeling: This method relies on the three-dimensional structure of a macromolecular target, often from sources like the Protein Data Bank (PDB). The model is generated by extracting the interaction pattern from a protein-ligand complex or by analyzing the topology of an empty binding site to define complementary features [8] [4].

- Ligand-Based Pharmacophore Modeling: This approach is used when the 3D structure of the target is unknown. It involves aligning the 3D structures of multiple known active molecules to identify the common pharmacophore features shared among them that are responsible for their biological activity [8] [4].

Q3: How can molecular dynamics (MD) simulations improve my pharmacophore model? Traditional structure-based models derived from a single crystal structure can be sensitive to the specific coordinates and may include transient features or miss others due to protein flexibility. MD simulations generate multiple snapshots of the protein-ligand complex over time, capturing its dynamic behavior. This information can be used to:

- Evaluate Feature Stability: Identify which pharmacophore features from the crystal structure are stable throughout the simulation and which appear only rarely (potentially as artifacts) [9].

- Create a Consensus Model: Derive a merged pharmacophore model that incorporates all features present in either the initial structure or any of the simulation snapshots, providing a more comprehensive representation of the binding interactions [9].

- Prioritize Features: The frequency with which a feature appears during the simulation can be used to rank its importance for inclusion in the final model [9].

Troubleshooting Guide: Sensitivity and Specificity

Table 2: Common Issues and Solutions in Pharmacophore Model Optimization

| Problem | Potential Causes | Solutions & Troubleshooting Steps |
|---|---|---|
| Low Sensitivity (Poor recall of known actives) | Model is too restrictive; features are too specific or numerous. | 1. Review Feature Selection: Define some features as "optional" in your pharmacophore query. 2. Adjust Tolerances: Slightly increase the radius (tolerance) of feature spheres. 3. Reduce Features: Remove features that are not critical for binding, especially those with low stability in MD simulations [9]. |
| Low Specificity (High recall of decoys/inactives) | Model is too permissive; lacks essential discriminatory features. | 1. Add Critical Features: Incorporate a key interaction proven by mutagenesis studies or present in all highly active ligands. 2. Use Exclusion Volumes: Add exclusion volumes (XVols) to represent the protein's steric constraints and prevent the mapping of clashing compounds [8] [4]. 3. Refine Geometry: Adjust the spatial arrangement of features to better match the bioactive conformation. |
| Model fails to identify novel active chemotypes | Model may be over-fitted to the chemical scaffold of the training set. | 1. Use Diverse Training Set: For ligand-based models, ensure the training set contains structurally diverse molecules with a common binding mode [8]. 2. Employ a Structure-Based Approach: If possible, create a model directly from the protein-ligand complex to abstract away from specific ligand scaffolds [8]. |

Experimental Protocols for Model Validation

Protocol: Validation of a Pharmacophore Model Using a Decoy Set

This protocol is essential for evaluating the performance of a pharmacophore model before its use in prospective virtual screening [10].

Prepare Validation Sets:

- Actives: Compile a set of known active compounds for your target. The direct interaction should be experimentally proven (e.g., by receptor binding or enzyme activity assays). Cell-based assay data should be avoided due to confounding factors like pharmacokinetics [8]. Public repositories like ChEMBL [8] are excellent sources.

- Decoys: Obtain a set of decoy molecules (assumed inactives) with similar 1D properties (e.g., molecular weight, logP, number of HBD/HBA) but different 2D topologies compared to the actives. The DUD-E website (http://dude.docking.org) can automatically generate optimized decoys for your active set [8] [10]. A ratio of 50 decoys per active is recommended [8].

Perform Virtual Screening:

- Screen the combined library of actives and decoys using your pharmacophore model as a query.

Calculate Validation Metrics:

- Analyze the screening results to calculate the following key metrics [8] [10]:

  - Sensitivity (True Positive Rate) = (Ha / A) × 100

    - Ha: Number of active compounds retrieved (hits).

    - A: Total number of active compounds in the database.

  - Specificity = (1 - (Hd / D)) × 100

    - Hd: Number of decoy compounds retrieved.

    - D: Total number of decoy compounds in the database.

  - Enrichment Factor (EF): Measures how much better the model is at identifying actives compared to random selection [8].

  - Goodness of Hit (GH) Score: A composite metric that balances the recall of actives with the false positive rate [10].
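For reference, the EF and GH score are commonly written in the Güner-Henry notation, where Ha is the number of actives retrieved, Ht the total number of hits retrieved, A the number of actives in the database, and D the total number of compounds in the database (actives plus decoys; note that this differs from the decoy-only D in the specificity formula above). The sketch below implements those standard definitions; the function names and example counts are hypothetical.

```python
def enrichment_factor(ha, ht, a, d):
    """EF = (Ha / Ht) / (A / D); d is the total database size (actives + decoys)."""
    return (ha / ht) / (a / d)


def gh_score(ha, ht, a, d):
    """Güner-Henry goodness-of-hit score, from 0 to 1 (1 = ideal model)."""
    # Equivalent to 0.75 * yield (Ha / Ht) + 0.25 * recall (Ha / A)
    recall_term = ha * (3 * a + ht) / (4 * ht * a)
    # Penalizes retrieved decoys relative to all decoys in the database
    fp_penalty = 1 - (ht - ha) / (d - a)
    return recall_term * fp_penalty


# Hypothetical screen: 10 actives among 510 compounds; 20 hits, 8 of them active
print(round(enrichment_factor(8, 20, 10, 510), 2))  # 20.4
print(round(gh_score(8, 20, 10, 510), 3))           # 0.488
```

An ideal model that retrieves all actives and nothing else (Ha = Ht = A) scores GH = 1, while retrieved decoys lower both the yield term and the penalty term.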

Protocol: Assessing Pharmacophore Feature Stability via MD Simulations

This protocol helps refine a structure-based pharmacophore by incorporating protein-ligand dynamics [9].

System Setup:

- Start with the high-resolution protein-ligand complex structure (e.g., from PDB).

- Solvate the complex in a water box, add counterions to neutralize the system, and assign appropriate force field parameters to both the protein and the ligand.

Simulation and Analysis:

- Run an all-atom molecular dynamics simulation (e.g., for 20-200 ns, depending on the system) [9] [5].

- Save snapshots of the trajectory at regular intervals (e.g., every 100 ps).

- For each saved snapshot, generate a structure-based pharmacophore model.

- Create a merged pharmacophore model that contains every unique feature observed in the initial crystal structure and across all analyzed snapshots.

Feature Prioritization:

- Calculate the frequency of occurrence for each pharmacophore feature across the entire simulation trajectory.

- Use this frequency data to rank features. Features present in the crystal structure but with a low occurrence frequency (<10%) during the simulation may be artifacts and considered for removal. Conversely, features that consistently appear (>90%) are likely critical for binding [9].
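The merging and prioritization steps can be sketched with set operations and counts. Each snapshot's pharmacophore is represented as a set of feature labels, assumed to be named consistently across snapshots (for example, feature type plus interacting residue; the labels below are hypothetical), and the <10% and >90% cut-offs from the protocol are exposed as parameters. The function name `prioritize_features` is illustrative.

```python
from collections import Counter


def prioritize_features(crystal, snapshots, low=0.10, high=0.90):
    """Merge features from the crystal structure and all snapshots, then rank by frequency.

    Returns {feature: (frequency across snapshots, label)}, most frequent first.
    """
    n = len(snapshots)
    counts = Counter(f for snap in snapshots for f in set(snap))
    merged = set(crystal).union(*snapshots)  # every feature seen anywhere
    report = {}
    for feature in sorted(merged, key=lambda f: (-counts[f], f)):
        freq = counts[feature] / n
        if freq > high:
            label = "likely critical"
        elif freq < low and feature in crystal:
            label = "possible crystal artifact"
        else:
            label = "intermediate"
        report[feature] = (freq, label)
    return report


crystal = {"HBA_Leu83", "HBD_Asp86", "HYD_Ile10"}
snapshots = (
    [{"HBA_Leu83", "HBD_Asp86"}] * 5
    + [{"HBA_Leu83", "HBA_Lys33"}] * 3
    + [{"HBA_Leu83"}] * 2
)
for feature, (freq, label) in prioritize_features(crystal, snapshots).items():
    print(f"{feature:10s} {freq:.0%}  {label}")
```

In this toy run, the hydrophobic feature seen only in the crystal structure is flagged as a possible artifact, while HBA_Lys33 appears only during the simulation and is retained in the merged model for further review.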

Key Research Reagents and Computational Tools

Table 3: Essential Resources for Pharmacophore Modeling and Validation

| Resource/Solution | Type | Function & Application in Research |
|---|---|---|
| Protein Data Bank (PDB) | Database | Primary repository for experimentally determined 3D structures of proteins and nucleic acids, serving as the foundational input for structure-based pharmacophore modeling [8] [4]. |
| ChEMBL / DrugBank | Database | Public compound repositories providing curated bioactivity data (e.g., IC50, Ki) for known active molecules, essential for building ligand training sets and validation decoy sets [8]. |
| DUD-E (Directory of Useful Decoys, Enhanced) | Online Tool | Generates optimized decoy molecules for a given list of actives, which are crucial for the theoretical validation of pharmacophore models to avoid over-optimistic performance estimates [8] [10]. |
| LigandScout | Software | A leading program for both structure-based and ligand-based pharmacophore model generation, analysis, and virtual screening [9] [5]. |
| ZINCPharmer / Pharmit | Online Tool | Web-based platforms that allow for the virtual screening of large chemical libraries (e.g., ZINC) using a pharmacophore model as a query [10] [5]. |
| Molecular Dynamics Software (e.g., GROMACS, AMBER) | Software | Packages used to run MD simulations to assess the stability of pharmacophore features and incorporate protein flexibility into the models [11] [9] [10]. |

Workflow and Relationship Visualizations

Diagram 1: Pharmacophore Model Development and Optimization Workflow

Diagram 2: Relationship Between Core Features and Optimization Strategies

The Impact of Exclusion Volumes (XVols) on Model Selectivity

Frequently Asked Questions (FAQs)

1. What are Exclusion Volumes (XVols) and what is their primary function? Exclusion Volumes (XVols) are steric constraints in a pharmacophore model that represent regions in the binding pocket occupied by the protein structure itself. Their primary function is to prevent the mapping of compounds that would be inactive due to steric clashes with the protein surface, thereby improving the model's selectivity [4] [8].

2. How do XVols improve the results of a virtual screening? By representing the physical boundaries of the binding site, XVols filter out molecules whose atoms would occupy sterically forbidden space. This raises the enrichment factor (the model's ability to prioritize active compounds over inactive ones) and can increase the hit rate of prospective virtual screening campaigns; reported hit rates for pharmacophore-based virtual screening typically range from 5% to 40% [8] [12].

3. When should I consider manually adding or removing XVols? Manual adjustment is crucial when the automated placement of XVols is insufficient. This includes refining the model based on a deeper analysis of the binding site residues to better represent its shape, or deleting automatically generated XVols that might be too restrictive and incorrectly exclude potentially active compounds with slightly different binding modes [13] [14].

4. Can a model with too many XVols be detrimental? Yes. An excessive number of XVols can make a model overly restrictive, causing an undesirable drop in sensitivity. A model tuned too closely to a single ligand's binding mode (a form of over-fitting) may incorrectly reject true active compounds. The goal is to balance selectivity (rejecting inactives) with sensitivity (retrieving actives) [14].
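Conceptually, an exclusion-volume check is a distance test: a pose is rejected if any ligand atom falls inside an exclusion sphere. The sketch below treats atoms as points and XVols as (centre, radius) spheres; real programs may also account for atomic radii or tolerances, so the `scale` factor here is only an illustrative stand-in for shrinking or enlarging XVols. The coordinates and the function name `clashing_xvols` are hypothetical.

```python
import math


def clashing_xvols(ligand_atoms, xvols, scale=1.0):
    """Indices of exclusion spheres penetrated by any ligand atom.

    ligand_atoms: [(x, y, z), ...] in Å; xvols: [((x, y, z), radius), ...].
    `scale` multiplies every radius (values below 1 shrink the XVols).
    """
    return [
        i
        for i, (centre, radius) in enumerate(xvols)
        if any(math.dist(centre, atom) < radius * scale for atom in ligand_atoms)
    ]


xvols = [((5.0, 0.0, 0.0), 1.5), ((0.0, 5.0, 0.0), 1.5)]
compact_pose = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0), (3.0, 0.0, 0.0)]
extended_pose = compact_pose + [(4.0, 0.0, 0.0)]  # last atom reaches into XVol 0

print(clashing_xvols(compact_pose, xvols))              # [] -> pose accepted
print(clashing_xvols(extended_pose, xvols))             # [0] -> pose rejected
print(clashing_xvols(extended_pose, xvols, scale=0.6))  # [] -> accepted once XVols shrink
```

The last call illustrates the trade-off described above: shrinking XVols rescues a slightly larger ligand, but the same change would also admit decoys that genuinely clash with the protein.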

Troubleshooting Guides

Problem: Poor Selectivity – Model Retrieves Too Many Inactive Compounds

- Symptoms: Virtual screening hit lists are large, but the experimentally confirmed hit rate is very low. The model retrieves compounds that fit the feature pattern but are sterically incompatible with the target.

- Potential Causes & Solutions:

  - Cause 1: Missing or insufficient exclusion volume definition.

    - Solution: Generate a receptor-based excluded volumes shell. Most modern pharmacophore modeling software (e.g., Schrödinger's Phase, LigandScout) can automatically add XVols based on the 3D protein structure [13].

  - Cause 2: XVols are too small or their tolerance is too high.

    - Solution: Increase the radii of XVols (or reduce their tolerance) in regions that ligands must not occupy, then re-validate to confirm that known actives are still retrieved.

Problem: Over-Restrictive Model – Model Misses Known Active Compounds

- Symptoms: The model fails to recognize known active compounds from a validation test set, or virtual screening returns an exceptionally small number of hits.

- Potential Causes & Solutions:

  - Cause 1: Excessively large or inaccurately placed XVols.

    - Solution: Systematically review and remove XVols that are not critical for defining the binding site's steric constraints. Focus on preserving XVols that correspond to backbone atoms or large, inflexible side chains [14].

  - Cause 2: The model does not account for protein flexibility or minor ligand adjustments.

    - Solution: Slightly reduce the radius of XVols in areas where side chains are known to have some rotational freedom. Use multiple protein conformations (e.g., from molecular dynamics simulations) to create a more averaged XVols definition [15].

Problem: Inconsistent Performance Across Different Software

- Symptoms: A model validated in one software platform performs poorly when used for screening in another.

- Potential Causes & Solutions:

  - Cause: Differences in how software algorithms handle the matching of compounds against XVols and other pharmacophore features.

    - Solution: When comparing tools, make sure you understand each program's specific XVol tolerance parameters. Standardize your validation protocol using a robust dataset of known active and inactive compounds to calibrate the model's performance in each software environment [16].

Experimental Protocol: Optimizing XVols for Model Selectivity

The following protocol outlines a systematic method for integrating and refining XVols to maximize pharmacophore model selectivity, suitable for inclusion in a thesis methodology section.

1. Objective

To construct a high-selectivity pharmacophore model by incorporating exclusion volumes (XVols) that accurately represent the steric constraints of the target protein's binding pocket.

2. Materials and Software Requirements

Table: Essential Research Reagents and Solutions

| Item | Function in Protocol |
|---|---|
| Protein Data Bank (PDB) Structure | Source of the 3D atomic coordinates of the target protein, used to define the binding site and generate XVols [4]. |
| Pharmacophore Modeling Software (e.g., Schrödinger Phase, LigandScout) | Platform for hypothesis generation, manual manipulation of XVols, and performing virtual screening [13] [17]. |
| Validation Dataset (Active & Inactive Compounds/Decoys) | A pre-compiled set of molecules for theoretically assessing model quality by calculating enrichment factors and other metrics [8] [12]. |
| Directory of Useful Decoys, Enhanced (DUD-E) | Online resource for generating optimized decoy molecules with similar 1D properties but different 2D topologies compared to active molecules, used for rigorous validation [8]. |

3. Step-by-Step Methodology

Step 1: Initial Structure Preparation

- Obtain the 3D structure of your target, preferably from the PDB.

- Prepare the protein structure using a standard protein preparation workflow. This includes adding hydrogen atoms, assigning correct protonation states, and filling in missing side chains or loops [13].

Step 2: Generate a Preliminary Pharmacophore Model with XVols

- Using the prepared structure, create a structure-based pharmacophore model. If a co-crystallized ligand is present, an e-Pharmacophore method is recommended.

- In the hypothesis settings, select the option to "Create receptor-based excluded volumes shell" [13]. This will automatically populate the binding site with XVols.

Step 3: Theoretical Validation and Benchmarking

- Screen the validation dataset (containing known actives and inactives/decoys) against the initial model.

- Calculate key performance metrics: Enrichment Factor (EF), yield of actives, and specificity.

- Record these baseline metrics for the model with automatically generated XVols.

Step 4: Manual Refinement of XVols

- Visually inspect the binding site and the generated XVols. Pay close attention to residues with flexible side chains.

- Use the software's "Manage Excluded Volumes" tool to:

  - Add XVols: For critical atoms or residues that are not adequately covered, add XVols manually by selecting the specific atoms [13].

  - Remove XVols: Delete XVols that are likely to be too restrictive, for example, in areas where small conformational changes could accommodate a ligand.

- The goal is to create a definition that closely matches the physical shape of the binding pocket without being overly rigid.
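One way to make the manual review in Step 4 more systematic is to count, for each XVol, how many aligned poses of known actives penetrate it; spheres that block actives are the first candidates for removal or shrinking. This is a suggested heuristic rather than part of the cited protocol, and it assumes the actives' poses are already aligned to the pharmacophore (the coordinates and function names below are hypothetical). Visual inspection remains necessary, since an XVol that blocks an active may still encode a real steric wall if that active's pose is wrong.

```python
import math
from collections import Counter


def blocking_counts(active_poses, xvols):
    """For each XVol index, count the active poses with at least one atom inside it."""
    counts = Counter()
    for atoms in active_poses:
        for i, (centre, radius) in enumerate(xvols):
            if any(math.dist(centre, atom) < radius for atom in atoms):
                counts[i] += 1
    return counts


def prune_xvols(active_poses, xvols, max_blocked=0):
    """Keep only XVols that block at most `max_blocked` known actives."""
    counts = blocking_counts(active_poses, xvols)
    return [xv for i, xv in enumerate(xvols) if counts[i] <= max_blocked]


xvols = [((5.0, 0.0, 0.0), 1.5), ((0.0, 5.0, 0.0), 1.5), ((0.0, 0.0, 5.0), 1.0)]
active_poses = [
    [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0)],  # enters XVol 0
    [(0.0, 0.0, 0.0), (0.0, 0.0, 4.5)],  # enters XVol 2
    [(0.0, 0.0, 0.0), (4.2, 0.0, 0.0)],  # enters XVol 0
]
print(dict(blocking_counts(active_poses, xvols)))  # {0: 2, 2: 1}
print(len(prune_xvols(active_poses, xvols)))       # 1 (only XVol 1 blocks no actives)
```

After pruning, Step 5 still applies: re-screen the full validation set, because removing an XVol that blocked actives may also let decoys through.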

Step 5: Post-Refinement Validation and Iteration

- Re-run the virtual screening of the validation dataset using the refined model.

- Re-calculate the performance metrics (EF, Yield, Specificity).

- Iterate Step 4 until the model shows a strong balance, achieving high enrichment of actives while effectively excluding inactives. A successful refinement should show a significant increase in the Enrichment Factor [12].

Workflow Visualization

The following diagram illustrates the logical workflow for optimizing exclusion volumes.

Quantitative Impact of XVol Refinement

The table below summarizes real-world data from published studies demonstrating the performance improvement achieved through the systematic refinement of exclusion volumes.

Table: Impact of XVol Refinement on Model Selectivity in Published Studies

| Study Context | Model Version | Number of XVols | Active Compounds Found | Inactive/Decoys Found | Enrichment Factor (EF) | Reference |
|---|---|---|---|---|---|---|
| IKK-β Inhibitor Discovery | Initial Model (Hypo1) | Not Specified | 34 (27.4%) | 197 | 15.7 | [12] |
| | Refined with XVols (Hypo1-R1) | Not Specified | 33 (26.6%) | 116 | 23.2 | [12] |
| | Refined with XVols & Shape (Hypo1-R1-S1) | Not Specified | 31 (25.0%) | 94 | 25.8 | [12] |
| 17β-HSD2 Inhibitor Discovery | Model 1 | 54 | 8 | 0 | Not Reported | [14] |
| | Model 2 | Not Specified | 8 | 0 | Not Reported | [14] |
| | Model 3 | 56 | 6 | 0 | Not Reported | [14] |

Core Concepts and Sensitivity Profiles

The choice between structure-based and ligand-based drug design methodologies is fundamental, as each possesses distinct sensitivities, applicability domains, and performance characteristics. Understanding these differences is critical for parameter optimization and reliable model development.

Table 1: Core Characteristics and Sensitivities of Drug Design Approaches

| Feature | Structure-Based Drug Design (SBDD) | Ligand-Based Drug Design (LBDD) |
|---|---|---|
| Primary Data | 3D structure of the protein target (e.g., from X-ray, Cryo-EM, NMR) [18] [19] | Known active and inactive ligand molecules and their properties [20] [18] |
| Key Techniques | Molecular docking, Molecular Dynamics (MD), Free Energy Perturbation (FEP) [20] [18] | Quantitative Structure-Activity Relationship (QSAR), Pharmacophore modeling, Molecular similarity search [20] [18] [21] |
| Sensitivity to Data Scarcity | Less sensitive; can be applied to novel targets with little/no known ligands if a structure is available [22] | Highly sensitive; requires a sufficient number of known active compounds for model training [22] [18] |
| Sensitivity to Novel Chemotypes | Lower sensitivity; can, in principle, generate and identify novel chemotypes outside known ligand space [22] [23] | High sensitivity; models are biased towards chemical space similar to the training data, limiting novelty [22] [23] |
| Sensitivity to Protein Flexibility | Highly sensitive; static protein structures may not represent dynamic binding events [20] [18] | Not directly sensitive; implicitly captures some effects via ligand structure-activity data [20] |
| Key Sensitivity Advantage | Exploits physical principles of binding, enabling exploration of unprecedented chemistry [22] [24] | High throughput and efficiency when ample ligand data is available; excels at pattern recognition [18] [25] |
| Key Sensitivity Limitation | Accuracy depends heavily on the quality and relevance of the protein structure and scoring functions [20] [18] | Limited applicability domain; poor extrapolation to chemotypes not represented in training set [22] [18] |

Frequently Asked Questions (FAQs) and Troubleshooting

FAQ 1: My structure-based docking campaign is producing poor results. The poses look incorrect, and the scoring does not correlate with experimental activity. What are the primary parameters to optimize?

This is a common problem, usually rooted in the simplifying assumptions built into docking: a static, imperfectly prepared receptor and an approximate scoring function.

Problem: Inadequate Protein Structure Preparation.

- Troubleshooting Guide: The input protein structure is critical.

- Check Protonation States: Ensure key residues (e.g., His, Asp, Glu) in the binding site have correct protonation states at physiological pH.

- Add Missing Residues: Homology models or structures with poor electron density may have missing loops or side chains that affect the pocket.

- Consider Protein Flexibility: A single rigid protein structure may not be representative. If available, use an ensemble of protein conformations (from multiple crystal structures, MD simulations, or conformational sampling) for docking to account for binding site flexibility [20] [18].

- Handle Water Molecules: Decide on the role of key water molecules in the binding site. Test docking protocols that both include (as part of the protein) and exclude specific, conserved water molecules [20].

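Why histidine deserves special attention when checking protonation states can be seen from the Henderson-Hasselbalch relation. The sketch below uses approximate textbook side-chain pKa values for free amino acids (an assumption; the protein environment can shift them by several units, which is what tools such as PROPKA estimate). The function name is illustrative.

```python
def fraction_protonated(pka: float, ph: float = 7.4) -> float:
    """Fraction of a titratable group in its protonated (acid) form.

    Henderson-Hasselbalch: pH = pKa + log10([A-]/[HA]), hence
    [HA] / ([HA] + [A-]) = 1 / (1 + 10**(pH - pKa)).
    """
    return 1.0 / (1.0 + 10 ** (ph - pka))


# Approximate free-solution side-chain pKa values (illustrative only).
reference_pka = {"His": 6.0, "Asp": 3.9, "Glu": 4.1}

for residue, pka in reference_pka.items():
    print(f"{residue}: {fraction_protonated(pka):.3f} protonated at pH 7.4")
```

Asp and Glu come out almost fully deprotonated. Histidine, with a pKa only about 1.4 units below pH 7.4, lies close enough to the transition that a modest environmental shift can flip its preferred state. This is why its protonation and tautomer assignment in the binding site are worth inspecting by hand.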

Problem: Limitations of the Scoring Function.

- Troubleshooting Guide: Scoring functions are approximations and have known limitations.

- Use Consensus Scoring: Combine rankings from multiple, different scoring functions to improve the reliability of hit identification [20] [25].

- Rescore with Advanced Methods: Take top-ranked docking poses and rescore them using more rigorous, but computationally expensive, methods like Free Energy Perturbation (FEP) or MM/GBSA [20] [18].

- Validate Your Protocol: Always perform a control docking with a known native ligand or a well-characterized inhibitor to verify that your protocol can reproduce the correct binding mode (pose validation) and rank it favorably (scoring validation) [18].


FAQ 2: My ligand-based QSAR model performs well on the training set but fails to predict the activity of new compound series. How can I improve its generalizability and sensitivity to novel scaffolds?

This is a classic sign of overfitting: the model has memorized the training data instead of learning the underlying structure-activity relationship (SAR).

- Problem: Overfitting and Limited Applicability Domain.

- Troubleshooting Guide:

- Simplify the Model: Reduce the number of molecular descriptors. Use feature selection algorithms (e.g., genetic algorithms, stepwise regression) to retain only the most statistically significant and meaningful descriptors [21].

- Define the Applicability Domain: Explicitly define the chemical space where your model can make reliable predictions. This can be based on the ranges of descriptors in the training set. Flag new compounds that fall outside this domain as "unreliable" [22] [18].

- Incorporate 3D Information: If possible, move from 2D-QSAR to 3D-QSAR (e.g., CoMFA, CoMSIA). 3D-QSAR models, particularly those grounded in physics-based representations, can sometimes generalize better across diverse ligands [18].

- Add Negative Data: Incorporate data on inactive or weakly active compounds to help the model learn what features are detrimental to activity, improving its discriminatory power [20].

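The applicability-domain check described above can be as simple as a descriptor bounding box. Below is a minimal sketch, assuming descriptors have already been computed for every compound; the descriptor choice, values, and function names are hypothetical. Distance-based definitions (e.g., leverage or nearest-neighbour distance) are common, stricter alternatives.

```python
from typing import List, Sequence, Tuple


def descriptor_ranges(training: Sequence[Sequence[float]]) -> List[Tuple[float, float]]:
    """Min and max of each descriptor column over the training set."""
    return [(min(col), max(col)) for col in zip(*training)]


def in_domain(x: Sequence[float], ranges: List[Tuple[float, float]]) -> bool:
    """True if every descriptor of x lies inside the training range."""
    return all(lo <= value <= hi for value, (lo, hi) in zip(x, ranges))


# Hypothetical descriptors: [molecular weight, cLogP, H-bond donors]
training_set = [
    [320.4, 2.1, 1],
    [410.5, 3.4, 2],
    [365.0, 2.8, 0],
]
ranges = descriptor_ranges(training_set)

for name, x in {"cpd_A": [350.0, 3.0, 1], "cpd_B": [520.0, 5.2, 3]}.items():
    label = "reliable" if in_domain(x, ranges) else "unreliable (outside domain)"
    print(name, label)
```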

FAQ 3: I have some ligand data and a protein structure. How can I combine these approaches to mitigate the sensitivity limitations of each?

A hybrid approach is often the most powerful strategy, leveraging the strengths of both methods to compensate for their individual weaknesses [20] [18] [25].

- Problem: Isolating SBDD or LBDD approaches leads to missed opportunities or high false-positive rates.

- Troubleshooting Guide: Implement a sequential or parallel workflow.

- Sequential Filtering: This is highly efficient. First, use a fast ligand-based method (e.g., 2D similarity or a QSAR model) to screen a massive virtual library and reduce it to a manageable size. Then, apply more computationally intensive structure-based methods (docking, FEP) to this pre-filtered set [18] [25]. This saves resources while applying the most powerful tools to the most promising candidates.

- Parallel Screening with Consensus: Run both ligand-based and structure-based virtual screens independently on the same compound library. You can then either:

- Take the union of the top-ranked compounds from each list to maximize the chance of finding actives (increasing sensitivity), or

- Take the intersection of the top-ranked compounds to increase confidence in the selection (increasing specificity) [25].

- Structure-Based Pharmacophore: Generate a pharmacophore model based on key interactions observed in the protein's binding site (e.g., from a crystal structure or a docked potent ligand). This pharmacophore, which embodies structure-based information, can then be used as a query for high-throughput ligand-based screening [24].

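The union-versus-intersection choice in parallel screening comes down to two set operations on the top-ranked lists. A minimal sketch with made-up scores; note that the two screens rank in opposite directions (similarity: higher is better; docking energy: lower is better).

```python
def top_k(scores: dict, k: int, higher_is_better: bool = True) -> list:
    """Return the IDs of the k best-scoring compounds."""
    ordered = sorted(scores, key=scores.get, reverse=higher_is_better)
    return ordered[:k]


# Hypothetical outputs of two independent screens of the same library.
ligand_based = {"c1": 0.91, "c2": 0.85, "c3": 0.40, "c4": 0.77, "c5": 0.30}     # similarity
structure_based = {"c1": -9.8, "c2": -6.1, "c3": -9.2, "c4": -8.7, "c5": -5.0}  # docking energy

lb_top = set(top_k(ligand_based, 3))
sb_top = set(top_k(structure_based, 3, higher_is_better=False))

print("union (favours sensitivity):", sorted(lb_top | sb_top))
print("intersection (favours specificity):", sorted(lb_top & sb_top))
```

Here the union keeps four candidates (c1 to c4) and the intersection only two (c1, c4), which illustrates the sensitivity/specificity trade-off in miniature.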

Detailed Experimental Protocols

Protocol 1: Structure-Based Virtual Screening using Molecular Docking

This protocol is used to computationally screen large compound libraries against a known protein structure to identify potential hits [20] [18].

- Objective: To identify novel small molecules that bind to a specific target protein with high predicted affinity, focusing on optimizing docking parameters for sensitivity.

Materials:

- Protein Structure: High-resolution (preferably < 2.5 Å) 3D structure from PDB (e.g., PDB ID: 7ONS for PARP1 [24]).

- Compound Library: Database of small molecules in a ready-to-dock format (e.g., SDF, MOL2).

- Software: Docking software (e.g., Glide [22], AutoDock, GOLD).

- Computing Hardware: High-performance computing (HPC) cluster or cloud computing resources.

Step-by-Step Methodology:

- Protein Preparation:

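- Obtain the protein structure from the PDB. Remove non-essential water molecules and ions, and extract the co-crystallized ligand from the receptor file, keeping a copy of its coordinates for grid definition and re-docking validation.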

- Add hydrogen atoms and assign correct protonation states to residues in the binding site using a tool like Epik or PROPKA.

- Energy minimize the protein structure to relieve any steric clashes introduced during preparation.

- Binding Site Grid Generation:

- Define the coordinates and dimensions of the binding site cavity for docking. This is typically centered on a known co-crystallized ligand or a key residue.

- Generate a grid file that the docking program will use to evaluate ligand interactions.

- Ligand Library Preparation:

- Prepare the ligand library by generating plausible 3D conformations for each molecule.

- Assign correct bond orders, charges, and protonation states at physiological pH (e.g., using LigPrep or MOE).

- Molecular Docking:

- Before screening the full library, validate the protocol by re-docking the native ligand and confirming that it reproduces the crystallographic pose (Root-Mean-Square Deviation, RMSD < 2.0 Å).

- Run the docking calculation with the validated protocol. For initial screening, standard precision (SP) mode is often used.

- Post-Docking Analysis:

- Analyze the top-ranking compounds based on docking score. Visually inspect the predicted binding poses to check for sensible interactions (e.g., hydrogen bonds, hydrophobic contacts, salt bridges).

- Apply post-docking filters, such as ADMET property prediction or interaction with key residues, to further prioritize hits.

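The re-docking check compares the docked pose of the native ligand with its crystallographic pose. Below is a minimal sketch of that RMSD calculation. It assumes both poses list heavy atoms in the same order and share the receptor's coordinate frame, so no superposition is applied. It also ignores symmetry-equivalent atoms, which dedicated tools correct for. The coordinates are made up.

```python
import math
from typing import Sequence, Tuple

Coord = Tuple[float, float, float]


def rmsd(pose: Sequence[Coord], reference: Sequence[Coord]) -> float:
    """Heavy-atom RMSD between two poses of the same ligand (same atom order)."""
    if len(pose) != len(reference):
        raise ValueError("poses must have the same number of atoms")
    squared = sum(
        (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2 + (a[2] - b[2]) ** 2
        for a, b in zip(pose, reference)
    )
    return math.sqrt(squared / len(pose))


crystal = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0), (1.5, 1.5, 0.0)]
redocked = [(0.3, 0.0, 0.0), (1.8, 0.4, 0.0), (1.5, 1.5, 0.5)]

value = rmsd(redocked, crystal)
print(f"RMSD = {value:.2f} Å ->", "pass" if value < 2.0 else "fail")
```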

Table 2: Key Research Reagent Solutions for SBDD

| Reagent / Resource | Function / Description | Example / Source |
|---|---|---|
| Protein Structure | Provides the 3D atomic coordinates of the target for docking and analysis. | Protein Data Bank (PDB) [19] |
| Homology Model | A computationally predicted 3D protein model used when an experimental structure is unavailable. | AlphaFold2, MODELLER [18] |
| Virtual Compound Library | A digital collection of compounds for in silico screening. | ZINC, ChEMBL, in-house corporate libraries [24] |
| Docking Software | Algorithm that predicts the bound pose and scores the binding affinity of a ligand. | Glide, AutoDock Vina, GOLD [22] [20] |
| Molecular Dynamics Software | Simulates the physical movements of atoms and molecules over time to study dynamics. | GROMACS, AMBER, Desmond [18] |

Protocol 2: Developing a 3D-QSAR Model using Comparative Molecular Field Analysis (CoMFA)

This protocol describes the creation of a 3D-QSAR model to understand the relationship between the molecular fields of a set of ligands and their biological activity [21].

- Objective: To build a predictive 3D-QSAR model that identifies steric and electrostatic features critical for biological activity, optimizing for model robustness and predictive power.

- Materials:

- Dataset: A series of molecules with known biological activities (e.g., IC50, Ki). A minimum of 20-30 compounds is typically required.

- Software: 3D-QSAR software (e.g., SYBYL, MOE, Open3DQSAR).

- Step-by-Step Methodology:

- Ligand Preparation and Alignment:

- This is the most critical step. Generate low-energy 3D conformations for all molecules in the dataset.

- Align the molecules to a common template or using a pharmacophore hypothesis. The alignment should reflect a presumed consistent binding mode to the target.

- Field Calculation:

- Place the aligned molecules inside a 3D grid.

- Calculate steric (Lennard-Jones) and electrostatic (Coulombic) interaction energies between a probe atom and each molecule at every grid point.

- Partial Least Squares (PLS) Analysis:

- Before fitting, split the dataset into a training set (to build the model) and a test set (held out for external validation).

- Correlate the calculated field values (independent variables) with the biological activity data (dependent variable) for the training set using PLS regression.

- Model Validation:

- Assess the model using the cross-validated correlation coefficient (q²) from the training set. A q² > 0.5 is generally considered acceptable.

- Use the external test set to calculate the predictive correlation coefficient (r²_pred), which is the true measure of the model's predictive power [21].

- Model Interpretation:

- Visualize the results as 3D coefficient contour maps. These maps show regions where specific steric or electrostatic features are favorable or unfavorable for activity, providing a visual guide for molecular design.

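The two validation statistics in the CoMFA protocol reduce to short formulas: q² = 1 − PRESS/SS on leave-one-out predictions, and r²_pred computed on the external test set. The sketch below uses one common definition of r²_pred, referenced to the training-set mean. The pIC50 values are hypothetical stand-ins for the output of your PLS software.

```python
from typing import Sequence


def q_squared(y_obs: Sequence[float], y_cv_pred: Sequence[float]) -> float:
    """Cross-validated q² = 1 - PRESS / SS, computed on the training set."""
    y_mean = sum(y_obs) / len(y_obs)
    press = sum((y - p) ** 2 for y, p in zip(y_obs, y_cv_pred))
    ss = sum((y - y_mean) ** 2 for y in y_obs)
    return 1.0 - press / ss


def r2_pred(y_test: Sequence[float], y_test_pred: Sequence[float],
            y_train_mean: float) -> float:
    """External predictive r², referenced to the training-set mean activity."""
    press = sum((y - p) ** 2 for y, p in zip(y_test, y_test_pred))
    ss = sum((y - y_train_mean) ** 2 for y in y_test)
    return 1.0 - press / ss


# Hypothetical pIC50 values: observed vs. leave-one-out predictions from PLS.
train_obs = [5.0, 6.0, 7.0, 8.0]
train_loo = [5.4, 5.8, 7.3, 7.6]
test_obs, test_pred = [5.5, 7.5], [5.9, 7.1]

q2 = q_squared(train_obs, train_loo)
r2p = r2_pred(test_obs, test_pred, y_train_mean=sum(train_obs) / len(train_obs))
print(f"q2 = {q2:.2f}, r2_pred = {r2p:.2f}")
```

With these numbers q² is 0.91, comfortably above the 0.5 threshold mentioned above. Real datasets are far larger, and a single external split of two compounds would not be informative.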

Workflow and Pathway Visualizations

Diagram 1: Decision Workflow for Model Selection

Diagram 2: Sequential LB-SB Screening Workflow

Frequently Asked Questions

1. What constitutes a high-quality training set for pharmacophore model development? A high-quality training set is a balanced collection of confirmed active and inactive compounds, alongside carefully selected decoy molecules that act as negative controls. The actives should be diverse, ideally representing multiple chemical scaffolds, to prevent the model from learning narrow, scaffold-specific patterns instead of the fundamental pharmacophore [26] [27]. Inactives provide direct negative feedback, while decoys, which are property-matched to actives but are structurally distinct, help assess the model's ability to discriminate true binders from non-binders in virtual screening [28] [29].

2. My pharmacophore model has high enrichment in training but performs poorly in validation. What could be wrong? This is a classic sign of overfitting. Common causes include:

- Insufficient Data: The training set is too small or lacks chemical diversity [27].

- Data Leakage: The validation set contains compounds that are structurally too similar to those in the training set. To avoid this, split your data based on molecular scaffolds to ensure the model is validated on genuinely novel chemotypes [27].

- Inadequate Decoys: Using random compounds as decoys can make screening too easy and give a false sense of model accuracy. Decoys should be matched to actives in terms of molecular weight and other physicochemical properties to provide a rigorous test [28].

3. How can I generate meaningful decoy molecules for my target? Utilize established public databases like DUD-E (Directory of Useful Decoys: Enhanced) and its optimized version DUDE-Z [28]. These resources provide pre-generated decoys that are matched to known actives for a wide range of targets, ensuring they have similar physical properties (making them hard to distinguish based on simple filters) but different 2D topologies (making them unlikely to bind) [28] [29]. This approach is considered a best practice for benchmarking.

4. Why is sensitivity analysis important for my pharmacophore model's parameters? Sensitivity analysis helps you understand which model parameters (e.g., feature tolerances, weights) have the most significant impact on your output (e.g., enrichment factor) [30] [31]. This allows you to:

- Focus Optimization: Identify and prioritize the tuning of the most sensitive parameters, making the calibration process more efficient [31].

- Improve Robustness: Analyze how uncertainty or variability in input parameters affects model predictions, leading to more reliable and interpretable models [32] [30].
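The decoy principle in FAQ 3 (similar physical properties, different 2D topology) can be illustrated with a toy filter. This is a simplified sketch, not the DUD-E or DUDE-Z procedure, which match on several more properties. It assumes precomputed molecular weight, logP, and fingerprints stored as sets of on-bits. The tolerances and all data are hypothetical.

```python
from typing import Dict, FrozenSet, List


def tanimoto(fp_a: FrozenSet[int], fp_b: FrozenSet[int]) -> float:
    """Tanimoto similarity of two fingerprints stored as sets of on-bits."""
    union = fp_a | fp_b
    return len(fp_a & fp_b) / len(union) if union else 0.0


def pick_decoys(active: Dict, candidates: List[Dict], mw_tol: float = 25.0,
                logp_tol: float = 0.5, max_sim: float = 0.3) -> List[str]:
    """Keep candidates that match the active's properties but not its topology."""
    picked = []
    for cand in candidates:
        property_matched = (abs(cand["mw"] - active["mw"]) <= mw_tol
                            and abs(cand["logp"] - active["logp"]) <= logp_tol)
        topologically_distinct = tanimoto(cand["fp"], active["fp"]) < max_sim
        if property_matched and topologically_distinct:
            picked.append(cand["id"])
    return picked


active = {"id": "act1", "mw": 350.0, "logp": 2.5, "fp": frozenset({1, 2, 3, 4, 5})}
candidates = [
    {"id": "d1", "mw": 360.0, "logp": 2.7, "fp": frozenset({10, 11, 12, 13})},  # kept
    {"id": "d2", "mw": 345.0, "logp": 2.4, "fp": frozenset({1, 2, 3, 4, 6})},   # too similar
    {"id": "d3", "mw": 480.0, "logp": 2.5, "fp": frozenset({20, 21})},          # MW mismatch
    {"id": "d4", "mw": 355.0, "logp": 3.5, "fp": frozenset({30, 31})},          # logP mismatch
]
print(pick_decoys(active, candidates))
```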

Troubleshooting Guides

Problem: Poor Virtual Screening Performance and Low Hit Rates

Issue: Your pharmacophore model fails to enrich active compounds at the top of a virtual screening ranking list.

Diagnosis and Solution:

| Step | Action | Rationale & Details |
|---|---|---|
| 1 | Audit Training Set Composition | Ensure actives cover multiple chemical series. Use Bemis-Murcko scaffold analysis to confirm diversity. A cluster of similar ligands will lead to overfitted, non-generalizable models [2] [27]. |
| 2 | Validate with Rigorous Decoys | Test model performance on a benchmark set with property-matched decoys from DUDE-Z. Low enrichment indicates poor discriminatory power, likely due to a non-robust training set [28]. |
| 3 | Apply a Sensitivity Analysis | Perform a global sensitivity analysis (GSA) to identify which pharmacophore features most influence the screening outcome. Methods like Sobol' or Morris can quantify each parameter's contribution [32] [30]. |
| 4 | Optimize Critical Parameters | Use the results of the sensitivity analysis to guide parameter optimization. Techniques like Markov Chain Monte Carlo (MCMC) can be used to find the parameter set that maximizes your performance metric [31]. |
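"Low enrichment" in step 2 is usually quantified with the enrichment factor: the hit rate among the top x% of the ranked list divided by the hit rate of the whole database. A minimal sketch on a hypothetical benchmark follows. Ties in score are broken arbitrarily, and rounding the top-x% count is one of several conventions.

```python
from typing import Sequence


def enrichment_factor(scores: Sequence[float], is_active: Sequence[bool],
                      fraction: float = 0.01) -> float:
    """EF at the top `fraction` of the ranked list (higher score = better)."""
    n_total = len(scores)
    n_actives = sum(is_active)
    n_top = max(1, round(fraction * n_total))
    ranked = sorted(zip(scores, is_active), key=lambda pair: pair[0], reverse=True)
    actives_in_top = sum(active for _, active in ranked[:n_top])
    return (actives_in_top / n_top) / (n_actives / n_total)


# Hypothetical benchmark: 1,000 compounds, 10 actives, 6 of which rank in the top 10.
scores = [1000 - i for i in range(1000)]
is_active = [i < 6 or i >= 996 for i in range(1000)]
ef1 = enrichment_factor(scores, is_active, fraction=0.01)
print(f"EF1% = {ef1:.1f}")
```

In this example EF1% is 60. The maximum achievable value, with all ten top-ranked compounds active, would be 100, so a model's EF1% is best read against that ceiling.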

Problem: Model Fails to Generalize to New Scaffolds

Issue: The model successfully identifies actives similar to those in the training set but misses actives with novel core structures.

Diagnosis and Solution:

| Step | Action | Rationale & Details |
|---|---|---|
| 1 | Re-split Your Data | Implement a scaffold-based split for training and validation. This ensures the model is tested on chemotypes it has never seen, providing a true measure of its generalizability [27]. |
| 2 | Incorporate Inactive Compounds | Include confirmed inactive compounds in the training set. This provides the model with explicit negative examples, helping it learn which interaction patterns are not conducive to binding and refining the essential pharmacophore pattern [27]. |
| 3 | Utilize Ensemble and AI Methods | Consider advanced approaches like dyphAI, which combines multiple pharmacophore models from different ligand complexes and clusters into an ensemble. This captures a broader range of valid protein-ligand interactions and conformational dynamics [26]. Alternatively, AI methods like PharmRL use reinforcement learning to elucidate optimal pharmacophores directly from protein structures, reducing reliance on a limited set of known ligands [29]. |

Experimental Protocols for Data Set Construction and Validation

Protocol 1: Building a Robust Training Set for QSAR-Based Pharmacophore Modeling

This protocol is adapted from studies developing QSAR models for targets like Estrogen Receptor beta and Monoamine Oxidase (MAO) [33] [27].

Key Research Reagent Solutions:

| Item | Function in the Protocol |
|---|---|
| ChEMBL / BindingDB | Public databases to source bioactivity data (IC₅₀, Ki) for known active and inactive compounds [26] [27]. |
| Schrödinger Canvas / RDKit | Software tools for calculating molecular fingerprints and performing similarity clustering and Bemis-Murcko scaffold analysis [26] [27]. |
| DUD-E / DUDE-Z | Databases of property-matched decoys for rigorous virtual screening benchmarking [28] [29]. |
| ZINC Database | A public database of commercially available compounds that can be used for prospective virtual screening [26] [2]. |

Methodology:

- Data Curation: Extract compounds with reported activity (e.g., IC₅₀ ≤ 199,000 nM for actives) and explicit inactivity from reliable databases like ChEMBL or the Binding Database [26] [27].

- Structure Preparation: Generate 3D structures for all compounds using a tool like LigPrep (Schrödinger) at a physiological pH of 7.4 ± 0.2 [26].

- Clustering for Diversity: Perform structural similarity clustering using radial fingerprints and the Tanimoto metric. Determine the optimal number of clusters to balance over- and under-clustering. This ensures the final training set contains representative molecules from diverse chemical classes [26].

- Train-Test Split: Partition the data into training and test sets using a scaffold-based splitting method. This guarantees that the model is validated on chemically distinct scaffolds, providing a true test of its generalizability and pharmacophore hypothesis [27].
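A scaffold-based split assigns whole scaffold groups to one side, so no core structure appears in both training and test sets. The sketch below assumes each compound's scaffold string has already been computed, for example as a Bemis-Murcko scaffold SMILES with RDKit's MurckoScaffold module. The largest-groups-first heuristic and all data are illustrative choices, not the procedure of the cited studies.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def scaffold_split(scaffold_of: Dict[str, str],
                   test_fraction: float = 0.2) -> Tuple[List[str], List[str]]:
    """Split compounds so that no scaffold appears in both train and test.

    Whole scaffold groups are assigned at once, largest first; any group that
    would push the training set past its target size goes to the test set.
    """
    groups: Dict[str, List[str]] = defaultdict(list)
    for compound, scaffold in scaffold_of.items():
        groups[scaffold].append(compound)
    n_train_target = round((1.0 - test_fraction) * len(scaffold_of))
    train: List[str] = []
    test: List[str] = []
    for members in sorted(groups.values(), key=len, reverse=True):
        if len(train) + len(members) <= n_train_target:
            train.extend(members)
        else:
            test.extend(members)
    return train, test


# Hypothetical precomputed scaffolds for ten compounds.
scaffolds = {
    "m1": "c1ccccc1", "m2": "c1ccccc1", "m3": "c1ccccc1", "m4": "c1ccccc1",
    "m5": "c1ccncc1", "m6": "c1ccncc1", "m7": "c1ccncc1",
    "m8": "C1CCNCC1", "m9": "C1CCNCC1",
    "m10": "c1ccc2ccccc2c1",
}
train, test = scaffold_split(scaffolds)
print("train:", train)
print("test:", test)
```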

Protocol 2: Global Sensitivity Analysis for Pharmacophore Model Parameter Optimization

This protocol is informed by sensitivity analyses conducted in PBPK modeling and ecological model calibration [32] [31].

Methodology:

- Define Model Output: Select a key performance metric as your model output, such as Enrichment Factor (EF₁%) or the Area Under the Curve (AUC) of a receiver operating characteristic (ROC) plot.

- Identify Input Parameters: List all variable pharmacophore parameters (e.g., feature tolerances, weights, exclusion sphere radii).

- Assign Probability Distributions: Define a plausible range and distribution (e.g., uniform, normal) for each input parameter [32].

- Generate Parameter Sets: Use a sampling method (e.g., Latin Hypercube Sampling) to generate a large number of parameter sets from the defined distributions.

- Run and Analyze: Execute your pharmacophore screening for each parameter set and record the output metric. A variance-based method like Sobol' analysis can then be applied to decompose the output variance and compute sensitivity indices (Si). These indices quantify the relative contribution of each parameter (and their interactions) to the model's performance [32].
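The sampling and Sobol' steps can be sketched without external libraries. In the sketch, `hypothetical_ef` is a toy stand-in for running the pharmacophore screen and returning EF1%; it is not a real model, and its three parameters are placeholders. Two independent Latin Hypercube samples (A and B) feed a Saltelli-type first-order estimator with mean-centred outputs and the Jansen total-order estimator. In practice a maintained package such as SALib would normally be used.

```python
import random
from typing import Callable, List, Sequence, Tuple

Bounds = Sequence[Tuple[float, float]]


def latin_hypercube(n: int, bounds: Bounds, rng: random.Random) -> List[List[float]]:
    """Draw n points with exactly one point per stratum in every dimension."""
    columns = []
    for lo, hi in bounds:
        strata = list(range(n))
        rng.shuffle(strata)
        columns.append([lo + (hi - lo) * (s + rng.random()) / n for s in strata])
    return [list(row) for row in zip(*columns)]


def sobol_indices(model: Callable[[Sequence[float]], float], bounds: Bounds,
                  n: int = 4096, seed: int = 0) -> Tuple[List[float], List[float]]:
    """First-order (Saltelli-type) and total-order (Jansen) Sobol' indices."""
    rng = random.Random(seed)
    sample_a = latin_hypercube(n, bounds, rng)
    sample_b = latin_hypercube(n, bounds, rng)
    f_a = [model(x) for x in sample_a]
    f_b = [model(x) for x in sample_b]
    pooled = f_a + f_b
    mean = sum(pooled) / len(pooled)
    var = sum((y - mean) ** 2 for y in pooled) / len(pooled)
    first, total = [], []
    for i in range(len(bounds)):
        # Sample A with its i-th column replaced by the i-th column of B.
        f_ab = [model(a[:i] + [b[i]] + a[i + 1:]) for a, b in zip(sample_a, sample_b)]
        first.append(sum((yb - mean) * (yab - ya)
                         for ya, yb, yab in zip(f_a, f_b, f_ab)) / (n * var))
        total.append(sum((ya - yab) ** 2 for ya, yab in zip(f_a, f_ab)) / (2 * n * var))
    return first, total


def hypothetical_ef(params: Sequence[float]) -> float:
    """Toy stand-in for 'run the screen and return EF1%' (NOT a real model)."""
    tolerance, weight, exclusion_radius = params
    return 4.0 * tolerance + 1.0 * weight + 0.0 * exclusion_radius


s1, st = sobol_indices(hypothetical_ef, bounds=[(0.0, 1.0)] * 3)
for name, a, b in zip(["tolerance", "weight", "exclusion_radius"], s1, st):
    print(f"{name:17s} S1={a:.2f} ST={b:.2f}")
```

For this additive toy function the analytical first-order indices are 16/17 ≈ 0.94, 1/17 ≈ 0.06, and 0. The estimates should land close to those values, flagging the tolerance parameter as the one worth tuning first.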

The diagram below illustrates the logical workflow of this protocol.

Diagram 1: Workflow for Global Sensitivity Analysis (GSA) in parameter optimization.

Workflow Visualization: From Data Curation to Validated Model

The following diagram summarizes the complete integrated workflow for developing a sensitive and generalizable pharmacophore model, emphasizing the critical role of high-quality training data and parameter optimization.

Diagram 2: Integrated workflow for developing a validated pharmacophore model.

Advanced Techniques for High-Sensitivity Pharmacophore Modeling

Frequently Asked Questions (FAQs)

FAQ 1: Why does my pharmacophore model perform poorly when using an AlphaFold-predicted protein structure? AlphaFold-predicted structures often represent an "apo" or unbound protein conformation, which may have binding pockets that are structurally different from the "holo" conformation adopted when a ligand is bound. Key side chains might be in unfavorable rotamer configurations, making the binding pocket appear inaccessible or incorrectly shaped for your ligand [34]. Using a dynamic docking method that can adjust the protein conformation, or sourcing a holo-structure from a high-quality curated dataset, is recommended.

FAQ 2: What are the main advantages of cryo-EM in structure-based drug design, and what are its key challenges? Cryo-electron microscopy (cryo-EM) allows you to determine the structures of large protein complexes and membrane proteins that are difficult to crystallize. A major benefit is the ability to capture multiple conformational states of a protein in a near-native environment, which is invaluable for designing drugs that target specific protein states [35]. The primary challenges involve sample preparation, which can be an iterative and time-consuming process due to issues like protein denaturation at the air-water interface. It also requires significant investment in infrastructure and specialized training for staff [35].

FAQ 3: How can I improve the selection of scoring functions for my virtual screening campaign? Instead of relying on a single scoring function, use a consensus scoring approach. The performance of individual scoring functions can be highly dependent on the target protein [36]. To enhance results, employ a feature selection-based consensus scoring (FSCS) method. This supervised approach uses docked native ligand conformations to select a set of complementary scoring functions, which has been shown to improve the enrichment of active compounds compared to using individual functions or rank-by-rank consensus [36].

FAQ 4: My ligand poses have high steric clashes when docked into a predicted structure. How can I resolve this? Significant clashes often occur when a rigid docking protocol is used on a protein structure that is not in a ligand-compatible state. Traditional docking methods that treat the protein as rigid are particularly prone to this [34]. Consider using a "dynamic docking" method that allows for side-chain and, in some cases, backbone adjustments upon ligand binding. Alternatively, using molecular dynamics simulations for conformational sampling can help, though this is computationally demanding [34].

FAQ 5: What can I do if my target protein has no experimentally determined structure? You have several options. The primary method is to use a deep learning-based protein structure prediction tool like AlphaFold to generate a model [34] [37]; check its per-residue confidence (pLDDT) in the binding-site region before relying on it. If a homologous structure exists, you can perform homology modeling [38] [37]. For an end-to-end solution that does not require a separate protein structure, emerging deep generative models can predict the protein-ligand complex structure directly from the protein sequence and ligand molecular graph [39].

Troubleshooting Guides

Troubleshooting Low-Accuracy Ligand Posing

Symptoms: Predicted ligand binding poses consistently show a high root-mean-square deviation (RMSD) when compared to a known experimental structure.

| Potential Cause | Diagnostic Steps | Recommended Solution |
|---|---|---|
| Rigid Protein Backbone | Check if your initial protein structure (e.g., from AlphaFold) has a high pocket RMSD compared to the holo reference. | Implement a dynamic docking tool like DynamicBind, which can accommodate large conformational changes in the protein from its initial state [34]. |
| Incorrect Protonation/Tautomer State | Visually inspect the ligand's functional groups in the binding site. Use software to calculate probable protonation states at physiological pH. | Use a ligand-fixing module (e.g., within the HiQBind-WF workflow) to ensure correct bond order, protonation states, and aromaticity before docking [40]. |
| Poor Quality Input Structure | Review the source of your protein structure. Check for missing residues, atoms, or severe steric clashes in the binding pocket. | Use a structure refinement workflow (e.g., HiQBind-WF) to add missing atoms and resolve clashes. Prefer high-resolution experimental structures or high-quality curated models [40]. |
| Insufficient Sampling | Check if the docking software provides multiple pose predictions and if they are all similarly inaccurate. | Increase the exhaustiveness or number of sampling runs in your docking software. For generative models, generate a larger number of candidate structures (e.g., several dozen) to improve the chance of sampling a correct pose [39]. |
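Comparing the top-1 success rate with the best-of-N success rate helps separate the "Insufficient Sampling" cause from a scoring problem. If a correct pose (RMSD < 2 Å) is often generated but rarely ranked first, more sampling will not help much, and the ranking is the weak link. A minimal sketch with hypothetical RMSDs:

```python
from typing import Sequence, Tuple


def success_rates(pose_rmsds: Sequence[Sequence[float]],
                  cutoff: float = 2.0) -> Tuple[float, float]:
    """Top-1 and best-of-N success rates.

    pose_rmsds[k] holds the RMSDs (Å) of the poses generated for complex k,
    ordered by the method's own ranking (best-ranked pose first).
    """
    n = len(pose_rmsds)
    top1 = sum(r[0] < cutoff for r in pose_rmsds) / n
    best_of_n = sum(min(r) < cutoff for r in pose_rmsds) / n
    return top1, best_of_n


# Hypothetical results for four complexes, three poses each.
example = [[1.2, 3.4, 5.0], [4.1, 1.7, 6.2], [5.5, 4.8, 7.9], [2.6, 3.1, 1.9]]
print(success_rates(example))
```

Here a correct pose is sampled for three of four complexes but ranked first for only one (0.25 vs. 0.75), which points to scoring rather than sampling.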

Troubleshooting Feature Selection for Scoring

Symptoms: Your virtual screening fails to enrich active compounds, or the ranking of compounds by predicted affinity does not correlate with experimental data.

| Potential Cause | Diagnostic Steps | Recommended Solution |
|---|---|---|
| Target-Dependent Scoring Performance | Test individual scoring functions on a small set of known active and inactive compounds for your specific target. | Adopt a feature selection-based consensus scoring (FSCS) strategy. Use a known active ligand to select a complementary set of scoring functions before running the full screen [36]. |
| Training Data Artifacts | If using a machine learning scoring function, investigate the dataset it was trained on for known errors or biases. | Consider retraining models with a high-quality, curated dataset like HiQBind, which aims to correct structural errors and improve annotation reliability [40]. |
| Inadequate Interaction Descriptors | Analyze which molecular features (e.g., hydrogen bonds, hydrophobic contacts) are important for binding in your target. | Ensure your feature set comprehensively captures the key interactions. Use a docking program that provides a wide array of intermolecular interaction features for post-docking analysis. |

Troubleshooting Protein Structure Preparation

Symptoms: Docking or pharmacophore generation fails, or results contain unrealistic atomic distances and clashes.

| Potential Cause | Diagnostic Steps | Recommended Solution |
|---|---|---|
| Missing Heavy Atoms or Residues | Visually inspect the binding site in software like PyMOL. Check the PDB file for "REMARK" sections noting missing residues. | Use a protein-fixing module (e.g., ProteinFixer in HiQBind-WF) to add missing atoms and residues in the binding site region [40]. |
| Incorrect Hydrogen Placement | Check for unrealistic bond lengths or angles involving hydrogen atoms. | Use a refinement tool that adds hydrogens to the protein and ligand in their complexed state, followed by constrained energy minimization to optimize hydrogen positions [40]. |
| Untreated Flexibility | Identify flexible loops or side chains in the binding site that could move to accommodate the ligand. | If using rigid docking, consider generating an ensemble of protein conformations from molecular dynamics simulations or using a flexible docking algorithm. |

Experimental Protocols & Data Presentation

Key Research Reagent Solutions

The following table details essential resources for conducting structure-based drug discovery, as featured in the cited research.

| Item Name | Function / Description | Example Use Case in Research |
|---|---|---|
| PDBbind Dataset | A comprehensive collection of biomolecular complexes with experimentally measured binding affinities, used for training and testing scoring functions [34] [40]. | Served as the primary training data for the DynamicBind model and is a common benchmark for docking accuracy [34]. |
| HiQBind-WF Workflow | An open-source, semi-automated workflow of algorithms for curating high-quality, non-covalent protein-ligand complex structures [40]. | Used to create the HiQBind dataset by correcting structural errors in PDBbind, such as fixing ligand bond orders and adding missing protein atoms [40]. |
| DynamicBind | A deep learning method that uses equivariant geometric diffusion networks to perform "dynamic docking," adjusting the protein conformation to a holo-like state upon ligand binding [34]. | Employed to accurately recover ligand-specific conformations from unbound (e.g., AlphaFold-predicted) protein structures, handling large conformational changes [34]. |
| GNINA / AutoDock Vina | Molecular docking software that uses scoring functions to predict ligand poses within a protein binding site [39]. | Used as baseline methods in benchmarking studies to compare the performance of new docking and complex generation tools [39]. |
| ESM-2 Protein Language Model | A deep learning model that generates informative representations from protein sequences, capturing structural and phylogenetic information [39]. | Used as a sequence input representation for end-to-end protein-ligand complex structure generation models, bypassing the need for an existing protein structure [39]. |

Performance Benchmarking Data

Table: Benchmarking Ligand Pose Prediction Accuracy (RMSD < 2 Å) on Different Test Sets. Data adapted from DynamicBind study [34].

| Method | PDBbind Test Set (Success Rate) | Major Drug Target (MDT) Set (Success Rate) |
|---|---|---|
| DynamicBind | 33% | 39% |
| DiffDock | 19% | Data Not Available |
| Traditional Docking (Vina, etc.) | <19% | <39% |

Table: Structural Improvements in a Curated Dataset (HiQBind) vs. PDBbind. Data illustrates the impact of a rigorous curation workflow [40].

| Curated Attribute | Improvement in HiQBind Workflow |
|---|---|
| Ligand Bond Order | Corrected for numerous structures |
| Protein Missing Atoms | Added for a more complete model |
| Steric Clashes | Identified and resolved |
| Hydrogen Placement | Optimized in the complexed state |

Detailed Methodology: Structure-Based Virtual Screening with Consensus Scoring

This protocol outlines a virtual screening process that integrates structure-based docking with feature selection for consensus scoring, based on the method described by Teramoto et al. [36].

Protein and Ligand Library Preparation

- Protein Target: Obtain the 3D structure of your target protein. If an experimental structure is unavailable, generate a high-quality model using AlphaFold [34] or perform homology modeling with a tool like Modeller [38]. Prepare the structure by adding hydrogen atoms, assigning partial charges, and ensuring missing loops or side chains in the binding site are modeled.

- Ligand Library: Prepare a library of small molecules for screening in an appropriate format (e.g., SDF, MOL2). Generate 3D conformations and optimize the structures using tools like RDKit [34]. Apply filters for drug-likeness and other desirable properties.
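A drug-likeness filter for the ligand library can be as simple as Lipinski's Rule of Five, which is commonly applied with at most one violation allowed. This sketch assumes the four properties were computed beforehand (RDKit's descriptor functions are one option). The compounds and values are hypothetical.

```python
from typing import Dict


def ro5_violations(props: Dict[str, float]) -> int:
    """Count Lipinski Rule-of-Five violations from precomputed properties."""
    return sum([
        props["mw"] > 500,    # molecular weight (Da)
        props["logp"] > 5,    # calculated logP
        props["hbd"] > 5,     # hydrogen-bond donors
        props["hba"] > 10,    # hydrogen-bond acceptors
    ])


def passes_ro5(props: Dict[str, float], max_violations: int = 1) -> bool:
    return ro5_violations(props) <= max_violations


library = {
    "cpd1": {"mw": 342.4, "logp": 2.9, "hbd": 2, "hba": 5},
    "cpd2": {"mw": 612.7, "logp": 5.8, "hbd": 1, "hba": 8},
    "cpd3": {"mw": 520.1, "logp": 4.1, "hbd": 3, "hba": 7},
}
kept = [cid for cid, props in library.items() if passes_ro5(props)]
print(kept)
```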

Molecular Docking

- Use docking software (e.g., GNINA, AutoDock Vina) to dock each compound in your library into the binding site of the prepared protein structure [39].

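- For each compound, output multiple poses (e.g., 10-20) and record the values from several scoring functions (e.g., F-Score, D-Score, PMF, G-Score, ChemScore, as used in [36]). These particular functions come from other docking and rescoring packages rather than from GNINA or AutoDock Vina, so the poses may need to be rescored separately.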

Feature Selection for Consensus Scoring (FSCS)

- Prepare Training Data: Dock a known native ligand or a small set of confirmed active compounds against your target. These will serve as the "active" reference.

- Generate Molecular Descriptors: For all docked poses (of both your test library and the known actives), calculate a comprehensive set of molecular descriptors and fingerprints using a tool like PaDEL-Descriptor [38].

- Feature Selection: Apply a supervised feature selection algorithm (e.g., based on statistical tests or model importance) to the data from the known active compounds. The goal is to identify which scoring functions and molecular descriptors best distinguish the correct pose of the active compound from decoy poses.

- Select Complementary Functions: Based on the feature selection results, choose a subset of individual scoring functions that are complementary to each other, rather than redundant.
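The source does not spell out the selection algorithm. The sketch below therefore substitutes a simple filter; it is not the FSCS procedure of Teramoto et al. It ranks each scoring function by how well it separates near-native from decoy poses of a known active (ROC AUC). It then greedily keeps functions whose scores are not strongly correlated with those already chosen.

The thresholds and toy scores are assumptions. Scores are taken as pre-oriented so that higher is better, so flip the sign of any function where lower is better.

```python
from itertools import product
from math import sqrt


def auc(scores, labels):
    """Probability that a near-native pose (label 1) outscores a decoy pose (label 0).

    Ties count as half a win. Scores must be oriented so that higher means better.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p, n in product(pos, neg))
    return wins / (len(pos) * len(neg))


def pearson(x, y):
    """Pearson correlation between two score vectors (0.0 if either is constant)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx and sy else 0.0


def select_complementary(score_table, labels, min_auc=0.6, max_corr=0.8):
    """Greedy filter: take functions in order of AUC, skipping weak or redundant ones."""
    aucs = {f: auc(s, labels) for f, s in score_table.items()}
    chosen = []
    for f in sorted(score_table, key=aucs.get, reverse=True):
        if aucs[f] < min_auc:
            break  # all remaining functions discriminate even worse
        if all(abs(pearson(score_table[f], score_table[g])) < max_corr for g in chosen):
            chosen.append(f)
    return chosen


# Toy scores for 6 poses of a known active: the first two are near-native, the rest decoys.
labels = [1, 1, 0, 0, 0, 0]
scores = {
    "F-Score":   [9.0, 8.0, 3.0, 2.0, 4.0, 1.0],
    "G-Score":   [9.1, 8.2, 3.1, 2.0, 4.2, 1.1],  # nearly a copy of F-Score in this toy set
    "ChemScore": [5.0, 7.0, 6.0, 1.0, 2.0, 3.0],
    "PMF":       [2.0, 1.0, 5.0, 6.0, 4.0, 3.0],
}
print(select_complementary(scores, labels))  # ['F-Score', 'ChemScore']
```

G-Score is dropped here because it is redundant with F-Score, not because it discriminates poorly. That difference is what separates "complementary" from "individually best".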

Apply Consensus Scoring and Rank Compounds

- Apply the selected combination of scoring functions to the poses from your full virtual screening library.

- Combine the scores from the selected functions into a single consensus score for each ligand pose (e.g., by averaging ranks or using a machine learning model trained on the feature-selected data).

- Rank all compounds in the screening library based on this consensus score.
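Rank averaging, one of the combination schemes named above, can be sketched as follows. The sketch assumes each compound is represented by one score per selected function (for example, from its best-scoring pose). It also assumes scores are oriented so that higher is better. The compound IDs and values are hypothetical.

```python
def rank_dict(scores):
    """Map compound -> rank (1 = best, higher score = better); tied scores share the mean rank."""
    items = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    ranks, i = {}, 0
    while i < len(items):
        j = i
        while j + 1 < len(items) and items[j + 1][1] == items[i][1]:
            j += 1
        for k in range(i, j + 1):
            ranks[items[k][0]] = (i + j) / 2 + 1  # positions i..j share ranks i+1..j+1
        i = j + 1
    return ranks


def consensus_rank(per_function_scores):
    """Average each compound's rank over the selected scoring functions; lower is better."""
    tables = [rank_dict(s) for s in per_function_scores.values()]
    compounds = tables[0].keys()
    mean_rank = {c: sum(t[c] for t in tables) / len(tables) for c in compounds}
    return sorted(mean_rank.items(), key=lambda kv: kv[1])


# Hypothetical best-pose scores (higher = better) from the two selected functions.
selected = {
    "F-Score":   {"L1": 9.0, "L2": 7.5, "L3": 8.1},
    "ChemScore": {"L1": 5.5, "L2": 6.2, "L3": 3.0},
}
print(consensus_rank(selected))  # [('L1', 1.5), ('L2', 2.0), ('L3', 2.5)]
```

Averaging ranks rather than raw scores avoids having to put scoring functions with different units and ranges on a common scale.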

Validation

- If possible, validate the top-ranked compounds through experimental assays (e.g., binding affinity or functional assays).

Workflow Visualization

Structure-Based Drug Discovery Workflow

Troubleshooting Common Structural Challenges

FAQs and Troubleshooting Guides

Q1: My pharmacophore model matches most active compounds but also retrieves many inactive compounds during virtual screening. How can I improve its selectivity?

- Answer: This is a common issue related to model specificity. To address this:

- Incorporate Inactive Compounds: During the hypothesis generation phase, provide a set of confirmed inactive compounds. Some modern tools can then optimize the model to match active compounds while avoiding matches to the inactives [41].

- Utilize Excluded Volumes: Generate excluded volumes from your set of inactive compounds. These volumes represent steric barriers that inactives clash with, preventing the model from matching compounds that occupy the same unfavorable space [42].

- Adjust Feature Constraints: Overly generic features can reduce selectivity. Review the types and tolerances of the pharmacophore features in your model. You may need to reduce distance tolerances or replace a common feature like a hydrogen bond acceptor with a more specific one like a negatively charged group if the data supports it.
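To see how tolerances and excluded volumes act on selectivity, the sketch below checks a single conformer against a query. It assumes the conformer is already aligned to the query frame. Real pharmacophore tools also handle alignment, conformer search, and one-to-one feature assignment. The feature labels, coordinates, and radii are hypothetical.

```python
from dataclasses import dataclass
from math import dist

Point = tuple[float, float, float]


@dataclass(frozen=True)
class Feature:
    kind: str         # feature type, e.g. "HBA", "HBD", "HYD", "NEG"
    center: Point     # position in the query frame, Å
    tolerance: float  # radius of the tolerance sphere, Å


@dataclass(frozen=True)
class ExcludedVolume:
    center: Point
    radius: float     # Å; no ligand atom may lie inside this sphere


def matches(query, excluded, ligand_features, ligand_atoms):
    """Return True if every query feature has a same-type ligand feature inside its
    tolerance sphere and no ligand atom penetrates an excluded volume.

    Simplifications: the conformer is assumed to be pre-aligned to the query frame,
    and one ligand feature may satisfy several query features.
    """
    for f in query:
        if not any(k == f.kind and dist(c, f.center) <= f.tolerance for k, c in ligand_features):
            return False
    return not any(dist(a, v.center) < v.radius for a in ligand_atoms for v in excluded)


query = [Feature("HBA", (0.0, 0.0, 0.0), 1.0), Feature("HYD", (4.0, 0.0, 0.0), 1.5)]
excluded = [ExcludedVolume((2.0, 3.0, 0.0), 1.2)]
lig_features = [("HBA", (0.5, 0.0, 0.0)), ("HYD", (4.8, 0.5, 0.0))]
atoms = [(0.5, 0.0, 0.0), (2.0, 0.0, 0.0), (4.8, 0.5, 0.0)]

print(matches(query, excluded, lig_features, atoms))                      # True
print(matches(query, excluded, lig_features, atoms + [(2.0, 2.5, 0.0)]))  # False: atom in excluded volume
tight = [query[0], Feature("HYD", (4.0, 0.0, 0.0), 0.5)]
print(matches(tight, excluded, lig_features, atoms))                      # False: HYD is 0.94 Å off
```

Each of the two failing cases corresponds to one remedy in the list above. The first is an excluded volume rejecting a compound that occupies forbidden space. The second is a tighter tolerance rejecting a looser fit.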

Q2: What should I do if my active ligands are structurally diverse and will not align properly, making it difficult to identify a common pharmacophore?

- Answer: Difficult alignment is a key limitation of some traditional methods.

- Use Alignment-Free Methods: Consider tools that employ a 3D pharmacophore signature or hash representation. These methods identify common pharmacophores across a set of active compounds without requiring a prior molecular alignment step [41].

- Leverage Hierarchical Graphs: For complex data from molecular dynamics simulations, a hierarchical graph representation of pharmacophore models can help visualize the relationships between many different pharmacophores, making it easier to identify core, conserved features across diverse ligand poses [43].

- Manual Pre-alignment: If using alignment-dependent software, you can manually pre-align your ligands to a known bioactive conformation or a presumed active core using a flexible ligand alignment tool before importing them into the pharmacophore modeling workflow [42].
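The idea behind alignment-free signatures can be illustrated with a deliberately simplified triplet encoding. This is not Pmapper's algorithm; published tools use richer encodings. The feature types, coordinates, and 1 Å bin width below are assumptions.

```python
from itertools import combinations
from math import dist


def triplet_signature(features, bin_size=1.0):
    """Alignment-free signature: one key per feature triplet.

    Each key is the sorted tuple of the triplet's three edges, where an edge is
    (sorted feature-type pair, binned distance). Only internal distances are used,
    so the signature is unchanged by rotation or translation of the molecule.
    """
    signature = set()
    for trio in combinations(features, 3):
        edges = sorted(
            (tuple(sorted((t1, t2))), int(dist(c1, c2) // bin_size))
            for (t1, c1), (t2, c2) in combinations(trio, 2)
        )
        signature.add(tuple(edges))
    return signature


def tanimoto(a, b):
    """Set Tanimoto similarity between two signatures."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


lig_a = [("HBA", (0.0, 0.0, 0.0)), ("HBD", (3.0, 0.0, 0.0)), ("HYD", (0.0, 4.0, 0.0))]
# lig_a rotated 90 degrees about z, then translated: no alignment step is needed.
lig_b = [("HBA", (10.0, 10.0, 10.0)), ("HBD", (10.0, 13.0, 10.0)), ("HYD", (6.0, 10.0, 10.0))]
lig_c = [("HBA", (0.0, 0.0, 0.0)), ("HBD", (3.0, 0.0, 0.0)), ("HYD", (0.0, 7.0, 0.0))]

print(tanimoto(triplet_signature(lig_a), triplet_signature(lig_b)))  # 1.0
print(tanimoto(triplet_signature(lig_a), triplet_signature(lig_c)))  # 0.0
```

Features common to several actives can then be read off as the signature keys they share. Binning makes distances that sit near a bin edge sensitive to small coordinate changes, which is one reason real implementations tune bin width carefully.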

Q3: How can I validate my ligand-based pharmacophore model to ensure it is not random and has predictive power?

- Answer: Proper validation is crucial for trusting your model's results.

- Retrospective Screening: Perform a virtual screen of a database that contains known actives and decoys (presumed inactive compounds). A good model should efficiently enrich the actives in the top-ranked hits [41] [43].

- Compare to 2D Methods: Compare the enrichment performance of your 3D pharmacophore model against a simple 2D fingerprint-based similarity search. A robust 3D model should offer advantages over 2D similarity alone [41].

- Check Against Crystallographic Data: If the 3D structure of a target-ligand complex is available, verify that your top pharmacophore hypothesis can match the pose of the co-crystallized ligand. This confirms the model's biological relevance [41].
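The enrichment factor used in retrospective screening can be computed directly from a ranked hit list. The minimal sketch below assumes the screen output has already been reduced to a best-first list of active/decoy labels. The example data are hypothetical.

```python
def enrichment_factor(ranked_labels, fraction=0.01):
    """EF at the top `fraction` of a ranked screen.

    ranked_labels: 1 for a known active, 0 for a decoy, ordered best score first.
    EF = (actives in top subset / size of top subset) / (all actives / all compounds).
    """
    n_total = len(ranked_labels)
    n_actives = sum(ranked_labels)
    n_top = max(1, round(fraction * n_total))
    hits_top = sum(ranked_labels[:n_top])
    return (hits_top / n_top) / (n_actives / n_total)


# Hypothetical screen: 100 compounds, 5 actives, 2 of them in the top 10.
ranked = [1, 0, 1, 0, 0, 0, 0, 0, 0, 0] + [1, 1, 1] + [0] * 87
print(enrichment_factor(ranked, fraction=0.10))  # 4.0
```

Applying the same function to the ranking produced by a 2D fingerprint similarity search gives a like-for-like comparison with the 3D model.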

Key Parameters for Pharmacophore Model Sensitivity

The sensitivity and specificity of a ligand-based pharmacophore model are highly dependent on the parameters set during its development. The table below summarizes critical parameters and their optimization strategies.

Table 1: Key Parameters for Optimizing Pharmacophore Model Sensitivity

| Parameter | Description | Optimization Guidance for Sensitivity |
|---|---|---|
| Active/Inactive Thresholds | The activity values used to categorize compounds as 'active' or 'inactive' for the model [41]. | Use consistent, stringent thresholds based on robust experimental data (e.g., pIC50 ≥ 7 for actives, ≤ 5 for inactives) to ensure clear separation between groups [41] [42]. |
| Number of Features | The minimum and maximum number of pharmacophore features (e.g., donor, acceptor, hydrophobic) allowed in the final hypothesis [42]. | Specify a range (e.g., 4 to 6) and a preferred minimum to allow the algorithm to find the optimal balance between completeness and simplicity [42]. |
| Matching Actives Criterion | The minimum percentage or number of active compounds that the model must match [42]. | Start with a high percentage (e.g., 80%); lower it if the actives are known to bind in multiple modes, so that hypotheses describing only one binding mode are not discarded [42]. |
| Excluded Volumes | Regions in space where atoms are forbidden, derived from inactive compounds or the protein structure [42]. | Generate excluded-volume shells from inactives to improve model selectivity and reduce false positives [42]. |
| Hypothesis Difference Criterion | The distance cutoff below which two hypotheses are treated as redundant [42]. | A smaller value (e.g., 0.5-1.0 Å) treats fewer hypotheses as redundant and returns a broader set of models for screening; raise it if the returned hypotheses are near-duplicates [42]. |
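The activity thresholds in the first row translate into a simple labeling step. The minimal sketch below assumes activities are reported as IC50 values in nM. Compounds falling between the two thresholds are set aside rather than forced into either class. The compound IDs and values are hypothetical.

```python
import math


def pic50_from_ic50_nm(ic50_nm):
    """pIC50 = -log10(IC50 in mol/L); an IC50 of x nM equals x * 1e-9 mol/L."""
    return 9.0 - math.log10(ic50_nm)


def split_by_activity(ic50_nm_by_compound, active_min=7.0, inactive_max=5.0):
    """Split compounds into actives (pIC50 >= active_min) and inactives
    (pIC50 <= inactive_max); compounds in between are set aside."""
    actives, inactives, grey_zone = [], [], []
    for name, ic50_nm in ic50_nm_by_compound.items():
        p = pic50_from_ic50_nm(ic50_nm)
        if p >= active_min:
            actives.append(name)
        elif p <= inactive_max:
            inactives.append(name)
        else:
            grey_zone.append(name)
    return actives, inactives, grey_zone


data = {"cpd1": 5.0, "cpd2": 250.0, "cpd3": 20000.0, "cpd4": 40.0}  # IC50 values in nM
print(split_by_activity(data))  # (['cpd1', 'cpd4'], ['cpd3'], ['cpd2'])
```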

Experimental Protocol: Developing a Selective Pharmacophore Model

This protocol outlines the steps for generating a ligand-based pharmacophore model using both active and inactive compounds to optimize sensitivity and selectivity.

1. Compound Selection and Curation:

- Gather Actives: Collect a set of active compounds with consistent, potent activity against the target. A larger, structurally diverse set is preferable [41].

- Select Inactives: Curate a set of confirmed inactive compounds. These are crucial for teaching the algorithm which features to avoid [41].

- Prepare Structures: Convert all 2D structures to 3D. Generate multiple low-energy conformers for each compound to account for flexibility [41] [42].

2. Pharmacophore Hypothesis Generation:

- Input Actives and Inactives: Load the prepared compounds into a pharmacophore modeling tool (e.g., Phase, Pmapper) and define the active/inactive split based on pre-determined thresholds [41] [42].

- Set Modeling Parameters: Configure key parameters as described in Table 1. For instance, set the number of features to 5-6 and require the model to match at least 70-80% of actives initially [42].

- Run Generation Algorithm: Execute the search for common pharmacophore hypotheses. The algorithm will score hypotheses based on their ability to match actives and avoid inactives [41].

3. Model Validation and Selection:

- Review Top Hypotheses: Examine the highest-ranked hypotheses. Check the alignment of active compounds and ensure inactives show poor fits or steric clashes [42].

- Perform Retrospective Screening: Use the top models to screen a validation database spiked with known actives. Calculate enrichment factors (EF) to quantitatively assess performance [41].

- Select the Best Model: Choose the model with the best balance of high active recall (sensitivity) and low inactive matching (specificity), as confirmed by the enrichment analysis.
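One way to make "best balance" concrete is the Güner-Henry (GH) score:

GH = [Ha(3A + Ht) / (4·Ht·A)] × [1 − (Ht − Ha)/(D − A)]

Here Ha is the number of actives retrieved, Ht the total number of hits, A the number of actives in the database, and D the database size. The second factor is the specificity.

The sketch below assumes a minimum-sensitivity floor of 70%, an arbitrary choice, and then picks the model with the highest GH. The hypothesis names and counts are hypothetical.

```python
def screening_metrics(ha, ht, a, d):
    """Güner-Henry style metrics for one model's screen.

    ha: actives retrieved, ht: total hits retrieved,
    a: actives in the database, d: total compounds in the database.
    """
    sensitivity = ha / a                      # fraction of actives recovered
    specificity = 1 - (ht - ha) / (d - a)     # fraction of inactives rejected
    gh = (ha * (3 * a + ht) / (4 * ht * a)) * specificity
    return sensitivity, specificity, gh


def pick_model(results, a, d, min_sensitivity=0.7):
    """results: {model_name: (ha, ht)}. Among models that reach `min_sensitivity`,
    return the one with the highest GH score (None if no model qualifies)."""
    gh_by_model = {}
    for name, (ha, ht) in results.items():
        sensitivity, _, gh = screening_metrics(ha, ht, a, d)
        if sensitivity >= min_sensitivity:
            gh_by_model[name] = gh
    return max(gh_by_model, key=gh_by_model.get) if gh_by_model else None


# Hypothetical screens of a 1000-compound database containing 50 actives.
results = {"hypo_1": (45, 300), "hypo_2": (40, 80), "hypo_3": (20, 25)}
print(pick_model(results, a=50, d=1000))  # hypo_2
```

Without the sensitivity floor, the very selective `hypo_3` would win on GH alone while recovering only 40% of the actives. This is the trade-off the selection step has to manage.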

The following workflow diagram illustrates the key steps in this protocol.

Table 2: Key Tools and Resources for Ligand-Based Pharmacophore Modeling

| Item | Function in Research |
|---|---|
| ChEMBL Database | A public repository of bioactive molecules with curated binding data. Used to source structures of active and inactive compounds for model building and validation [41] [43]. |
| Structure Curation Workflows | Standardized pipelines (e.g., via KNIME) for processing compound structures, standardizing chemistry, and filtering data to ensure high-quality input for modeling [41] [43]. |
| Conformer Generation Algorithms | Software components (e.g., idbgen in LigandScout) that generate multiple 3D conformations for each 2D molecule, essential for capturing the flexible nature of ligands [43]. |
| Free Modeling Tools (e.g., Pmapper) | Open-source software that provides access to advanced algorithms, such as alignment-free 3D pharmacophore hashing, without commercial license requirements [41]. |
| Virtual Screening Databases (e.g., ZINCPharmer) | Public platforms that allow researchers to screen millions of commercially available compounds against a custom pharmacophore query to identify potential hit molecules [44]. |
| DUD-E Library | The "Database of Useful Decoys: Enhanced." A benchmark set containing known actives and property-matched decoys, critical for rigorous validation of model selectivity and avoiding artificial enrichment [42]. |

Incorporating Protein Flexibility via Molecular Dynamics (MD) Simulations

Understanding and incorporating protein flexibility is a cornerstone of modern computational drug discovery. Proteins are highly dynamic biomolecules, and the presence of flexible regions is critical for their biological function, influencing substrate specificities, turnover rates, and pH dependency of enzymes [45]. Traditional rigid receptor docking (RRD) methods often fall short because they model the protein as a static structure, which fails to capture the true dynamic nature of biomolecular interactions [46].

Research has demonstrated that predictions incorporating protein flexibility show higher linear correlations with experimental data compared to those from RRD methods [46]. For studies focused on parameter optimization for pharmacophore model sensitivity, accounting for this flexibility is not merely an enhancement but a necessity. It ensures that the generated pharmacophore models, which are critical for virtual screening and pose prediction, accurately represent the functional conformational space of the target protein [47]. This technical support guide provides practical methodologies and troubleshooting advice to help researchers effectively integrate MD simulations into their workflows, thereby improving the sensitivity and accuracy of their pharmacophore models.
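A simple way to carry an MD ensemble into a pharmacophore is to derive one pharmacophore per snapshot and keep the features that recur. The sketch below assumes per-frame features have already been extracted and labeled by type and interacting residue. The residue labels and the 50% frequency cutoff are hypothetical choices, not values from the cited studies.

```python
from collections import Counter


def conserved_features(frames, min_fraction=0.5):
    """Return {feature: occurrence frequency} for features present in at least
    `min_fraction` of MD snapshots.

    frames: one collection of feature identifiers per snapshot, e.g. ("HBA", "LYS33").
    """
    counts = Counter(feature for frame in frames for feature in set(frame))
    n_frames = len(frames)
    return {f: c / n_frames for f, c in counts.items() if c / n_frames >= min_fraction}


# Hypothetical per-frame pharmacophores from four MD snapshots.
frames = [
    {("HBA", "LYS33"), ("HYD", "LEU83"), ("HBD", "GLU81")},
    {("HBA", "LYS33"), ("HYD", "LEU83")},
    {("HBA", "LYS33"), ("HBD", "GLU81"), ("HYD", "PHE80")},
    {("HBA", "LYS33"), ("HYD", "LEU83")},
]
print(conserved_features(frames))
```

Raising `min_fraction` keeps only the most persistent interactions and yields a stricter query. Lowering it admits transient features that a single static structure might miss.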

FAQs & Troubleshooting Guide

Q1: When I visualize my molecular dynamics trajectory, a crucial bond in my DNA, protein, or ligand appears to be missing. What is wrong?

A: This is a common visualization artifact, not necessarily an error in your simulation data.

- Root Cause: Visualization tools like VMD often guess molecular connectivity based on ideal bond lengths and atom proximity. If your initial structure has unusual bond lengths or if atoms are slightly displaced, the visual bond may not be rendered.

- Solution:

- Trust Your Topology: The definitive record of bonds in your system is the `[ bonds ]` section of your topology file. Bonds cannot break or form during a classical MD simulation; they are defined at the start in the topology [48].

- Validate with an Energy-Minimized Frame: Load an energy-minimized structure (e.g., from your `em.gro` file) alongside your trajectory in VMD. The minimized frame should have corrected bond geometries, allowing VMD to correctly display the bonds throughout the trajectory [48].
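The root cause described above can be reproduced with a distance-based bond guess. The sketch assumes a distance rule of the kind VMD uses, commonly described as drawing a bond when two atoms are closer than about 0.6 times the sum of their van der Waals radii. Treat the factor, the radii, and the coordinates as assumptions. The point is that a bond listed in `[ bonds ]` can still go undrawn when the input geometry is distorted.

```python
from math import dist

# Bondi van der Waals radii in nm (GROMACS coordinates are in nm).
VDW_RADIUS_NM = {"H": 0.120, "C": 0.170, "N": 0.155, "O": 0.152, "S": 0.180, "P": 0.180}


def looks_bonded(elem1, xyz1, elem2, xyz2, factor=0.6):
    """Distance heuristic: treat two atoms as bonded if they are closer than
    factor * (sum of their van der Waals radii)."""
    return dist(xyz1, xyz2) < factor * (VDW_RADIUS_NM[elem1] + VDW_RADIUS_NM[elem2])


def undrawn_topology_bonds(atoms, topology_bonds, factor=0.6):
    """atoms: {atom index: (element, (x, y, z) in nm)}; topology_bonds: index pairs
    as listed in [ bonds ]. Returns bonds the heuristic would fail to draw."""
    undrawn = []
    for i, j in topology_bonds:
        (e1, p1), (e2, p2) = atoms[i], atoms[j]
        if not looks_bonded(e1, p1, e2, p2, factor):
            undrawn.append((i, j))
    return undrawn


# Hypothetical fragment in which the C2-O3 bond is stretched to 0.25 nm in the input structure.
atoms = {
    1: ("C", (0.000, 0.000, 0.000)),
    2: ("C", (0.154, 0.000, 0.000)),
    3: ("O", (0.154, 0.250, 0.000)),
    4: ("H", (-0.109, 0.000, 0.000)),
}
print(undrawn_topology_bonds(atoms, [(1, 2), (2, 3), (1, 4)]))  # [(2, 3)]
```

The simulation itself still treats atoms 2 and 3 as bonded, because the topology lists the bond. Only the visual guess fails, which is why loading a minimized frame fixes the display.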

Q2: When running pdb2gmx, I get the error "Residue 'XXX' not found in residue topology database". How do I resolve this?

A: This error occurs when the force field you selected does not contain a definition for the residue 'XXX' [49].

- Root Cause: The force field's residue topology database (`.rtp` file) lacks an entry for the specific molecule or residue you are trying to process.

- Solution Path:

- Check Residue Naming: Ensure the residue name in your PDB file matches the name used in the force field's database. Using "HIS" for a histidine is different from "HSD".

- Obtain Topology Parameters: You cannot use `pdb2gmx` for arbitrary molecules without a database entry. Your options are:

  - Find a pre-existing topology file (`.itp`) for your molecule and include it manually in your system's `topol.top` file.

  - Parameterize the molecule yourself using specialized tools (e.g., `x2top` or external servers).

  - Search the literature for published parameters compatible with your force field [49].

- Add Residue to Database (Advanced): For advanced users, you can create a new residue entry in the force field's `.rtp` file, defining all atom types, bonds, and interactions.
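Before running pdb2gmx, a quick pre-check can list which residue names in the PDB file have no entry in the chosen `.rtp` file. This is a hypothetical helper, not a GROMACS tool. It assumes the usual `.rtp` layout, where residue entries open with `[ NAME ]` and contain subsections such as `[ atoms ]` and `[ bonds ]`. It also reads only one `.rtp` file and ignores any residue renaming pdb2gmx may apply, for example for histidine protonation states. Flagged names are therefore candidates to check rather than certain failures.

```python
# Directives that open subsections inside .rtp files rather than naming residues.
RTP_DIRECTIVES = {"bondedtypes", "atoms", "bonds", "angles", "dihedrals",
                  "impropers", "exclusions", "cmap"}


def rtp_residue_names(rtp_text):
    """Residue names declared as '[ NAME ]' headers in an .rtp file."""
    names = set()
    for raw in rtp_text.splitlines():
        line = raw.split(";", 1)[0].strip()  # drop ';' comments and whitespace
        if line.startswith("[") and line.endswith("]"):
            name = line[1:-1].strip()
            if name.lower() not in RTP_DIRECTIVES:
                names.add(name)
    return names


def pdb_residue_names(pdb_text):
    """Residue names from ATOM/HETATM records (fixed PDB columns 18-20)."""
    return {line[17:20].strip() for line in pdb_text.splitlines()
            if line.startswith(("ATOM", "HETATM"))}


def missing_residues(pdb_text, rtp_text):
    """Residue names in the PDB file with no matching .rtp entry."""
    return sorted(pdb_residue_names(pdb_text) - rtp_residue_names(rtp_text))


pdb_text = (
    "ATOM      1  N   ALA A   1      11.104   6.134  -6.504  1.00  0.00           N\n"
    "HETATM    2  C1  LIG A 101      12.000   7.000  -5.000  1.00  0.00           C\n"
)
rtp_text = """
[ bondedtypes ]
; bonds  angles  dihedrals  impropers
     1       5          9        2

[ ALA ]
 [ atoms ]
     N    NH1   -0.47   0
 [ bonds ]
     N    HN
"""
print(missing_residues(pdb_text, rtp_text))  # ['LIG']
```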

Q3: My simulation fails with an "Out of memory" error during analysis. What can I do?

A: This happens when the analysis tool requires more memory (RAM) than is available on your system [49].

- Root Cause: The memory cost of analysis scales with the number of atoms (N) and simulation length (T), sometimes as badly as N². Large systems or long trajectories can easily exhaust memory.

- Solution: