Optimizing NGS Variant Calling Pipelines: A Comprehensive Guide from Foundational Concepts to Clinical Validation


Abstract

This article provides researchers, scientists, and drug development professionals with a comprehensive framework for next-generation sequencing (NGS) variant calling pipelines. Covering foundational concepts through advanced applications, it explores critical pipeline components from raw data processing to clinical interpretation. The content delivers evidence-based comparisons of state-of-the-art tools like DRAGEN, DeepVariant, and GATK, alongside optimization strategies for improving accuracy in challenging genomic regions. With practical guidance on validation, benchmarking, and emerging trends in AI and multi-omics integration, this resource supports the implementation of robust, clinically reliable NGS workflows for precision medicine applications.

Demystifying NGS Variant Calling: Core Principles and Pipeline Architecture

The field of genomics has undergone a transformative revolution, moving from the painstaking, low-throughput methods of first-generation sequencing to the massively parallel, high-speed capabilities of next-generation sequencing (NGS). This paradigm shift has fundamentally altered the scale and scope of biological inquiry, enabling researchers to address questions that were previously impractical or impossible [1]. The seminal Human Genome Project, completed in 2003, relied on Sanger sequencing—a first-generation technology. This monumental international effort took 13 years and cost nearly $3 billion to produce the first complete sequence of a human genome [1]. In stark contrast, NGS technologies today can sequence an entire human genome in a matter of hours for under $1,000 [1]. This staggering reduction in time and cost has democratized access to genomic information, fueling advances in personalized medicine, cancer genomics, infectious disease surveillance, and fundamental biological research [2].

This document provides detailed application notes and protocols, framed within the context of a broader thesis on NGS data analysis pipelines for variant calling. Variant calling—the process of identifying differences between a sequenced sample and a reference genome—is a critical first step in extracting biological and clinical meaning from raw sequencing data [3]. The transition from Sanger to NGS has not only changed the laboratory workflow but has also created an immense computational challenge, necessitating the development of sophisticated bioinformatics pipelines, advanced algorithms, and, increasingly, artificial intelligence (AI) to manage and interpret the vast volumes of data generated [4] [3].

Table: Historical and Technical Comparison of Sanger vs. Next-Generation Sequencing

| Feature | Sanger Sequencing (First-Gen) | Next-Generation Sequencing (NGS) |
|---|---|---|
| Throughput Principle | Sequential processing of single DNA fragments. | Massively parallel processing of millions of fragments simultaneously [1]. |
| Typical Read Length | Long (500 - 1000 base pairs). | Short (50 - 600 base pairs for dominant short-read platforms) [1]. |
| Cost per Human Genome | ~$3 billion (circa 2003). | Under $1,000 (2025) [1]. |
| Time per Human Genome | ~13 years (Human Genome Project timeline). | ~Hours to days [1]. |
| Primary Applications | Targeted sequencing, validation of specific variants. | Whole-genome sequencing, exome sequencing, transcriptomics, epigenomics, metagenomics [2]. |
| Data Output per Run | Kilobases to megabases. | Gigabases to terabases [5]. |

NGS Technologies and Platform Specifications

The NGS landscape in 2025 is characterized by a diverse array of platforms employing different biochemical principles, each with distinct strengths tailored for specific research applications. These platforms are broadly categorized by their read length output: short-read and long-read technologies [5].

Short-read sequencing (50-600 base pairs) is dominated by Illumina's Sequencing by Synthesis (SBS) technology, which remains the industry gold standard for high-accuracy, high-throughput applications [1] [2]. The process involves library preparation, cluster generation on a flow cell, and cyclic addition of fluorescently labeled nucleotides. Competing short-read technologies, such as Ion Torrent's semiconductor sequencing, which detects pH changes, have also played a role in the market [2].

Long-read sequencing (thousands to millions of base pairs), often termed third-generation sequencing, is primarily represented by Pacific Biosciences (PacBio) and Oxford Nanopore Technologies (ONT). These platforms excel at resolving complex genomic regions, detecting large structural variants, and performing de novo genome assembly without the need for fragmentation [1] [5]. Recent chemistry breakthroughs have dramatically improved their accuracy. PacBio's HiFi (High-Fidelity) reads use circular consensus sequencing to achieve >99.9% accuracy from reads of 10-25 kilobases [5]. Oxford Nanopore's Q20+ and Q30 Duplex kits enable sequencing of both strands of a DNA molecule, pushing accuracy to over 99.9% for duplex reads while maintaining the capability for ultra-long reads [5].

Table: Comparison of Major NGS Platforms and Specifications (2025)

| Platform (Company) | Technology Type | Key Chemistry/Principle | Typical Read Length | Key Strength | Common Applications |
|---|---|---|---|---|---|
| NovaSeq X Series (Illumina) | Short-read | Sequencing-by-Synthesis (SBS) with reversible dye-terminators [2]. | 50-600 bp | Ultra-high throughput (up to 16 Tb/run), high accuracy (>99%) [5]. | Large cohort WGS/WES, population studies, RNA-seq. |
| Revio (PacBio) | Long-read | Single Molecule Real-Time (SMRT) sequencing with HiFi circular consensus [5]. | 10-25 kb (HiFi) | Long reads with very high accuracy (>99.9%) [5]. | De novo assembly, phasing, structural variant detection. |
| PromethION (Oxford Nanopore) | Long-read | Nanopore sensing of electrical current changes [2]. | Up to >1 Mb; commonly 10-100 kb. | Extremely long reads, real-time analysis, direct detection of modifications [5]. | Real-time surveillance, complex SV detection, direct RNA sequencing. |
| Onso (PacBio) | Short-read | Sequencing-by-Binding (SBB) [2]. | 100-200 bp | High accuracy, potentially lower cost per genome [2]. | Targeted sequencing, validation, flexible throughput. |
| Ion GeneStudio S5 (Thermo Fisher) | Short-read | Semiconductor (pH detection) [2]. | 200-400 bp | Fast run times, simple workflow. | Targeted gene panels, small genome sequencing. |

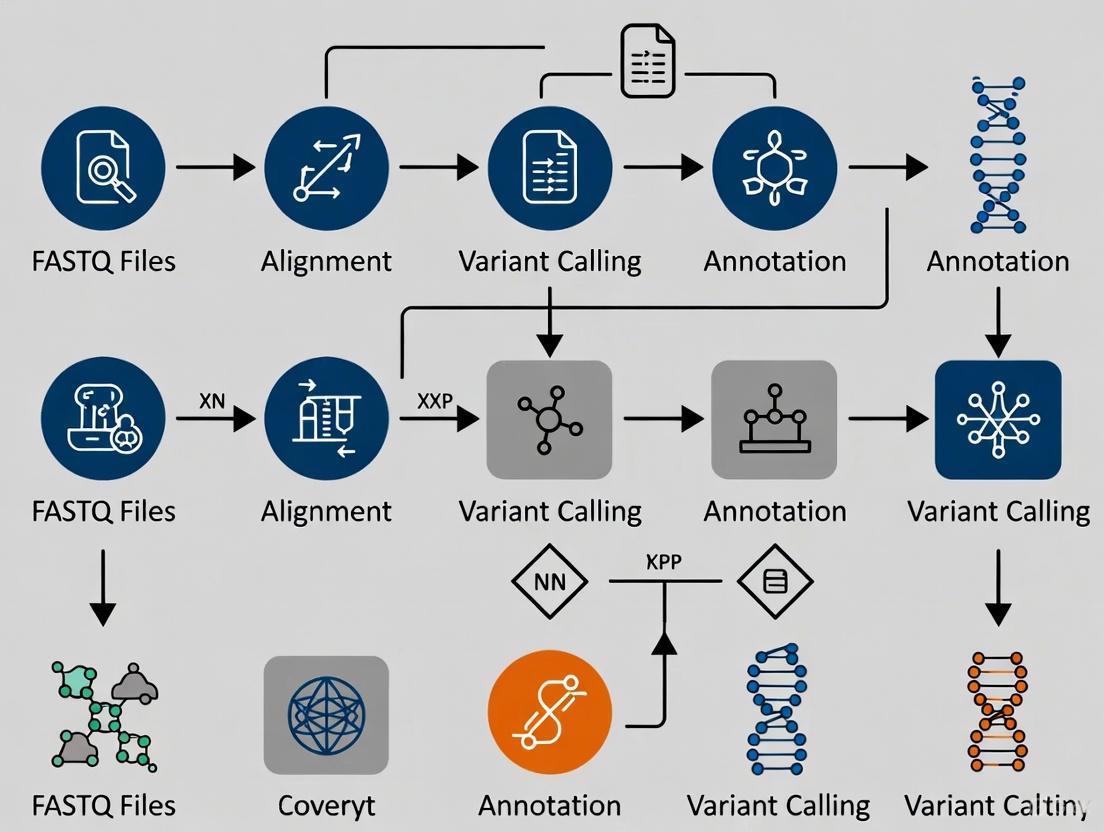

Diagram 1: Generalized NGS Workflow from Sample to Data

NGS Data Analysis Pipelines for Variant Calling

The raw output of an NGS instrument is a collection of FASTQ files containing millions of short DNA sequences (reads) and their corresponding quality scores. Transforming this data into biologically meaningful variant calls requires a multi-step computational pipeline. The standard reference-based variant calling pipeline involves: 1) Quality Control & Trimming, 2) Read Alignment/Mapping to a reference genome, 3) Post-Alignment Processing (e.g., duplicate marking, base quality recalibration), 4) Variant Calling to identify genomic positions that differ from the reference, and 5) Variant Filtering & Annotation [3].

A significant computational challenge arises from the massive scale of NGS data, especially for large cohorts. This has spurred innovation in distributed computing pipelines. For instance, a 2025 study introduced a scalable, distributed pipeline for reference-free variant calling using a De Bruijn graph constructed from sequencing reads [4]. This approach, implemented with the Apache Spark framework, partitions the graph across multiple machines using a specialized clustering algorithm, enabling efficient parallel processing of large datasets without the bottleneck of aligning all reads to a reference genome first [4].

The most transformative trend in variant calling is the integration of Artificial Intelligence (AI), particularly deep learning (DL). AI-based callers analyze sequencing data in novel ways—for example, by treating aligned reads as multi-channel images—to achieve superior accuracy, especially in complex genomic regions where traditional statistical models struggle [3].
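To make the "reads as images" idea concrete, the sketch below encodes a tiny pileup window as a nested list indexed by channel, read, and position. The three channels (base identity, base quality, strand), the scaling constants, and the `encode_pileup` helper are illustrative assumptions; this is not the encoding used by DeepVariant or any other caller.

```python
# Toy encoding of aligned reads as a multi-channel "image".
# Channel choice and scaling are illustrative assumptions only.

BASE_CODE = {"A": 0.25, "C": 0.5, "G": 0.75, "T": 1.0}


def encode_pileup(reads, window_start, window_len):
    """Encode reads as [channel][read][position]; uncovered cells stay 0.0.

    Each read is a dict with 'start' (reference position of its first base),
    'seq', 'quals' (integer Phred scores) and 'reverse' (strand flag).
    """
    n_reads = len(reads)
    channels = [
        [[0.0] * window_len for _ in range(n_reads)] for _ in range(3)
    ]
    for row, read in enumerate(reads):
        for offset, (base, qual) in enumerate(zip(read["seq"], read["quals"])):
            col = read["start"] + offset - window_start
            if 0 <= col < window_len:
                channels[0][row][col] = BASE_CODE.get(base, 0.0)  # base identity
                channels[1][row][col] = min(qual, 40) / 40.0      # base quality
                channels[2][row][col] = 1.0 if read["reverse"] else 0.5  # strand
    return channels


reads = [
    {"start": 100, "seq": "ACGT", "quals": [40, 40, 20, 10], "reverse": False},
    {"start": 102, "seq": "GTTA", "quals": [30, 30, 30, 30], "reverse": True},
]
tensor = encode_pileup(reads, window_start=100, window_len=6)
print(len(tensor), len(tensor[0]), len(tensor[0][0]))
```

A convolutional network would consume an array of this shape in the same way it consumes an RGB image, which is what lets image-classification architectures be reused for genotype classification.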

Table: Selected AI-Based Variant Calling Tools (2025)

| Tool | Underlying AI Model | Key Feature | Supported Data | Reported Advantage |
|---|---|---|---|---|
| DeepVariant [3] | Deep Convolutional Neural Network (CNN) | Treats read pileups as images for classification. | Short-read, PacBio HiFi, ONT. | High accuracy, used in large-scale projects like UK Biobank [3]. |
| DeepTrio [3] | Deep CNN (extends DeepVariant) | Jointly analyzes parent-child trios to improve accuracy and identify de novo mutations. | Short-read. | Superior accuracy for family-based studies compared to non-trio methods [3]. |
| DNAscope [3] | Machine Learning (ML) enhancement of core algorithms | Integrates ML-based genotyping with optimized HaplotypeCaller logic. | Short-read, PacBio HiFi, ONT. | High speed and computational efficiency without requiring GPU acceleration [3]. |
| Clair3 [3] | Deep Neural Network | Designed for efficient and accurate calling from both short and long reads. | Short-read, PacBio HiFi, ONT. | Fast performance and good accuracy at lower sequencing coverages [3]. |

Diagram 2: Standard Reference-Based Variant Calling Pipeline

Detailed Experimental Protocols

Protocol: Reference-Based Germline SNP/Indel Calling using an AI-Based Pipeline

This protocol outlines the steps for identifying germline single nucleotide polymorphisms (SNPs) and small insertions/deletions (Indels) from whole-genome sequencing data using the DeepVariant pipeline, an accurate AI-based tool [3].

1. Preliminary Setup and Quality Control

- Input: Paired-end FASTQ files (e.g., `sample_R1.fastq.gz`, `sample_R2.fastq.gz`).

- Software: Install `fastp`, `samtools`, `DeepVariant`, and associated dependencies. Ensure access to a high-quality reference genome (e.g., GRCh38) and its index.

- Quality Control: Run `fastp` to perform adapter trimming, quality filtering, and generate QC reports.

2. Read Alignment

- Align trimmed reads to the reference genome using `bwa mem`. Convert the output SAM file to BAM format, sort it, and index it.

3. Post-Alignment Processing (Optional but Recommended)

- Mark PCR duplicate reads using `samtools markdup` or Picard Tools. This step is crucial for reducing artifacts in downstream variant calling.

4. Variant Calling with DeepVariant

- Run DeepVariant in its recommended mode. This involves creating examples (pileup images of candidate sites) from the BAM file and running the TensorFlow model to make variant calls.

- The output is a VCF file (`sample.deepvariant.vcf.gz`) containing unfiltered variant calls with genotype qualities.

5. Variant Filtering and Annotation

- Basic Filtering: While DeepVariant outputs high-quality calls, additional hard-filtering based on quality metrics (e.g., `QUAL`, `DP`, `GQ`) can be applied using `bcftools`.

- Annotation: Use tools like `SnpEff` or Ensembl's VEP to annotate variants with functional consequences (e.g., missense, stop-gain), population frequencies, and links to disease databases.
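As an illustration of what hard-filtering on `QUAL`, `DP`, and `GQ` amounts to, the following minimal sketch applies the three checks to single-sample VCF data lines in plain Python. The thresholds (20, 10, 20) and the `passes_hard_filter` helper are assumptions chosen for the example, not recommended values; in practice the same logic would normally be expressed as a `bcftools` filter.

```python
# Minimal hard filter on single-sample VCF data lines.
# Thresholds are illustrative assumptions, not recommended defaults.

def parse_sample(format_field, sample_field):
    """Pair FORMAT keys (e.g. GT:GQ:DP) with the sample's values."""
    return dict(zip(format_field.split(":"), sample_field.split(":")))


def passes_hard_filter(vcf_line, min_qual=20.0, min_dp=10, min_gq=20):
    """Return True if the record meets all three thresholds.

    Records with missing values ('.') or absent keys are treated as failing.
    """
    fields = vcf_line.rstrip("\n").split("\t")
    sample = parse_sample(fields[8], fields[9])
    try:
        return (
            float(fields[5]) >= min_qual
            and int(sample["DP"]) >= min_dp
            and int(sample["GQ"]) >= min_gq
        )
    except (KeyError, ValueError):
        return False


records = [
    "chr1\t1000\t.\tA\tG\t45.2\tPASS\t.\tGT:GQ:DP\t0/1:40:30",
    "chr1\t2000\t.\tC\tT\t8.1\tPASS\t.\tGT:GQ:DP\t0/1:7:12",
    "chr1\t3000\t.\tG\tA\t50.0\tPASS\t.\tGT:GQ:DP\t1/1:.:25",
]
kept = [r for r in records if passes_hard_filter(r)]
print(len(kept))
```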

Protocol: Executing a Distributed, Reference-Free Variant Calling Pipeline

This protocol summarizes the method from the 2025 study on scalable, reference-free variant calling for isolated SNPs using a distributed De Bruijn graph on an Apache Spark cluster [4].

1. Input and Environment Setup

- Input: A large collection of raw sequencing reads (FASTQ) from multiple samples.

- Environment: Set up an Apache Spark cluster with multiple worker nodes. Ensure all necessary libraries (e.g., for graph processing) are available.

2. Graph Construction and Partitioning

- k-mer Extraction: The pipeline begins by extracting all k-mers (substrings of length k) from the input reads across all samples in a distributed manner (Map step).

- De Bruijn Graph Assembly: Distinct k-mers are used as vertices. Edges are created between k-mers that overlap by k-1 bases. This constructs a massive, distributed De Bruijn graph representing the collective sequence data.

- Optimized Partitioning: Instead of using Spark's default hash partitioning, the pipeline employs a custom clustering algorithm based on hierarchical clustering and the Jaccard index. This algorithm groups structurally related vertices (i.e., those likely part of the same genomic region) onto the same physical computing node. This minimizes communication overhead between nodes during traversal, which is the key performance innovation [4].
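The Jaccard index at the heart of the partitioning step is simple to state in code. The sketch below computes it between the k-mer sets of three short sequences; how the study applies the index to graph vertices and feeds it into hierarchical clustering is not detailed here, so the `kmer_set` and `jaccard` helpers and the example sequences are assumptions for illustration only.

```python
# Jaccard similarity between k-mer sets: |A & B| / |A | B|.
# Helper names and example sequences are illustrative assumptions.

def kmer_set(seq, k):
    """Return the set of distinct k-mers in a sequence."""
    return {seq[i:i + k] for i in range(len(seq) - k + 1)}


def jaccard(a, b):
    """Jaccard index of two sets; defined as 0.0 when both are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


k = 4
r1 = kmer_set("ACGTACGA", k)
r2 = kmer_set("CGTACGAT", k)  # overlaps r1 by all but one k-mer
r3 = kmer_set("TTTTGGGG", k)  # unrelated sequence

print(jaccard(r1, r2), jaccard(r1, r3))
```

Items with a high index share most of their k-mers and are therefore good candidates for co-location on the same node, which is the intuition behind grouping structurally related vertices.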

3. Bubble Detection for SNP Identification

- Parallel Graph Traversal: The pipeline traverses the partitioned graph in parallel across all cluster nodes.

- Bubble Recognition: The algorithm searches for specific subgraph patterns called "simple bubbles"—two parallel paths between a common start and end vertex. In a De Bruijn graph, such bubbles often represent alternative alleles at a single genomic locus (i.e., a SNP) [4].

- Variant Output: Each identified bubble is processed to output the candidate isolated SNP, including its sequence context.
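The graph construction and bubble search can be sketched on a single machine as follows. This is a toy, non-distributed illustration under simplifying assumptions (error-free reads, no repeats, no reverse complements, no in-degree checks); the function names are hypothetical and it is not the Spark implementation from the study. Vertices are k-mers, edges join k-mers that overlap by k-1 bases, and an isolated SNP shows up as two branches of k vertices each that rejoin at a common vertex.

```python
# Toy De Bruijn graph with simple-bubble (isolated SNP) detection.
# Single-machine sketch; assumes error-free reads and no repeats.

def build_graph(reads, k):
    """Map each k-mer to the k-mers that follow it with a (k-1)-base overlap."""
    nodes = {read[i:i + k] for read in reads for i in range(len(read) - k + 1)}
    return {
        node: [node[1:] + b for b in "ACGT" if node[1:] + b in nodes]
        for node in nodes
    }


def walk(succ, start, max_nodes):
    """Follow unambiguous edges from start, collecting at most max_nodes."""
    path = [start]
    while len(path) < max_nodes and len(succ[path[-1]]) == 1:
        path.append(succ[path[-1]][0])
    return path


def spell(start_node, path):
    """Spell the sequence of a path that leaves start_node."""
    return start_node + "".join(node[-1] for node in path)


def simple_snp_bubbles(succ, k):
    """Return (allele_a, allele_b) sequence contexts for each simple bubble."""
    bubbles = []
    for node in sorted(succ):
        branches = succ[node]
        if len(branches) != 2:
            continue
        a = walk(succ, branches[0], k + 1)
        b = walk(succ, branches[1], k + 1)
        # An isolated SNP gives k distinct vertices per branch, then a shared one.
        if len(a) == k + 1 and len(b) == k + 1 and a[-1] == b[-1]:
            bubbles.append((spell(node, a), spell(node, b)))
    return bubbles


reads = ["TAACCGGTTACG", "TAACCAGTTACG"]  # differ at one position (G vs A)
graph = build_graph(reads, k=4)
print(simple_snp_bubbles(graph, k=4))
```

The two spelled paths differ at exactly one base, which is the candidate SNP together with its flanking sequence context.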

4. Performance Consideration

- The study reported that this specialized partitioning strategy yielded a notable speed-up over standard partitioning techniques, making large-scale reference-free variant calling computationally feasible [4].

The Scientist's Toolkit: Research Reagent Solutions

A successful NGS experiment requires careful selection of reagents and tools at each stage. The following table details key components for a typical variant calling research project.

Table: Essential Research Toolkit for NGS-Based Variant Calling Studies

| Category | Item/Reagent | Function & Importance | Example/Note |
|---|---|---|---|
| Library Prep | Fragmentation Enzyme/Kit | Randomly shears DNA to optimal size for sequencing. Critical for library complexity and even coverage. | Acoustic shearing (Covaris) or enzymatic fragmentation (NEB Next Ultra II). |
| Library Prep | Adapter Ligation Kit | Attaches platform-specific oligonucleotide adapters to DNA fragments. Enables binding to the flow cell and PCR amplification. | Illumina-compatible forked adapters with sample index barcodes for multiplexing. |
| Library Prep | PCR Enrichment Mix | Amplifies adapter-ligated DNA to create the final sequencing library. Polymerase fidelity impacts error rates. | High-fidelity, low-bias PCR enzymes (e.g., KAPA HiFi). |
| Sequencing | Sequencing Kit & Flow Cell | Contains enzymes, buffers, and fluorescent nucleotides for the sequencing reaction. The flow cell is the consumable surface where clustering and sequencing occur. | Illumina NovaSeq X Series 25B Reagent Kit (NovaSeq X); S1/S2 flow cells (NovaSeq 6000). |
| Data Analysis | Primary Analysis Software | Performs base calling and demultiplexing on the instrument computer. Converts raw images to FASTQ files. | Illumina DRAGEN, onboard RTA software. |
| Data Analysis | Variant Calling Pipeline | Core software for identifying genetic variants. Choice depends on required accuracy, speed, and data type. | AI-Based: DeepVariant [3]. Traditional: GATK HaplotypeCaller. Distributed: Custom Apache Spark pipeline [4]. |
| Data Analysis | Variant Annotation Database | Provides biological context (gene, effect, frequency) to raw variant calls. Essential for interpretation. | Local installations of SnpEff/Ensembl VEP with custom databases (gnomAD, ClinVar). |
| Computational | Workflow Management System | Automates, documents, and ensures reproducibility of multi-step analysis pipelines. | Nextflow, Snakemake [6]. |
| Computational | Containerization Platform | Packages software and dependencies into isolated, portable units to guarantee consistent execution environments. | Docker, Singularity [6]. |

Economic Impact and Market Trajectory

The widespread adoption of NGS has created a substantial and rapidly growing market for both sequencing services and, critically, the informatics solutions required to analyze the data. The global NGS data analysis market was valued at approximately $5.91 billion in 2025 and is projected to advance at a compound annual growth rate (CAGR) of 16.7%, reaching $14.93 billion by 2033 [7]. Similarly, the broader NGS informatics market is expected to grow from $7.21 billion in 2024 to $25.43 billion by 2035 (CAGR of 12.15%) [8].

This growth is driven by several key factors:

- Expanding Clinical Adoption: NGS is moving from research into routine clinical diagnostics for oncology, rare diseases, and pharmacogenomics [7].

- Government Initiatives: Large-scale national genomics projects (e.g., UK's 100,000 Genomes Project) fuel demand [7].

- Rising Prevalence of Chronic Diseases: Increased focus on cancer and genetic disorders necessitates genomic analysis for personalized treatment strategies [8].

- Technological Advancements: The integration of AI and cloud computing is making analysis faster, more accurate, and more accessible, further propelling market expansion [9].

Geographically, North America currently holds the largest market share due to strong infrastructure and significant investment [8]. However, the Asia-Pacific region is anticipated to be the fastest-growing market, driven by increasing healthcare expenditure, large populations, and rising research funding [8].

The NGS revolution continues to accelerate, with several key trends shaping its future trajectory within variant calling research:

- Convergence of Sequencing Technologies: The distinction between short- and long-read platforms is blurring. Companies are developing multi-omics chemistries (like PacBio's SPRQ for simultaneous sequence and chromatin accessibility detection [5]) and hybrid platforms to offer more comprehensive solutions.

- Ubiquity of AI and Machine Learning: AI will move beyond variant calling to integrate across the entire analysis workflow, including quality control, alignment optimization, and, most importantly, the biological interpretation of variant lists to predict clinical significance [6] [9].

- Democratization and Cloud-Native Analysis: Cloud-based platforms (AWS HealthOmics, Google Cloud Life Sciences) and serverless computing will become the default for many researchers, eliminating local infrastructure barriers and facilitating global collaboration [6]. This must be paired with robust security protocols to protect sensitive genetic data [9].

- Shift to Pangenome References: The use of a single linear reference genome (like GRCh38) will increasingly be supplemented by graph-based pangenome references that incorporate population variation. This will improve alignment accuracy in diverse populations and variant discovery [4] [6].

In conclusion, the journey from Sanger sequencing to high-throughput NGS has unlocked the genomic era. For researchers focused on variant calling, this means navigating a landscape of ever-evolving sequencing technologies, leveraging sophisticated distributed computing pipelines to manage data scale, and employing cutting-edge AI tools to achieve unprecedented accuracy. The future lies in seamlessly integrating these technological advances into robust, reproducible, and accessible analysis workflows. This will fully realize the promise of genomics in driving discoveries in basic biology and delivering on the goals of precision medicine, ultimately transforming how we understand, diagnose, and treat disease.

The translation of raw next-generation sequencing (NGS) data into reliable variant calls constitutes the foundational pillar of modern genomic research and precision medicine. Within the context of a thesis on NGS data analysis pipelines for variant calling, this document establishes that the reproducibility and accuracy of downstream biological insights are inextricably linked to the robustness of the preprocessing and analysis workflow. The transition from FASTQ files, containing raw sequence reads and quality scores, to the final Variant Call Format (VCF) file, is a multi-step computational process where each stage introduces specific biases and errors that can propagate [10]. In clinical and drug development settings, where variants inform diagnoses and therapeutic strategies, non-reproducible results from non-standardized pipelines present a significant challenge [11]. Therefore, a detailed understanding of each essential component—from quality control and alignment to variant calling and filtering—is not merely a technical exercise but a critical scientific requirement for ensuring data integrity, enabling valid cross-study comparisons, and ultimately, supporting confident clinical decision-making [12] [13].

Core Components of the NGS Variant Calling Pipeline

The canonical pipeline for DNA variant discovery follows a logical progression where the output of one stage becomes the input for the next. The following breakdown details the function, key tools, and objectives of each essential component.

1.1. Raw Data & Quality Control (FASTQ)

The primary outputs from NGS instruments are FASTQ files, which contain nucleotide sequences for each read and a corresponding per-base quality score (Phred score). The initial Quality Control (QC) step is crucial for identifying issues arising from the sequencing process itself, such as diminishing quality over read length, adapter contamination, or abnormal nucleotide composition. FastQC is the ubiquitous tool for this stage, providing an overview of multiple quality metrics [10]. Findings from QC often necessitate trimming or adapter removal using tools like Trimmomatic or Cutadapt to eliminate low-quality bases or technical sequences before alignment, thereby reducing false mappings and subsequent variant errors [14].
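For readers unfamiliar with Phred encoding, the short sketch below decodes a FASTQ quality string (Phred+33, the encoding used by current Illumina output) into scores and error probabilities via P = 10^(-Q/10), then trims low-quality bases from the 3' end. The trimming rule and the threshold of 20 are deliberately naive assumptions; tools such as Trimmomatic use more elaborate sliding-window strategies.

```python
# Decode Phred+33 quality strings and apply a naive 3' quality trim.
# The trimming rule and threshold are simplified assumptions.

def phred_scores(quality_string, offset=33):
    """Convert an ASCII quality string to integer Phred scores."""
    return [ord(ch) - offset for ch in quality_string]


def error_probability(q):
    """Probability that a base call with Phred score q is wrong."""
    return 10 ** (-q / 10)


def trim_3prime(seq, quals, min_q=20):
    """Drop trailing bases whose Phred score is below min_q."""
    end = len(seq)
    while end > 0 and quals[end - 1] < min_q:
        end -= 1
    return seq[:end]


seq = "ACGTAC"
quals = phred_scores("IIII5!")  # 'I' -> 40, '5' -> 20, '!' -> 0
print(quals)
print([error_probability(q) for q in quals])
print(trim_3prime(seq, quals))
```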

1.2. Read Alignment (BAM/SAM)

In this step, cleaned sequence reads are mapped to a reference human genome (e.g., GRCh38). The goal is to determine the genomic origin of each read, accounting for sequencing errors and genuine genetic differences. The Burrows-Wheeler Aligner (BWA-MEM) is a widely adopted, efficient aligner for short reads [12]. The alignment results are stored in Sequence Alignment/Map (SAM) format or its compressed binary version (BAM). SAMtools provides essential utilities for manipulating, sorting, and indexing these files [14]. The integrity and accuracy of the alignment file (BAM) are paramount, as all subsequent variant detection is based on its contents.

1.3. Post-Alignment Processing & Refinement

The initial BAM file requires further processing to correct for technical artifacts before variant calling. This stage, often guided by the GATK Best Practices, includes:

- Duplicate Marking: Identification and tagging of PCR duplicate reads (using Picard or SAMtools) that arose from clonal amplification during library preparation, which can bias variant allele frequencies [12].

- Base Quality Score Recalibration (BQSR): A machine-learning technique (GATK BaseRecalibrator) that systematically corrects inaccuracies in the per-base quality scores assigned by the sequencer. Known variant sites (e.g., dbSNP) are masked so that real polymorphisms are not counted as sequencing errors when the error model is built [15].

- Local Realignment: Correction of alignment artifacts, particularly around small insertions and deletions (indels), to prevent false-positive variant calls [10]. This was a separate step in GATK3; it was dropped from GATK4 because assembly-based callers such as HaplotypeCaller and Mutect2 reassemble reads locally.

Following these steps yields an analysis-ready BAM file.
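The core idea of duplicate marking can be shown in a few lines. The sketch below groups reads by chromosome, start position, and strand, keeps the read with the highest summed base quality in each group, and flags the rest. This is a simplified assumption-laden illustration with a hypothetical `mark_duplicates` helper; Picard and SAMtools additionally account for mate positions, soft clipping, and library identity.

```python
# Simplified duplicate marking: same chrom/pos/strand => duplicate group.
# Real tools also use mate coordinates, clipping and library information.
from collections import defaultdict


def mark_duplicates(reads):
    """Return the names of reads flagged as duplicates.

    Each read is a dict with 'name', 'chrom', 'pos', 'reverse', 'quals'.
    Within a group, the read with the highest summed quality is kept.
    """
    groups = defaultdict(list)
    for read in reads:
        groups[(read["chrom"], read["pos"], read["reverse"])].append(read)

    duplicates = set()
    for group in groups.values():
        best = max(group, key=lambda r: sum(r["quals"]))
        duplicates.update(r["name"] for r in group if r is not best)
    return duplicates


reads = [
    {"name": "r1", "chrom": "chr1", "pos": 500, "reverse": False, "quals": [30, 30, 30]},
    {"name": "r2", "chrom": "chr1", "pos": 500, "reverse": False, "quals": [38, 38, 38]},
    {"name": "r3", "chrom": "chr1", "pos": 500, "reverse": True, "quals": [20, 20, 20]},
    {"name": "r4", "chrom": "chr1", "pos": 742, "reverse": False, "quals": [35, 35, 35]},
]
print(sorted(mark_duplicates(reads)))
```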

1.4. Variant Calling (VCF)

Variant calling algorithms interrogate the processed BAM file to identify genomic positions that differ from the reference. Callers are specialized for different biological contexts and variant types. For germline variants (inherited), GATK HaplotypeCaller is a standard tool that uses a local de novo assembly approach to accurately call SNPs and indels [12]. For somatic variants (acquired, as in cancer), callers compare a tumor sample to a matched normal sample. Mutect2 (part of GATK) and VarScan2 are commonly used somatic callers, each employing different statistical models [16]. The output is a VCF file listing genomic coordinates, reference and alternate alleles, quality scores, and sample-specific genotype information.
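To give a feel for the statistics behind germline genotyping, the sketch below scores the three diploid genotypes at a biallelic site with a plain binomial model of read counts. It is a textbook simplification with an assumed uniform error rate of 1% and hypothetical helper names; HaplotypeCaller and the other callers named above use considerably richer models (per-base qualities, haplotype assembly, priors).

```python
# Binomial genotype likelihoods from reference/alternate read counts.
# Textbook simplification; error rate and helper names are assumptions.
import math


def genotype_log_likelihoods(ref_count, alt_count, error_rate=0.01):
    """log10 P(reads | genotype) for 0/0, 0/1 and 1/1.

    The binomial coefficient is omitted because it is identical for all
    genotypes and cancels when they are compared.
    """
    p_alt = {"0/0": error_rate, "0/1": 0.5, "1/1": 1.0 - error_rate}
    return {
        gt: alt_count * math.log10(p) + ref_count * math.log10(1.0 - p)
        for gt, p in p_alt.items()
    }


def call_genotype(ref_count, alt_count, error_rate=0.01):
    """Return the most likely genotype and Phred-scaled relative likelihoods."""
    ll = genotype_log_likelihoods(ref_count, alt_count, error_rate)
    best = max(ll, key=ll.get)
    scaled = {gt: round(-10 * (value - ll[best])) for gt, value in ll.items()}
    return best, scaled


for ref, alt in [(20, 0), (10, 9), (0, 15)]:
    print(ref, alt, call_genotype(ref, alt))
```

The Phred-scaled values are similar in spirit to the `PL` field of a VCF record: the best genotype scores 0 and larger numbers mean less support.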

1.5. Variant Filtering, Annotation & Interpretation

Raw VCF files contain both true biological variants and false positives. Hard filtering (e.g., based on depth, quality, strand bias) or variant quality score recalibration (VQSR) in GATK is applied to refine the call set [12]. Subsequently, annotation tools like SnpEff or VEP add biological context, predicting the functional impact (e.g., missense, stop-gained), population frequency from databases like gnomAD, and links to disease (e.g., ClinVar) [17]. This annotated VCF is the final product for researcher interpretation, visualization in browsers like IGV, and clinical reporting [16].

Table 1: Essential Software Tools for Each Pipeline Stage

| Pipeline Stage | Exemplar Tools | Primary Function | Key Output |
|---|---|---|---|
| Quality Control | FastQC [10], Trimmomatic [14] | Assess read quality; remove adapters/low-quality bases. | Trimmed, high-quality FASTQ files. |
| Read Alignment | BWA-MEM [12], Bowtie2 | Map sequencing reads to a reference genome. | Sorted, indexed BAM/SAM file. |
| Alignment Processing | Picard MarkDuplicates [12], GATK (BQSR, Realigner) [15] | Mark PCR duplicates; recalibrate base qualities; realign indels. | Analysis-ready BAM file. |
| Variant Calling | Germline: GATK HaplotypeCaller [12], FreeBayes [18]. Somatic: Mutect2 [16], VarScan2 [16], Strelka. | Identify genomic variants relative to the reference. | Raw VCF file. |
| Variant Refinement | GATK VariantFiltration [12], BCFtools [10] | Filter variants based on quality metrics. | Filtered VCF file. |
| Annotation | SnpEff [18], VEP [17], ANNOVAR | Add functional, population, and clinical data to variants. | Annotated VCF file. |

Detailed Experimental Protocols

2.1. Protocol: Germline Variant Calling via GATK Best Practices Workflow

This protocol outlines a standardized pipeline for identifying inherited SNPs and indels from a single sample, using tools compatible with high-performance computing clusters.

- Input: Paired-end FASTQ files (`sample_R1.fastq.gz`, `sample_R2.fastq.gz`), human reference genome FASTA (`GRCh38.fa`) and its pre-built BWA index, known variant sites VCF (`dbsnp_146.grch38.vcf`).

- Software: BWA (0.7.17), SAMtools (1.9), Picard (2.25.0), GATK (4.2.0).

- Step-by-Step Commands:

  - Map Reads: `bwa mem -M -R "@RG\tID:sample\tPL:ILLUMINA\tSM:sample" GRCh38.fa sample_R1.fastq.gz sample_R2.fastq.gz > aligned.sam`

  - Sort & Convert to BAM: `samtools sort -o sorted.bam aligned.sam`, then `samtools index sorted.bam`

  - Mark Duplicates: `java -jar picard.jar MarkDuplicates I=sorted.bam O=dedupped.bam M=metrics.txt`

  - Base Quality Recalibration: First, build the recalibration model: `gatk BaseRecalibrator -I dedupped.bam -R GRCh38.fa --known-sites dbsnp_146.grch38.vcf -O recal_data.table`. Then, apply it: `gatk ApplyBQSR -I dedupped.bam -R GRCh38.fa --bqsr-recal-file recal_data.table -O recalibrated.bam`

  - Variant Calling: `gatk HaplotypeCaller -R GRCh38.fa -I recalibrated.bam -O raw_variants.vcf`

  - Variant Filtering (SNPs): The thresholds below are SNP-specific, so first restrict the call set to SNPs: `gatk SelectVariants -R GRCh38.fa -V raw_variants.vcf --select-type-to-include SNP -O raw_snps.vcf`. Then apply the recommended hard filters: `gatk VariantFiltration -R GRCh38.fa -V raw_snps.vcf --filter-expression "QD < 2.0 || FS > 60.0 || MQ < 40.0 || MQRankSum < -12.5 || ReadPosRankSum < -8.0" --filter-name "SNP_FILTER" -O filtered_snps.vcf`

- Output: A filtered VCF file (`filtered_snps.vcf`) containing high-confidence germline SNP calls for the sample; records that fail are flagged `SNP_FILTER` in the FILTER column rather than removed [12] [15].
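The hard-filter expression used in this protocol can be read as five independent threshold checks joined by OR. The sketch below evaluates them against the INFO field of a VCF record. The thresholds come from the expression in the protocol; the `failing_rules` helper and the decision to skip annotations that are absent from a record are assumptions made for this illustration and may differ from how GATK handles missing values.

```python
# Evaluate the SNP hard-filter thresholds against a VCF INFO field.
# Skipping absent annotations is an assumption of this sketch.

SNP_RULES = [
    ("QD", "<", 2.0),
    ("FS", ">", 60.0),
    ("MQ", "<", 40.0),
    ("MQRankSum", "<", -12.5),
    ("ReadPosRankSum", "<", -8.0),
]


def parse_info(info_field):
    """Parse 'KEY=VALUE;KEY=VALUE' pairs, ignoring flag-only entries."""
    info = {}
    for item in info_field.split(";"):
        if "=" in item:
            key, value = item.split("=", 1)
            info[key] = value
    return info


def failing_rules(info):
    """Return the annotations whose values trip a threshold."""
    failed = []
    for key, op, threshold in SNP_RULES:
        if key not in info:
            continue  # annotation absent: not evaluated in this sketch
        value = float(info[key])
        if (op == "<" and value < threshold) or (op == ">" and value > threshold):
            failed.append(key)
    return failed


for info_field in [
    "DP=30;QD=1.5;FS=3.2;MQ=60.0",
    "QD=12.0;FS=75.1;MQ=35.0",
    "QD=20.0;FS=1.0;MQ=60.0;MQRankSum=0.1",
]:
    failed = failing_rules(parse_info(info_field))
    print("SNP_FILTER" if failed else "PASS", failed)
```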

2.2. Protocol: Somatic Variant Discovery Using a Three-Caller Consensus Approach

To maximize sensitivity and specificity in cancer genomics, a consensus approach combining multiple callers is recommended [16]. This protocol uses matched tumor-normal pairs.

- Input: Analysis-ready BAM files for tumor (`tumor.bam`) and matched normal (`normal.bam`), reference genome (`GRCh38.fa`), panel of normals VCF (PoN), and germline resource (e.g., `af-only-gnomad.vcf`).

- Software: Mutect2 (GATK 4.2.0), VarScan2 (2.4.3), Strelka2 (2.9.10), BCFtools.

- Step-by-Step Commands:

  - Call variants with Mutect2: `gatk Mutect2 -R GRCh38.fa -I tumor.bam -I normal.bam --tumor-sample TUMOR --normal-sample NORMAL --panel-of-normals pon.vcf.gz --germline-resource af-only-gnomad.vcf.gz -O mutect2_unfiltered.vcf`. Then assign filter status to the raw calls: `gatk FilterMutectCalls -R GRCh38.fa -V mutect2_unfiltered.vcf -O mutect2_filtered.vcf`

  - Call variants with VarScan2: First, generate an mpileup with the normal sample listed before the tumor, which is the order VarScan expects: `samtools mpileup -q 1 -f GRCh38.fa normal.bam tumor.bam > normal_tumor.mpileup`. Then run VarScan: `varscan somatic normal_tumor.mpileup varscan_output --mpileup 1 --output-vcf 1`

  - Call variants with Strelka2: Configure for the BAM files: `configureStrelkaSomaticWorkflow.py --tumorBam tumor.bam --normalBam normal.bam --referenceFasta GRCh38.fa --runDir strelka_workflow`. Then execute: `strelka_workflow/runWorkflow.py -m local -j 8`

  - Intersect Callers: Extract PASS variants from each caller's VCF, then bgzip-compress and index the files, since `bcftools isec` requires compressed, indexed inputs. Use `bcftools isec` to find variants identified by at least two of the three callers: `bcftools isec -p output_dir -n +2 mutect2_filtered.vcf.gz varscan_filtered.vcf.gz strelka_snvs.vcf.gz`

- Output: A high-confidence somatic VCF file derived from the intersection of multiple callers, significantly reducing false positives while maintaining sensitivity [11] [16].
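The "at least two of three callers" rule is a simple vote over variant keys. As a minimal sketch, assuming each caller's PASS calls have already been reduced to `(chrom, pos, ref, alt)` tuples (the variant coordinates and helper names below are made up for illustration), the consensus and the caller-private calls can be computed like this:

```python
# Majority-vote consensus over caller outputs keyed by (chrom, pos, ref, alt).
# Example variants and helper names are hypothetical.
from collections import Counter


def support_counts(call_sets):
    """Count how many callers report each variant."""
    return Counter(v for calls in call_sets.values() for v in set(calls))


def consensus(call_sets, min_callers=2):
    """Variants reported by at least min_callers callers."""
    return {v for v, n in support_counts(call_sets).items() if n >= min_callers}


def private_calls(call_sets):
    """Variants reported by exactly one caller."""
    return {v for v, n in support_counts(call_sets).items() if n == 1}


call_sets = {
    "mutect2": {("chr1", 100, "A", "T"), ("chr2", 200, "G", "C"), ("chr3", 300, "C", "T")},
    "varscan2": {("chr1", 100, "A", "T"), ("chr2", 200, "G", "C"), ("chr4", 400, "T", "G")},
    "strelka2": {("chr1", 100, "A", "T"), ("chr5", 500, "G", "A")},
}
print(sorted(consensus(call_sets)))
print(sorted(private_calls(call_sets)))
```

Note that exact-key matching treats differently represented indels as distinct variants, so normalizing the VCFs before voting is advisable.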

Quantitative Analysis of Variant Caller Performance

Empirical data underscores the critical impact of tool selection on research outcomes. A study comparing three variant callers on the same 105 breast cancer tumor samples (without matched normal) revealed stark differences in output [11].

Table 2: Comparative Output of Three Variant Callers on 105 Breast Cancer Samples

| Variant Caller | Total Variants Called (Aggregate) | Average Variants Per Sample | ClinVar Significant Variants (Aggregate) | Notable Clinical Examples Missed by Other Callers [11] |
|---|---|---|---|---|
| GATK HaplotypeCaller | 25,130 | 4,152.4 | 1,491 | Pathogenic/likely pathogenic variants in ABCA4 (associated with macular degeneration) and CFTR (target of FDA-approved drugs). |
| VarScan2 | 16,972 | 2,925.3 | 1,400 | Variants in DHCR7 (linked to Smith-Lemli-Opitz syndrome). |
| Mutect2 (Tumor-Only Mode) | 4,232 | 159.2 | 321 | Variants in CYP4V2 (associated with Bietti crystalline dystrophy). |

Key Finding: An average of 16.5% of clinically significant (ClinVar) variants were detected by only one of the three callers, highlighting the risk of relying on a single algorithm [11].

Integrated Workflow Diagram: From FASTQ to Annotated VCF

NGS Pipeline Main Workflow: FASTQ to VCF

Beyond software, a reliable pipeline requires curated data resources and quality control measures.

Table 3: Key Research Reagent Solutions for Pipeline Implementation

| Category | Resource/Reagent | Function & Purpose | Source / Example |

|---|---|---|---|

| Reference Standards | Genome in a Bottle (GIAB) Benchmark Sets [12] | Provides "ground truth" variant calls for reference samples (e.g., NA12878) to validate and benchmark pipeline accuracy. | NIST (National Institute of Standards and Technology) |

| Reference Data | dbSNP Database [15], gnomAD [15] | Curated databases of known human genetic variants and population frequencies, used for BQSR, filtering, and annotation. | NCBI, Broad Institute |

| Clinical Annotation | ClinVar Database [11] | Public archive of reports linking human genetic variants to observed health status (pathogenic, benign, etc.). | NCBI |

| Quality Control | Picard Metrics (HsMetrics, AlignmentSummary) [13] | Suite of tools to generate quantitative metrics (e.g., coverage uniformity, insert size) for verifying library prep and sequencing quality. | Broad Institute |

| Validation | Synthetic Diploid (Syndip) Benchmark [12] | A less biased benchmarking dataset derived from long-read assemblies, useful for evaluating performance in complex genomic regions. | Available via sequencing repositories |

| Containerization | Docker/Singularity Containers | Packages the entire pipeline software environment to support reproducibility and portability across computing platforms. | Container registries (Docker Hub, Biocontainers) |

The advent of Next-Generation Sequencing (NGS) has revolutionized genomics, enabling the rapid, high-throughput analysis of DNA and RNA to drive progress in cancer research, rare disease diagnosis, and personalized medicine [19]. The transformation of raw sequencing signals into actionable biological insights hinges on a robust and reproducible bioinformatics pipeline. This pipeline is universally structured around three critical, sequential data processing steps: Quality Control (QC), Alignment (Mapping), and Variant Calling [19]. The integrity of each step is paramount, as errors introduced early in the workflow propagate and magnify, potentially leading to false conclusions in downstream analyses. This thesis frames these technical steps within the broader context of constructing reliable NGS analysis pipelines for variant discovery research, emphasizing standardized protocols, performance metrics, and the emerging integration of artificial intelligence (AI) to enhance accuracy and scalability [20] [3].

Primary Analysis and Data Quality Control

Primary Analysis constitutes the first computational assessment of raw sequencing data, typically performed by the instrument's software or dedicated pipelines like bcl2fastq. This step translates raw signal data (e.g., .bcl files from Illumina platforms) into nucleotide sequences, generating FASTQ files that contain read sequences and their corresponding per-base quality scores [21]. Quality Control (QC) is an inseparable and iterative component of primary and secondary analysis, designed to evaluate data integrity and filter technical artifacts before biological interpretation.

Key Quality Metrics and Assessment Protocols

Rigorous QC employs both quantitative metrics and visual assessments. The cornerstone metric is the Phred Quality Score (Q-score), which is logarithmically linked to base-calling error probability (Q20 = 99% accuracy, Q30 = 99.9% accuracy). A Q-score ≥ 30 across the majority of bases is a standard benchmark for high-quality data [21].
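
The logarithmic relationship is Q = -10 · log10(P), where P is the probability that a base call is wrong. A minimal sketch of the conversion follows; the function names are illustrative, and the Phred+33 ASCII offset is assumed because it is the encoding used by current Illumina FASTQ files.

```python
import math

def phred_to_error_prob(q):
    """Convert a Phred quality score Q into a base-calling error probability."""
    return 10 ** (-q / 10)

def error_prob_to_phred(p):
    """Convert an error probability P into a Phred quality score."""
    return -10 * math.log10(p)

def decode_fastq_quality(qual_string, offset=33):
    """Decode a FASTQ quality string into integer Phred scores (Phred+33 assumed)."""
    return [ord(ch) - offset for ch in qual_string]

print(phred_to_error_prob(20))      # 0.01  -> 99% accuracy
print(phred_to_error_prob(30))      # 0.001 -> 99.9% accuracy
print(decode_fastq_quality("I5!"))  # [40, 20, 0]
```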

Table 1: Core Quality Control Metrics for NGS Data [21] [22]

| Metric | Description | Optimal Range / Target |

|---|---|---|

| Per-base Sequence Quality | Phred score distribution across all sequencing cycles. | Q ≥ 30 for majority of bases. |

| Per-sequence Quality | Average quality score for each read. | High mean score; few outliers. |

| Sequence Length Distribution | Distribution of read lengths after trimming. | Uniform, as expected from library prep. |

| Adapter Contamination | Presence of adapter sequences in reads. | Minimal to none after trimming. |

| GC Content | Distribution of guanine-cytosine content per read. | Matches reference organism's distribution. |

| Sequence Duplication Level | Proportion of PCR/optical duplicates. | Low percentage; context-dependent. |

| Overrepresented Sequences | Sequences appearing at high frequency. | Investigate sources (e.g., contaminants). |

A standard QC protocol involves using tools like FastQC for initial assessment and Trimmomatic or Cutadapt for read cleaning [23]. The workflow is: 1) Run FastQC on raw FASTQ files; 2) Trim adapter sequences and low-quality bases from read ends (e.g., using a sliding window trimming approach); 3) Remove reads falling below a minimum length threshold (e.g., < 36 bp); 4) Run FastQC again on the trimmed files to verify improvement [23] [21]. For advanced applications, Unique Molecular Identifiers (UMIs) are used during library preparation to enable accurate deduplication at the level of original molecules, correcting for PCR amplification bias [21].
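
Steps 2 and 3 of this workflow can be illustrated in a few lines. The sketch below is a simplified approximation of sliding-window trimming, not the exact algorithm of Trimmomatic or Cutadapt; the window size of 4 and mean-quality threshold of 20 are assumed example values, while the 36 bp minimum length follows the text.

```python
def sliding_window_trim(seq, quals, window=4, min_mean_q=20):
    """Cut the read at the start of the first window whose mean quality is too low."""
    if not seq:
        return seq, quals
    last_start = max(len(seq) - window, 0)
    for start in range(last_start + 1):
        win = quals[start:start + window]
        if sum(win) / len(win) < min_mean_q:
            return seq[:start], quals[:start]
    return seq, quals

def clean_reads(reads, min_len=36, window=4, min_mean_q=20):
    """Trim each (name, seq, quals) read and drop reads shorter than min_len."""
    kept = []
    for name, seq, quals in reads:
        tseq, tquals = sliding_window_trim(seq, quals, window, min_mean_q)
        if len(tseq) >= min_len:
            kept.append((name, tseq, tquals))
    return kept

# Hypothetical reads: one with a low-quality tail, one that is poor throughout.
reads = [
    ("good_read", "A" * 40 + "C" * 10, [35] * 40 + [5] * 10),
    ("bad_read", "G" * 50, [10] * 50),
]
for name, seq, _ in clean_reads(reads):
    print(name, len(seq))  # good_read 39
```

In practice the dedicated tools also handle adapter removal and paired-end synchronization, which this sketch deliberately omits.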

Visualizing the Quality Control Workflow

The following diagram illustrates the iterative and branching nature of a standard QC workflow, from raw data to cleaned reads ready for alignment.

Diagram: Iterative NGS Data Quality Control and Cleaning Workflow

Sequence Alignment to a Reference Genome

Alignment (or mapping) is the process of determining the genomic origin of each sequencing read by computationally matching it to a location within a reference genome. The accuracy of alignment directly influences the sensitivity and precision of all subsequent variant detection [19].

Alignment Algorithms and Tools

Alignment tools must efficiently handle millions of short reads against a gigabase-sized reference. Most aligners, such as the widely adopted Burrows-Wheeler Aligner (BWA) and Bowtie2, use a seed-and-extend strategy with sophisticated indexing (e.g., FM-index) for speed [23] [21]. The choice of reference genome is critical; for human studies, the current standard is GRCh38/hg38, though GRCh37/hg19 remains in widespread use [24] [21]. Consistency in the reference version across an entire study is essential.

The alignment protocol involves two main steps [23]:

- Indexing the reference genome (a one-time operation per reference build).

- Mapping reads (performed per sample).

The output is a Sequence Alignment/Map (SAM) file, a tab-delimited text file detailing each read's mapping position, alignment quality (MAPQ score), and CIGAR string representing matches, insertions, deletions, and splices [23]. SAMtools is then used to convert the SAM file to its compressed binary equivalent, the BAM file, sort it by genomic coordinate, and index it for rapid access [23].
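
The indexing, mapping, sorting, and BAM-indexing steps described above correspond to a short sequence of command lines. The sketch below only assembles those commands as strings so they can be reviewed or passed to a workflow manager; the file names, thread count, and helper function are assumptions for illustration, and the original article's own commands are not reproduced here.

```python
import shlex

def alignment_commands(ref_fa, fq1, fq2, sample, threads=4):
    """Build shell commands for BWA indexing/mapping and SAMtools sorting/indexing."""
    q = shlex.quote
    sam = f"{sample}.sam"
    bam = f"{sample}.sorted.bam"
    return [
        # One-time operation per reference genome.
        f"bwa index {q(ref_fa)}",
        # Per-sample mapping of paired-end reads; bwa mem writes SAM to stdout.
        f"bwa mem -t {threads} {q(ref_fa)} {q(fq1)} {q(fq2)} > {q(sam)}",
        # Coordinate-sort and write BAM (format inferred from the .bam suffix).
        f"samtools sort -@ {threads} -o {q(bam)} {q(sam)}",
        # Create the .bai index for rapid random access.
        f"samtools index {q(bam)}",
    ]

for cmd in alignment_commands("ref.fa", "s1_R1.fastq.gz", "s1_R2.fastq.gz", "s1"):
    print(cmd)
```

Downstream GATK tools generally expect read-group information, which `bwa mem` can attach with its `-R` option; that detail is left out of this sketch for brevity.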

Post-Alignment Processing and Visualization

The sorted BAM file undergoes further refinement before variant calling [24]:

- Duplicate Marking/Removal: Identifies and flags reads originating from the same PCR amplicon or optical duplicate using tools like Picard MarkDuplicates to prevent bias in variant allele frequency calculations.

- Local Realignment: Tools like the GATK HaplotypeCaller perform local realignment around indels to correct mapping artifacts.

- Base Quality Score Recalibration (BQSR): Uses known variant sites to empirically adjust base quality scores, correcting for systematic technical errors.

Alignment success is evaluated using metrics like mapping rate (percentage of reads mapped), depth of coverage (average reads covering a base), and coverage uniformity [21]. Visualization with genome browsers like the Integrative Genomics Viewer (IGV) is crucial for manual inspection of read pileups, splice junctions, and potential variant sites [24] [21].
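
These metrics are straightforward to derive once SAM flags and per-base depths are available. The following sketch assumes the flags and depths have already been extracted (for example from a BAM file and a depth table); the 20x threshold and the "fraction of bases at or above 0.2x the mean" uniformity proxy are illustrative choices rather than values from the text.

```python
def mapping_rate(flags):
    """Fraction of reads whose SAM FLAG does not have the 0x4 (unmapped) bit set."""
    flags = list(flags)
    if not flags:
        return 0.0
    mapped = sum(1 for f in flags if not f & 0x4)
    return mapped / len(flags)

def coverage_summary(depths, min_depth=20):
    """Mean depth, breadth at min_depth, and a simple uniformity proxy."""
    n = len(depths)
    mean_depth = sum(depths) / n
    return {
        "mean_depth": mean_depth,
        "frac_at_min_depth": sum(1 for d in depths if d >= min_depth) / n,
        # Assumed uniformity proxy: share of bases covered at >= 0.2x the mean.
        "frac_ge_0.2x_mean": sum(1 for d in depths if d >= 0.2 * mean_depth) / n,
    }

print(mapping_rate([0, 16, 4, 99]))        # 0.75
print(coverage_summary([30, 30, 0, 60]))
```

A production calculation would usually exclude secondary and supplementary alignments before computing the mapping rate.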

Table 2: Common Alignment and Post-Processing Tools

| Tool | Primary Function | Key Notes |

|---|---|---|

| BWA (MEM) | Short-read alignment. | Industry standard for speed/accuracy balance [23] [21]. |

| Bowtie2 | Short-read alignment. | Fast, especially for shorter reads [21]. |

| Minimap2 | Long-read (PacBio/Nanopore) alignment. | Standard for aligning long-read sequencing data. |

| SAMtools | Manipulate/view SAM/BAM files. | Essential utility for sorting, indexing, and filtering [23]. |

| Picard | Java-based tools for BAM processing. | Standard for duplicate marking and metric collection. |

| GATK | Broad toolkit for variant discovery. | Provides Best Practices pipelines for realignment, BQSR, and calling [24]. |

| IGV | Interactive visualization. | Critical for inspecting alignments and validating calls [24] [21]. |

Variant Calling: From Aligned Reads to Genetic Variants

Variant calling is the comparative analysis of aligned reads against a reference genome to identify sites of genetic difference. These variants include Single Nucleotide Polymorphisms (SNPs), small Insertions/Deletions (Indels), and larger Structural Variations (SVs) [24].

Methodologies: From Traditional Heuristics to AI

Traditional variant callers (e.g., GATK HaplotypeCaller, SAMtools mpileup) use statistical models and heuristic rules. For example, the HaplotypeCaller works by: 1) Identifying active regions; 2) Assembling reads into potential haplotypes; 3) Determining likelihoods of haplotypes given the read data; 4) Assigning sample genotypes [24]. The output is a Variant Call Format (VCF) file, which records the genomic position, reference/alternate alleles, quality scores, and sample genotype information for each variant [24] [23].
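
To make the VCF layout concrete, the sketch below parses one tab-delimited data line into a dictionary. It covers only the eight fixed columns plus optional FORMAT and sample columns, and the example record is invented for illustration; real pipelines typically rely on an established VCF library instead.

```python
def parse_vcf_line(line):
    """Parse a single VCF data line (not a header line) into a dictionary."""
    fields = line.rstrip("\n").split("\t")
    chrom, pos, vid, ref, alt, qual, flt, info = fields[:8]
    info_dict = {}
    for item in info.split(";"):
        if not item or item == ".":
            continue
        key, _, value = item.partition("=")
        info_dict[key] = value if value else True  # flag entries have no value
    record = {
        "chrom": chrom,
        "pos": int(pos),
        "id": vid,
        "ref": ref,
        "alts": alt.split(","),
        "qual": None if qual == "." else float(qual),
        "filter": flt,
        "info": info_dict,
        "samples": [],
    }
    if len(fields) > 9:
        keys = fields[8].split(":")
        record["samples"] = [dict(zip(keys, s.split(":"))) for s in fields[9:]]
    return record

# Hypothetical record for a heterozygous SNV.
example = "chr1\t12345\t.\tA\tG\t50.0\tPASS\tDP=30;QD=12.5\tGT:DP\t0/1:30"
print(parse_vcf_line(example))
```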

A paradigm shift is underway with the adoption of AI-based variant callers, which use deep learning models (typically Convolutional Neural Networks - CNNs) trained on vast datasets to distinguish true variants from sequencing artifacts [20] [3].

Table 3: Comparison of AI-Enhanced Variant Callers [3]

| Caller | Core Technology | Key Strength | Consideration |

|---|---|---|---|

| DeepVariant | CNN analyzing read pileup images. | Exceptional accuracy; reduces need for hard filtering. | High computational cost (GPU recommended). |

| DNAscope | Machine learning-enhanced algorithm. | High speed and accuracy; efficient CPU-based. | ML-based, not a deep learning model per se. |

| DeepTrio | CNN for family trio analysis. | Jointly calls families, improving de novo mutation detection. | Requires trio data. |

| Clair/Clair3 | CNN optimized for long-read data. | High performance for PacBio HiFi and Nanopore. | Actively developed for long-read tech. |

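A protocol for traditional germline variant calling with bcftools involves computing genotype likelihoods from the sorted BAM file with bcftools mpileup and then calling variants with bcftools call [23].

For AI-based calling with DeepVariant, the protocol shifts to using a pre-trained model, chosen to match the data type (e.g., WGS or WES), which is applied directly to the aligned reads.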
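
Both protocols reduce to short command lines. The sketch below assembles them as strings without running anything; the file names and helper functions are hypothetical, and `run_deepvariant` is normally invoked inside the official DeepVariant container, which is not shown here.

```python
import shlex

def bcftools_call_command(ref_fa, bam, out_vcf):
    """Pileup genotype likelihoods, then call variants with the multiallelic model."""
    q = shlex.quote
    return (
        f"bcftools mpileup -Ou -f {q(ref_fa)} {q(bam)} | "
        f"bcftools call -mv -Oz -o {q(out_vcf)}"
    )

def deepvariant_command(ref_fa, bam, out_vcf, model_type="WGS", shards=4):
    """Run DeepVariant with a pre-trained model selected by model_type."""
    q = shlex.quote
    return (
        f"run_deepvariant --model_type={model_type} --ref={q(ref_fa)} "
        f"--reads={q(bam)} --output_vcf={q(out_vcf)} --num_shards={shards}"
    )

print(bcftools_call_command("ref.fa", "s1.sorted.bam", "s1.bcftools.vcf.gz"))
print(deepvariant_command("ref.fa", "s1.sorted.bam", "s1.deepvariant.vcf.gz"))
```

In `bcftools call`, `-m` selects the multiallelic caller and `-v` restricts output to variant sites; `-Oz` writes compressed VCF.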

Variant Filtering, Annotation, and the Integrated Workflow

Raw variant calls contain false positives. Filtering applies thresholds on metrics like QUAL (Phred-scaled confidence that a variant exists at the site), DP (read depth), and QD (quality by depth) to refine the call set [24]. Variant annotation using tools like SnpEff or ANNOVAR adds biological context by predicting the effect on genes (e.g., missense, frameshift) and overlaying population frequency data from databases like dbSNP, gnomAD, and COSMIC [24] [19].
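
A hard filter of this kind is simply a set of threshold checks per record. The sketch below assumes each variant is a dictionary carrying `qual`, `dp`, and `qd` values; the thresholds and filter labels are illustrative defaults that should be tuned per assay, not recommendations from the cited sources.

```python
def hard_filter(record, min_qual=30.0, min_dp=10, min_qd=2.0):
    """Return 'PASS' or a semicolon-separated list of failed filter names."""
    reasons = []
    if record["qual"] < min_qual:
        reasons.append("LowQual")
    if record["dp"] < min_dp:
        reasons.append("LowDP")
    if record["qd"] < min_qd:
        reasons.append("LowQD")
    return "PASS" if not reasons else ";".join(reasons)

# Hypothetical records.
variants = [
    {"id": "v1", "qual": 250.0, "dp": 40, "qd": 6.2},
    {"id": "v2", "qual": 18.0, "dp": 35, "qd": 0.5},
    {"id": "v3", "qual": 60.0, "dp": 4, "qd": 15.0},
]
for v in variants:
    print(v["id"], hard_filter(v))
# v1 PASS
# v2 LowQual;LowQD
# v3 LowDP
```

Recording the failed filter names, instead of deleting records, keeps the decision auditable when thresholds are later revised.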

The complete logical relationship from raw data to annotated variants is summarized in the following sequential workflow diagram.

Diagram: Core NGS Variant Calling Pipeline with AI Integration Points

Constructing and executing a reliable NGS analysis pipeline requires both software tools and curated data resources. The following toolkit is essential for researchers in variant calling.

Table 4: Essential Research Reagent Solutions for NGS Variant Analysis

| Category | Item / Resource | Function / Purpose | Example / Source |

|---|---|---|---|

| Wet-Lab Preparation | Library Prep Kits | Fragment DNA/RNA, add adapters & indices for sequencing. | Illumina TruSeq, NEBNext. |

| Wet-Lab Preparation | Unique Molecular Identifiers (UMIs) | Tag individual molecules pre-PCR to correct for duplication bias. | Integrated UMI adapters. |

| Wet-Lab Preparation | Positive Control DNA | Assess sequencing run performance and error rates. | PhiX Control v3 (Illumina). |

| Computational Tools | QC & Trimming Software | Assess raw data quality and remove artifacts. | FastQC [22], Trimmomatic [23]. |

| Computational Tools | Alignment Software | Map sequencing reads to a reference genome. | BWA [23] [21], Bowtie2 [21]. |

| Computational Tools | Variant Callers | Identify genetic variants from aligned reads. | GATK [24], DeepVariant [3], DNAscope [3]. |

| Computational Tools | VCF Manipulation | Filter, compare, and manipulate variant files. | BCFtools [23], SnpSift [24], VCFtools. |

| Computational Tools | Visualization Software | Manually inspect alignments and variant calls. | IGV [24] [21], Tablet. |

| Reference Data | Reference Genomes | Standardized genomic sequence for alignment. | GRCh38 from Genome Reference Consortium [24]. |

| Reference Data | Variant Databases | Annotate variants with known frequency/pathogenicity. | dbSNP [19], gnomAD, ClinVar [24], COSMIC [19]. |

| Reference Data | Gene Annotation | Define genomic coordinates of genes and transcripts. | GENCODE, RefSeq. |

| Infrastructure | High-Performance Compute | Process large NGS datasets in a reasonable time. | Local cluster (HPC) or cloud (AWS, GCP, Azure). |

| Infrastructure | Workflow Managers | Automate, reproduce, and scale analysis pipelines. | Nextflow, Snakemake, WDL/Cromwell. |

The triad of Quality Control, Alignment, and Variant Calling forms the foundational, non-negotiable core of any NGS analysis pipeline for variant research. As sequencing technologies evolve toward long-read and single-cell applications, and data volumes grow, these steps must adapt [2]. The integration of Artificial Intelligence is the most transformative current trend, with AI models demonstrating superior accuracy in basecalling, alignment optimization, and particularly in variant calling, where they reduce false positives in challenging genomic regions [20] [3]. Future pipelines will increasingly be AI-native, leveraging federated learning for privacy-preserving analysis on distributed datasets and explainable AI (XAI) to build clinical trust [20]. For researchers and drug development professionals, mastery of both the established principles outlined here and the emerging AI-enhanced methodologies is critical to generating the high-fidelity genomic insights that underpin modern precision medicine [19].

The comprehensive identification and interpretation of genomic variation form the cornerstone of advanced genetic research, clinical diagnostics, and therapeutic development. Within the framework of Next-Generation Sequencing (NGS) data analysis pipelines, variant calling is the critical process that translates raw sequencing data into a catalog of DNA sequence differences relative to a reference genome [4]. These variants are traditionally classified by size and complexity into several major types: Single Nucleotide Variants (SNVs), short Insertions and Deletions (Indels), Copy Number Variants (CNVs), and broader Structural Variations (SVs) [25]. Each category presents unique challenges for detection and requires specialized computational approaches, especially when comparing the capabilities of prevalent short-read sequencing with emerging long-read technologies [25]. The accurate delineation of this full spectrum of variation is essential for unraveling the genetic basis of diseases, from Mendelian disorders to complex neurodevelopmental conditions like autism spectrum disorder (ASD), where a significant diagnostic gap remains despite high heritability [26]. This article provides detailed application notes and protocols for detecting these variant types, framed within the context of building robust NGS data analysis pipelines for research and clinical applications.

Defining the Variant Landscape

Genomic variants are systematically categorized based on their molecular characteristics and size, which directly influence the choice of sequencing technology and computational tool required for their discovery.

Table 1: Classification and Characteristics of Major Variant Types

| Variant Type | Size Range | Description | Common Subtypes | Detection Challenge |

|---|---|---|---|---|

| Single Nucleotide Variant (SNV) | 1 bp | A substitution of one single nucleotide for another. | Transition (A↔G, C↔T), Transversion (all other substitutions). | Distinguishing true variants from sequencing errors; genotyping in low-complexity regions. |

| Insertion/Deletion (Indel) | < 50 bp | The insertion or deletion of a small number of nucleotides. | Insertion, Deletion. | Accurate alignment of reads around the variant; size bias in short-read data (insertions >10 bp are poorly detected) [25]. |

| Copy Number Variant (CNV) | > 50 bp | A large-scale deletion or duplication of a genomic segment, altering the copy number. | Deletion (CN loss), Duplication (CN gain). | Differentiating from technical read-depth fluctuations; precise breakpoint resolution. |

| Structural Variation (SV) | ≥ 50 bp | Genomic rearrangements that may or may not alter copy number. | Deletion, Duplication, Insertion, Inversion, Translocation, Complex Rearrangement [26]. | Detection in repetitive genomic regions (e.g., segmental duplications) is difficult with short reads [25]. |

CNVs are often considered a subset of SVs focused specifically on copy-number changes. The 50 bp threshold distinguishing indels from SVs is conventional, but the practical detection limit for short-read-based indel callers is often lower, around 10-15% of the read length [25]. Long-read sequencing (e.g., PacBio HiFi, Oxford Nanopore) is particularly powerful for resolving SVs and larger indels because its reads span complex and repetitive regions [25] [26].

Variant Detection within NGS Analysis Pipelines

A generic, high-level workflow for germline variant calling from whole-genome sequencing (WGS) data involves sequential steps from raw data to annotated variants. This pipeline must be adapted based on the sequencing technology (short- vs. long-read) and the variant type of interest.

High-Level NGS Variant Calling Pipeline Workflow

Pipeline Architecture Considerations: Modern pipelines must integrate both reference-based and emerging reference-free or alignment-free (AF) approaches. Reference-based methods align reads to a linear reference genome (e.g., GRCh38) and look for discrepancies [4]. In contrast, reference-free methods, such as those based on De Bruijn graphs, construct sequence relationships directly from reads to identify polymorphisms like isolated SNPs without alignment, which can be advantageous for non-model organisms or highly polymorphic regions [4]. Scalability is a critical concern, leading to the development of distributed pipelines using frameworks like Apache Spark to parallelize tasks such as graph construction and k-mer counting across compute clusters, making the analysis of large cohorts feasible [4].
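
The reference-free idea can be illustrated with a toy De Bruijn graph: k-mers are counted from reads, linked through their (k-1)-mer prefixes and suffixes, and nodes with more than one successor mark candidate polymorphisms. This is a deliberately small sketch that ignores reverse complements, sequencing errors beyond a count threshold, and bubble closure; the reads and function names are invented for illustration.

```python
from collections import Counter, defaultdict

def count_kmers(reads, k):
    """Count every k-mer occurring in the reads."""
    counts = Counter()
    for read in reads:
        for i in range(len(read) - k + 1):
            counts[read[i:i + k]] += 1
    return counts

def build_de_bruijn(kmer_counts, min_count=1):
    """Link each k-mer's (k-1)-mer prefix to its (k-1)-mer suffix."""
    graph = defaultdict(set)
    for kmer, count in kmer_counts.items():
        if count >= min_count:  # drop rare k-mers that are likely errors
            graph[kmer[:-1]].add(kmer[1:])
    return graph

def branching_nodes(graph):
    """Nodes with more than one successor: where allele paths diverge."""
    return {node: succ for node, succ in graph.items() if len(succ) > 1}

# Two hypothetical reads that differ by a single C/G substitution.
reads = ["AAACGTTT", "AAAGGTTT"]
graph = build_de_bruijn(count_kmers(reads, k=4))
print(branching_nodes(graph))  # {'AAA': {'AAC', 'AAG'}} (set order may vary)
```

Because k-mer counting is independent per read, this step parallelizes naturally, which is the property the distributed frameworks mentioned above exploit.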

Detailed Experimental Protocols

Protocol: Germline SNV and Indel Calling from Short-Read WGS

This protocol is validated for human whole-genome sequencing data from Illumina or DNBSEQ platforms (coverage >30x) aligned to GRCh37/38 [25] [27].

Materials:

- Input: Paired-end FASTQ files.

- Software: FastQC, Trimmomatic, BWA-MEM, SAMtools, GATK (v4.0+), DeepVariant.

- Reference Files: Reference genome (FASTA), known variant sites (dbSNP, gnomAD).

Procedure:

- Quality Control: Assess raw reads with FastQC. Trim adapters and low-quality bases using Trimmomatic.

- Alignment: Align reads to the reference genome using BWA-MEM. Convert SAM to sorted BAM with SAMtools.

- Post-Alignment Processing: Mark duplicate reads with GATK MarkDuplicates. Perform base quality score recalibration (BQSR) using GATK BaseRecalibrator and known variant sites.

- Variant Calling: Call SNVs and small indels using a primary caller (e.g., GATK HaplotypeCaller in GVCF mode). For enhanced accuracy, especially in difficult regions, consider a deep learning-based caller like DeepVariant [25].

- Joint Genotyping & Filtering: If processing a cohort, perform joint genotyping on all samples' GVCFs using GATK GenotypeGVCFs. Apply variant quality score recalibration (VQSR) or hard filters (e.g., GATK VariantFiltration) to remove low-confidence calls.

- Annotation: Annotate the final VCF file with functional consequences using SnpEff or Ensembl VEP.
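
The post-alignment and calling steps of this procedure map onto a sequence of GATK4 commands. The sketch below builds them as strings for a single sample; file names are hypothetical, `ApplyBQSR` is included as the companion step that applies the table produced by `BaseRecalibrator`, and cohort-level joint genotyping would additionally require combining per-sample GVCFs first, which is not shown.

```python
import shlex

def gatk_germline_commands(ref_fa, bam, known_sites, sample):
    """Build GATK4 commands from duplicate marking through genotyping."""
    q = shlex.quote
    dedup = f"{sample}.dedup.bam"
    metrics = f"{sample}.dup_metrics.txt"
    table = f"{sample}.recal.table"
    recal = f"{sample}.recal.bam"
    gvcf = f"{sample}.g.vcf.gz"
    vcf = f"{sample}.vcf.gz"
    return [
        f"gatk MarkDuplicates -I {q(bam)} -O {q(dedup)} -M {q(metrics)}",
        f"gatk BaseRecalibrator -R {q(ref_fa)} -I {q(dedup)} "
        f"--known-sites {q(known_sites)} -O {q(table)}",
        f"gatk ApplyBQSR -R {q(ref_fa)} -I {q(dedup)} "
        f"--bqsr-recal-file {q(table)} -O {q(recal)}",
        # -ERC GVCF emits per-sample reference confidence records.
        f"gatk HaplotypeCaller -R {q(ref_fa)} -I {q(recal)} -O {q(gvcf)} -ERC GVCF",
        f"gatk GenotypeGVCFs -R {q(ref_fa)} -V {q(gvcf)} -O {q(vcf)}",
    ]

for cmd in gatk_germline_commands("ref.fa", "s1.sorted.bam", "dbsnp.vcf.gz", "s1"):
    print(cmd)
```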

Protocol: Structural Variant Detection from Long-Read WGS

This protocol is designed for detecting SVs (≥50 bp) using Oxford Nanopore or PacBio HiFi data, as applied in studies of neurodevelopmental disorders [26].

Materials:

- Input: High molecular weight (HMW) genomic DNA (>20 kb fragment size), Oxford Nanopore SQK-LSK114 ligation kit, R10.4.1 flow cells [26].

- Software: Guppy (basecalling), Minimap2, Samtools, cuteSV, Sniffles2, SVIM, AnnotSV.

Procedure:

- Library Preparation & Sequencing: Prepare an amplification-free library from 1 µg of HMW gDNA using the ligation sequencing kit per manufacturer instructions [26]. Load onto a MinION Mk1C sequencer for ~48 hours to achieve a target coverage of >7x per run [26].

- Basecalling & Alignment: Perform high-accuracy basecalling on raw FAST5/POD5 files using Guppy (super-accurate model). Align the resulting FASTQ files to the GRCh38 reference genome using Minimap2 with the -ax map-ont preset.

- SV Calling & Consolidation: Call SVs using multiple callers for robustness (e.g., cuteSV, Sniffles2, SVIM) [26]. To generate a high-confidence set, select SVs detected by at least 3 out of 5 callers, requiring breakpoint proximity (≤200 bp for insertions) or reciprocal overlap (≥50% for others) [25] [26].

- Annotation & Prioritization: Annotate the high-confidence SV set with AnnotSV to identify overlaps with genes, regulatory elements, and known pathogenic regions. Prioritize rare, genic, or copy-number altering SVs for further validation.
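
The consolidation rule in the SV calling step (support from at least 3 callers, with ≤200 bp breakpoint proximity for insertions and ≥50% reciprocal overlap for other types) can be sketched as a greedy clustering. This is a simplified illustration and not the cited studies' implementation: each call is compared only with the first call of an existing cluster, and the input dictionaries and caller labels are hypothetical.

```python
def reciprocal_overlap(a_start, a_end, b_start, b_end):
    """Smaller of the two overlap fractions between intervals a and b."""
    inter = min(a_end, b_end) - max(a_start, b_start)
    len_a, len_b = a_end - a_start, b_end - b_start
    if inter <= 0 or len_a <= 0 or len_b <= 0:
        return 0.0
    return min(inter / len_a, inter / len_b)

def same_event(a, b, max_ins_dist=200, min_ro=0.5):
    """Decide whether two calls describe the same SV."""
    if a["chrom"] != b["chrom"] or a["svtype"] != b["svtype"]:
        return False
    if a["svtype"] == "INS":
        return abs(a["start"] - b["start"]) <= max_ins_dist
    return reciprocal_overlap(a["start"], a["end"], b["start"], b["end"]) >= min_ro

def consensus_svs(calls, min_callers=3):
    """Greedily cluster calls and keep clusters supported by enough callers."""
    clusters = []
    for call in sorted(calls, key=lambda c: (c["chrom"], c["start"])):
        for cluster in clusters:
            if same_event(cluster[0], call):
                cluster.append(call)
                break
        else:
            clusters.append([call])
    return [cl for cl in clusters if len({c["caller"] for c in cl}) >= min_callers]

calls = [
    {"caller": "cuteSV", "chrom": "chr1", "svtype": "DEL", "start": 1000, "end": 2000},
    {"caller": "Sniffles2", "chrom": "chr1", "svtype": "DEL", "start": 1050, "end": 2050},
    {"caller": "SVIM", "chrom": "chr1", "svtype": "DEL", "start": 990, "end": 1990},
    {"caller": "cuteSV", "chrom": "chr2", "svtype": "INS", "start": 5000, "end": 5000},
    {"caller": "SVIM", "chrom": "chr2", "svtype": "INS", "start": 5100, "end": 5100},
]
for cluster in consensus_svs(calls):
    print(cluster[0]["chrom"], cluster[0]["svtype"], len(cluster))  # chr1 DEL 3
```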

Performance Comparison of Technologies and Tools

The choice of sequencing platform and analytical tool significantly impacts the sensitivity and precision of variant detection, particularly for indels and SVs.

Table 2: Performance of Short-Read vs. Long-Read Sequencing for Variant Detection [25]

| Variant Type | Key Metric | Short-Read Performance | Long-Read Performance | Contextual Note |

|---|---|---|---|---|

| SNV | Recall & Precision | High | High | Comparable performance in non-repetitive regions [25]. |

| Indel (Deletion) | Recall & Precision | High | High | Comparable performance [25]. |

| Indel (Insertion >10bp) | Recall | Low (Poorly detected) | High | Short-read algorithms struggle with insertions >10 bp [25]. |

| SV (All) | Recall in Repetitive Regions | Significantly Lower | Higher | Short-read recall is low for small-to-intermediate SVs in repeats [25]. |

| SV (All) | Recall/Precision in Non-Repetitive | Moderate | Moderate | Similar performance between technologies in accessible regions [25]. |

Table 3: Detection Metrics for SVs Across Sequencing Platforms (NA12878 Sample) [28]

| SV Type | Platform | Average Number Detected | Average Precision | Average Sensitivity |

|---|---|---|---|---|

| Deletion (DEL) | Illumina | 2,676 | 53.06% | 9.81% |

| Deletion (DEL) | DNBSEQ | 2,838 | 62.19% | 15.67% |

| Insertion (INS) | Illumina | 737 | 44.01% | 2.80% |

| Insertion (INS) | DNBSEQ | 1,117 | 43.98% | 3.17% |

| Inversion (INV) | Illumina | 239 | 26.79% | 11.06% |

| Inversion (INV) | DNBSEQ | 422 | 25.22% | 11.58% |

The data show broadly consistent SV detection between the DNBSEQ and Illumina platforms, with DNBSEQ showing higher sensitivity for deletions in this comparison (15.67% vs. 9.81%) [28]. Overall, sensitivity for insertions (INS) and inversions (INV) remains low with short-read technologies, underscoring a key limitation of these platforms.

The algorithmic approach of the variant caller is paramount. SV detection tools for short-read data rely on indirect signals like read depth (RD), split reads (SR), read pairs (RP), or a combination (CA) [28]. Each has strengths and weaknesses, leading to the recommendation of using multiple callers and integrating their results.

Short-Read SV Detection Algorithms and Their Targets

The Scientist's Toolkit: Essential Reagents and Software

Table 4: Key Research Reagent Solutions for Variant Discovery

| Item Name | Category | Function in Workflow | Example/Supplier |

|---|---|---|---|

| High Molecular Weight gDNA Kit | Sample Prep | Extracts long, intact genomic DNA essential for long-read sequencing and reliable SV detection. | Qiagen Genomic-tip, Nanobind CBB. |

| PCR-Free Library Prep Kit | Library Prep | Prevents amplification bias, improving uniform coverage and accurate detection of SNVs, indels, and CNVs [27]. | Illumina DNA PCR-Free Prep, Tagmentation [27]. |

| Ligation Sequencing Kit | Library Prep (Long-Read) | Prepares amplification-free libraries for Oxford Nanopore sequencing, preserving native DNA for long reads. | Oxford Nanopore SQK-LSK114 [26]. |

| BWA-MEM2 | Alignment Software | Aligns short sequencing reads to a reference genome quickly and accurately. | Open-source aligner. |

| Minimap2 | Alignment Software | Aligns long, error-prone reads (ONT/PacBio) to a reference genome. | Open-source aligner [25]. |

| GATK HaplotypeCaller | Variant Caller | The industry standard for calling germline SNVs and indels from short-read data. | Broad Institute. |

| DeepVariant | Variant Caller | Uses a deep learning model to call SNVs and indels from aligned reads, often outperforming traditional methods [25]. | Google Health. |

| cuteSV / Sniffles2 | Variant Caller | Specialized tools for sensitive detection of SVs from long-read sequencing data [26]. | Open-source. |

| AnnotSV | Annotation Tool | Comprehensively annotates structural variants with gene, regulatory, and disease association information. | Open-source [26]. |

A comprehensive understanding of SNVs, Indels, CNVs, and SVs is fundamental to exploiting NGS data fully. As evidenced, no single technology or tool captures the complete variome. Short-read sequencing excels in cost-effective, accurate SNV and small indel detection, while long-read sequencing is indispensable for resolving complex SVs and large insertions, particularly in repetitive regions [25] [26]. The future of variant calling pipelines lies in integrative approaches: combining short- and long-read data (hybrid sequencing), leveraging ensemble calling methods across multiple algorithms, and incorporating population-scale resources and pangenome graphs to reduce reference bias [4]. For clinical translation, as demonstrated by lab-developed procedures (LDPs) for population screening, rigorous validation of wet-lab and computational components is essential to achieve the high sensitivity and specificity required for reporting actionable findings in genes associated with hereditary disease and pharmacogenomics [27]. By strategically selecting and combining the protocols and tools outlined here, researchers can construct robust analysis pipelines to unlock the full spectrum of genomic variation.

Reference Genomes and Their Impact on Variant Discovery

Within the framework of next-generation sequencing (NGS) data analysis pipelines for variant calling research, the choice of reference genome is a foundational determinant of accuracy and comprehensiveness. A reference genome serves as the standard coordinate system against which sample reads are aligned to identify genetic differences. The evolution from single, linear references to more sophisticated graph-based and population-aware genomes directly addresses historical limitations, enabling more precise variant discovery in complex genomic regions [29]. This progression is critical for applications in clinical genomics, drug target discovery, and personalized medicine, where missing or mis-calling a variant can alter biological interpretation and downstream clinical decisions [2] [30].

The central challenge in variant discovery is distinguishing true biological variants from sequencing artifacts and alignment errors. This challenge is most acute in difficult-to-map regions such as segmental duplications, low-complexity repeats, and highly polymorphic loci like the Major Histocompatibility Complex (MHC) [31] [29]. Traditional linear references, representing a mosaic of haplotypes from a few individuals, provide a poor representation of global genetic diversity. Consequently, reads from an individual that diverge from the reference in these complex regions may map poorly or incorrectly, leading to false negatives (missing real variants) or false positives (artifactual calls) [31]. Advancements in reference genomes are therefore not merely incremental improvements but essential refinements that enhance the fidelity of the entire NGS analysis pipeline.

Evolution and Types of Reference Genomes

The landscape of human reference genomes has progressed significantly, moving beyond a single canonical sequence to incorporate population diversity and alternate genomic pathways. This evolution is summarized in the diagram below.

Linear Reference Genomes and Their Limitations

The widely used GRCh37/hg19 and GRCh38/hg38 assemblies from the Genome Reference Consortium are linear, monolithic sequences [29]. GRCh38 introduced significant improvements, including corrected misassemblies and expanded coverage of complex regions. A key feature of the official GRCh38 assembly is the inclusion of alternative (ALT) contigs—long, alternative haplotype sequences for approximately 60 genomic loci, providing alternate representations for highly variable regions [29]. However, a major limitation is that these ALT contigs are assembled from a very small number of individuals and are not fully integrated into the primary chromosome sequences, complicating their use during read alignment.

To mitigate mapping ambiguity, enhanced versions of the linear reference incorporate decoy sequences (often derived from alternative haplotypes and common microbial contaminants) that "catch" reads that would otherwise map ambiguously to multiple primary locations. Furthermore, the approach to handling native ALT contigs has evolved. The initial "ALT-aware" method used complex liftover alignments but could create dense clusters of mismapped reads [29]. The newer, recommended ALT-masking strategy strategically masks ALT contig segments that are highly similar to the primary assembly, preventing them from competitively stealing alignments. Divergent segments remain unmasked, functioning as decoys. This approach simplifies the analysis and improves variant calling accuracy over the liftover-based method [29].

Graph-based references represent a paradigm shift by explicitly incorporating known genetic variation (e.g., from the 1000 Genomes Project) directly into the reference structure [31] [29]. Instead of one linear path, the reference is a graph with nodes (sequence blocks) and edges (connections). Common haplotypes form alternate paths through the graph. During alignment, a read that differs from the primary path but matches a known alternate haplotype path can map with higher confidence and quality. This is particularly powerful for resolving reads in difficult, polymorphic regions where a linear reference provides a poor match [29]. Tools like Illumina's DRAGEN employ such graph genomes, which have been shown to improve accuracy in benchmark challenges [29].
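
A toy example helps show why alternate paths improve mapping. The sketch below builds a four-node sequence graph with one SNP "bubble", spells out the haplotype for every path, and reports the minimum number of mismatches for a read against each path. It is purely illustrative: it assumes a small acyclic graph and exhaustively enumerates paths, which production graph aligners such as those in DRAGEN do not do.

```python
# Hypothetical graph: n1 -> (n2 | n3) -> n4, where n2/n3 are the two SNP alleles.
nodes = {"n1": "ACGT", "n2": "A", "n3": "G", "n4": "TTCA"}
edges = {"n1": ["n2", "n3"], "n2": ["n4"], "n3": ["n4"], "n4": []}

def enumerate_paths(edges, start, end):
    """List every path from start to end (assumes an acyclic graph)."""
    stack, paths = [[start]], []
    while stack:
        path = stack.pop()
        if path[-1] == end:
            paths.append(path)
            continue
        for nxt in edges.get(path[-1], []):
            stack.append(path + [nxt])
    return paths

def best_mismatches(read, haplotype):
    """Fewest mismatches of the read over all ungapped placements."""
    best = None
    for i in range(len(haplotype) - len(read) + 1):
        window = haplotype[i:i + len(read)]
        mismatches = sum(1 for a, b in zip(read, window) if a != b)
        if best is None or mismatches < best:
            best = mismatches
    return best

def map_read(read, nodes, edges, start, end):
    """Score the read against the haplotype spelled by each path."""
    scores = {}
    for path in enumerate_paths(edges, start, end):
        haplotype = "".join(nodes[n] for n in path)
        scores[tuple(path)] = best_mismatches(read, haplotype)
    return scores

# A read carrying the alternate (G) allele matches the n3 path perfectly.
print(map_read("GTGTT", nodes, edges, "n1", "n4"))
```

Against a linear reference containing only the n2 allele, the same read would carry one mismatch; with the graph it aligns exactly to the alternate path.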

The Emerging Pangenome

The most comprehensive evolution is the concept of a human pangenome, which aims to represent the full spectrum of human genetic diversity by assembling complete genomes from hundreds of diverse individuals [31]. This collective reference moves beyond a single coordinate system to a truly population-aware framework, promising to dramatically reduce reference bias and improve variant discovery equity across all ancestries.

Table 1: Comparison of Major Human Reference Genome Types

| Reference Type | Key Characteristics | Primary Advantage | Key Limitation | Example/Version |
|---|---|---|---|---|
| Canonical Linear | Single, monolithic sequence for each chromosome. | Simplicity; standard for annotation and reporting. | High reference bias; poor representation of diversity. | GRCh37, GRCh38 primary assembly [29]. |
| Enhanced Linear (with ALT/Decoy) | Primary assembly supplemented with alternative contigs and decoy sequences. | Reduces ambiguous mapping for reads similar to paralogous regions. | ALT contigs are discrete, unphased, and from limited haplotypes [29]. | GRCh38 full assembly (with ALT), hg38 with decoys. |
| ALT-Masked | Enhanced linear reference where problematic segments of ALT contigs are masked. | Prevents mapping artifacts from incorrect ALT liftover, improving accuracy [29]. | Still based on a limited set of alternate haplotypes. | DRAGEN hg38-alt-masked reference [29]. |
| Graph-Based | Encodes population haplotypes as alternate paths within a graph data structure. | Dramatically improves mapping accuracy and variant calling in polymorphic and difficult regions [31] [29]. | Computational complexity; larger reference size. | DRAGEN hg38 graph reference [29]. |
| Pangenome | Collection of multiple, complete genome assemblies from diverse individuals. | Minimizes reference bias; represents global genetic diversity. | In early stages of development and adoption; complex to use. | Human Pangenome Reference Consortium assemblies [31]. |

Quantitative Impact on Variant Calling Accuracy

The choice of reference genome has a measurable, direct impact on key performance metrics in variant calling: precision (the fraction of calls that are real) and recall (the fraction of real variants that are called).
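As a small worked example of these two definitions, the sketch below computes precision and recall from true-positive, false-positive, and false-negative counts. The counts in the usage line are hypothetical and are not taken from any benchmark cited here.

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN).

    Returns 0.0 for a metric whose denominator is zero, which is a
    convention chosen for this sketch rather than a standard.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical counts: 990 correct calls, 10 spurious calls, 30 missed variants.
precision, recall = precision_recall(tp=990, fp=10, fn=30)
print(f"precision={precision:.4f} recall={recall:.4f}")
```

With these counts precision is 990/1000 and recall is 990/1020, which shows how a pipeline can look strong on one metric while still missing real variants.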

Impact on Small Variant Calling

Benchmarking using gold-standard datasets like those from the Genome in a Bottle (GIAB) consortium quantifies this impact. For small variants (SNVs and indels), using an ALT-masked reference reduces false positives and false negatives compared to older ALT-aware methods. Furthermore, transitioning from a standard linear reference to a graph-based reference yields significant gains. For example, in the GIAB benchmark, using a DRAGEN graph reference showed improved accuracy for both SNPs and indels compared to its non-graph counterpart [29]. A 2025 benchmarking study of whole-exome sequencing software found that the highest-performing pipeline (DRAGEN Enrichment) achieved precision and recall scores >99% for SNVs and >96% for indels against GIAB truth sets, a benchmark that assumes the use of an optimized reference [32].

Impact on Structural Variant (SV) Calling

The effect is even more pronounced for structural variants (SVs), which are often rooted in complex, repetitive, or duplicated sequences. Studies demonstrate that leveraging a graph-based multigenome reference significantly improves SV calling in complex genomic regions compared to the standard linear GRCh38 [31]. This is because graph references provide the necessary context to correctly place reads spanning breakpoints or within segmental duplications. The performance gap between short-read (srWGS) and long-read sequencing (lrWGS) for SV detection also narrows with better references, as improved mapping in difficult regions boosts the sensitivity of srWGS [31].

Table 2: Performance Metrics of Leading Variant Callers (2025 Benchmark)

| Variant Calling Software / Pipeline | SNV Precision (%) | SNV Recall (%) | Indel Precision (%) | Indel Recall (%) | Average Runtime (WES Sample) | Reference Genome Used |
|---|---|---|---|---|---|---|
| Illumina DRAGEN Enrichment | >99 | >99 | >96 | >96 | 29-36 minutes [32] | GRCh38 (graph-based assumed) |
| CLC Genomics Workbench | >98 | >98 | ~94 | ~94 | 6-25 minutes [32] | GRCh38 |
| Varsome Clinical | >98 | >98 | ~93 | ~94 | Not Specified | GRCh38 |
| Partek Flow (GATK) | >98 | >98 | ~92 | ~92 | 3.6-29.7 hours [32] | GRCh38 |
| GATK Best Practices (BWA + GATK) | High (Literature) | High (Literature) | High (Literature) | High (Literature) | Hours (Cluster-dependent) | GRCh38 (typically) |

Experimental Protocols for Benchmarking Reference Genome Performance

To empirically evaluate the impact of different reference genomes on a variant calling pipeline, researchers should conduct systematic benchmarking. The following protocol outlines a robust methodology based on current best practices [31] [32].

Protocol: Benchmarking Reference Genome Impact on SV Calling

Objective: To assess the precision and recall of structural variant (deletion) calling using short-read data aligned to different versions of the GRCh38 reference genome.

Materials:

- Benchmark Sample: HG002 (Ashkenazim son) sequencing data (Illumina WGS, 25-30x coverage). Download from GIAB FTP site [31].

- Truth Set: GIAB HG002 Tier 1 deletion callset (v0.6, lifted to GRCh38) [31].

- Reference Genomes:

- Software: DRAGEN Bio-IT Platform (v4.2 or higher) or open-source aligner (minimap2, DRAGMAP) + SV caller (Manta, DRAGEN SV caller). BEDTools for region analysis.

Procedure:

- Data Preparation: Subsample HG002 FASTQ files to a uniform coverage (e.g., 30x) using `seqtk`.

- Alignment & Calling (Parallel Runs): For each reference genome (Ref1-4):

  a. Align reads using the `dragen` command with the `--ht-reference` pointing to the reference hash table, or use `minimap2 -ax sr`.

  b. Perform SV calling focused on deletions. In DRAGEN, use the SV Calling Workflow. With Manta, configure and run `runMantaWorkflow.py`.

  c. Filter output VCF to `PASS` variants and variant type `DEL`.

- Benchmarking: Compare each resulting VCF against the GIAB Tier 1 truth set using `hap.py` (vcfeval) or `truvari`.

  a. Use the high-confidence region BED file from GIAB to restrict evaluation.

  b. Calculate precision = TP/(TP+FP) and recall = TP/(TP+FN).

- Region-Specific Analysis: Stratify performance in Low-Complexity Regions (LCRs). Use a BED file defining LCRs to intersect the variant calls and truth set, then recalculate metrics for variants inside and outside these regions [31].
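The filtering and region-stratification steps of this protocol can be sketched in plain Python as follows. This is a simplified illustration, not a replacement for `truvari` or BEDTools: the 50% reciprocal-overlap matching rule, the function names, and the example records are assumptions, and the sketch ignores the 1-based (VCF) versus 0-based (BED) coordinate difference.

```python
def parse_pass_deletions(vcf_text):
    """Return (chrom, start, end) for records with FILTER=PASS and SVTYPE=DEL."""
    deletions = []
    for line in vcf_text.splitlines():
        if not line or line.startswith("#"):
            continue
        fields = line.split("\t")
        chrom, pos, filt, info = fields[0], int(fields[1]), fields[6], fields[7]
        tags = dict(kv.split("=", 1) for kv in info.split(";") if "=" in kv)
        if filt == "PASS" and tags.get("SVTYPE") == "DEL" and "END" in tags:
            deletions.append((chrom, pos, int(tags["END"])))
    return deletions


def overlaps(a, b):
    """True if two (chrom, start, end) intervals share at least one position."""
    return a[0] == b[0] and a[1] <= b[2] and b[1] <= a[2]


def reciprocal_overlap(a, b):
    """Smaller of the two overlap fractions; 0.0 if the intervals are disjoint."""
    if a[0] != b[0]:
        return 0.0
    shared = min(a[2], b[2]) - max(a[1], b[1])
    if shared <= 0:
        return 0.0
    return min(shared / (a[2] - a[1]), shared / (b[2] - b[1]))


def count_matches(calls, truth, min_ro=0.5):
    """Count TP/FP/FN using an assumed reciprocal-overlap threshold."""
    tp = sum(any(reciprocal_overlap(c, t) >= min_ro for t in truth) for c in calls)
    fn = sum(not any(reciprocal_overlap(t, c) >= min_ro for c in calls) for t in truth)
    return {"TP": tp, "FP": len(calls) - tp, "FN": fn}


def stratify(calls, truth, regions):
    """Recompute counts separately for variants inside and outside the regions."""
    def inside(v):
        return any(overlaps(v, r) for r in regions)
    return {
        "inside": count_matches([c for c in calls if inside(c)],
                                [t for t in truth if inside(t)]),
        "outside": count_matches([c for c in calls if not inside(c)],
                                 [t for t in truth if not inside(t)]),
    }


# Hypothetical example data (not real HG002 calls).
EXAMPLE_VCF = "\n".join([
    "##fileformat=VCFv4.2",
    "\t".join(["#CHROM", "POS", "ID", "REF", "ALT", "QUAL", "FILTER", "INFO"]),
    "\t".join(["chr1", "1000", ".", "N", "<DEL>", ".", "PASS", "SVTYPE=DEL;END=1500"]),
    "\t".join(["chr1", "5000", ".", "N", "<DEL>", ".", "LowQual", "SVTYPE=DEL;END=5600"]),
    "\t".join(["chr1", "9000", ".", "N", "<DUP>", ".", "PASS", "SVTYPE=DUP;END=9400"]),
    "\t".join(["chr2", "2000", ".", "N", "<DEL>", ".", "PASS", "SVTYPE=DEL;END=2300"]),
])
EXAMPLE_TRUTH = [("chr1", 1010, 1490), ("chr1", 5000, 5600)]
EXAMPLE_LCRS = [("chr1", 900, 1600)]

calls = parse_pass_deletions(EXAMPLE_VCF)
print(stratify(calls, EXAMPLE_TRUTH, EXAMPLE_LCRS))
```

Precision and recall for each stratum then follow from the TP, FP, and FN counts using the formulas in the Benchmarking step. Variants that straddle a region boundary are assigned to the "inside" stratum here, which is one of several reasonable conventions.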

Expected Outcome: A clear gradient of improving recall (and often precision) is expected, moving from the primary assembly to the graph-based reference, with the most significant improvements observed within LCRs and other difficult-to-map regions [31] [29].

Protocol: Evaluating Reference Impact on Small Variant Calling in Exomes

Objective: To measure the effect of reference choice on SNV and indel detection accuracy in whole-exome sequencing data.

Materials:

- Benchmark Samples: GIAB WES data for HG001, HG002, and HG003 (Agilent SureSelect V5) [32].

- Truth Sets: GIAB small variant truth sets (v4.2.1) for each sample.

- Reference Genomes: GRCh38 standard vs. GRCh38 graph-based.

- Software: DRAGEN Enrichment pipeline or GATK Best Practices pipeline (BWA-MEM + GATK HaplotypeCaller), `hap.py` for benchmarking.

Procedure:

- Pipeline Execution: Process each sample's FASTQs through the chosen variant calling pipeline twice: once with the standard linear GRCh38 and once with the graph-based GRCh38 reference. Keep all other parameters identical.

- Variant Evaluation: Run `hap.py` on the two output VCFs for each sample against their respective truth sets, confined to the exome capture regions.

- Metrics Collection: Record the F1 score (harmonic mean of precision and recall), stratified by variant type (SNV, insertion, deletion) and genomic context (e.g., high-confidence regions vs. all targeted bases).

- Variant Concordance Analysis: Use `bcftools isec` to identify variants unique to each reference run. Manually inspect a subset of discordant calls in a genome browser (e.g., IGV) to determine if graph-based mapping resolved ambiguous alignments.
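The metrics and concordance steps can be illustrated with a short sketch. It computes the F1 score from precision and recall, and mimics the set logic behind a two-file intersection by comparing exact (chromosome, position, REF, ALT) keys. It assumes both VCFs have already been normalized to the same representation, and the example records and function names are hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def variant_keys(vcf_text):
    """Return a set of (chrom, pos, ref, alt) keys, one per ALT allele."""
    keys = set()
    for line in vcf_text.splitlines():
        if not line or line.startswith("#"):
            continue
        chrom, pos, _id, ref, alt = line.split("\t")[:5]
        for allele in alt.split(","):
            keys.add((chrom, int(pos), ref, allele))
    return keys


def classify(ref, alt):
    """Label a variant by comparing REF and ALT allele lengths."""
    if len(ref) == len(alt):
        return "SNV" if len(ref) == 1 else "MNV"
    return "insertion" if len(alt) > len(ref) else "deletion"


def compare_runs(linear_vcf, graph_vcf):
    """Split calls into those unique to each reference run and those shared."""
    linear, graph = variant_keys(linear_vcf), variant_keys(graph_vcf)
    return {
        "linear_only": linear - graph,
        "graph_only": graph - linear,
        "shared": linear & graph,
    }


# Hypothetical records standing in for the two pipeline outputs.
LINEAR_VCF = "\n".join([
    "\t".join(["#CHROM", "POS", "ID", "REF", "ALT"]),
    "\t".join(["chr1", "100", ".", "A", "G"]),
    "\t".join(["chr1", "200", ".", "AT", "A"]),
])
GRAPH_VCF = "\n".join([
    "\t".join(["#CHROM", "POS", "ID", "REF", "ALT"]),
    "\t".join(["chr1", "100", ".", "A", "G"]),
    "\t".join(["chr1", "300", ".", "C", "CTT"]),
])

concordance = compare_runs(LINEAR_VCF, GRAPH_VCF)
for chrom, pos, ref, alt in sorted(concordance["graph_only"]):
    print(chrom, pos, classify(ref, alt))
```

The variants in `graph_only` and `linear_only` are the discordant calls that the protocol suggests inspecting manually in a genome browser.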

Expected Outcome: The graph-based reference should yield a higher F1 score, particularly for indels. Variants unique to the graph-based run are likely located in regions with high population diversity or sequence similarity, where the linear reference caused mapping ambiguity [29].

Table 3: Key Research Reagent Solutions for Reference-Based Variant Discovery

| Item / Resource | Function / Purpose | Example / Supplier | Critical Consideration |
|---|---|---|---|
| High-Quality Reference Genome | The baseline sequence for read alignment and variant identification. | GRCh38 from GENCODE; DRAGEN Graph Reference from Illumina [29]. | Choice between linear, ALT-masked, or graph-based directly impacts accuracy [31] [29]. |
| Benchmark Truth Sets | Gold-standard variant calls for a specific sample to validate and benchmark pipeline performance. | Genome in a Bottle (GIAB) Consortium datasets for HG001-HG007 [31] [32]. | Essential for objectively measuring precision and recall. Use version-matched truth sets and high-confidence regions. |
| Variant Calling Assessment Tool | Software to quantitatively compare pipeline output VCFs to a truth set. | hap.py (Illumina); VCAT; truvari. | Provides standardized metrics (TP, FP, FN, precision, recall) for performance comparison. |
| Alignment Software | Aligns sequencing reads to the chosen reference genome. | DRAGEN Mapper, DRAGMAP, BWA-MEM2, minimap2 [31]. | Performance varies by reference type; graph references require compatible aligners [29]. |
| Variant Caller | Identifies positions where sample data differs from the reference. | DRAGEN, GATK HaplotypeCaller, DeepVariant, Manta (for SVs) [31] [32]. | Must be chosen based on variant type (SNV/indel vs. SV) and sequencing technology (short vs. long read) [30]. |
| Sequence Data Archive | Source of publicly available sequencing data for testing and benchmarking. | NCBI Sequence Read Archive (SRA), GIAB FTP site [31] [32]. | Allows method validation without generating new sequencing data. |
| Region Annotation Files | Defines genomic intervals for stratified performance analysis (e.g., difficult regions). | Low-Complexity Region (LCR) BED files; GIAB high-confidence regions; exome capture kit BED files [31]. | Enables understanding of pipeline weaknesses in specific genomic contexts. |

Integrated Analysis Workflow and Best Practices

A modern, reference-aware NGS analysis pipeline for variant discovery integrates the choice of reference genome at its core. The workflow below visualizes this integrated process.

Best Practice Recommendations:

- Default to the Latest Graph-Enabled Reference: For any new study, especially in human genetics, begin evaluations with the most recent graph-based reference (e.g., DRAGEN's hg38 graph). It offers the best chance of accurate mapping in polymorphic regions without sacrificing accuracy in standard regions [29], at the cost of added computational complexity and a larger reference.

- Maintain Reference Consistency: The same reference version must be used throughout the entire pipeline—from alignment through variant calling and annotation. Mixing references will cause coordinate mismatches and severe errors [29].

- Stratify Performance Metrics: Always benchmark pipeline performance separately for easy-to-map regions and difficult-to-map regions (e.g., LCRs, MHC). Aggregate metrics can mask poor performance in complex areas that are often medically relevant [31].

- Match the Tool to the Variant Type: Use specialized callers for different variant classes: optimized small variant callers (DRAGEN, GATK) for SNVs/indels, and dedicated SV callers (Manta, DRAGEN SV, Sniffles2) for structural variants. Performance varies significantly by tool and technology [31] [30] [32].

- Plan for the Pangenome Transition: Anticipate the future adoption of pangenome references. Design data storage and analysis workflows with flexibility in mind, ensuring raw data (FASTQs) are preserved for potential realignment against new references in the future.
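The reference-consistency recommendation above lends itself to an automated check. The sketch below compares contig names and lengths declared in a SAM/BAM header (`@SQ` lines with `SN` and `LN` tags) against those declared in a VCF header (`##contig` lines). It is a simplified illustration with hypothetical function names, and it assumes header text has already been extracted and that `##contig` values contain no quoted commas.

```python
import re

CONTIG_LINE = re.compile(r"##contig=<(.*)>")


def contigs_from_sam_header(header_text):
    """Map contig name -> length from @SQ lines of a SAM/BAM header."""
    contigs = {}
    for line in header_text.splitlines():
        if line.startswith("@SQ"):
            tags = dict(field.split(":", 1) for field in line.split("\t")[1:])
            contigs[tags["SN"]] = int(tags["LN"])
    return contigs


def contigs_from_vcf_header(header_text):
    """Map contig ID -> length from ##contig lines of a VCF header."""
    contigs = {}
    for line in header_text.splitlines():
        match = CONTIG_LINE.match(line)
        if match:
            tags = dict(kv.split("=", 1) for kv in match.group(1).split(",") if "=" in kv)
            if "ID" in tags and "length" in tags:
                contigs[tags["ID"]] = int(tags["length"])
    return contigs


def reference_mismatches(sam_contigs, vcf_contigs):
    """List human-readable discrepancies between the two contig dictionaries."""
    problems = []
    for name in sorted(set(sam_contigs) | set(vcf_contigs)):
        sam_len, vcf_len = sam_contigs.get(name), vcf_contigs.get(name)
        if sam_len is None:
            problems.append(f"{name}: missing from SAM header")
        elif vcf_len is None:
            problems.append(f"{name}: missing from VCF header")
        elif sam_len != vcf_len:
            problems.append(f"{name}: length {sam_len} (SAM) vs {vcf_len} (VCF)")
    return problems


# Example headers: the chr1 lengths correspond to GRCh38 (248956422)
# and hg19/GRCh37 (249250621), simulating a mixed-reference mistake.
SAM_HEADER = "@HD\tVN:1.6\tSO:coordinate\n@SQ\tSN:chr1\tLN:248956422"
VCF_HEADER = "##fileformat=VCFv4.2\n##contig=<ID=chr1,length=249250621>"

issues = reference_mismatches(
    contigs_from_sam_header(SAM_HEADER),
    contigs_from_vcf_header(VCF_HEADER),
)
print(issues)
```

Running a check of this kind before annotation or benchmarking would surface a mixed-reference error early, before it shows up as coordinate mismatches downstream.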

The reference genome is far from a passive backdrop in variant discovery; it is an active and critical component that shapes the sensitivity and specificity of the entire NGS analysis pipeline. The transition from linear to graph-based and pangenome references marks a fundamental shift towards reducing reference bias and achieving more equitable genomic analysis across diverse human populations [31].

For researchers building variant calling pipelines, the evidence strongly supports adopting advanced references. The quantitative improvements in accuracy, especially for technically challenging variant types and genomic regions, are clear [31] [29] [32]. Future developments will involve the seamless integration of long-read sequencing data, which provides inherent advantages for resolving complex variation, with pangenome graph references. Furthermore, the application of machine learning models for variant quality scoring and filtering will become increasingly sophisticated, potentially using reference context as a key feature [33]. Ultimately, the goal is a fully population-aware, context-sensitive analysis framework where the reference genome dynamically represents the continuum of human genetic diversity, ensuring that variant discovery is both comprehensive and unbiased.

Implementing Robust Variant Calling Workflows: Tools, Techniques, and Clinical Applications

In next-generation sequencing (NGS) data analysis pipelines for variant calling, the alignment of sequencing reads to a reference genome is a foundational step whose accuracy and efficiency critically determine the quality of all downstream results [34]. This article provides detailed application notes and protocols for three prominent mapping tools—BWA-MEM2, DRAGEN, and Novoalign—framed within the context of a comprehensive thesis on optimizing NGS pipelines for genomic research and drug development.

BWA-MEM2 represents a performance-optimized successor to the widely adopted BWA-MEM algorithm, focusing on computational speed while maintaining identical alignment output [35] [36]. In contrast, DRAGEN (Dynamic Read Analysis for GENomics) is a comprehensive, hardware-accelerated bioinformatics platform designed for end-to-end analysis, from mapping to variant calling across all variant types [37] [38]. Novoalign is a commercial aligner renowned for its high sensitivity and accuracy, particularly for short reads, and is often cited for its superior performance in germline variant detection pipelines [39] [40].

Selecting the appropriate aligner requires balancing multiple factors, including accuracy, speed, cost, and the specific requirements of the research project, such as the need to detect structural variants or analyze multi-species samples [41] [37]. The following sections provide a detailed comparative analysis, experimental protocols, and a decision framework to guide researchers in integrating these tools into robust variant calling workflows.

Comparative Analysis of Mapping Tools

The choice of alignment software impacts pipeline speed, computational resource consumption, and the ultimate accuracy of variant detection. The following tables summarize the core technical specifications and performance metrics of BWA-MEM2, DRAGEN, and Novoalign.

Table 1: Technical Specifications and Primary Use Cases