Overcoming the Activity Cliff Challenge: Strategies for Robust 3D-QSAR Prediction

Abstract

Activity cliffs (ACs), where minute structural modifications cause drastic potency shifts, represent a significant source of prediction error and a central challenge for 3D-QSAR modeling in drug discovery. This article provides a comprehensive resource for researchers and drug development professionals, exploring the foundational nature of SAR discontinuity and its quantifiable impact on model accuracy. We detail advanced methodological frameworks, from novel molecular representations to activity cliff-aware machine learning algorithms like ACARL, and offer practical troubleshooting protocols for data curation and model interpretation. Finally, we establish rigorous validation standards and comparative benchmarks for assessing model performance on cliff-prone compounds, synthesizing these insights into a forward-looking perspective on creating more predictive and reliable 3D-QSAR models.

Understanding SAR Discontinuity: Deconstructing the Activity Cliff Phenomenon in 3D-QSAR

FAQs on Activity Cliffs & SAR Discontinuity

What is an Activity Cliff (AC) and why is it problematic for QSAR modeling?

An Activity Cliff (AC) is a pair of small molecules that exhibit high structural similarity but simultaneously show an unexpectedly large difference in their binding affinity against a given pharmacological target [1]. The existence of ACs directly defies the molecular similarity principle, which states that chemically similar compounds should have similar biological activities [1]. These cliffs form discontinuities in the SAR landscape and are a major roadblock for successful Quantitative Structure-Activity Relationship (QSAR) modeling because machine learning algorithms struggle to predict these abrupt changes in potency [1].

What are the common technical issues encountered when building 3D-QSAR models for cliff-rich datasets?

The primary challenge in 3D-QSAR is molecular alignment [2]. Unlike 2D-QSAR where molecular descriptors are fixed, the input for a 3D-QSAR model is a set of aligned molecules, and the correct alignment is generally not known [2]. If alignments are incorrect, the model will have limited or no predictive power. A frequent error is to tweak alignments based on model outputs, which violates the independence of the input data and can lead to invalid, over-optimistic models [2].

How can I assess whether my analog series is becoming chemically saturated?

Chemical saturation of an analog series can be computationally assessed by evaluating the sampling of chemical space around the series. This involves generating a population of Virtual Analogs (VAs) and projecting both existing analogs and VAs into a chemical feature space [3]. Key scores can then be calculated:

- Coverage Score (C): Measures the extensiveness of chemical space coverage by your existing analogs [3].

- Density Score (D): Determines how closely your existing analogs map the chemical space by quantifying the overlap of their neighborhoods [3].

These are combined into a chemical saturation score (S), which helps diagnose the progress of lead optimization [3].

My QSAR model performs well overall but fails on specific compounds. Could activity cliffs be the reason?

Yes. It has been observed that QSAR models, including modern deep learning approaches, frequently fail to predict activity cliffs and incur a significant drop in performance when the test set is restricted to "cliffy" compounds involved in many ACs [1]. This low sensitivity in predicting ACs is a major source of prediction error, even for otherwise well-performing models [1].

Are there specific molecular representations that are better for predicting activity cliffs?

Research indicates that graph isomorphism networks (GINs), a type of graph neural network, are competitive with or even superior to classical molecular representations like extended-connectivity fingerprints (ECFPs) for the specific task of AC classification [1]. However, for general QSAR prediction tasks, ECFPs still consistently deliver the best performance among tested input representations [1].

Troubleshooting Guides

Issue: Poor 3D-QSAR Model Performance Due to Incorrect Alignment

Problem: Your 3D-QSAR model shows poor predictive power (low q²), potentially because the molecular alignments are suboptimal or biased.

Solution: Implement a rigorous, activity-agnostic alignment workflow.

- Identify a Reference Molecule: Choose a representative molecule and invest time to establish its likely bioactive conformation, using crystal structures or tools like FieldTemplater if available [2].

- Initial Alignment: Align the rest of the dataset to the reference using a substructure alignment algorithm to ensure the common core is consistently positioned [2].

- Iterative Refinement: Manually inspect alignments for poorly specified molecules (e.g., those with substituents going into unexplored areas). Select a good example, manually adjust its alignment to a plausible conformation, and promote it to a reference. Re-align the dataset using multiple references and 'Maximum' scoring mode [2].

- Finalize Before Modeling: Repeat the iterative refinement step until all molecules are aligned satisfactorily. Crucially, this entire process must be done without considering the activity values of the compounds. Only after the alignments are fixed should you run the QSAR analysis [2].

Issue: Low AC-Prediction Sensitivity in QSAR Models

Problem: Your QSAR model has acceptable overall accuracy but fails to identify critical Activity Cliffs, limiting its utility for compound optimization.

Solution:

- Incorporate Pairwise Information: Repurpose your QSAR model for AC prediction by using it to predict the activities of two structurally similar compounds and then thresholding the predicted absolute activity difference [1].

- Use AC-Sensitive Representations: Experiment with molecular representations that capture finer structural details, such as Graph Isomorphism Networks (GINs), which have shown promise for AC-classification tasks [1].

- Leverage Known Activity Data: AC-prediction sensitivity substantially increases when the actual activity of one compound in the pair is known. In diagnostic workflows, use available experimental data for one molecule to improve the cliff prediction for its similar partner [1].
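
The pairwise thresholding described above can be sketched in a few lines. This is a minimal illustration, not the procedure from [1]: the function name `predicted_cliff`, the 2.0 log-unit default threshold, and the example activity values are all assumptions chosen for demonstration.

```python
def predicted_cliff(activity_a, activity_b, threshold=2.0):
    """Return True when the absolute activity difference reaches the threshold.

    Activities are assumed to be on a log scale (e.g. pKi or pIC50),
    so a threshold of 2.0 corresponds to a 100-fold potency difference.
    """
    return abs(activity_a - activity_b) >= threshold


# Case 1: both activities come from the QSAR model (hypothetical predictions).
both_predicted = predicted_cliff(7.9, 6.4)

# Case 2: the experimental activity of one compound is known (8.6),
# and only its structurally similar partner is predicted (6.4).
one_known = predicted_cliff(8.6, 6.4)

print(both_predicted, one_known)
```

In this made-up example the model compresses the difference between the two analogs, so the cliff is only flagged once the measured value replaces one of the predictions.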

Issue: Diagnosing Progress in Lead Optimization for an Analog Series

Problem: It is challenging to decide whether to continue or terminate work on an analog series due to uncertainty about chemical saturation and SAR progression.

Solution: Use a combined diagnostic approach like the Compound Optimization Monitor (COMO) concept [3].

- Generate Virtual Analogs (VAs): Decorate the common core structure of your series with a large library of substituents to define the series-centric chemical space [3].

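- Calculate Diagnostic Scores: Compute the coverage (C) and density (D) scores, combine them into the chemical saturation score (S), and assess SAR progression across the series [3].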

- Interpret Score Combinations:

- High Saturation + Low SAR Progression: The series is chemically saturated with little room for potency improvement; consider terminating the series [3].

- Low Saturation + High SAR Progression: Significant chemical space remains to be explored and structural changes yield strong potency responses; the series has high potential for further optimization [3].

Experimental Protocols & Data

Protocol: Evaluating QSAR Models for AC-Prediction Performance

This protocol outlines how to benchmark a QSAR model's ability to classify Activity Cliffs [1].

1. Data Set Curation:

- Select a target-specific data set (e.g., dopamine receptor D2, factor Xa) with measured binding affinities.

- Standardize structures (e.g., using the ChEMBL structure pipeline) and remove duplicates [1].

2. Define Activity Cliffs:

- For all pairs of structurally similar compounds (similarity can be defined by Tanimoto coefficient on a fingerprint like ECFP), calculate the absolute difference in activity (e.g., pKi or pIC50).

- Define a threshold for "large activity difference" (e.g., >100-fold change in potency) to formally label a pair as an Activity Cliff [1].

3. Model Training & Prediction:

- Train QSAR models using various molecular representations (e.g., ECFPs, GINs) and algorithms (e.g., Random Forest, MLP) on a training set.

- Ensure the test set contains a hold-out collection of AC and non-AC pairs [1].

4. Performance Assessment:

- Task 1 (AC Classification): Use the model to predict activities for both compounds in a pair. Classify the pair as an AC if the predicted activity difference exceeds the threshold. Report sensitivity and specificity [1].

- Task 2 (Compound Ranking): For each pair, predict which compound is more active. Report the accuracy of this ranking [1].
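
The two assessment tasks reduce to simple counting once the pair labels and predictions are available. The sketch below assumes boolean AC labels per pair and (activity, activity) tuples per pair; the function names and the treatment of ties in the ranking task are illustrative choices, not taken from [1].

```python
def sensitivity_specificity(true_labels, predicted_labels):
    """Sensitivity and specificity for AC classification over compound pairs."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if t and p)
    fn = sum(1 for t, p in pairs if t and not p)
    tn = sum(1 for t, p in pairs if not t and not p)
    fp = sum(1 for t, p in pairs if not t and p)
    # NaN signals that a class is absent from the evaluated pairs.
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


def ranking_accuracy(true_pairs, predicted_pairs):
    """Fraction of pairs in which the predicted ordering matches the measured one."""
    correct = sum(
        1
        for (true_a, true_b), (pred_a, pred_b) in zip(true_pairs, predicted_pairs)
        if (true_a > true_b) == (pred_a > pred_b)
    )
    return correct / len(true_pairs)


# Hypothetical hold-out pairs: True marks a real (or predicted) activity cliff.
true_ac = [True, True, False, False, True]
pred_ac = [True, False, False, True, False]
sens, spec = sensitivity_specificity(true_ac, pred_ac)

# Hypothetical measured and predicted pKi values for three pairs.
measured = [(8.0, 6.0), (5.5, 7.5), (6.0, 6.5)]
predicted = [(7.0, 6.5), (6.8, 6.2), (5.9, 6.4)]
rank_acc = ranking_accuracy(measured, predicted)
```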

Quantitative Data on QSAR Model Performance for AC Prediction

The table below summarizes findings from a systematic study comparing different QSAR models on their ability to predict activity cliffs [1].

Table 1: AC-Prediction Performance of Different QSAR Models

| Molecular Representation | Regression Algorithm | General QSAR Prediction Performance | AC Classification Sensitivity | Key Finding |
|---|---|---|---|---|
| Extended-Connectivity Fingerprints (ECFPs) | Random Forest (RF) | Consistently good | Low when both activities unknown | Best for general QSAR prediction [1] |
| Physicochemical-Descriptor Vectors (PDVs) | k-Nearest Neighbors (kNN) | Variable | Low when both activities unknown | - |
| Graph Isomorphism Networks (GINs) | Multilayer Perceptron (MLP) | Competitive | Competitive or superior to ECFPs/PDVs | Best baseline for AC-prediction [1] |
| All Representations | All Algorithms | - | Increases substantially | Knowing the activity of one compound in the pair greatly helps [1] |

Key Research Reagent Solutions

Table 2: Essential Computational Tools for SAR Analysis

| Item / Software | Primary Function in SAR Analysis | Relevance to Activity Cliff Research |
|---|---|---|
| QSAR Toolbox | A software application that integrates various databases and tools for (Q)SAR assessment [4] [5]. | Used for chemical hazard identification, data gap-filling, and profiling, helping to identify outliers and SAR trends. |
| Cresset's Forge/Torch | Software for 3D molecular modeling and 3D-QSAR analysis, specializing in field-based molecular alignment [2]. | Critical for generating and validating the molecular alignments that are the foundation of 3D-QSAR models on cliff-rich datasets. |
| RDKit | An open-source toolkit for cheminformatics and machine learning [1]. | Used for standardizing structures, calculating molecular descriptors, generating fingerprints (like ECFPs), and handling SMILES strings. |
| Graph Neural Network Libraries (e.g., PyTorch Geometric) | Libraries for implementing deep learning on graph-structured data [1]. | Enables the implementation and testing of modern representations like Graph Isomorphism Networks (GINs) for improved AC-prediction. |

Diagnostic Workflows & Pathways

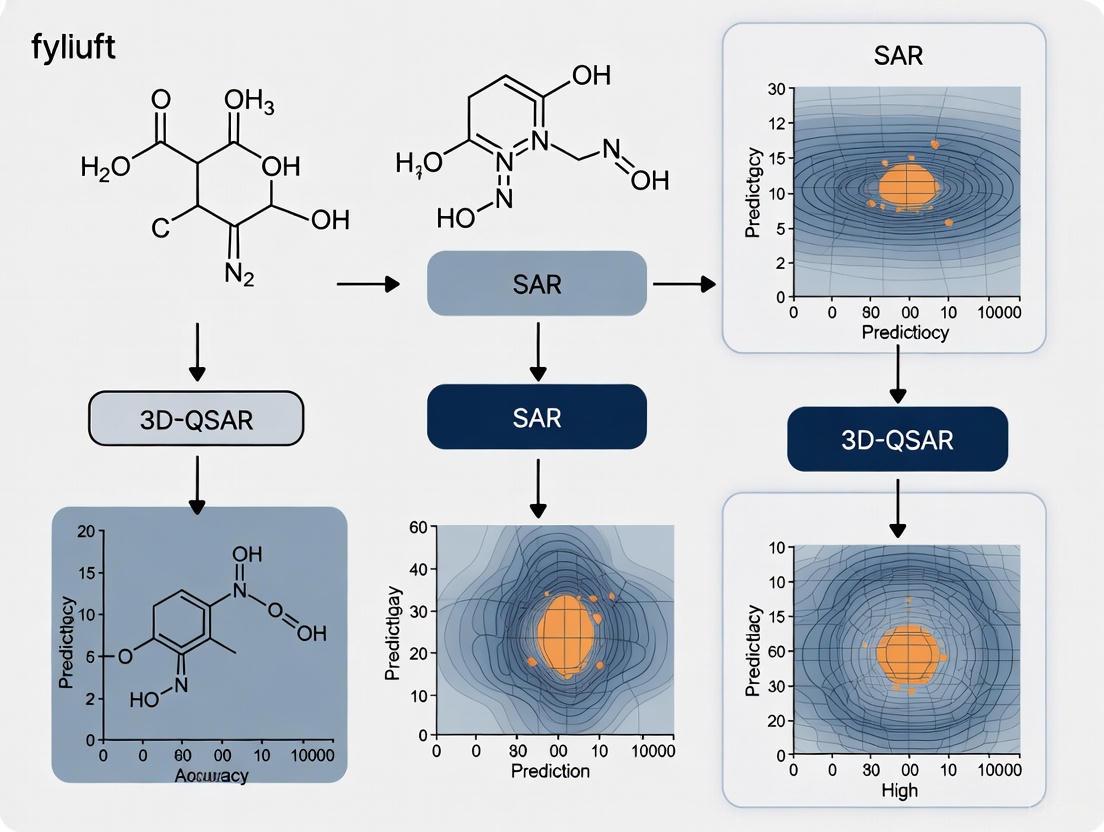

Activity Cliff Identification and Diagnosis Workflow

The following diagram visualizes the recommended pathway for identifying and diagnosing Activity Cliffs within a compound dataset, integrating computational checks and decision points.

3D-QSAR Alignment Validation Protocol

This diagram outlines a critical experimental protocol for validating molecular alignments in 3D-QSAR to prevent the creation of biased models, a common issue when dealing with SAR discontinuities.

Frequently Asked Questions (FAQs)

1. What are activity cliffs and why are they a problem in QSAR modeling? Activity cliffs are pairs of structurally similar molecules that exhibit a large, unexpected difference in their biological activity or binding affinity [6] [1]. They represent discontinuities in the Structure-Activity Relationship (SAR) landscape. From a modeling perspective, these cliffs are problematic because they defy the fundamental principle of similar structures having similar activities, which is a cornerstone of many statistical QSAR approaches. Datasets containing numerous activity cliffs can lead to inaccurate and unreliable predictive models [6] [1].

2. How do SALI and SARI metrics differ in their approach? The core difference lies in their scope and calculation. The Structure-Activity Landscape Index (SALI) is a pairwise measure that focuses on individual molecule pairs independent of targets. It calculates the ratio of the absolute activity difference to the structural dissimilarity (1 - similarity) for a given pair [6]. In contrast, the SAR Index (SARI) is designed to characterize groups of molecules for a specific target. It combines separate continuity and discontinuity scores to provide a more global view of SAR trends, allowing for the direct identification of both continuous and discontinuous regions within a dataset [6] [7].

3. My QSAR model is performing poorly. Could activity cliffs be the cause? Yes, this is a common issue. Recent systematic studies provide strong support for the hypothesis that QSAR models frequently fail to predict activity cliffs, which forms a major source of prediction error [1]. If your test set contains a significant number of "cliffy" compounds (those involved in activity cliffs), you are likely to observe a substantial drop in model performance, even when using highly adaptive machine learning or deep learning models [1].

4. What is the best way to visualize an activity landscape? Several visualization methods exist, each with its own strengths:

- SALI Matrix Heatmap: A simple visualization where the SALI matrix is plotted as an image, with axes often ordered by potency. Large SALI values (indicative of cliffs) are color-coded for easy identification [6].

- SALI Network: A network graph where nodes represent molecules and edges connect pairs with a SALI value above a defined threshold. This helps in interactively exploring significant cliffs and identifying key compounds [6].

- SAS Maps: A 2D plot of structural similarity against activity similarity, divided into quadrants that help identify smooth SAR regions, activity cliffs, and other interesting behaviors [6].
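
As a rough illustration of how a SAS map partitions compound pairs, the sketch below assigns a pair to a quadrant from its structural similarity and its absolute activity difference (used here in place of an activity-similarity axis). The cutoffs `sim_cut` and `act_cut` and the label for the fourth region are assumptions for demonstration, not values prescribed in [6].

```python
def sas_region(similarity, activity_diff, sim_cut=0.7, act_cut=2.0):
    """Assign a compound pair to a SAS-map quadrant.

    similarity    -- structural similarity in [0, 1] (e.g. Tanimoto)
    activity_diff -- absolute potency difference in log units
    """
    high_sim = similarity >= sim_cut
    high_diff = activity_diff >= act_cut
    if high_sim and high_diff:
        return "activity cliff"   # similar structures, very different potency
    if high_sim:
        return "smooth SAR"       # similar structures, similar potency
    if high_diff:
        return "nondescript"      # dissimilar structures, different potency
    return "scaffold hop"         # dissimilar structures, similar potency


print(sas_region(0.92, 2.6))
print(sas_region(0.35, 0.2))
```

Plotting every pair with these two quantities as axes and coloring by the returned label gives a basic version of the map; the cutoffs should be tuned to the fingerprint and activity range in use.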

Troubleshooting Guides

Issue 1: Identifying and Characterizing Activity Cliffs in Your Dataset

Problem: You have a dataset of active compounds and need to systematically identify and quantify all significant activity cliffs.

Solution: Implement a computational workflow to calculate pairwise landscape metrics.

- Step 1: Data Preparation. Ensure your dataset is curated, with standardized chemical structures and reliable, consistent activity measurements (e.g., IC50, Ki).

- Step 2: Calculate Molecular Similarity. Choose an appropriate molecular representation (e.g., ECFP fingerprints, physicochemical descriptors) and calculate the pairwise structural similarity (e.g., Tanimoto coefficient) for all compounds in your dataset [6] [1].

- Step 3: Compute Pairwise Activity Differences. Calculate the absolute difference in activity (e.g., ΔpIC50) for all compound pairs.

- Step 4: Calculate SALI Values. For each compound pair, compute the SALI using the formula [6]:

- SALI = |Ai - Aj| / (1 - sim(i, j))

- Where Ai and Aj are the activities of the two molecules, and sim(i, j) is their structural similarity.

- Step 5: Set a Threshold and Analyze. Rank the compound pairs by their SALI values. Pairs with the highest SALI values represent the most significant activity cliffs. You can set a threshold (e.g., top 5% of SALI values) to focus on the most critical cliffs for further analysis [6].
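
The five steps above can be sketched with the standard library alone. This example assumes each fingerprint is available as a set of "on" bit indices (for instance exported from an ECFP calculation) and that activities are already on a log scale; the compound names, the toy fingerprints, and the handling of identical fingerprints (infinite SALI when activities differ) are illustrative assumptions.

```python
from itertools import combinations
import math


def tanimoto(fp_a, fp_b):
    """Tanimoto coefficient for fingerprints stored as sets of on-bit indices."""
    union = len(fp_a | fp_b)
    return len(fp_a & fp_b) / union if union else 0.0


def sali(act_i, act_j, sim):
    """SALI = |Ai - Aj| / (1 - sim(i, j))."""
    diff = abs(act_i - act_j)
    if sim >= 1.0:
        # Identical fingerprints: the ratio is undefined, so treat any
        # activity difference as an infinitely steep cliff (assumption).
        return math.inf if diff > 0 else 0.0
    return diff / (1.0 - sim)


def rank_pairs_by_sali(fingerprints, activities):
    """Return (id_i, id_j, SALI) for all pairs, steepest cliffs first."""
    scored = []
    for i, j in combinations(sorted(fingerprints), 2):
        sim = tanimoto(fingerprints[i], fingerprints[j])
        scored.append((i, j, sali(activities[i], activities[j], sim)))
    return sorted(scored, key=lambda row: row[2], reverse=True)


# Toy data: hypothetical on-bit sets and pIC50 values.
fingerprints = {"cpd_A": {1, 2, 3, 4}, "cpd_B": {1, 2, 3, 5}, "cpd_C": {7, 8, 9}}
activities = {"cpd_A": 8.0, "cpd_B": 5.0, "cpd_C": 6.0}

ranked = rank_pairs_by_sali(fingerprints, activities)
for id_i, id_j, value in ranked:
    print(id_i, id_j, round(value, 2))
```

A percentile or absolute cutoff can then be applied to `ranked` to keep only the pairs worth inspecting.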

Issue 2: Assessing Global SAR Trends and Modelability

Problem: Before building a QSAR model, you want to assess the overall "cliffiness" or smoothness of your dataset's SAR landscape to anticipate potential modeling challenges.

Solution: Use the SARI metric to evaluate the global SAR characteristics.

- Step 1: Data Preparation. As with the SALI method, start with a clean, curated dataset.

- Step 2: Calculate SARI Components. The SARI is composed of continuity and discontinuity scores derived from the potency-weighted mean of pairwise similarities and the average potency difference [6] [7].

- Step 3: Interpret Results. The SARI provides a quantitative measure of the overall SAR nature. A high degree of discontinuity (indicating many cliffs) suggests the dataset may have low "modelability," and standard QSAR models may perform poorly, particularly on cliffy compounds [1]. This analysis can help you decide whether to use more advanced modeling techniques or to segment your dataset before modeling.

Issue 3: Poor QSAR Prediction on Structurally Similar Compounds

Problem: Your QSAR model has good overall statistics but makes significant errors when predicting the activity of compounds that are structural analogs of each other.

Solution: Diagnose and address activity cliff-related prediction failures.

- Step 1: Post-Model Analysis. After building your model, use the SALI method (from Issue 1) to identify all activity cliff pairs within your test set.

- Step 2: Evaluate Cliff Prediction Accuracy. Check your model's predictions specifically for these cliff pairs. A low sensitivity in predicting the correct activity trend for cliffs confirms this as the core issue [1].

- Step 3: Model Refinement Strategies:

- Incorporate Cliff Information: If enough data is available, try to build a dedicated model for "cliffy" regions of the chemical space.

- Leverage Known Cliffs: Some studies suggest that providing the actual activity of one compound in a cliff pair can substantially improve the prediction sensitivity for the other [1]. Consider this if you are optimizing around a known high-potency compound.

- Use Advanced Representations: Explore if graph-based molecular representations (e.g., from Graph Isomorphism Networks) can capture the subtle structural features that lead to activity cliffs better than traditional fingerprints [1].

Key Metrics for SAR Landscape Quantification

The following table summarizes the core metrics for analyzing structure-activity landscapes.

Table 1: Key Metrics for SAR Landscape Analysis

| Metric | Full Name | Formula/Description | Primary Application | Key Advantage |
|---|---|---|---|---|
| SALI [6] | Structure-Activity Landscape Index | `SALI_i,j = \|Ai - Aj\| / (1 - sim(i, j))` | Identifying and ranking individual activity cliffs within a dataset. | Simple, intuitive pairwise measure that directly quantifies the "steepness" of a cliff. |
| SARI [6] [7] | SAR Index | `SARI = 1/2 * (score_cont + (1 - score_disc))`. Combines separate continuity and discontinuity scores. | Characterizing the global nature of the SAR for a target (smooth vs. discontinuous). | Provides a holistic view of SAR trends, enabling modelability assessment. |

Essential Experimental Protocols

Protocol 1: Calculating SALI for Activity Cliff Detection

Objective: To identify all significant activity cliff pairs in a congeneric series of compounds.

Materials:

- A dataset of chemical structures and corresponding biological activities (e.g., IC50 values).

- Cheminformatics software (e.g., RDKit, OpenBabel) for handling chemical data.

Methodology:

- Structure Standardization: Standardize all molecular structures (e.g., neutralize charges, remove salts, generate canonical tautomers) to ensure consistent representation.

- Descriptor Calculation: Generate a numerical representation for each molecule. Extended-Connectivity Fingerprints (ECFPs) are a widely used and effective choice for this purpose [1].

- Similarity Matrix Calculation: Compute the pairwise Tanimoto similarity for all compounds based on the chosen fingerprints.

- Activity Difference Matrix: Calculate the matrix of absolute activity differences. For potency data (e.g., IC50), it is common practice to use pIC50 (-log10 of the molar IC50) so that a difference of one unit corresponds to a 10-fold change in potency.

- SALI Matrix Calculation: For each compound pair (i, j), calculate the SALI value using the formula in Table 1.

- Thresholding and Identification: Sort all compound pairs by their SALI value. Select a threshold (e.g., top 5% of values or a specific SALI cutoff) to define significant activity cliffs for further visual inspection or analysis [6].

Protocol 2: Workflow for SAR Landscape Visualization

This protocol outlines the steps to create a SALI network visualization for exploring activity cliffs.

Objective: To create an interactive network graph for visualizing and exploring activity cliffs.

Materials:

- The SALI matrix calculated in Protocol 1.

- Data visualization libraries (e.g., Python's NetworkX and Plotly, or Cytoscape).

Methodology:

- Node Creation: Represent each unique molecule in your dataset as a node in the network.

- Edge Creation: For each compound pair, create an edge (link) if their SALI value exceeds a user-defined threshold.

- Graph Layout: Use a force-directed or organic layout algorithm to position the nodes. This typically results in highly connected clusters (activity cliffs) being grouped.

- Interactive Visualization: Implement an interactive visualization where nodes can be selected to view the chemical structure and activity, and the SALI threshold can be adjusted dynamically. This allows users to smoothly transition from a dense "hairball" to a sparse network highlighting only the most significant cliffs [6].
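
The node and edge construction can be prototyped before committing to a visualization library. The sketch below builds a plain adjacency dictionary from (molecule, molecule, SALI) triples; the function name, the example triples, and both thresholds are hypothetical. Molecules with no edge above the threshold are simply left out of the result.

```python
from collections import defaultdict


def build_sali_network(sali_pairs, threshold):
    """Keep only pairs whose SALI exceeds the threshold, as an undirected graph."""
    adjacency = defaultdict(dict)
    for mol_i, mol_j, value in sali_pairs:
        if value > threshold:
            adjacency[mol_i][mol_j] = value
            adjacency[mol_j][mol_i] = value
    return dict(adjacency)


# Hypothetical output of a SALI calculation.
sali_pairs = [
    ("cpd_A", "cpd_B", 7.5),
    ("cpd_A", "cpd_C", 2.0),
    ("cpd_B", "cpd_C", 1.0),
]

# Raising the threshold thins the network down to the steepest cliffs.
dense = build_sali_network(sali_pairs, threshold=0.5)
sparse = build_sali_network(sali_pairs, threshold=5.0)
print(len(dense), len(sparse))
```

The filtered triples can be handed to a graph library for layout; in NetworkX, for example, `Graph.add_weighted_edges_from` accepts (node, node, weight) tuples of this form.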

Research Reagent Solutions

The following table lists key computational tools and concepts essential for conducting SAR landscape analysis.

Table 2: Essential Research Reagents & Tools for SAR Landscape Analysis

| Item / Concept | Function / Description | Application in SAR Analysis |
|---|---|---|
| Extended-Connectivity Fingerprints (ECFPs) [1] | A circular topological fingerprint that captures molecular features within a given radius from each atom. | A standard molecular representation for calculating structural similarity, a core component of SALI and SARI. |
| Matched Molecular Pairs (MMPs) [6] [7] | Pairs of compounds that differ only by a single, well-defined structural transformation. | Used in SAR data mining to systematically identify small structural changes that lead to large activity shifts (i.e., cliffs). |
| Graph Isomorphism Networks (GINs) [1] | A type of graph neural network that operates directly on the molecular graph structure. | An advanced molecular representation that can be competitive or superior for AC-classification tasks compared to classical fingerprints. |
| SAS Maps [6] | A 2D plot of structural similarity versus activity similarity. | A visualization technique to divide a dataset into regions of smooth SAR, activity cliffs, and scaffold hops. |

Workflow and Relationship Diagrams

SALI Analysis Workflow

The diagram below illustrates the logical sequence of steps for performing activity cliff analysis using the SALI metric.

Frequently Asked Questions (FAQs)

1. What is an Activity Cliff and why is it a problem for QSAR models? An activity cliff is a pair of structurally similar compounds that exhibit a large difference in their biological activity or binding affinity for a given target [8] [9]. This phenomenon creates a discontinuity in the Structure-Activity Relationship (SAR) landscape. QSAR models, which often rely on the principle that similar molecules have similar properties, struggle with these abrupt changes. They tend to make analogous predictions for structurally similar molecules, which leads to significant errors when those molecules form an activity cliff [1] [10].

2. How significant is the performance drop for QSAR models on activity cliff compounds? The performance drop is substantial. Research shows that the predictive capability of various QSAR methods, including descriptor-based, graph-based, and even advanced deep learning models, significantly deteriorates when applied to activity cliff compounds [10] [1]. One study found that neither enlarging the training set size nor increasing model complexity reliably improves accuracy for these challenging compounds [10].

3. Can modern Deep Learning methods like AlphaFold 3 accurately predict protein-ligand complexes involving novel binding poses? While deep learning co-folding methods have shown impressive results, they are still challenged by prediction targets with novel protein-ligand binding poses. Benchmark studies indicate that even state-of-the-art models like AlphaFold 3 fail to identify a structurally and chemically accurate pose for a considerable fraction of complexes, particularly those representing functionally distinct binding pockets not commonly seen in training data [11].

4. Are there specific molecular representations that are better at handling activity cliffs? Some studies suggest that features learned by graph isomorphism networks (GINs) can be competitive with or even superior to classical molecular representations like extended-connectivity fingerprints (ECFPs) for the specific task of activity-cliff classification. However, for general QSAR prediction tasks, ECFPs often still deliver the most consistent performance [1]. The choice of representation remains a critical factor for model performance on discontinuous SARs.

5. What practical steps can I take to improve my model's performance on discontinuous SARs?

- Incorporate Activity Cliff Awareness: Novel frameworks like Activity Cliff-Aware Reinforcement Learning (ACARL) explicitly identify activity cliffs during the molecular generation process and incorporate them into the optimization via a tailored contrastive loss function, leading to the generation of higher-affinity molecules [10].

- Use Structure-Based Docking for Validation: Docking software has been shown to reflect activity cliffs more authentically than simpler scoring functions. Using docking as an evaluation step can help identify cliffs that QSAR models might miss [10].

- Expand Data Diversity via Federation: Federated learning, which trains models across distributed proprietary datasets without centralizing data, can systematically expand a model's effective domain and improve its robustness when predicting unseen scaffolds, thereby mitigating some discontinuity issues [12].

Troubleshooting Guides

Issue 1: Poor QSAR Model Performance on "Cliffy" Compounds

Problem: Your QSAR model performs well on most compounds but shows large prediction errors for compounds involved in activity cliffs.

Solution:

- Diagnose the Issue: Systematically identify activity cliffs in your dataset. Calculate the pairwise structural similarity (e.g., using Tanimoto similarity on ECFP4 fingerprints) and the absolute difference in activity (e.g., pKi or pIC50) for all compound pairs [9] [1]. Pairs with high similarity but a large activity difference (commonly a 100-fold or 2 log unit difference) are activity cliffs.

- Analyze Model Sensitivity: Evaluate your model's prediction accuracy specifically on the subset of compounds identified as part of activity cliffs. Compare it to the accuracy on the rest of the dataset to quantify the performance gap [1].

- Refine the Model:

- Leverage Graph Neural Networks: Consider using models based on Graph Isomorphism Networks (GINs), which have shown potential for better handling cliff-related tasks [1].

- Implement a Hybrid Approach: For critical lead optimization, use a combination of a QSAR model and a structure-based method like molecular docking. The docking score can serve as an independent check for compounds flagged as potential cliffs [10] [13].
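
To quantify the performance gap mentioned in the sensitivity analysis, one option is to compute the error separately for cliff-involved compounds and for the remainder of the test set. The sketch below uses RMSE; the function names and the example values are hypothetical, and it assumes both subsets are non-empty.

```python
import math


def rmse(y_true, y_pred):
    """Root-mean-square error between measured and predicted activities."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


def cliff_performance_gap(y_true, y_pred, is_cliffy):
    """Return (RMSE on cliff compounds, RMSE on all other compounds)."""
    cliff = [(t, p) for t, p, flag in zip(y_true, y_pred, is_cliffy) if flag]
    rest = [(t, p) for t, p, flag in zip(y_true, y_pred, is_cliffy) if not flag]
    rmse_cliff = rmse(*zip(*cliff))
    rmse_rest = rmse(*zip(*rest))
    return rmse_cliff, rmse_rest


# Hypothetical test set: the first two compounds belong to activity cliffs.
y_true = [8.0, 5.0, 6.5, 7.0]
y_pred = [6.0, 7.0, 6.4, 7.1]
is_cliffy = [True, True, False, False]

rmse_cliff, rmse_rest = cliff_performance_gap(y_true, y_pred, is_cliffy)
print(round(rmse_cliff, 2), round(rmse_rest, 2))
```

A large ratio between the two values points to activity cliffs, rather than general model weakness, as the main source of error.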

Issue 2: Handling Multi-Ligand Binding and Novel Pockets in Structure-Based Predictions

Problem: When using protein-ligand structure prediction or docking tools for targets involving multiple ligands or novel binding pockets, the accuracy of the predicted complex is low.

Solution:

- Benchmark Your Setup: Before a large-scale screen, run control calculations on a benchmark set with known structures, such as those from PoseBench, which includes challenging multi-ligand and apo-to-holo docking scenarios [11].

- Evaluate Multiple Methodologies: Do not rely on a single tool. Empirically test different DL co-folding methods (like AlphaFold 3, Chai-1, Boltz-1) and conventional docking algorithms (like AutoDock Vina) on your specific target to identify the best-performing approach [11].

- Assess Input Dependencies: Be aware that the performance of some methods, such as AlphaFold 3, is highly sensitive to the quality and availability of input Multiple Sequence Alignments (MSAs). For novel targets with poor MSA coverage, consider using methods that are less MSA-dependent or that can leverage single-sequence inputs [11].

Experimental Protocols

Protocol 1: Systematic Identification and Analysis of Activity Cliffs

Objective: To identify and quantify activity cliffs within a compound dataset to understand the source of QSAR model errors.

Materials:

- A dataset of compounds with associated bioactivity values (e.g., Ki, IC50).

- Cheminformatics software (e.g., RDKit, Schrodinger's Canvas).

Methodology:

- Data Curation: Convert all activity values to a uniform measure of potency, preferably pKi or pIC50 (-log10 of the molar concentration). Standardize molecular structures.

- Calculate Molecular Similarity: Generate molecular fingerprints (e.g., ECFP4) for all compounds. Compute the pairwise Tanimoto similarity matrix.

- Define Activity Cliffs: Identify all compound pairs that meet the following criteria [9]:

- Structural Similarity: Tanimoto coefficient ≥ 0.85 (or use the matched molecular pair, MMP, criterion).

- Potency Difference: |ΔpKi| or |ΔpIC50| ≥ 2.0 (equivalent to a 100-fold difference in potency).

- Visualize and Analyze: Create an activity landscape plot with similarity versus activity difference. Analyze the chemical modifications associated with the identified cliffs to rationalize the SAR discontinuity.
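
The curation and cliff-definition steps can be sketched as follows. The example assumes IC50 values are given in nanomolar and that pairwise Tanimoto similarities have already been computed; the compound IDs, similarity values, and helper names are illustrative assumptions, while the 0.85 and 2.0 cutoffs follow the criteria listed above.

```python
import math


def pic50_from_nm(ic50_nm):
    """Convert an IC50 in nanomolar to pIC50 (-log10 of the molar value)."""
    return 9.0 - math.log10(ic50_nm)


def find_cliffs(similarities, potencies, sim_min=0.85, delta_min=2.0):
    """Return (id_i, id_j, similarity, potency difference) for each cliff pair."""
    cliffs = []
    for (i, j), sim in similarities.items():
        delta = abs(potencies[i] - potencies[j])
        if sim >= sim_min and delta >= delta_min:
            cliffs.append((i, j, sim, delta))
    return cliffs


# Hypothetical IC50 values (nM) and precomputed pairwise similarities.
ic50_nm = {"cpd_1": 10.0, "cpd_2": 5000.0, "cpd_3": 20.0}
potencies = {name: pic50_from_nm(value) for name, value in ic50_nm.items()}
similarities = {
    ("cpd_1", "cpd_2"): 0.90,
    ("cpd_1", "cpd_3"): 0.88,
    ("cpd_2", "cpd_3"): 0.60,
}

cliffs = find_cliffs(similarities, potencies)
for i, j, sim, delta in cliffs:
    print(i, j, sim, round(delta, 2))
```

Only the first pair qualifies here: it is highly similar and differs by roughly 2.7 log units, whereas the other pairs fail either the potency or the similarity criterion.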

Protocol 2: Implementing an Activity Cliff-Aware Molecular Optimization Loop

Objective: To use reinforcement learning (RL) to generate novel compounds with high activity, explicitly accounting for activity cliffs.

Materials:

- A starting set of active compounds.

- A scoring function (e.g., a trained QSAR model, docking score, or experimental assay).

- An RL framework for molecular generation (e.g., utilizing a Transformer decoder).

Methodology:

- Train a Prior Model: Pre-train a generative model (e.g., a SMILES-based Transformer) on a large corpus of drug-like molecules to learn the rules of chemical validity.

- Define the Reward: The reward function should combine the primary objective (e.g., predicted activity from a scoring function) with an activity cliff term [10].

- Calculate Activity Cliff Index (ACI): For a generated molecule x, identify its nearest neighbor y in the training data. The ACI can be defined as: ACI(x, y) = |f(x) - f(y)| / (1 - Sim(x, y)), where f is the activity and Sim is the structural similarity. A high ACI indicates a cliff.

- RL Fine-Tuning: Fine-tune the generative model using a policy gradient method (e.g., REINFORCE) where the reward is a weighted sum of the primary score and the ACI. This incentivizes the model to explore regions of chemical space near known activity cliffs.

- Iterate and Validate: Generate new compounds, score them, and use the most promising ones to update the model iteratively. Validate top-ranked generated compounds experimentally or via rigorous molecular docking [13].
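
The ACI and the weighted-sum reward described in this protocol can be sketched as below. This follows the protocol as written here and is not the published ACARL implementation; the weight `aci_weight`, the small `eps` guard against division by zero, and the example values are assumptions for illustration.

```python
def activity_cliff_index(activity_x, activity_y, similarity, eps=1e-6):
    """ACI(x, y) = |f(x) - f(y)| / (1 - Sim(x, y)).

    eps caps the index when the two molecules are (nearly) identical
    under the chosen similarity measure (assumption).
    """
    return abs(activity_x - activity_y) / max(1.0 - similarity, eps)


def combined_reward(primary_score, aci, aci_weight=0.1):
    """Weighted sum of the primary objective and the activity cliff term."""
    return primary_score + aci_weight * aci


# Hypothetical generated molecule x and its nearest training neighbor y.
score_x = 8.2       # predicted activity of x from the scoring function
activity_y = 6.2    # known activity of y
similarity_xy = 0.9

aci = activity_cliff_index(score_x, activity_y, similarity_xy)
reward = combined_reward(score_x, aci)
print(round(aci, 2), round(reward, 2))
```

Because the ACI grows without bound as similarity approaches 1, the weight (or an explicit clipping step) usually needs tuning so the cliff term does not dominate the primary objective.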

Data Presentation

Table 1: QSAR Model Performance on Standard vs. Activity Cliff Compounds

This table summarizes the typical degradation in performance (measured by sensitivity or RMSE) that QSAR models experience when predicting compounds involved in activity cliffs, adapted from large-scale benchmarking studies [10] [1].

| Model / Representation | Sensitivity (Overall Test Set) | Sensitivity (Activity Cliff Compounds) | Relative Performance Drop |

|---|---|---|---|

| Random Forest (ECFP) | 0.75 | 0.28 | -63% |

| Graph Isomorphism Network (GIN) | 0.72 | 0.35 | -51% |

| Multilayer Perceptron (Physicochemical Descriptors) | 0.68 | 0.21 | -69% |

| Activity Cliff-Aware RL (ACARL) | N/A | N/A | Generates higher-affinity molecules [10] |

Table 2: Performance of Deep Learning Protein-Ligand Docking Methods on Challenging Benchmarks

This table compares the performance of different structure prediction methods on benchmark datasets designed to test generalization, such as docking to predicted (apo) protein structures and handling multi-ligand complexes [11]. Key metrics include the percentage of successful predictions with RMSD ≤ 2Å (SR-2) and chemical validity.

| Method | Astex Diverse (SR-2) | DockGen-E (SR-2) | PoseBusters Benchmark (SR-2) | Multi-Ligand Capability |

|---|---|---|---|---|

| AlphaFold 3 | High | < 25% | Moderate | Limited |

| Chai-1 | High | Moderate | Moderate (Less MSA-dependent) | Limited |

| Boltz-1 | High | Moderate | Moderate | Limited |

| Conventional Docking (Vina) | Lower than DL | Lower than DL | Lower than DL | Yes (with manual setup) |

Key challenge: Handling novel binding poses and multi-ligand targets remains difficult for all methods.

Visualization Diagrams

Diagram 1: The Activity Cliff Effect on QSAR Prediction

The Activity Cliff Effect - This diagram contrasts the standard QSAR assumption (leading to correct predictions) with the activity cliff reality (leading to prediction errors).

Diagram 2: Activity Cliff-Aware Reinforcement Learning Workflow

ACARL Workflow - This diagram outlines the steps in the Activity Cliff-Aware Reinforcement Learning (ACARL) process, showing how the Activity Cliff Index (ACI) is integrated into the optimization loop [10].

The Scientist's Toolkit

| Item / Resource | Function / Application | Key Characteristics |

|---|---|---|

| PoseBench [11] | A comprehensive benchmark for evaluating protein-ligand docking and structure prediction methods, especially under challenging conditions like using predicted protein structures and multi-ligand docking. | Includes primary ligand and multi-ligand datasets; facilitates systematic evaluation of deep learning and conventional methods. |

| Matched Molecular Pairs (MMPs) [9] | A substructure-based method to systematically identify pairs of compounds that differ only at a single site. Used to define "MMP-cliffs," a chemically intuitive type of activity cliff. | Provides a clear, interpretable similarity criterion that aligns well with medicinal chemistry practices. |

| Activity Cliff Index (ACI) [10] | A quantitative metric to detect and rank the intensity of activity cliffs by comparing structural similarity with differences in biological activity. | Enables the integration of activity cliff awareness into automated molecular design algorithms like reinforcement learning. |

| Federated Learning Platforms [12] | A computational technique that enables collaborative training of machine learning models across multiple institutions without sharing raw data. | Helps build more robust ADMET and QSAR models by increasing the chemical space coverage of training data, which can improve performance on cliffs. |

| Structure-Based Docking Software [10] [13] | Used to validate predictions and provide an independent, physics-based assessment of binding affinity that can capture activity cliffs missed by ligand-based models. | Software like AutoDock Vina and DOCK3.7 provide control protocols for large-scale virtual screening. |

In the field of quantitative structure-activity relationship (QSAR) modeling, the activity landscape is a conceptual and graphical framework that integrates chemical similarity and biological activity relationships for a set of compounds [14]. This landscape view allows researchers to visualize structure-activity relationships (SARs) as a three-dimensional surface, where the x- and y-axes represent chemical structure (often projected from high-dimensional descriptor space), and the z-axis represents biological activity [14] [6].

Within these landscapes, activity cliffs (ACs) represent the most prominent form of SAR discontinuity. An activity cliff is defined as a pair of structurally similar compounds that exhibit a large difference in potency against the same biological target [15] [9]. These cliffs directly challenge the fundamental similarity principle in medicinal chemistry - that structurally similar compounds should have similar biological effects [15] [1]. For QSAR modelers, activity cliffs present significant challenges as they represent discontinuities that are difficult for standard machine learning algorithms to capture, often forming a major source of prediction error [15] [1] [14].

The systematic identification and analysis of activity cliffs through landscape visualization techniques provides crucial insights for understanding SAR discontinuity and its impact on 3D-QSAR prediction accuracy. This technical support document addresses common challenges researchers face when working with activity landscape networks and SAR maps.

Troubleshooting Guide: Activity Landscape Analysis

FAQ: Landscape Generation and Interpretation

Q: What are the primary computational methods for generating activity landscapes from compound data?

A: Activity landscape generation involves two key computational steps:

- Chemical Space Projection: High-dimensional chemical descriptors (e.g., ECFP4 fingerprints) are projected into 2D space using dimensionality reduction techniques. Multi-dimensional scaling (MDS) and Neuroscale (using radial basis function neural networks) have been identified as preferred methods as they effectively preserve original similarity relationships [16].

- Surface Interpolation: A continuous activity surface is interpolated from sparse compound potency values using methods like Gaussian process regression (GPR) [16]. The resulting surface can be color-coded by potency using a gradient (e.g., green for low potency, yellow for medium, red for high potency) to enhance visualization of SAR trends [16].

Q: Why do my QSAR models consistently fail to predict certain compounds, and how can activity landscape analysis help diagnose this issue?

A: Prediction failures often cluster around activity cliffs [15] [1]. Systematic studies have shown that QSAR models frequently fail to predict ACs, which form a major source of prediction error [15] [1]. The presence of activity cliffs indicates SAR discontinuities that violate the smooth-function assumption underlying many machine learning algorithms [14]. To diagnose this:

- Calculate the Structure-Activity Landscape Index (SALI) for compound pairs in your dataset [14] [6]

- Identify compounds involved in multiple activity cliffs ("cliffy compounds") [9]

- Evaluate model performance separately on "cliffy" versus "non-cliffy" compounds - a significant performance drop on cliffy compounds indicates susceptibility to SAR discontinuities [15]
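The diagnostic steps above can be sketched as follows. The example assumes cliff pairs have already been identified (for instance via SALI) and are given as index pairs; the `min_cliffs=2` cutoff for calling a compound "cliffy" is an illustrative reading of "involved in multiple activity cliffs", not a value taken from the cited studies.

```python
import math
from typing import Dict, Iterable, Sequence, Set, Tuple


def cliffy_compounds(cliff_pairs: Iterable[Tuple[int, int]], min_cliffs: int = 2) -> Set[int]:
    """Compounds that take part in at least `min_cliffs` activity cliffs."""
    counts: Dict[int, int] = {}
    for i, j in cliff_pairs:
        counts[i] = counts.get(i, 0) + 1
        counts[j] = counts.get(j, 0) + 1
    return {c for c, n in counts.items() if n >= min_cliffs}


def rmse(y_true: Sequence[float], y_pred: Sequence[float], idx: Sequence[int]) -> float:
    """Root-mean-square error restricted to the compounds in `idx`."""
    if not idx:
        return float("nan")
    return math.sqrt(sum((y_true[i] - y_pred[i]) ** 2 for i in idx) / len(idx))


def split_rmse(y_true: Sequence[float], y_pred: Sequence[float], cliffy: Set[int]) -> Dict[str, float]:
    """Report RMSE separately for cliffy and non-cliffy compounds."""
    everyone = range(len(y_true))
    return {
        "cliffy": rmse(y_true, y_pred, [i for i in everyone if i in cliffy]),
        "non_cliffy": rmse(y_true, y_pred, [i for i in everyone if i not in cliffy]),
    }
```

A large gap between the two values suggests that the model's errors are concentrated on SAR discontinuities rather than spread evenly across the dataset.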

Q: How do I choose appropriate similarity thresholds for reliable activity cliff detection?

A: Similarity thresholds depend on your molecular representation and research goals:

- For fingerprint-based approaches (e.g., Tanimoto similarity on ECFP4), no universal threshold exists, but values typically range from 0.7-0.9 for stringent cliff identification [9]

- For matched molecular pairs (MMPs), which identify pairs differing at only a single site, no similarity threshold is needed as the method inherently identifies structural analogs [9]

- Consider using consensus activity cliffs that are identified across multiple representation methods to minimize representation bias [9]

Q: What are the limitations of 3D activity landscape visualizations for SAR analysis?

A: Key limitations include:

- Projection artifacts: 2D projection of high-dimensional chemical space may distort true molecular relationships [16]

- Interpolation uncertainty: Sparse data regions have less reliable surface interpolation [17]

- Scale dependence: Landscape topography changes with similarity metrics, descriptors, and projection methods [6]

- Subjective interpretation: Without quantitative measures, different researchers may draw different conclusions from the same landscape [16]

FAQ: Technical Challenges and Solutions

Q: My dataset contains compounds from multiple structural classes, resulting in a fragmented landscape. How can I improve visualization and analysis?

A: For heterogeneous datasets:

- Apply network-like similarity graphs (NSGs) instead of continuous landscapes [7] [17]. NSGs represent compounds as nodes connected by edges when similarity exceeds a threshold, naturally handling disparate structural classes [17]

- Use potency-based coloring (e.g., green-to-red gradient) and node sizing to represent activity and SAR significance [17]

- Implement local SALI analysis to identify cliffs within structural neighborhoods rather than globally [6]
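A network-like similarity graph of the kind described above can be built with a simple threshold on pairwise similarity. The sketch below is a simplified illustration rather than the published NSG method: it assumes fingerprints stored as sets of on-bit indices, and the `threshold` default is hypothetical. Connected components then correspond to structural neighborhoods within which local cliff analysis can be run.

```python
from typing import Dict, List, Sequence, Set


def tanimoto(a, b) -> float:
    """Tanimoto similarity of two on-bit sets."""
    union = len(a | b)
    return len(a & b) / union if union else 1.0


def similarity_graph(fps: Sequence[Set[int]], threshold: float = 0.7) -> Dict[int, Set[int]]:
    """Adjacency map: compounds are nodes, edges join pairs at or above the threshold."""
    adj: Dict[int, Set[int]] = {i: set() for i in range(len(fps))}
    for i in range(len(fps)):
        for j in range(i + 1, len(fps)):
            if tanimoto(fps[i], fps[j]) >= threshold:
                adj[i].add(j)
                adj[j].add(i)
    return adj


def connected_components(adj: Dict[int, Set[int]]) -> List[Set[int]]:
    """Group nodes into structural neighbourhoods via depth-first search."""
    seen: Set[int] = set()
    components: List[Set[int]] = []
    for start in adj:
        if start in seen:
            continue
        stack, comp = [start], set()
        while stack:
            node = stack.pop()
            if node in comp:
                continue
            comp.add(node)
            stack.extend(adj[node] - comp)
        seen |= comp
        components.append(comp)
    return components
```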

Q: How can I distinguish true activity cliffs from experimental noise or measurement artifacts?

A: Implement these validation steps:

- Dose-response verification: For potential cliffs, check if both compounds have full dose-response curves rather than single-point measurements [6]

- Contextual analysis: Identify if compounds participate in multiple overlapping cliffs - true SAR determinants typically affect multiple pairs [9]

- Triangulation: Look for coordinated activity cliffs where multiple similar compounds show consistent potency trends, reducing the likelihood of artifact [9]

Q: What strategies can improve QSAR model performance in regions of high SAR discontinuity?

A: When activity cliffs cannot be avoided:

- Incorporate pairwise information: Use models that explicitly consider compound pairs rather than individual molecules [15] [1]

- Employ graph neural networks: Recent studies show graph isomorphism networks (GINs) can be competitive with or superior to classical fingerprints for AC prediction tasks [15] [1]

- Define a domain of applicability: Use similarity to the training set to flag regions where the model extrapolates unreliably [18]

- Leverage one-shot learning: When activity of one cliff compound is known, sensitivity for predicting the other significantly improves [15] [1]
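One simple way to approximate a domain of applicability is to check each query compound's highest similarity to the training set. The sketch below assumes set-based fingerprints and a hypothetical cutoff `min_similarity`; a suitable value depends on the fingerprint and the dataset and is not specified in the source.

```python
from typing import List, Sequence, Set


def tanimoto(a, b) -> float:
    """Tanimoto similarity of two on-bit sets."""
    union = len(a | b)
    return len(a & b) / union if union else 1.0


def nearest_training_similarity(fp: Set[int], train_fps: Sequence[Set[int]]) -> float:
    """Highest similarity between a query compound and any training compound."""
    return max((tanimoto(fp, t) for t in train_fps), default=0.0)


def flag_out_of_domain(
    query_fps: Sequence[Set[int]],
    train_fps: Sequence[Set[int]],
    min_similarity: float = 0.35,  # hypothetical cutoff
) -> List[int]:
    """Indices of query compounds whose nearest training neighbour is too dissimilar."""
    return [
        i
        for i, fp in enumerate(query_fps)
        if nearest_training_similarity(fp, train_fps) < min_similarity
    ]
```

Predictions for flagged compounds can then be reported with a warning or excluded from performance summaries.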

Essential Methodologies for SAR Landscape Analysis

Quantitative Measures for SAR Characterization

Table 1: Key Numerical Indices for SAR Landscape Analysis

| Index Name | Formula | Application | Interpretation |

|---|---|---|---|

| Structure-Activity Landscape Index (SALI) [14] [6] | `SALI(i,j) = \|Ai - Aj\| / (1 - sim(i,j))` | Quantifies the magnitude of activity cliffs between compound pairs | Higher values indicate more significant activity cliffs; undefined for identical compounds (sim = 1) |

| Structure-Activity Relationship Index (SARI) [14] | `SARI = 0.5 × (score_cont + (1 - score_disc))` | Characterizes overall SAR continuity and discontinuity in a dataset | Values closer to 1 indicate higher SAR continuity; values closer to 0 indicate higher discontinuity |

| SAR Network Connectivity [9] | Network density and hub identification | Identifies compounds involved in multiple cliffs (AC generators) | Highly connected nodes represent SAR determinants with strong structural influence |

Experimental Protocol: SALI Network Analysis

This protocol enables systematic identification and visualization of activity cliffs in compound datasets.

Step 1: Data Preparation and Standardization

- Gather compounds with consistent potency measurements (preferably Ki or Kd values)

- Standardize molecular structures: remove salts, neutralize charges, generate canonical tautomers

- Calculate molecular descriptors/fingerprints (ECFP4 recommended)

Step 2: Similarity and Potency Difference Matrix Calculation

- Compute pairwise Tanimoto similarity using ECFP4 fingerprints

- Calculate pairwise absolute potency differences in logarithmic units (pIC50, pKi)

- Apply similarity threshold (typically 0.7-0.9) to focus on structurally similar pairs

Step 3: SALI Calculation and Cliff Identification

- Compute SALI values for all pairs passing similarity threshold

- Apply potency difference threshold (typically 100-fold or 2 log units)

- Identify activity cliff pairs as those exceeding both similarity and potency difference thresholds

Step 4: Network Visualization and Analysis

- Construct network with compounds as nodes and significant cliffs as edges

- Implement potency-based node coloring (green→yellow→red gradient)

- Size nodes by connectivity (number of cliffs participated in)

- Use graph layout algorithms to position structurally similar compounds close together
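Steps 2 to 4 of this protocol can be sketched without a cheminformatics toolkit if fingerprints are available as sets of on-bit indices (an assumption of this example; in practice they would come from a tool such as RDKit). The default thresholds reuse the ranges quoted in the protocol, and `eps` is a hypothetical guard against division by zero for identical fingerprints.

```python
from itertools import combinations
from typing import List, Sequence, Set, Tuple


def tanimoto(a, b) -> float:
    """Tanimoto similarity of two on-bit sets."""
    union = len(a | b)
    return len(a & b) / union if union else 1.0


def find_cliffs(
    fps: Sequence[Set[int]],
    potencies: Sequence[float],  # logarithmic units, e.g. pKi
    sim_threshold: float = 0.7,
    diff_threshold: float = 2.0,
    eps: float = 1e-6,
) -> List[Tuple[int, int, float]]:
    """Return (i, j, SALI) for pairs passing both thresholds, strongest cliff first."""
    cliffs = []
    for i, j in combinations(range(len(fps)), 2):
        sim = tanimoto(fps[i], fps[j])
        diff = abs(potencies[i] - potencies[j])
        if sim >= sim_threshold and diff >= diff_threshold:
            cliffs.append((i, j, diff / max(1.0 - sim, eps)))
    return sorted(cliffs, key=lambda c: c[2], reverse=True)


def node_connectivity(cliffs: Sequence[Tuple[int, int, float]], n_compounds: int) -> List[int]:
    """Number of cliffs each compound participates in (used for node sizing)."""
    degree = [0] * n_compounds
    for i, j, _ in cliffs:
        degree[i] += 1
        degree[j] += 1
    return degree
```

The resulting edge list and degree vector can be handed to any graph library for layout and coloring.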

Experimental Protocol: 3D Activity Landscape Generation

Step 1: Chemical Space Projection

- Compute ECFP4 fingerprints for all compounds

- Calculate pairwise Tanimoto distance matrix (1 - Tanimoto similarity)

- Apply Multi-Dimensional Scaling (MDS) or Neuroscale to project to 2D coordinates

- Validate projection quality by stress function or Shepard plot
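The projection-quality check can be illustrated with a normalized stress value that compares the original Tanimoto distances with the distances between projected points. Normalization conventions differ between tools; the variant below, which divides by the sum of squared original distances, is one common choice and an assumption of this sketch.

```python
import math
from itertools import combinations
from typing import Sequence


def normalized_stress(
    dist: Sequence[Sequence[float]],
    coords: Sequence[Sequence[float]],
) -> float:
    """sqrt( sum (d_ij - d'_ij)^2 / sum d_ij^2 ) over all pairs i < j.

    d_ij  : original distance (e.g. 1 - Tanimoto similarity)
    d'_ij : Euclidean distance between the projected points
    """
    num = den = 0.0
    for i, j in combinations(range(len(coords)), 2):
        projected = math.dist(coords[i], coords[j])
        num += (dist[i][j] - projected) ** 2
        den += dist[i][j] ** 2
    return math.sqrt(num / den) if den else 0.0
```

Values near 0 indicate that the 2D map preserves the original distances well; larger values suggest that apparent neighborhoods in the landscape may be projection artifacts.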

Step 2: Activity Surface Interpolation

- Assign compound potency values as z-coordinates

- Implement Gaussian Process Regression (GPR) to interpolate continuous surface

- Set appropriate kernel function and hyperparameters for smoothness
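To make the interpolation step concrete, the following is a minimal Gaussian process regression sketch in plain Python that returns only the posterior mean. It assumes an RBF kernel, a constant prior mean equal to the average potency, and hypothetical defaults for `length_scale` and `noise`. A production workflow would more likely use an established library, which also provides uncertainty estimates and hyperparameter optimization.

```python
import math
from typing import Callable, List, Sequence


def rbf(a: Sequence[float], b: Sequence[float], length_scale: float) -> float:
    """Radial basis function (squared-exponential) kernel."""
    sq = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-0.5 * sq / length_scale ** 2)


def solve(matrix: List[List[float]], rhs: List[float]) -> List[float]:
    """Solve a linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def gpr_fit(
    coords: Sequence[Sequence[float]],  # 2D projected coordinates
    potencies: Sequence[float],         # z-values, e.g. pKi
    length_scale: float = 1.0,
    noise: float = 1e-3,                # noise variance added to the diagonal
) -> Callable[[Sequence[float]], float]:
    """Return a function giving the GP posterior mean at any 2D point."""
    mean = sum(potencies) / len(potencies)
    n = len(coords)
    kernel = [
        [rbf(coords[i], coords[j], length_scale) + (noise if i == j else 0.0) for j in range(n)]
        for i in range(n)
    ]
    alpha = solve(kernel, [p - mean for p in potencies])

    def predict(point: Sequence[float]) -> float:
        return mean + sum(a * rbf(point, c, length_scale) for a, c in zip(alpha, coords))

    return predict
```

Evaluating `predict` over a regular grid of 2D points yields the surface to plot. A shorter `length_scale` produces a more rugged surface, and a larger `noise` produces a smoother one.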

Step 3: Visualization and Interpretation

- Create 3D surface plot with potency-based color coding

- Overlay actual compound positions as markers

- Identify rugged regions (potential activity cliffs) and smooth regions (SAR continuity)

- Generate views from multiple perspectives for comprehensive analysis

Research Reagent Solutions: Computational Tools for SAR Visualization

Table 2: Essential Computational Tools for Activity Landscape Analysis

| Tool Category | Specific Implementation | Function in SAR Visualization | Key Features |

|---|---|---|---|

| Molecular Descriptors | ECFP4 fingerprints [16] | Molecular structure representation for similarity calculation | Topological atom environments; 1024-bit folded representation; Tanimoto similarity |

| Chemical Space Projection | Multi-Dimensional Scaling (MDS) [16] | Dimension reduction for landscape creation | Preserves pairwise distances; deterministic results |

| Chemical Space Projection | Neuroscale (RBF network) [16] | Alternative projection method | Smooth nonlinear projection; generalizes to new points |

| Surface Modeling | Gaussian Process Regression (GPR) [16] | Activity surface interpolation from sparse data | Provides uncertainty estimates; flexible kernel functions |

| Network Analysis | SALI Network Visualization [14] [6] | Graph-based cliff analysis | Interactive thresholding; directed edges (potency flow) |

| Landscape Quantification | SALI Calculator [14] [6] | Numerical cliff identification | Pairwise analysis; integration with similarity metrics |

| Landscape Quantification | SARI Implementation [14] | Global SAR characterization | Continuity/discontinuity scoring; dataset-level assessment |

Visual Workflows for SAR Landscape Analysis

Activity Cliff Identification Workflow

3D Activity Landscape Generation Process

QSAR Modeling with Activity Cliff Awareness

Advanced 3D-QSAR Frameworks for Modeling Complex SAR Landscapes

Leveraging 3D Shape and Electrostatic Similarity as Robust Descriptors

Frequently Asked Questions (FAQs)

Q1: Why does my 3D-QSAR model show high internal accuracy but fail to predict new compound activities accurately? This pattern often points to activity cliffs (ACs), incorrect molecular alignment, or both. ACs are pairs of structurally similar compounds that exhibit a large difference in binding affinity, creating discontinuities in the structure-activity relationship (SAR) landscape that are difficult for QSAR models to learn [15] [14]. If your model was not validated on a sufficient number of ACs, or if the molecular alignments were inadvertently tweaked based on activity data, its performance on new compounds will likely be poor [2] [19]. To resolve this, fix your alignment before running the QSAR model and validate the model on a test set rich in ACs [2] [9].

Q2: What is the most critical step to ensure a robust 3D-QSAR model? The most critical step is achieving a correct and consistent alignment of your molecule set. In 3D-QSAR, unlike 2D methods, the alignment of molecules provides the majority of the signal. An incorrect alignment introduces noise that can render the model invalid [2]. The alignment must be performed blind to the activity data to avoid introducing bias and over-optimistic performance metrics [2].

Q3: How can I visually identify regions in the binding site that favor specific interactions using my 3D-QSAR model? Modern 3D-QSAR methodologies that use field-based descriptors can provide visual interpretation of the model. The model can highlight favorable spatial regions for specific chemical features, such as H-bond acceptors (magenta) or donors (yellow), within the binding site. These interpretable pictures can inspire novel ideas for hit optimization by suggesting where to add or modify functional groups [20] [21].

Q4: My dataset contains activity cliffs. Should I remove these compounds to build a smoother model? No, removing activity cliffs is not recommended. While ACs pose a challenge for prediction, they contain rich SAR information that is highly valuable for understanding key structural modifications that drastically impact potency [15] [9]. Instead of removing them, ensure your model validation strategy explicitly tests the model's ability to predict these cliff-forming compounds. Knowledge of ACs can be powerful for escaping flat regions in the SAR landscape during lead optimization [15] [14].

Q5: What is the advantage of a consensus 3D-QSAR model? A consensus model, which combines predictions from multiple individual models using different similarity descriptors and machine learning techniques, is more robust than a single model. This approach helps average out individual model variances and provides a more reliable final prediction [21]. Furthermore, some implementations provide a confidence estimate for each prediction, helping you identify which compounds fall within the model's domain of applicability [20] [21].
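As a rough illustration of the consensus idea, predictions from several models can be averaged, with their spread used as a crude indicator of agreement. This is a generic sketch and not the confidence estimate used by any particular software package; the function name is hypothetical.

```python
import statistics
from typing import Sequence, Tuple


def consensus_prediction(predictions: Sequence[float]) -> Tuple[float, float]:
    """Average the per-model predictions for one compound.

    Returns (mean, spread), where spread is the population standard deviation
    across models. A large spread signals disagreement between models.
    """
    mean = statistics.fmean(predictions)
    spread = statistics.pstdev(predictions) if len(predictions) > 1 else 0.0
    return mean, spread
```

Compounds with a large spread are candidates for closer inspection, since model disagreement often coincides with regions of SAR discontinuity or sparse training data.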

Troubleshooting Guides

Issue: Poor Model Predictive Power on External Test Sets

Problem Your 3D-QSAR model demonstrates satisfactory performance during cross-validation (e.g., high q²) but performs poorly when predicting the activity of a new, external test set.

Diagnosis and Solutions

| Potential Cause | Diagnostic Steps | Corrective Actions |

|---|---|---|

| Incorrect Molecular Alignment [2] | Visually inspect alignments, especially for poorly predicted compounds. Check if inactive compounds are systematically aligned differently from actives. | 1. Define a robust alignment rule: Use a field- and shape-guided method or substructure alignment on a common core. 2. Use multiple references: Identify 3-4 representative molecules to constrain the alignment of the entire set. 3. Fix alignments before any modeling: Do not adjust alignments after seeing QSAR results. |

| High Prevalence of Activity Cliffs (ACs) [15] | Calculate the Structure-Activity Landscape Index (SALI) for compound pairs: SALI = \|Activity_i - Activity_j\| / (1 - Similarity_i,j). High SALI values indicate ACs. | 1. Do not remove ACs: They are key SAR information. 2. Validate on ACs explicitly: Ensure your test set contains a representative proportion of cliff-forming compounds. 3. Use consensus models: They can be more robust to SAR discontinuities [21]. |

| Inadequate Model Validation [19] | Check if the same data was used for descriptor selection, model training, and validation. | 1. Use Double Cross-Validation: Employ a nested loop where an inner loop performs model selection and an outer loop provides an unbiased error estimate. 2. Use a true external test set: A set of compounds completely withheld from the entire model development process. |
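The double cross-validation recommended in the table can be sketched as two nested loops. To keep the example self-contained, a toy one-dimensional k-nearest-neighbour regressor stands in for the QSAR model, and the number of neighbours is the hyperparameter being selected; all function names and defaults are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def k_folds(indices: Sequence[int], k: int, seed: int) -> List[List[int]]:
    """Shuffle the indices and deal them into k folds."""
    idx = list(indices)
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def knn_predict(train_x, train_y, test_x, k: int) -> List[float]:
    """Toy 1-D k-nearest-neighbour regressor standing in for a QSAR model."""
    preds = []
    for x in test_x:
        nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
        preds.append(sum(train_y[i] for i in nearest) / len(nearest))
    return preds


def evaluate(x, y, train: List[int], test: List[int], k: int) -> float:
    preds = knn_predict([x[i] for i in train], [y[i] for i in train], [x[i] for i in test], k)
    return mse([y[i] for i in test], preds)


def cv_error(x, y, indices: Sequence[int], k: int, n_folds: int, seed: int) -> float:
    """Plain cross-validation error on `indices` for a fixed hyperparameter k."""
    folds = k_folds(indices, n_folds, seed)
    errors = []
    for f, test in enumerate(folds):
        train = [i for g, fold in enumerate(folds) if g != f for i in fold]
        errors.append(evaluate(x, y, train, test, k))
    return sum(errors) / len(errors)


def double_cross_validation(
    x, y, candidates=(1, 3, 5), n_outer: int = 5, n_inner: int = 4, seed: int = 0
) -> Tuple[float, List[int]]:
    """Outer loop estimates error; inner loop selects k using training data only."""
    outer_folds = k_folds(range(len(x)), n_outer, seed)
    outer_errors, chosen = [], []
    for f, test in enumerate(outer_folds):
        train = [i for g, fold in enumerate(outer_folds) if g != f for i in fold]
        best_k = min(candidates, key=lambda k: cv_error(x, y, train, k, n_inner, seed + 1))
        outer_errors.append(evaluate(x, y, train, test, best_k))
        chosen.append(best_k)
    return sum(outer_errors) / len(outer_errors), chosen
```

The key property is that the outer test fold never influences hyperparameter selection, so the outer error is not biased by model selection.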

Issue: Handling Activity Cliffs in SAR Analysis

Problem You have identified pairs of highly similar molecules with large potency differences, and your current models cannot rationalize or predict these cliffs.

Diagnosis and Solutions

| Potential Cause | Diagnostic Steps | Corrective Actions |

|---|---|---|

| 2D Descriptors are Insufficient | Compare 2D structural similarity (e.g., ECFP fingerprints) with 3D shape and electrostatic similarity for the cliff pair. | Switch to 3D Descriptors: Use 3D-QSAR descriptors derived from molecular shape and electrostatic potentials, which are more sensitive to the subtle changes that cause cliffs [20] [21]. |

| Involvement in Multiple Cliffs | Represent the data as an Activity Cliff Network, where nodes are compounds and edges are significant SALI values. | Analyze AC Networks: Identify clusters and hubs (highly potent compounds connected to many less potent ones). These hubs are rich sources of SAR information and should be the focus of analysis [9]. |

| Limitation of Single-Site Modification View | Check if the cliff pair is a Matched Molecular Pair (MMP), differing at only a single site. | Expand to Analog Series: Systematically enumerate and analyze analog pairs with single or multiple substitution sites from the same series to capture a broader context of the SAR [9]. |

Experimental Protocols

Protocol 1: Robust Molecular Alignment for 3D-QSAR

Objective: To generate a consistent and unbiased 3D alignment for a set of analogues for use in 3D-QSAR modeling.

Materials:

- Software: A molecular modeling package with field-based alignment capabilities (e.g., OpenEye Orion, Cresset Forge).

- Compounds: A curated set of molecules with known activities.

Methodology:

- Identify Reference Molecule(s): Select a molecule that is representative of the data set. If possible, use a compound with a known bioactive conformation from a protein crystal structure.

- Initial Alignment: Align the entire dataset to the initial reference molecule using a field- and shape-guided method.

- Visual Inspection and Refinement: Manually inspect the alignments. Pay special attention to molecules with substituents that go into regions not covered by the initial reference.

- For any poorly aligned molecules, pick a good example and manually adjust its alignment to a chemically reasonable conformation. Promote this molecule to an additional reference.

- Re-align with Multiple References: Re-align the entire dataset using all reference molecules (e.g., using a substructure alignment algorithm with a 'Maximum' scoring mode).

- Iterate: Repeat the visual inspection and re-alignment steps until a chemically sensible alignment is achieved for all molecules in the set.

- Crucial Step: Freeze the alignments. Do not modify them after this point. The activity data must not influence the alignment process [2].


Protocol 2: Identifying and Analyzing Activity Cliffs

Objective: To systematically identify and analyze activity cliffs within a dataset to understand SAR discontinuities.

Materials:

- Dataset: Compounds with standardized structures and consistent potency measurements (e.g., Ki, IC50).

- Software: Cheminformatics toolkit (e.g., RDKit, Python libraries) for calculating descriptors and similarities.

Methodology:

- Data Preparation: Ensure activities are in a common unit (e.g., nM) and convert to a logarithmic scale (e.g., pKi, pIC50).

- Calculate Molecular Similarity: Calculate the pairwise structural similarity for all compounds in the dataset. The Tanimoto coefficient (Tc) based on 2D fingerprints (e.g., ECFP4) is commonly used [9].

- Calculate Potency Difference: Calculate the absolute difference in activity for all compound pairs: ΔActivity = |Activity_i - Activity_j|.

- Identify Activity Cliffs: Apply the Structure-Activity Landscape Index (SALI) [14]:

- For each compound pair (i, j), calculate SALI_i,j = |Activity_i - Activity_j| / (1 - Similarity_i,j).

- Pairs with a high SALI value are activity cliffs. A common threshold is a potency difference of at least 100-fold (e.g., 2 log units in pKi) and high structural similarity (e.g., Tc ≥ 0.85) [9].

- Visualize with a SALI Network:

- Create a network where nodes represent compounds and edges connect pairs with a SALI value above your threshold.

- Direct edges from less active to more active compounds.

- The resulting network will reveal clusters and hub compounds (highly active compounds connected to many less active analogs), which are key for SAR analysis [14].
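The protocol above can be sketched end to end in plain Python. The example assumes potencies are supplied in nM and fingerprints as sets of on-bit indices. For simplicity it selects edges with the similarity and potency-difference criteria quoted in the protocol rather than a raw SALI cutoff, and `min_in_degree` is a hypothetical parameter for calling a compound a hub.

```python
import math
from typing import Dict, List, Sequence, Set, Tuple


def p_activity_from_nm(value_nm: float) -> float:
    """Convert an IC50 or Ki in nM to pIC50 or pKi, i.e. -log10(value in mol/L)."""
    return 9.0 - math.log10(value_nm)


def tanimoto(a, b) -> float:
    """Tanimoto similarity of two on-bit sets."""
    union = len(a | b)
    return len(a & b) / union if union else 1.0


def directed_cliff_edges(
    fps: Sequence[Set[int]],
    p_acts: Sequence[float],
    min_sim: float = 0.85,
    min_diff: float = 2.0,
) -> List[Tuple[int, int]]:
    """Edges (less_active, more_active) for pairs meeting both cliff criteria."""
    edges = []
    for i in range(len(fps)):
        for j in range(i + 1, len(fps)):
            if tanimoto(fps[i], fps[j]) >= min_sim and abs(p_acts[i] - p_acts[j]) >= min_diff:
                low, high = (i, j) if p_acts[i] < p_acts[j] else (j, i)
                edges.append((low, high))
    return edges


def hub_compounds(edges: Sequence[Tuple[int, int]], min_in_degree: int = 2) -> List[int]:
    """More-active compounds pointed to by at least `min_in_degree` less-active analogs."""
    in_degree: Dict[int, int] = {}
    for _, high in edges:
        in_degree[high] = in_degree.get(high, 0) + 1
    return sorted(node for node, deg in in_degree.items() if deg >= min_in_degree)
```

Because edges point from the less active to the more active compound, hubs with many incoming edges are the highly potent compounds surrounded by weaker analogs.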


The Scientist's Toolkit: Key Research Reagents & Software

| Category | Item | Function in Research |

|---|---|---|

| Software & Platforms | OpenEye Orion | Provides a 3D-QSAR implementation that uses shape and electrostatic descriptors from ROCS and EON as a consensus model, offering prediction confidence estimates [20] [21]. |

| Software & Platforms | Cresset Software Suite | Offers field-based tools for molecular alignment and 3D-QSAR, emphasizing the critical role of alignment in model success [2]. |

| Software & Platforms | PyL3dMD | An open-source Python package for calculating over 2000 3D molecular descriptors directly from molecular dynamics trajectories, enabling the incorporation of conformational flexibility [22]. |

| Molecular Descriptors | 3D Shape & Electrostatics | Core descriptors for modern 3D-QSAR, derived from molecular fields. They capture the 3D pharmacophore and steric/electronic features critical for binding [20] [21]. |

| Molecular Descriptors | WHIM Descriptors | Weighted Holistic Invariant Molecular descriptors capture 3D information about molecular size, shape, symmetry, and atom distribution in an invariant reference frame [22]. |

| Molecular Descriptors | GETAWAY Descriptors | Geometry, Topology, and Atom-Weights Assembly descriptors combine structural and electronic information to characterize molecular interactions [22]. |

| Validation Techniques | Double Cross-Validation | A nested validation method where an inner loop performs model selection and an outer loop provides an unbiased estimate of prediction error, crucial for reliable error estimation under model uncertainty [19]. |

| SALI Networks | A network-based visualization tool for activity cliffs, allowing researchers to quickly "zoom in" on the most significant SAR discontinuities and identify hub compounds [14]. |

Frequently Asked Questions (FAQs)

Q1: What is the primary advantage of using Graph Neural Networks over traditional machine learning for QSAR?

Graph Neural Networks (GNNs), such as Graph Isomorphism Networks (GINs), offer an "end-to-end" learning architecture that automatically learns concise and informative molecular representations directly from molecular graph structures. Unlike traditional methods that rely on pre-defined molecular descriptors or fingerprints, GNNs can capture complex structural patterns without requiring expert-crafted features, which is particularly beneficial for navigating complex structure-activity relationships (SARs) and activity cliffs [23] [1].

Q2: My GIN model performs well on the training set but generalizes poorly to new data. What could be wrong?

This is a classic sign of overfitting. Key strategies to address this include:

- Hyperparameter Tuning: Systematically optimize hyperparameters like learning rate and dropout. Evidence suggests that these factors are more critical for final performance than the specific GNN architecture chosen [24].

- Appropriate Data Splitting: Ensure your training and test sets are separated by a time split (e.g., using the earliest 80% of compounds for training and the latest 20% for testing) to simulate real-world prediction scenarios and avoid data leakage [25].

- Model Simplification: Consider streamlining your GNN architecture. A simplified gCNN architecture, for instance, has been shown to yield performance improvements on difficult-to-classify test sets [25].
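The time split mentioned above is straightforward to implement once each compound carries a date. The sketch below assumes a hypothetical record layout of `(compound_id, date)` tuples; in a real dataset the date might be a registration or first-publication date.

```python
from datetime import date
from typing import List, Sequence, Tuple

Record = Tuple[str, date]  # hypothetical layout: (compound_id, date)


def time_split(
    records: Sequence[Record], train_fraction: float = 0.8
) -> Tuple[List[Record], List[Record]]:
    """Earliest `train_fraction` of compounds for training, the rest for testing."""
    ordered = sorted(records, key=lambda r: r[1])
    cut = int(len(ordered) * train_fraction)
    return ordered[:cut], ordered[cut:]
```

Unlike a random split, this guarantees that no test compound predates a training compound, which mirrors how a model is used prospectively.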

Q3: How can I interpret the predictions made by a GNN QSAR model, which is often seen as a "black box"?

Saliency maps are a powerful tool for adding explainability. This technique highlights molecular substructures that are most relevant to the model's activity prediction by connecting internal neural network weights back to the input molecular graph. This allows researchers to visualize key substructure-activity relationships, making the model's decisions more transparent and interpretable [25].

Q4: Why does my model fail to predict 'activity cliffs' (ACs), and how can GINs help?

Activity cliffs—pairs of structurally similar compounds with large potency differences—are a major source of prediction error for QSAR models because they defy the traditional similarity principle [1] [9]. While modern QSAR models, including GNNs, often struggle with ACs when the activities of both compounds are unknown, using graph isomorphism features (as in GINs) has been shown to be competitive with or superior to classical molecular representations for AC classification tasks. This makes GINs a strong baseline model for identifying these critical SAR discontinuities [1].

Q5: In practice, when should I use a GIN instead of a classical method like ECFPs with a Random Forest?

The choice depends on the problem context. Classical featurizations like Extended-Connectivity Fingerprints (ECFPs) consistently deliver robust performance for general QSAR prediction and are often faster to train [1] [26]. GINs and other GNNs shine when learning from the inherent graph structure of molecules is paramount, such as when dealing with complex SARs or when you need to generate highly informative, data-driven molecular representations without relying on pre-defined descriptors [23] [1]. Performance evaluations across diverse datasets indicate that no single architecture universally outperforms others, emphasizing the importance of problem-specific tuning [24].

Troubleshooting Guides

Issue 1: Poor Predictive Performance on Activity Cliffs

Problem: Model predictions are inaccurate for pairs of structurally similar molecules that have large differences in potency, leading to high prediction error and misleading SAR analysis.

Diagnosis Steps:

- Identify Activity Cliffs: Systematically identify activity cliffs in your dataset. A common definition is a pair of compounds with a high structural similarity (e.g., Tanimoto coefficient > 0.85 based on ECFP4 fingerprints) but a large potency difference (e.g., at least a 100-fold difference in activity) [9].

- Evaluate AC-Specific Performance: Isolate these AC pairs in a dedicated test set and evaluate your model's sensitivity specifically on them, comparing it to the model's overall performance [1].

Solutions:

- Leverage GIN Representations: Implement a model using Graph Isomorphism Networks (GINs), which have demonstrated strong baseline performance for AC-prediction by learning more expressive molecular representations [1] [26].

- Incorporate Pairwise Information: For critical AC-prediction tasks, consider moving beyond single-molecule prediction. Develop a twin neural network model that takes pairs of molecules as input, explicitly learning the relationship between them to better capture the cliff phenomenon [26].

- Data Augmentation: If available, incorporate known AC pairs into your training process to help the model learn these discontinuous relationships.

Issue 2: Suboptimal GNN Architecture and Training

Problem: The GNN model fails to converge, is unstable during training, or delivers subpar accuracy compared to simple baseline models.

Diagnosis Steps:

- Establish a Baseline: First, train a classical model (e.g., Random Forest on ECFPs) to establish a performance baseline [25].

- Check Hyperparameters: Review your learning rate, network depth (number of message-passing layers), and hidden layer dimensions. Suboptimal settings in these areas are a common cause of poor performance [24].

Solutions:

- Prioritize Hyperparameter Optimization: Direct modeling efforts towards a systematic hyperparameter search. A study on GSK internal datasets concluded that hyperparameters like learning rate and dropout are crucial and can have a greater impact than the choice of GNN architecture itself [24].

- Simplify the Architecture: Avoid unnecessarily complex models. A streamlined gCNN architecture, optimized across hundreds of protein targets, can often yield more robust and more accurate predictions than a heavily customized one [25].

- Use Advanced Aggregators: In architectures like GraphSAGE, experiment with different neighborhood aggregator functions (e.g., mean, LSTM, or pooling aggregators) to improve the inductive learning capabilities and stability of your model [27].
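A systematic hyperparameter search, as recommended in the first solution above, can be as simple as a seeded random search. The sketch below is illustrative only: the search space values are assumptions rather than recommendations from the cited studies, and `placeholder_objective` is a hypothetical stand-in for a real routine that trains the GNN with a given configuration and returns a validation error.

```python
import random

# Assumed search space; adjust the candidate values to your own setup.
SEARCH_SPACE = {
    "learning_rate": [1e-4, 3e-4, 1e-3, 3e-3],
    "dropout": [0.0, 0.1, 0.2, 0.4],
    "num_layers": [2, 3, 4, 5],      # number of message-passing layers
    "hidden_dim": [64, 128, 256],
}


def sample_config(rng):
    """Draw one configuration uniformly from the search space."""
    return {name: rng.choice(values) for name, values in SEARCH_SPACE.items()}


def random_search(train_and_score, n_trials=20, seed=0):
    """Run n_trials random configurations and keep the lowest validation error.

    train_and_score(config) must return a number where lower is better.
    """
    rng = random.Random(seed)
    best_config, best_score = None, float("inf")
    for _ in range(n_trials):
        config = sample_config(rng)
        score = train_and_score(config)
        if score < best_score:
            best_config, best_score = config, score
    return best_config, best_score


def placeholder_objective(config):
    """Hypothetical stand-in for 'train the GNN, return validation RMSE'."""
    return (
        abs(config["learning_rate"] - 1e-3) * 100
        + abs(config["dropout"] - 0.2)
        + 0.01 * abs(config["num_layers"] - 3)
    )


best_config, best_score = random_search(placeholder_objective, n_trials=50)
print(best_config, round(best_score, 4))
```

Fixing the seed keeps the search reproducible, which makes it easier to compare the tuned GNN fairly against the classical baseline.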

Experimental Protocol: Benchmarking GINs for QSAR and Activity-Cliff Prediction

Objective: To systematically evaluate the performance of Graph Isomorphism Networks (GINs) against classical molecular representation methods for standard QSAR prediction and the specific task of activity-cliff classification.

Methodology:

- Data Curation:

- Select benchmark datasets from public repositories like ChEMBL for specific targets (e.g., dopamine receptor D2, factor Xa) [1].

- Standardize molecular structures (e.g., using RDKit): desalt, remove solvents, and eliminate duplicates [1].

- Apply a chronological split where the earliest 80% of data is used for training/validation and the latest 20% is held out for testing. This prevents data leakage and simulates a realistic drug discovery scenario [25].
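The chronological split can be sketched as follows. This is a minimal example under stated assumptions: each record is a dictionary with a `date` field (for instance, the publication or registration date of the measurement), and the field names and toy records are hypothetical.

```python
from datetime import date


def chronological_split(records, train_fraction=0.8):
    """Sort records by date and split so that the test set is strictly the latest data."""
    ordered = sorted(records, key=lambda record: record["date"])
    cut = int(len(ordered) * train_fraction)
    return ordered[:cut], ordered[cut:]


# Toy records (assumed values, for illustration only).
records = [
    {"smiles": "CCO", "p_activity": 5.1, "date": date(2018, 6, 1)},
    {"smiles": "CCN", "p_activity": 6.3, "date": date(2012, 1, 15)},
    {"smiles": "CCC", "p_activity": 4.8, "date": date(2020, 9, 30)},
    {"smiles": "CCCl", "p_activity": 7.0, "date": date(2015, 3, 12)},
    {"smiles": "CCBr", "p_activity": 6.1, "date": date(2016, 11, 5)},
]

train_val, test = chronological_split(records)
print(len(train_val), "training/validation records;", len(test), "test records")
```

Because every test record is at least as recent as every training record, the evaluation mimics predicting compounds that did not yet exist when the model was built.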

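- Molecular Featurization:

- Encode each standardized molecule with every representation under comparison: GIN, ECFP, and PDV [1].

- Model Training and Evaluation: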

- Train multiple models by combining featurization methods (GIN, ECFP, PDV) with regression techniques (Random Forest, Multilayer Perceptron) [1].

- For QSAR prediction, evaluate the root-mean-square error (RMSE) and R² on the test set for the continuous activity prediction of individual molecules.

- For Activity-Cliff classification, use the trained models to predict the activity of each compound in a pair of similar molecules. Classify the pair as an AC if the predicted activity difference exceeds a threshold. Report the sensitivity (true positive rate) for AC detection [1].
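The evaluation steps above can be sketched with plain Python. The function names and the default threshold of 2 log units (a 100-fold difference) are assumptions chosen for illustration. The optional `known` argument mimics the "one activity known" setting from the comparative study, where the measured value of one compound in the pair replaces its prediction.

```python
import math


def rmse(y_true, y_pred):
    """Root-mean-square error between measured and predicted activities."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def ac_sensitivity(true_cliff_pairs, predicted, known=None, threshold=2.0):
    """Fraction of true activity-cliff pairs that the model also calls a cliff.

    true_cliff_pairs: list of (id_a, id_b) pairs that are cliffs experimentally.
    predicted: dict id -> predicted activity (log units).
    known: optional dict id -> measured activity, used instead of the prediction.
    """
    known = known or {}
    hits = 0
    for id_a, id_b in true_cliff_pairs:
        act_a = known.get(id_a, predicted[id_a])
        act_b = known.get(id_b, predicted[id_b])
        if abs(act_a - act_b) > threshold:
            hits += 1
    return hits / len(true_cliff_pairs)


# Toy values (assumed, for illustration only).
cliff_pairs = [("a", "b"), ("c", "d")]
predictions = {"a": 8.0, "b": 5.5, "c": 7.0, "d": 6.5}
print(ac_sensitivity(cliff_pairs, predictions))
print(ac_sensitivity(cliff_pairs, predictions, known={"c": 9.0}))
```

Reporting sensitivity next to RMSE and R² shows whether a model with good overall accuracy still smooths over the cliff pairs.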

Table 1: Key Performance Metrics from a Comparative Study on Activity-Cliff Prediction [1]

| Molecular Representation | Regression Technique | AC Sensitivity (Activities Unknown) | AC Sensitivity (One Activity Known) | General QSAR Performance |
|---|---|---|---|---|
| Graph Isomorphism Network (GIN) | Multilayer Perceptron | Low | Substantially Increased | Competitive, can be superior |
| Extended-Connectivity Fingerprints (ECFP) | Random Forest | Low | Substantially Increased | Consistently Good |
| Physicochemical-Descriptor Vectors (PDV) | k-Nearest Neighbours | Low | Substantially Increased | Variable |

Workflow Diagram: GIN-based QSAR with Saliency Mapping (GIN QSAR workflow with interpretation; figure not reproduced)

The Scientist's Toolkit: Essential Research Reagents & Software

Table 2: Key Software and Computational Tools for GNN-based QSAR

| Tool Name | Type / Category | Primary Function in GNN-QSAR | Key Feature / Note |
|---|---|---|---|
| RDKit | Cheminformatics Library | Converts SMILES strings to molecular graph objects; calculates classical descriptors. | Open-source foundation for data preprocessing and model prototyping [25]. |
| PyTorch Geometric | Deep Learning Library | Provides pre-built GNN layers (e.g., GINConv) and graph data utilities. | Simplifies the implementation and training of GNN models in PyTorch [27]. |
| DeepChem | Deep Learning Library | Offers end-to-end tools for molecular ML, including GraphConv models and datasets. | A comprehensive ecosystem for drug discovery and quantum chemistry [25]. |
| MOE (Molecular Operating Environment) | Commercial Software Platform | Integrated suite for molecular modeling, cheminformatics, and QSAR modeling. | Supports classical QSAR workflows and structure-based design [28]. |
| StarDrop | Commercial Software Platform | Platform for small molecule design and optimization with QSAR and ADMET prediction. | Features AI-guided lead optimization and sensitivity analysis [28]. |
| DataWarrior | Open-Source Program | Combines chemical intelligence, data visualization, and QSAR model development. | Excellent for interactive data analysis and generating molecular descriptors [28]. |

In the field of computer-aided drug design, structure-activity relationship (SAR) analysis forms the cornerstone of molecular optimization. However, a significant challenge arises from activity cliffs (ACs)—phenomena where minute structural modifications between similar compounds lead to dramatic changes in biological activity [15] [18]. These discontinuities in the SAR landscape consistently challenge traditional quantitative structure-activity relationship (QSAR) models, which often assume smooth transitions in activity with gradual structural changes [15]. Research has demonstrated that standard QSAR models frequently fail to predict activity cliffs, resulting in substantial prediction errors even when employing sophisticated deep learning approaches [15].

The Activity Cliff-Aware Reinforcement Learning (ACARL) framework addresses this challenge directly. By explicitly incorporating activity cliff awareness into de novo molecular design, ACARL is described as the first AI-driven approach to directly target SAR discontinuities [29] [10]. This technical support document provides guidance for researchers implementing the framework, covering common experimental challenges and detailed methodological protocols for deployment in drug discovery pipelines.

FAQ: Understanding ACARL Fundamentals

Q1: What exactly is an "activity cliff" and why does it challenge traditional QSAR models?

A1: Activity cliffs are pairs of structurally similar compounds that exhibit unexpectedly large differences in binding affinity for a given target [15]. For example, the addition of a single hydroxyl group to a molecular scaffold might increase inhibition by nearly three orders of magnitude [15].

Key reasons for QSAR challenges include:

- Violation of similarity principle: Most QSAR models operate on the fundamental principle that structurally similar molecules should have similar activities, which activity cliffs directly contradict [15].

- Statistical underrepresentation: Activity cliff compounds are rare in most datasets, making it difficult for models to learn these discontinuous patterns [10].

- Prediction inconsistencies: Studies show QSAR models generate analogous predictions for structurally similar molecules, which fails precisely for activity cliff compounds [10].

Q2: How does ACARL fundamentally differ from other reinforcement learning approaches in molecular design?

A2: ACARL introduces two novel components that specifically address SAR discontinuities:

- Activity Cliff Index (ACI): A quantitative metric that systematically identifies activity cliffs by comparing structural similarity with differences in biological activity [10]. This index enables the model to detect and prioritize these critical regions in chemical space.

- Contrastive Loss Function: Unlike traditional RL that equally weighs all samples, ACARL employs a tailored contrastive loss that actively prioritizes learning from activity cliff compounds, focusing model optimization on high-impact SAR regions [29] [10].
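To make the idea behind an Activity Cliff Index concrete, the sketch below scores a pair by dividing its activity difference by its structural distance. This ratio is an assumption made for illustration; the text above does not give the exact ACI formula, so the published definition in [10] should be checked before relying on it. Fingerprints are assumed to be sets of on-bit indices, and `min_distance` is a hypothetical guard against division by zero for identical fingerprints.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto coefficient for two fingerprints given as sets of on-bit indices."""
    if not fp_a and not fp_b:
        return 0.0
    shared = len(fp_a & fp_b)
    return shared / (len(fp_a) + len(fp_b) - shared)


def activity_cliff_index(fp_a, fp_b, activity_a, activity_b, min_distance=1e-6):
    """Illustrative cliff score: activity difference per unit of structural distance.

    Structural distance is taken as 1 - Tanimoto similarity (an assumption).
    Large values mean a big potency change for a small structural change.
    """
    distance = max(1.0 - tanimoto(fp_a, fp_b), min_distance)
    return abs(activity_a - activity_b) / distance


# Toy pairs (assumed values): same potency gap, different structural distance.
close_pair = activity_cliff_index({1, 2, 3, 4}, {1, 2, 3, 5}, 9.0, 6.0)
distant_pair = activity_cliff_index({1, 2, 3, 4}, {5, 6, 7, 8}, 9.0, 6.0)
print(close_pair, distant_pair)
```

Under this form, the structurally close pair receives the higher score, which is the behaviour a cliff-aware metric needs in order to rank pairs for prioritized learning.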

Q3: What protein targets has ACARL been validated against, and what were the key results?

A3: Experimental evaluations across multiple biologically relevant protein targets have demonstrated ACARL's superior performance in generating high-affinity molecules compared to state-of-the-art algorithms [29] [10]. The framework has shown particular strength in:

- Generating compounds with improved binding affinity

- Maintaining structural diversity across generated molecules

- Effectively modeling complex SAR patterns seen in real-world drug targets

Table: Key Performance Metrics of ACARL Framework

| Evaluation Metric | Performance Advantage | Significance in Drug Discovery |
|---|---|---|
| Binding Affinity | Superior to state-of-the-art algorithms | Higher potency candidates |
| Structural Diversity | Maintains or improves diversity | Reduces novelty limitations |
| SAR Modeling | Better captures complex activity patterns | More predictive optimization |

Troubleshooting Common Experimental Challenges

Problem 1: Low Sensitivity in Activity Cliff Detection

Symptoms: Model fails to identify known activity cliff compounds; minimal contrastive loss impact during training.

Solutions:

- Verify Molecular Representations: Implement multiple representation methods (ECFPs, graph isomorphism networks, physicochemical-descriptor vectors) to ensure comprehensive cliff detection [15].

- Adjust ACI Thresholds: Systematically modify Activity Cliff Index parameters to optimize for your specific target landscape.

- Incorporate Domain Knowledge: Utilize matched molecular pairs (MMPs) - defined as two compounds differing only at a single substructure site - as a complementary similarity criterion [10].

Problem 2: Training Instability in RL Phase

Symptoms: Erratic policy updates; volatile reward signals; failure to converge.

Solutions:

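- Implement Reward Normalization: Scale docking scores and similarity metrics to compatible value ranges before combining them in the reward. Docking scores approximate the binding free energy, which can be related to experimental inhibition constants through ΔG = RT ln Kᵢ, where R is the universal gas constant and T is the absolute temperature [10].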

- Gradient Clipping: Apply constraints to prevent explosive gradients during contrastive loss optimization.

- Staged Training: Begin with standard RL objectives before gradually introducing the contrastive loss component.
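The free-energy relationship in the first solution can be worked through numerically. The sketch below assumes Kᵢ is expressed in molar units, energies in kcal/mol, and T = 298.15 K. The helper `normalize_reward` and its `worst` and `best` bounds are hypothetical choices for mapping an energy onto a 0 to 1 reward, not part of the ACARL framework.

```python
import math

R_KCAL = 1.987204e-3  # universal gas constant in kcal/(mol*K)


def ki_to_delta_g(ki_molar, temperature_k=298.15):
    """Binding free energy (kcal/mol) from an inhibition constant in mol/L."""
    return R_KCAL * temperature_k * math.log(ki_molar)


def delta_g_to_pki(delta_g, temperature_k=298.15):
    """Invert the relationship: pKi = -log10(Ki) from a free energy in kcal/mol."""
    return -delta_g / (R_KCAL * temperature_k * math.log(10))


def normalize_reward(delta_g, worst=-4.0, best=-14.0):
    """Map an energy onto [0, 1], clipping values outside the assumed bounds."""
    scaled = (worst - delta_g) / (worst - best)
    return min(1.0, max(0.0, scaled))


delta_g_1nm = ki_to_delta_g(1e-9)  # a 1 nM inhibitor
print(round(delta_g_1nm, 2), "kcal/mol")
print(round(delta_g_to_pki(delta_g_1nm), 2), "pKi")
print(normalize_reward(-9.0))
```

Placing docking-derived energies and experimental potencies on one scale, then clipping to a bounded range, keeps any single reward term from dominating the policy update.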

Problem 3: Limited Generalization Across Protein Targets

Symptoms: Model performs well on training targets but fails to generate quality compounds for novel targets.

Solutions:

- Incorporate Transfer Learning: Leverage knowledge from source tasks with abundant data to improve performance on target tasks with limited data [30].

- Utilize Protein Embeddings: Implement AlphaFold-derived protein representations to capture structural relationships and enable better generalization [31].

- Data Augmentation: Expand training diversity through validated augmentation techniques, particularly important for targets with sparse ligand bioactivity data [31].

Experimental Protocols & Methodologies

Protocol 1: Activity Cliff Identification and Quantification

Purpose: Systematically identify activity cliff compounds in molecular datasets.

Procedure:

- Calculate Molecular Similarity:

Quantify Activity Differences:

- Convert bioactivity measurements (Kᵢ, Kd, IC₅₀) to pChEMBL values (pChEMBL = -log₁₀(activity), with the activity expressed in molar units) [31].

- Calculate absolute activity differences for all compound pairs.
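The two steps above can be sketched as follows. The unit table and function names are assumptions for illustration. The key point is that the measurement must be converted to molar concentration before taking the negative logarithm.

```python
import math
from itertools import combinations

# Conversion factors to mol/L for common concentration units.
UNIT_TO_MOLAR = {"M": 1.0, "mM": 1e-3, "uM": 1e-6, "nM": 1e-9, "pM": 1e-12}


def to_p_value(value, unit="nM"):
    """Negative log10 of an activity measurement after conversion to molar units."""
    return -math.log10(value * UNIT_TO_MOLAR[unit])


def pairwise_differences(p_values):
    """Absolute activity difference (log units) for every unordered compound pair."""
    return {
        (id_a, id_b): abs(p_values[id_a] - p_values[id_b])
        for id_a, id_b in combinations(sorted(p_values), 2)
    }


# Toy measurements (assumed values): (value, unit) per compound.
measurements = {"cpd_1": (10.0, "nM"), "cpd_2": (1.0, "uM"), "cpd_3": (50.0, "nM")}
p_values = {cid: to_p_value(value, unit) for cid, (value, unit) in measurements.items()}

for pair, delta in pairwise_differences(p_values).items():
    print(pair, round(delta, 2))
```

A difference of 2.0 on this scale corresponds to a 100-fold change in potency, which matches the cliff threshold used elsewhere in this guide.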

Apply Activity Cliff Index:

Table: Molecular Representation Methods for Activity Cliff Detection

| Representation Type | Key Features | AC Detection Performance |
|---|---|---|
| Extended-Connectivity Fingerprints (ECFPs) | Circular atom-environment topology, hashed substructure features | Consistent best performer for general QSAR [15] |
| Graph Isomorphism Networks (GINs) | Adaptive, learns from graph structure | Competitive or superior for AC classification [15] |
| Physicochemical-Descriptor Vectors (PDVs) | Traditional QSAR descriptors, interpretable | Moderate performance [15] |

Protocol 2: ACARL Model Implementation

Purpose: Implement the complete ACARL framework for de novo molecular design.

Procedure:

- Base Model Architecture:

Contrastive Loss Integration:

- Implement the tailored contrastive loss function that amplifies activity cliff compounds.

- Balance weighting between standard RL objectives and contrastive components.
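The weighting between the two objectives can be sketched as below. This does not reproduce the published ACARL loss, whose exact form is not given here. The cliff-weighted average is a simplified stand-in for the contrastive component, and `cliff_weight`, `warmup_steps`, and `max_weight` are hypothetical parameters. The linear warm-up reflects the staged-training idea of starting from the standard RL objective and introducing the contrastive term gradually.

```python
def cliff_weighted_loss(per_sample_losses, is_cliff, cliff_weight=3.0):
    """Weighted mean loss in which activity-cliff samples count cliff_weight times."""
    weights = [cliff_weight if flag else 1.0 for flag in is_cliff]
    weighted_sum = sum(w * loss for w, loss in zip(weights, per_sample_losses))
    return weighted_sum / sum(weights)


def contrastive_weight(step, warmup_steps=1000, max_weight=0.5):
    """Linear ramp from 0 to max_weight over warmup_steps, then constant."""
    return max_weight * min(1.0, step / warmup_steps)


def total_loss(rl_loss, contrastive_loss, step, warmup_steps=1000, max_weight=0.5):
    """Standard RL objective plus the scheduled contrastive component."""
    return rl_loss + contrastive_weight(step, warmup_steps, max_weight) * contrastive_loss


# Toy batch (assumed values): the second sample is an activity-cliff compound.
batch_losses = [1.0, 2.0]
batch_is_cliff = [False, True]
contrastive = cliff_weighted_loss(batch_losses, batch_is_cliff)

for step in (0, 500, 1000):
    print(step, total_loss(rl_loss=1.0, contrastive_loss=contrastive, step=step))
```

Starting the contrastive weight at zero lets the policy stabilize on the standard objective first, which is one way to limit the training instability discussed in the troubleshooting section.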

Training Pipeline:

- Phase 1: Pretrain on large molecular datasets (e.g., ChEMBL, ZINC) to establish chemical validity [32].

- Phase 2: Implement RL fine-tuning with integrated contrastive loss.

- Phase 3: Validate using docking simulations against target proteins.

ACARL Framework Experimental Workflow

Protocol 3: Model Validation and SAR Analysis

Purpose: Validate generated compounds and analyze structure-activity relationships.

Procedure:

- Docking Simulations:

SAR Landscape Visualization:

Domain of Applicability Assessment:

The Scientist's Toolkit: Research Reagent Solutions

Table: Essential Resources for ACARL Implementation

| Resource Category | Specific Tools/Databases | Key Functionality |
|---|---|---|
| Bioactivity Data | ChEMBL [10], Papyrus [31] | Source of standardized bioactivity data with quality assessments |
| Molecular Representations | ECFPs [15], Graph Isomorphism Networks [15] | Encode molecular structure for similarity calculations and model input |
| Protein Structure Data | AlphaFold Protein Embeddings [31] | Target-aware conditioning for generalized molecular generation |
| Validation Tools | Molecular Docking Software [10], GuacaMol Benchmark [10] | Assess binding affinity and benchmark generation performance |
| Scaffold Libraries | ZINC [32], Enamine Real [31] | Source of synthesizable building blocks for de novo design |

ACARL Component Interaction Logic